Ad hoc testing injects human intuition into a delivery pipeline that often relies on automation, scripted test cases, and machine-generated metrics.

It is the moment when an expert walks up to a build, pokes at it like a curious customer, and refuses to stop until something breaks. In a world that celebrates precision and repeatability, the unscripted nature of ad hoc testing may sound reckless. In practice, it is a disciplined software testing tactic that exposes hidden risk and provides rapid feedback to senior decision makers.

This guide explains how to run ad hoc testing inside a modern software development lifecycle, how to measure its impact, and how to convince skeptical stakeholders that a two-hour freeform session can save a seven-figure launch.

Why Structured Organisations Still Need Unstructured Tests

Formal verification is bound by written requirements. If the requirement is incomplete, the automated suite will tell a comforting lie. A seasoned testing team that ignores the manual and follows instinct quickly finds the gap. That discovery prevents production incidents, reputational damage, and emergency patch cycles.

Engineering leaders, therefore, keep ad hoc testing on the roster for three strategic reasons:

- Risk discovery at speed. A single hour of exploratory testing can cover dozens of edge cases that would require days of script writing and countless test cases.

- Requirement validation. Unstructured testing by experienced testers confirms whether the specification matches real-world behaviour.

- Cost avoidance. A defect fixed before release can cost roughly one-tenth as much as the same defect reported through a customer service desk.

Key Principles That Separate Ad Hoc Testing From Random Clicking

Though it typically doesn’t include elaborate test planning, ad hoc does not mean undisciplined testing. Professional ad hoc testing prioritizes intuition, user empathy, real-time evidence capture, and tight developer feedback loops. Effective sessions follow four non-negotiable rules.

- Intuition before documentation. The tester relies on experience to sense brittle logic or missing validation.

- User-centric thinking. Every action is framed as something a real user might do when bored, confused, or frustrated.

- Immediate evidence capture. Screen recordings, console logs, and input payloads are saved as soon as an anomaly appears.

- Rapid feedback loops. Insights are delivered to developers while the affected code is still in active memory.

When teams follow these rules, ad hoc testing becomes more than exploratory. It becomes a disciplined, high-signal practice that drives faster fixes and sharper product insight.

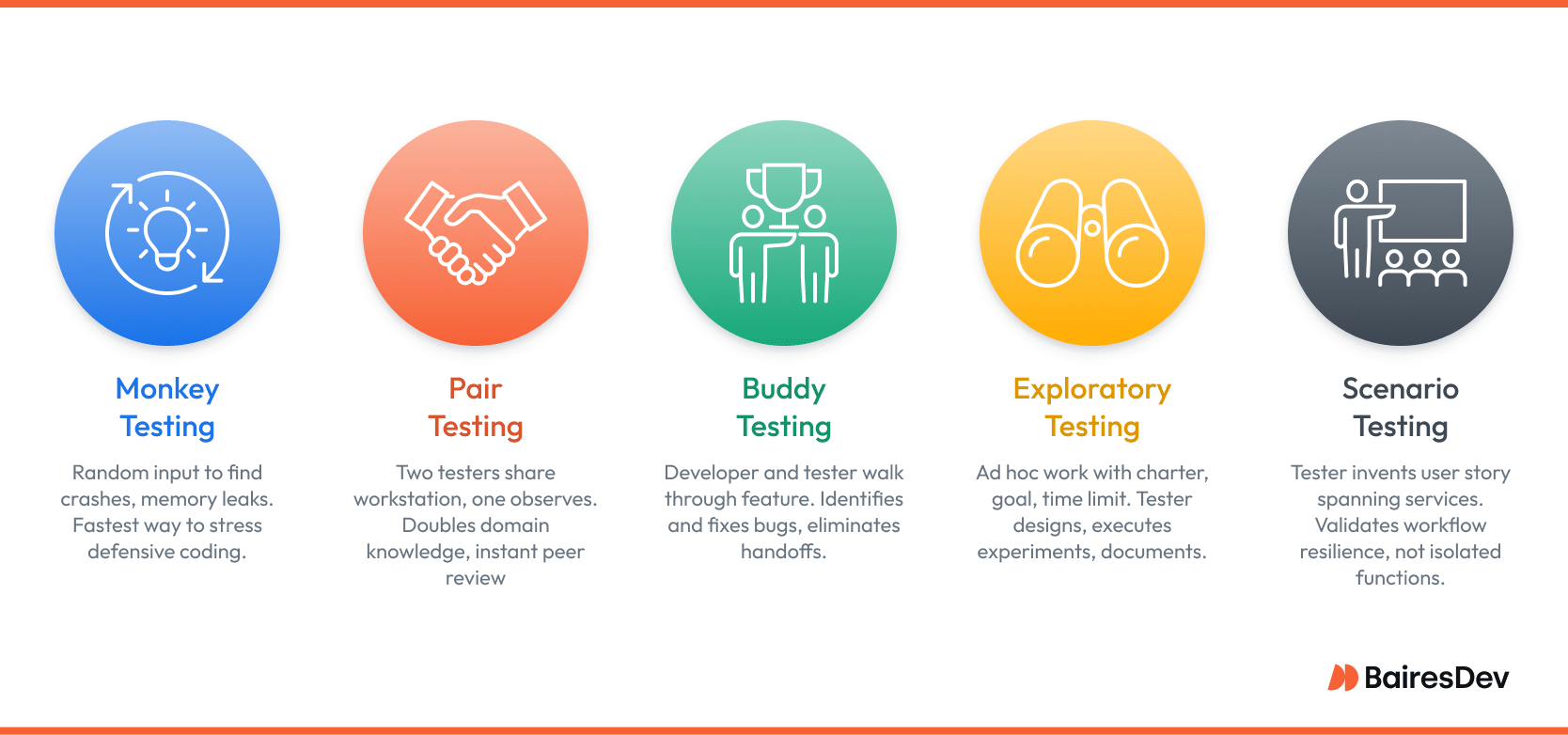

Core Types of Ad Hoc Testing

Ad hoc testing includes several distinct formats, each suited to different team setups and risk profiles. Monkey testing, for example, exposes edge-case failures through randomized input, while pair testing relies on collaborative exploration. Engineering leaders can selectively apply these methods to increase coverage without introducing unnecessary process overhead.

Monkey Testing

A tester provides random input, rapid navigation, and repeated state changes to see whether the application crashes, leaks memory, or corrupts data. Although the activity looks chaotic compared to structured testing, it is the fastest way to stress defensive coding paths that rarely receive scripted coverage.
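The random-input part of monkey testing can also be approximated in code. The sketch below is illustrative only: `parse_quantity` is a hypothetical function under test, and treating `ValueError` as a "clean rejection" is an assumption about how the application signals invalid input.

```python
import random
import string


def parse_quantity(text: str) -> int:
    """Hypothetical function under test: parse a cart quantity from user input."""
    value = int(text.strip())
    if value < 1 or value > 999:
        raise ValueError("quantity out of range")
    return value


def random_input(rng: random.Random, max_len: int = 12) -> str:
    """Build a random string from digits, letters, punctuation, and whitespace."""
    alphabet = string.digits + string.ascii_letters + string.punctuation + " \t\n"
    length = rng.randint(0, max_len)
    return "".join(rng.choice(alphabet) for _ in range(length))


def monkey_test(target, runs: int = 1000, seed: int = 0):
    """Feed random inputs to `target` and collect unexpected failures.

    ValueError is treated as a controlled rejection (an assumption);
    any other exception is recorded with the input that triggered it.
    """
    rng = random.Random(seed)  # fixed seed so a failing run can be replayed
    failures = []
    for _ in range(runs):
        payload = random_input(rng)
        try:
            target(payload)
        except ValueError:
            continue  # input rejected cleanly
        except Exception as exc:  # anything else counts as a finding
            failures.append((payload, repr(exc)))
    return failures
```

Calling `monkey_test(parse_quantity)` returns a list of `(input, exception)` pairs. The fixed seed is a deliberate choice: it keeps the chaos reproducible, so a human tester can replay the exact input that caused a failure.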

Pair Testing

Two testers share one workstation. One manipulates the interface while the other observes, challenges, and suggests alternative paths. The live discussion doubles domain knowledge and delivers instant peer review of every discovery.

Buddy Testing

A developer and a skilled tester walk through a new feature side by side. The developer supplies technical context about intended behaviour. The tester counters with user-driven what‑if scenarios. Many bugs are identified and fixed during the session, eliminating cross-team handoffs.

Exploratory Testing

Exploratory sessions resemble ad hoc work but include a brief written charter that states a goal and a time limit. The tester designs and executes micro experiments in real time while documenting observations. The structure satisfies auditors without crushing creativity.

Scenario Testing

The tester invents a believable user story that spans multiple services, devices, or roles. For example, an HR administrator imports five hundred employee records, corrects three errors, and then attempts a second import. Scenario testing efforts validate workflow resilience rather than isolated functions.

Each of these approaches offers a tactical way to extend coverage beyond scripted tests. By matching the method to the context, teams can extract meaningful insights without sacrificing speed or flexibility. The key is intentional execution, not just improvisation!

Placing Ad Hoc Testing Inside a Continuous Delivery Pipeline

Formal testing methods already include static analysis, unit tests, integration suites, and canary deployments. Adding unscripted exploration is straightforward when treated as an overlay instead of a new phase.

- Trigger event: Choose a repeatable event such as promotion of a release candidate to staging.

- Time‑boxed window: Allocate one or two hours per high-risk feature. The limit forces focus and protects the schedule.

- Expert ownership: Assign senior testers who know the domain. Their intuition is the engine of discovery.

- Evidence in real time: Record video, take annotated screenshots, attach payloads to issues, and tag logs with session identifiers.

- Feedback into automation: Convert confirmed defects into formal regression cases so the suite grows with each cycle.

By embedding ad hoc testing into existing pipeline events, not as a separate stage but as a focused overlay, teams gain rapid insight without disrupting flow. The result is tighter feedback loops, faster detection of high-risk defects, and a regression suite that improves with every iteration.
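Tagging logs with a session identifier can be done with very little code. The following is a minimal sketch using Python's standard `logging` module. The logger name `adhoc`, the eight-character identifier, and the field names `session_id` and `tester` are illustrative assumptions, not a prescribed format.

```python
import logging
import uuid


def make_session_logger(tester: str, charter: str) -> logging.LoggerAdapter:
    """Return a logger that stamps every record with one session identifier."""
    session_id = uuid.uuid4().hex[:8]  # short random ID; the length is arbitrary
    logger = logging.getLogger("adhoc")
    if not logger.handlers:  # avoid adding duplicate handlers on repeat calls
        handler = logging.StreamHandler()
        handler.setFormatter(logging.Formatter(
            "%(asctime)s session=%(session_id)s tester=%(tester)s %(message)s"
        ))
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
    # The adapter injects the extra fields into every record it emits.
    adapter = logging.LoggerAdapter(
        logger, {"session_id": session_id, "tester": tester}
    )
    adapter.info("session started: %s", charter)
    return adapter
```

A tester would call `log = make_session_logger("alice", "payment flow abuse")` at the start of the window and then `log.info(...)` for each observation, so every line can later be filtered by `session=`.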

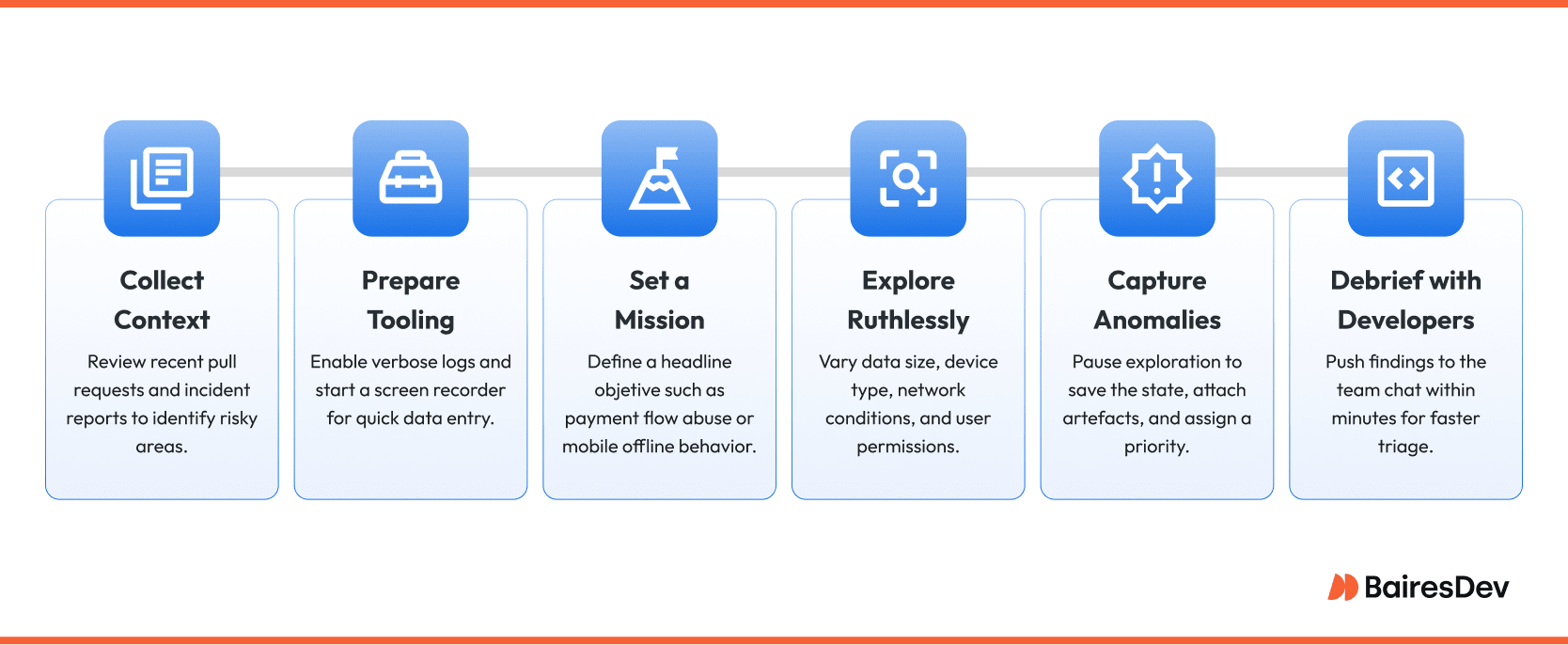

Executing a High Value Session: Step by Step

Ad hoc testing only delivers value when sessions are intentional and well-executed.

The following workflow outlines how testers can maximize signal in a short window by combining technical prep, focused objectives, and disciplined follow-through:

- Collect context. Review recent pull requests, architecture diagrams, and incident reports to identify risky areas.

- Prepare tooling. Enable verbose logs, start a screen recorder, and open an issue tracker template for quick data entry.

- Set a mission. Even a true ad hoc session benefits from a headline objective such as payment flow abuse or mobile offline behaviour.

- Explore ruthlessly. Try different testing strategies. Vary data size, device type, network conditions, and user permissions. Switch language locale mid-flow. Interrupt API calls.

- Capture anomalies immediately. Pause exploration long enough to save the state, attach artefacts, and assign a priority.

- Debrief with developers. Push findings to the team chat within minutes. Fresh code context means faster triage.

High-impact sessions don’t happen by accident. When experienced and skilled testers enter with context, capture findings in real time, and close the loop with the development team, ad hoc testing efforts start to become a reliable input, not just an exploratory detour.
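Varying data size, device type, network conditions, and permissions quickly produces more combinations than a short session can cover. One way to pick a varied subset is to sample from the full cross product, as in the sketch below. The dimension names and values are hypothetical placeholders.

```python
import itertools
import random

# Hypothetical dimensions; replace with values that matter for the product.
DIMENSIONS = {
    "data_size": ["empty", "typical", "huge"],
    "device": ["desktop", "phone", "tablet"],
    "network": ["fast", "slow", "offline"],
    "role": ["guest", "member", "admin"],
}


def sample_variations(dimensions, count, seed=0):
    """Pick up to `count` distinct combinations from the full cross product."""
    names = list(dimensions)
    combos = list(itertools.product(*(dimensions[n] for n in names)))
    rng = random.Random(seed)  # seeded so the same plan can be regenerated
    chosen = rng.sample(combos, min(count, len(combos)))
    return [dict(zip(names, combo)) for combo in chosen]
```

`sample_variations(DIMENSIONS, 10)` returns ten dictionaries such as a `device`/`network`/`role`/`data_size` combination to try next. The list is a prompt for the tester's judgment, not a script to follow line by line.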

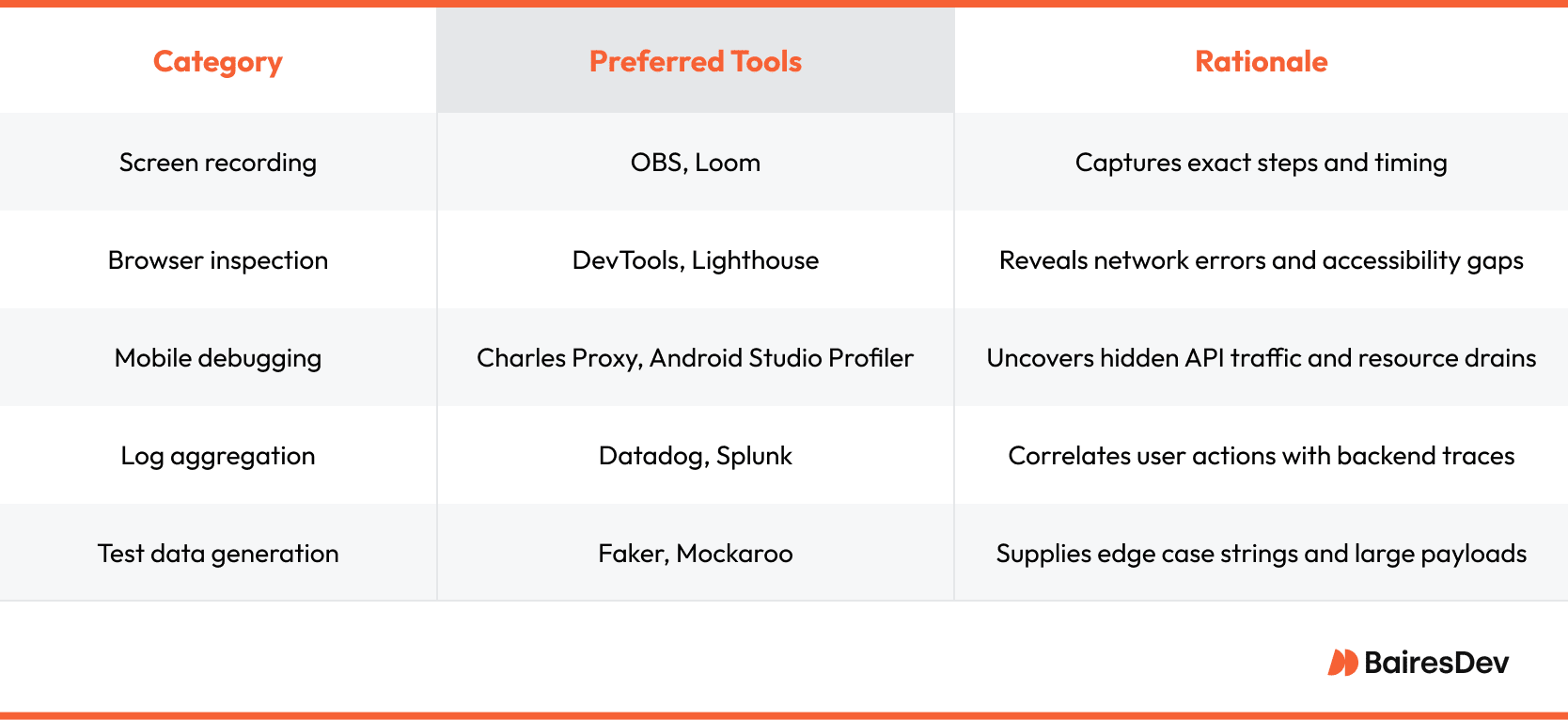

Tooling That Boosts Discovery Rate

The effectiveness of ad hoc testing hinges on visibility. With the right tools, testers can identify subtle failures, trace root causes, and deliver actionable evidence. The tools below consistently raise the discovery rate in high-value exploratory testing.

When built into the team’s workflow, this tooling stack turns gut instinct into concrete signals that developers can act on immediately.

Benefits Summarised for Technical Leadership

For engineering leaders, the case for ad hoc testing isn’t just technical; it’s strategic. When integrated thoughtfully, ad hoc testing efforts deliver compounding returns across engineering efficiency, team dynamics, and product quality. Here’s how the benefits play out in practice:

- Early warning. Exploratory testing identifies concurrency flaws that load tests miss.

- Better return on testing spend. One skilled session covers risk that would otherwise demand hundreds of pre-written test scripts.

- Higher tester engagement. Varied work keeps senior engineers invested, reducing turnover.

- Customer empathy. Freeform interaction surfaces usability friction that numeric metrics ignore.

- Improved audit trail. When paired with quick recording, each defect includes a history of exactly how the system failed.

These benefits aren’t hypothetical. They show up in faster root cause analysis, lower defect leakage, and tighter alignment between engineering and end users. For leaders under pressure to do more with less, ad hoc testing offers a rare combination of agility, depth, and operational leverage. Provided it’s done right, naturally.

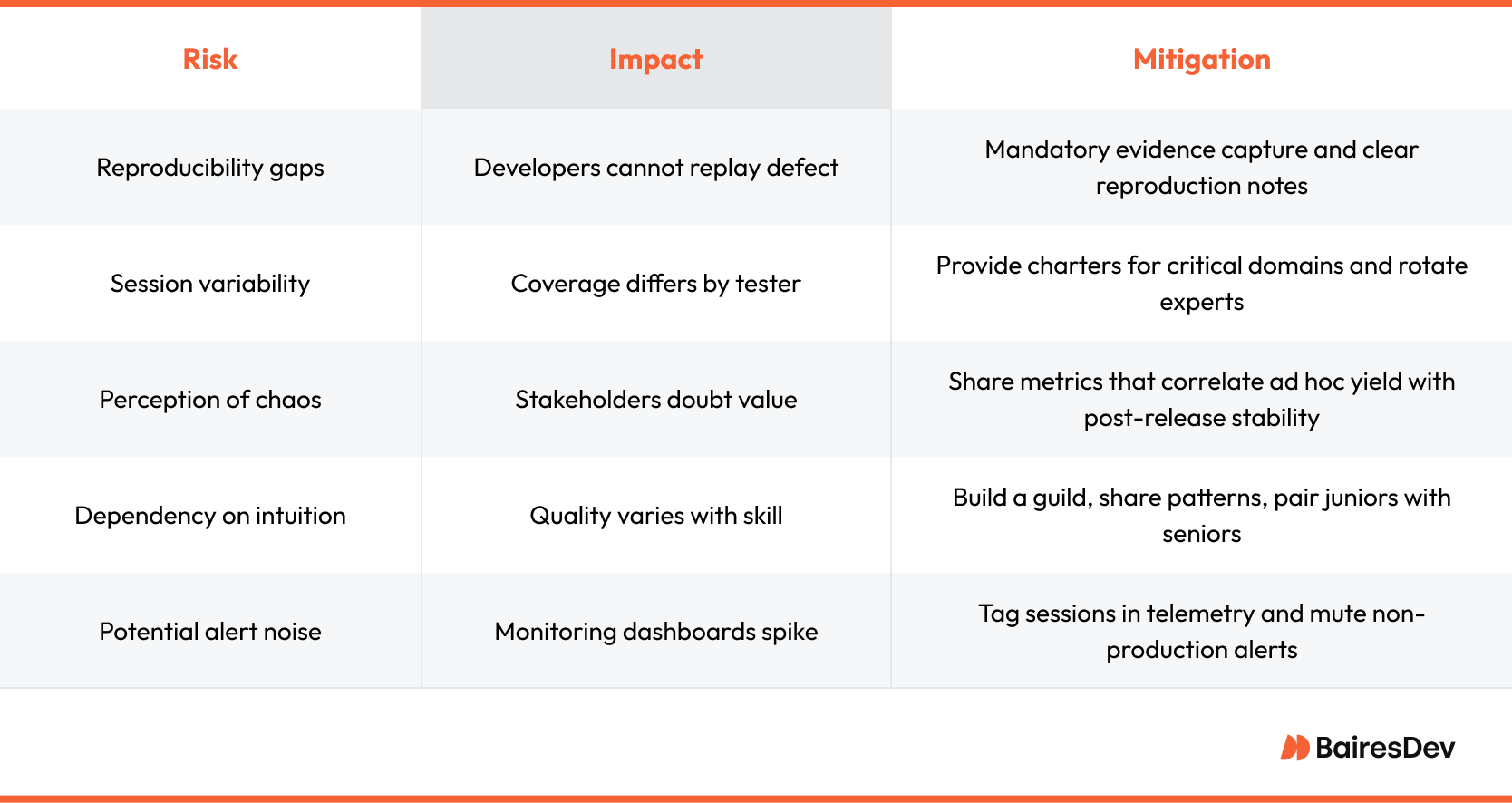

Limitations and Mitigations

While ad hoc testing brings agility and insight, it also introduces specific risks that can undermine its value if left unmanaged. The table below outlines common limitations, their operational impact, and pragmatic steps to contain them.

With the right scaffolding, these risks don’t have to be deal-breakers. Instead, they become manageable constraints, ones that allow ad hoc testing to deliver value consistently without compromising reliability, visibility, or stakeholder trust.

Real-World Scenario: Preventing a Holiday Checkout Disaster

Imagine a high-traffic marketplace planning a flash sale for midnight Eastern Time on Cyber Monday. Formal suites passed. Performance tests cleared ten thousand concurrent users. Two senior testers scheduled a 90-minute ad hoc testing session. They created thousands of small carts, switched currencies, toggled shipping addresses between domestic and international, and interrupted network traffic with a proxy.

At the 53-minute mark, the checkout service produced duplicate payment events. A third-party fraud engine flagged the pattern and would have blocked the merchant within minutes of launch. Engineers traced the defect to a bug triggered only when currency conversion and partial shipping were combined.

Because it was caught and patched before launch, the business avoided a potential six-figure loss in chargebacks and reputational damage.

Implementation Checklist for Engineering Managers

To operationalize ad hoc testing without compromising quality or predictability, engineering managers can follow this lightweight but effective implementation checklist:

- Define a repeatable trigger for every release candidate.

- Maintain a roster of domain experts willing to rotate.

- Allocate one or two hours depending on feature risk.

- Require evidence capture as a first-class artifact.

- Convert every confirmed defect into an automated regression script.

- Track yield per session and present savings to finance.

By standardizing these actions, teams can turn an inherently informal testing process into a repeatable source of insight, defect prevention, and cost justification.
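Tracking yield per session needs only a simple record of hours spent and defects confirmed. The sketch below assumes one possible definition of yield, confirmed defects per tester hour grouped by feature. The `Session` fields are illustrative, and translating yield into savings is left to the team's own cost model.

```python
from dataclasses import dataclass


@dataclass
class Session:
    feature: str
    tester_hours: float
    confirmed_defects: int


def yield_per_hour(sessions):
    """Confirmed defects per tester hour, grouped by feature."""
    hours, defects = {}, {}
    for s in sessions:
        hours[s.feature] = hours.get(s.feature, 0.0) + s.tester_hours
        defects[s.feature] = defects.get(s.feature, 0) + s.confirmed_defects
    # Features with zero recorded hours are skipped to avoid dividing by zero.
    return {f: defects[f] / hours[f] for f in hours if hours[f] > 0}
```

For example, two checkout sessions totalling three hours with three confirmed defects give a yield of 1.0 defect per hour for that feature.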

Integrating Lessons Learned Back into the Software Development Lifecycle

Ad hoc testing activities are only the start of a positive feedback loop. Each discovery should trigger a lightweight improvement action.

- Convert the defect into a regression script added to the automated suite so that the bug never reappears.

- Refine product requirements where the user journey was unclear or incomplete.

- Educate developers through root cause workshops that dissect why the scripted path overlooked the flaw.

- Strengthen monitoring by adding specific alerts that would catch similar issues in production.

- Adjust risk models and future testing charters based on new knowledge of weak spots.

With this loop in place, unscripted exploration continuously raises the baseline of quality and resilience.
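Converting a confirmed defect into a regression script can be as small as one test that pins the corrected behaviour. The sketch below uses Python's `unittest`. `PaymentLedger` is a hypothetical stand-in for a real service, and the duplicate-on-retry defect is an invented example, not a description of any particular system.

```python
import unittest


class PaymentLedger:
    """Hypothetical stand-in for a service that records payment events."""

    def __init__(self):
        self._events = {}

    def record(self, order_id: str, amount_cents: int, currency: str) -> bool:
        """Record a payment once per order; return False for a repeat."""
        if order_id in self._events:
            return False
        self._events[order_id] = (amount_cents, currency)
        return True

    def count(self) -> int:
        return len(self._events)


class DuplicatePaymentRegression(unittest.TestCase):
    """Pins the behaviour confirmed after an ad hoc finding."""

    def test_retry_does_not_create_second_event(self):
        ledger = PaymentLedger()
        self.assertTrue(ledger.record("order-1", 1999, "EUR"))
        # Simulates the client retrying after an interrupted request.
        self.assertFalse(ledger.record("order-1", 1999, "EUR"))
        self.assertEqual(ledger.count(), 1)
```

Naming the test after the finding keeps the link between the exploratory session and the automated suite visible to anyone reading a later failure.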

Unscripted, Not Unreliable: The Case for Modern Ad Hoc Testing

Ad hoc testing is not a relic of the past; it is a proven tactic that complements automation, strengthens formal test execution, and protects brand reputation.

By injecting structured intuition into fast-moving delivery cycles, engineering leaders gain a flexible instrument for identifying defects that scripted methods and formal testing processes cannot see. The practice is inexpensive, easy to schedule, and immediately measurable. Used wisely and documented carefully, it turns a chaotic reputation into a disciplined source of competitive advantage.

Senior stakeholders who decide to use ad hoc testing and adopt the principles outlined in this guide can expect fewer emergencies, smoother launches, and a measurable drop in post-release incident cost. In an era where software defines the customer relationship, that outcome is worth every minute of exploratory work.

Frequently Asked Questions

Does ad hoc testing conflict with compliance frameworks such as ISO 27001?

No. Compliance requires traceability, not rigid scripts. A screen recording paired with a signed defect report can provide the evidence a control asks for.

How much time should a product team budget per sprint?

Teams on a two-week cadence typically allocate four to six tester hours to ad hoc testing focused on high-risk domains.

Can automated chaos tools replace monkey testing?

Synthetic chaos covers infrastructure-level failure. A human still uncovers nuanced workflow surprises that synthetic events overlook.

Is ad hoc testing the same as negative testing?

No. Negative testing checks known invalid input. Ad hoc testing seeks unknown behaviours, which sometimes include negative cases but also combine flows in unpredictable ways.

What metric proves value to executives?

Track the number of production incidents tied to areas that received ad hoc test coverage versus those that did not. A downward trend supports the investment.
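The comparison can be computed from an incident log tagged by product area. The sketch below assumes one possible normalisation, average incidents per area in each group, so that a larger uncovered group does not skew the result. The area names in the usage note are hypothetical.

```python
from collections import Counter


def incidents_per_area(incident_areas, all_areas, covered_areas):
    """Average incident count per area, split by ad hoc coverage."""
    counts = Counter(incident_areas)  # areas with no incidents count as 0
    covered = [a for a in all_areas if a in covered_areas]
    uncovered = [a for a in all_areas if a not in covered_areas]

    def mean(areas):
        return sum(counts[a] for a in areas) / len(areas) if areas else 0.0

    return {"covered": mean(covered), "uncovered": mean(uncovered)}
```

With incidents logged against `search` three times and `checkout` and `profile` once each, four areas in total, and ad hoc coverage on `checkout` and `admin`, the function reports 0.5 incidents per covered area and 2.0 per uncovered area. Recomputing this per release shows whether the gap holds over time.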