Software has never moved faster. Generative coding copilots push fresh commits into your repository every hour, while product managers expect those features in production by the afternoon. Granted, this is an exaggeration, but the result of AI productivity gains is a widening gap between the rate at which code is written and the speed at which it can be validated. In this climate, manual testing is a luxury few teams can afford, and even automated testing is becoming bottlenecked.

Selenium remains the most widely adopted solution for browser-level automation, and the acceleration brought on by AI in 2024 and 2025 has only strengthened its relevance. Every new pull request represents a potential regression that can erode user trust or destabilize revenue-critical workflows. Automated tests catch those failures before your customers do, and Selenium continues to dominate that layer of the stack because it speaks the language of the browser itself.

This article assumes you already know the pain of unstable releases. It will not walk through a demo or compare syntax quirks. Instead, it explains why Selenium still anchors many enterprise QA strategies, where it excels, and where it falls short. The goal is to help you decide how much of your future quality assurance investment belongs in Selenium, how that effort integrates with CI pipelines, and when partnering with a specialized QA team delivers better economics.

What Is Selenium? Framework Overview for Modern QA Teams

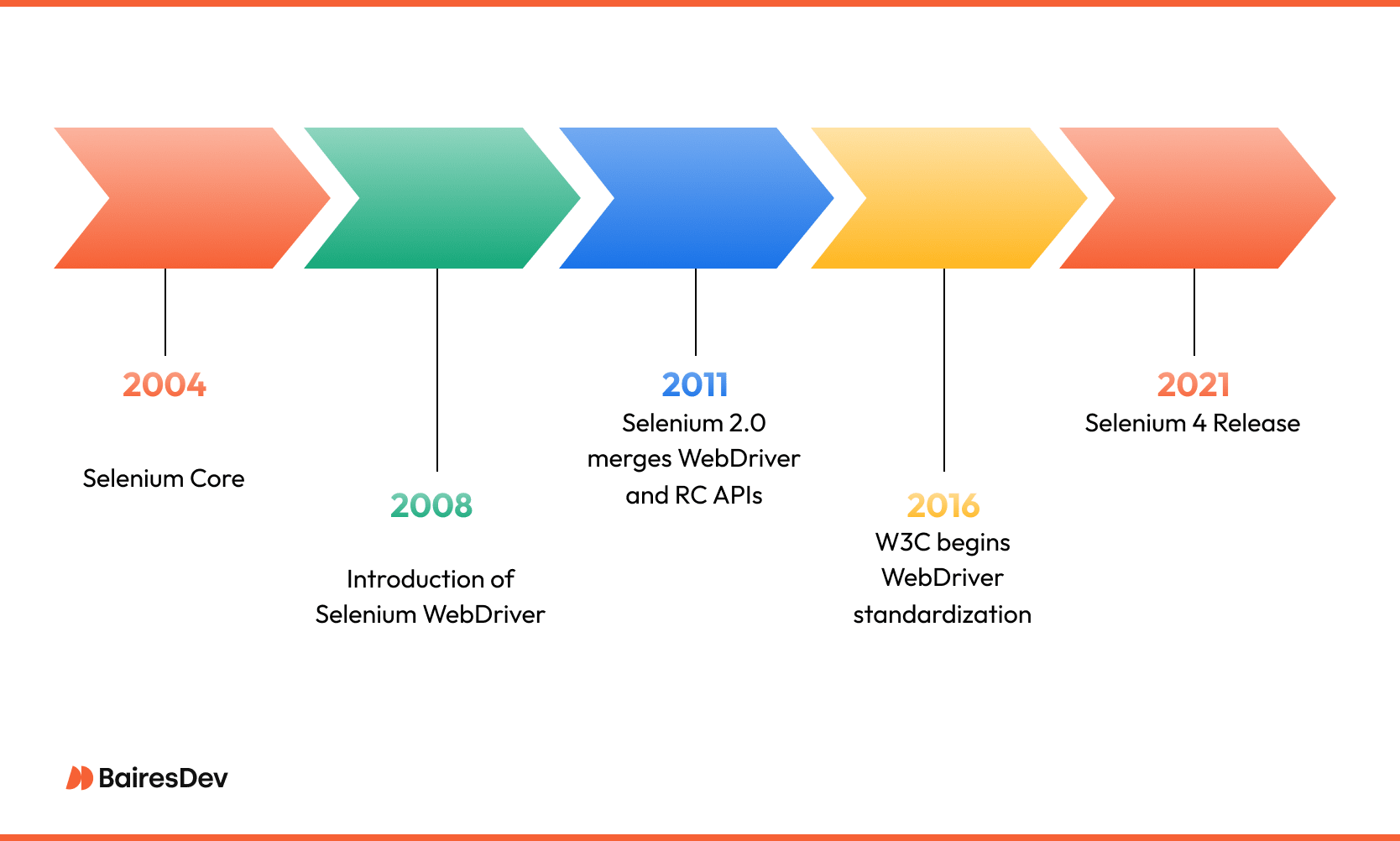

Selenium is an open-source suite that lets engineers drive real browsers with code. It began as a JavaScript test runner in 2004 and matured into today’s three-part toolkit:

- Selenium WebDriver exposes a language-agnostic API that maps high-level commands to browser-specific drivers such as ChromeDriver or GeckoDriver.

- Selenium Grid distributes those commands across many machines, enabling parallel execution that slashes wall-clock time for large test suites.

- Selenium IDE records user interactions inside Chrome or Firefox and converts them into runnable scripts, helpful for quick proofs of concept even if most production teams outgrow it.

Together, these pieces form a thin control layer rather than a complete turnkey product. They do not provide assertion libraries, result dashboards, or flaky-test triage. That design is a feature, not a defect. It lets teams assemble a stack that matches their language preferences, reporting needs, and infrastructure constraints. WebDriver can slot into a Java or Python codebase with equal ease, and Grid can run on bare metal, inside Docker, or on a managed cloud farm.

Unmatched Cross-Browser Support

Because Selenium operates at the browser boundary, it remains the de facto standard for cross-browser validation. No other framework matches its driver coverage across Chrome, Firefox, Safari, Edge, and the vendor-branded variants shipping on mobile devices.

Competing tools like Cypress and Playwright offer friendlier APIs for modern JavaScript apps, but they drive a narrower set of browsers through their own automation mechanisms rather than through WebDriver drivers. When a test must cover legacy browsers or satisfy enterprise security constraints, teams commonly keep Selenium alongside them.

Selenium in the Enterprise: Where It Fits and Where It Doesn’t

Enterprise teams adopt Selenium for three primary reasons.

First, it delivers complete browser fidelity. Tests interact with the same rendering engine users see, which surfaces layout shifts, cookie policies, and CSP violations that headless unit tests miss.

Second, Selenium is language-neutral. A company can standardize on Java for its back-end services, Python for data science, and JavaScript for front-end code, then use the same WebDriver contracts everywhere.

Third, it scales horizontally. By sharding tests across a Selenium Grid you can reduce a ten-hour regression suite to minutes, which keeps nightly pipelines under control as your codebase grows.
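The sharding idea can be sketched in a few lines of Python. This is a minimal illustration and not part of Selenium itself: the function name `shard_tests` and the test file names are hypothetical, and it assumes tests are split evenly by count, whereas real pipelines often balance shards by historical duration.

```python
from typing import List, Sequence


def shard_tests(test_ids: Sequence[str], shard_count: int) -> List[List[str]]:
    """Split test IDs into shard_count groups using round-robin assignment.

    Sorting first makes the split deterministic, so every CI worker that
    runs this function computes the same assignment.
    """
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    shards: List[List[str]] = [[] for _ in range(shard_count)]
    for index, test_id in enumerate(sorted(test_ids)):
        shards[index % shard_count].append(test_id)
    return shards


# Hypothetical test files; each Grid-backed worker runs only its own shard.
tests = [
    "test_cart.py",
    "test_login.py",
    "test_search.py",
    "test_checkout.py",
    "test_profile.py",
]
shards = shard_tests(tests, 2)
```

Each worker would pick its list by index (for example, from a CI-provided worker number) and point its browser sessions at the Grid.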

That flexibility brings trade-offs. Selenium exposes the raw DOM, so poorly chosen selectors will break each time a front-end engineer renames a CSS class. Dynamic single-page applications can trigger race conditions that create flaky tests, where the same Selenium test script passes or fails depending on timing. Visual regressions are another blind spot. WebDriver confirms that elements exist, not that they render correctly. Teams often bolt on tools such as Percy or Applitools to capture screenshots and detect pixel shifts.

The decision to lean into Selenium therefore hinges on context. If your product spans multiple browsers or embeds in customer-controlled environments, Selenium is difficult to replace. If your front-end is a React SPA delivered only on Chromium, you might pair lighter tools like Playwright for component tests with a slim Selenium layer for smoke tests. Either way, no framework is turnkey: each needs architecture, maintenance, and constant refactoring to keep pace with AI-accelerated feature churn.

Quality-focused service partners close that gap by supplying hardened selector strategies, self-healing locators, and infrastructure-as-code templates that spin up disposable grids in minutes. They also own the reporting stack, so your engineering leadership sees trend lines on test flake and execution time instead of combing through raw JSON test output over the weekend. In practice, many enterprises blend in-house coverage with external QA accelerators to protect velocity without hiring an army of specialists.

Building a Maintainable Selenium Test Suite

The first scripts your team creates often pass on day one and then crumble under the pressure of a fast-moving front-end. Longevity comes from architecture. To build a maintainable Selenium suite at scale, enterprise teams should focus on:

- Clean abstraction layers (e.g., Page Object Model) that isolate UI changes,

- Stable locator strategies using automation-reserved attributes,

- Explicit waits and race-condition mitigation for dynamic content,

- Test data generation that supports parallelism and repeatability,

- Integrated reporting that captures artifacts for debugging and trend analysis.

Everything starts with a clean abstraction layer. Most enterprise teams use a Page Object Model, mapping each view to a class that exposes business actions rather than raw locators. When a designer renames a CSS class, you update one mapping file instead of three hundred tests.
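A minimal Page Object sketch in Python might look like the following. The `LoginPage` class, its `data-test` attribute values, and the `log_in` method are hypothetical names chosen for illustration. The locator strategy is written as the plain string `"css selector"`, which is the value of Selenium's `By.CSS_SELECTOR` constant, so the class works with any object that offers WebDriver's `find_element(by, value)` method.

```python
from typing import Any, Tuple

Locator = Tuple[str, str]


class LoginPage:
    """Page object for a hypothetical login view."""

    # "css selector" is the string value of Selenium's By.CSS_SELECTOR.
    # The data-test values below are assumptions, not real application IDs.
    USERNAME: Locator = ("css selector", "[data-test='login-username']")
    PASSWORD: Locator = ("css selector", "[data-test='login-password']")
    SUBMIT: Locator = ("css selector", "[data-test='login-submit']")

    def __init__(self, driver: Any) -> None:
        # driver is expected to be a Selenium WebDriver instance.
        self._driver = driver

    def log_in(self, username: str, password: str) -> None:
        """Business action: tests call this instead of touching locators."""
        self._driver.find_element(*self.USERNAME).send_keys(username)
        self._driver.find_element(*self.PASSWORD).send_keys(password)
        self._driver.find_element(*self.SUBMIT).click()
```

A test would call `LoginPage(driver).log_in("ada", "s3cret")`. If the markup changes, only the three locator constants need editing.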

Under that abstraction lives a disciplined selector strategy. Rely on stable identifiers that product teams agree never to change—data-test attributes or generated IDs reserved for automation. XPath expressions that traverse half the DOM save time in the short run but create flake the moment a container div is repositioned. The same principle applies to waits. Explicit waits keyed to a single condition, such as “element is clickable,” outperform blanket sleep calls. They keep suites deterministic while still tolerating network jitter and client-side rendering delays.
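To show why an explicit wait beats a fixed sleep, here is a simplified stand-in for the polling loop behind it. The helper name `wait_until` is hypothetical. In a real suite you would normally use Selenium's own `WebDriverWait` with an expected condition, as noted in the comment.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def wait_until(
    condition: Callable[[], Optional[T]],
    timeout: float = 10.0,
    poll: float = 0.5,
) -> T:
    """Poll condition until it returns a truthy value or timeout elapses.

    Unlike time.sleep(timeout), this returns as soon as the condition holds,
    so fast pages are not penalized and slow pages still get the full budget.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll)


# With Selenium installed, the built-in equivalent is:
#   WebDriverWait(driver, 10).until(
#       EC.element_to_be_clickable((By.CSS_SELECTOR, "[data-test='submit']"))
#   )
# where the data-test value is a placeholder for your own attribute.
```

The key design point is that the wait is tied to one observable condition, so a timeout failure points at a specific element rather than at an arbitrary delay.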

Data management is the next pillar. Tests that depend on unique usernames, order numbers, or payment tokens need a controlled way to generate and retire that data. Service virtualization or API-level fixtures can reset state between cases, so the browser layer stays stateless and parallel-friendly. Once data is predictable, parallel execution becomes practical. Running ten threads on a local grid or a cloud farm turns a two-hour suite into a coffee-break task, which keeps build pipelines short enough to serve continuous delivery targets.
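One way to keep test data collision-free under parallel execution is to derive every identity from a random token plus the worker's ID. The sketch below is illustrative only: `GeneratedUser`, `make_test_user`, and the `qa-` prefix are hypothetical names, and the `example.test` domain is used because `.test` is reserved for testing.

```python
import uuid
from dataclasses import dataclass


@dataclass(frozen=True)
class GeneratedUser:
    username: str
    email: str


def make_test_user(worker_id: str) -> GeneratedUser:
    """Create a unique, recognizable user identity for one test case.

    The random token avoids collisions between parallel workers, while the
    fixed prefix makes leftover records easy to find and clean up later.
    """
    token = uuid.uuid4().hex[:12]
    username = f"qa-{worker_id}-{token}"
    return GeneratedUser(username=username, email=f"{username}@example.test")
```

The actual account would still be created and retired through an API-level fixture. This helper only guarantees that two workers never ask for the same name.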

Finally, every mature suite embeds reporting from the outset. A failing test should give engineers an artifact they can reproduce (a screenshot, network trace, or video segment) rather than a one-line stack trace. Tools like Allure or ExtentReports hook into the test runner, so they need little or no change to the test code itself. They surface trends such as rising flake rates or a slow creep in average execution time, allowing leadership to invest in maintenance before the suite degrades.
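Artifact capture can be sketched as a small context manager that saves evidence only when a test step fails. The name `capture_on_failure` and the directory layout are assumptions. `save_screenshot` and `page_source` are standard members of Selenium's Python WebDriver, and reporting tools can then attach the resulting files.

```python
from contextlib import contextmanager
from pathlib import Path
from typing import Any, Iterator


@contextmanager
def capture_on_failure(driver: Any, artifact_dir: Path, test_name: str) -> Iterator[None]:
    """Save a screenshot and the page HTML if the wrapped block raises."""
    try:
        yield
    except Exception:
        artifact_dir.mkdir(parents=True, exist_ok=True)
        # save_screenshot and page_source are standard WebDriver members.
        driver.save_screenshot(str(artifact_dir / f"{test_name}.png"))
        html_path = artifact_dir / f"{test_name}.html"
        html_path.write_text(driver.page_source, encoding="utf-8")
        raise  # Re-raise so the test runner still records the failure.
```

Usage would look like `with capture_on_failure(driver, Path("artifacts"), "test_checkout"): ...` around the test body. Many teams wire the same logic into a test-runner hook instead.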

A well-architected suite does more than guard releases. It becomes institutional knowledge, documenting how the application should behave. That knowledge is expensive to build and fragile without discipline, which is why many enterprises lean on specialized QA partners to enforce patterns, audit flake, and rotate brittle selectors before they break the nightly build.

Scaling Selenium with Infrastructure and CI/CD Integration

Once the test suite is stable, the next constraint is raw infrastructure. A single workstation can drive only a handful of browsers before CPU, memory, or network I/O throttles throughput.

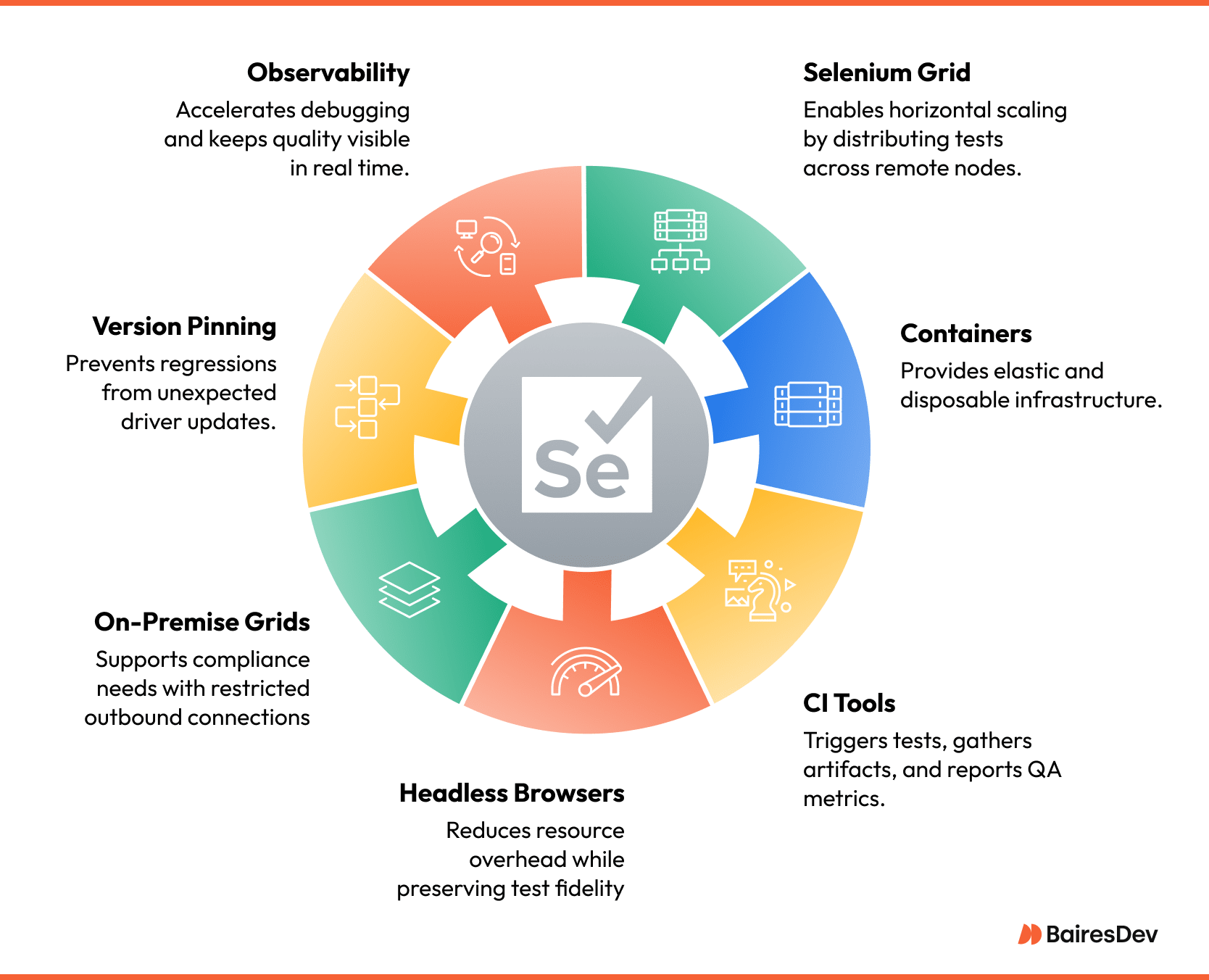

Selenium Grid solves that bottleneck by routing commands to remote nodes, each running its own driver and browser instance. In practice, most teams deploy Grid inside containers. A Docker Compose file or a Kubernetes Helm chart spins up a hub and as many node replicas as the pipeline needs, then tears them down when the job completes. This elasticity contains cost and keeps local developer machines free for actual coding.
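Because containers take a moment to start, pipelines usually wait for the Grid to report readiness before launching tests. The sketch below assumes a Selenium Grid 4 deployment, which serves a JSON status document at `/status` with a `value.ready` flag. The base URL, the timeouts, and the function names are placeholders.

```python
import json
import time
import urllib.request
from typing import Any, Dict


def grid_is_ready(status: Dict[str, Any]) -> bool:
    """Return True when a Grid /status payload reports ready."""
    return bool(status.get("value", {}).get("ready", False))


def wait_for_grid(base_url: str, timeout: float = 60.0, poll: float = 2.0) -> None:
    """Block until the Grid at base_url is ready, or raise TimeoutError."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(f"{base_url}/status", timeout=5) as response:
                if grid_is_ready(json.load(response)):
                    return
        except (OSError, ValueError):
            pass  # Grid is not accepting connections or not returning JSON yet.
        time.sleep(poll)
    raise TimeoutError(f"Grid at {base_url} not ready after {timeout} seconds")
```

A CI job might call `wait_for_grid("http://localhost:4444")` right after starting the containers, where the host and port depend on your own deployment.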

Integration with the delivery pipeline is equally important.

Jenkins, GitHub Actions, GitLab, and CircleCI all provide parallel executors that can launch Grid containers, run tests, and gather artifacts. A healthy pipeline publishes three core metrics after every run: total duration, pass rate, and newly introduced flake. These numbers let engineering leadership decide whether to merge, roll back, or divert effort into test maintenance.
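Those three metrics can be computed from per-test retry outcomes. The sketch below rests on stated assumptions: a test counts as flaky if it both failed and passed within the same run, a test counts as passed if its final attempt passed, and the names `RunSummary` and `summarize_run` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class RunSummary:
    duration_seconds: float
    pass_rate: float
    new_flaky_tests: List[str]


def summarize_run(
    attempts: Dict[str, List[bool]],
    wall_clock_seconds: float,
    known_flaky: Set[str],
) -> RunSummary:
    """Summarize one pipeline run.

    attempts maps each test name to the outcome of every attempt in order
    (True means passed).
    """
    passed = [name for name, outcomes in attempts.items() if outcomes and outcomes[-1]]
    flaky = {
        name
        for name, outcomes in attempts.items()
        if True in outcomes and False in outcomes
    }
    pass_rate = len(passed) / len(attempts) if attempts else 0.0
    return RunSummary(
        duration_seconds=wall_clock_seconds,
        pass_rate=pass_rate,
        new_flaky_tests=sorted(flaky - known_flaky),
    )
```

Tracking `known_flaky` between runs is what turns a raw flake count into "newly introduced flake", which is the signal worth alerting on.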

Headless browsers have become the default for CI runs in 2025 because they avoid the overhead of a window manager and a visible display. Chrome and Firefox both ship official headless modes that run the same rendering engine as their headed counterparts, so behavior is very close while resource consumption is typically lower. For visual validations, the browser still renders pages off-screen, which allows screenshot capture or pixel comparison without the weight of a full desktop environment.

Security and compliance add another layer. Some industries prohibit outbound traffic from staging environments, making cloud-hosted device farms a non-starter. In those scenarios, companies deploy on-premise grids behind a reverse proxy or in a hardened DMZ. Container images are pinned to specific browser and driver versions, ensuring that a sudden browser auto-update does not invalidate three hundred tests overnight.
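A pinned image can be guarded by a small pre-flight check that the browser and driver still agree. This sketch assumes compatibility is decided by the major version, which holds for Chrome and ChromeDriver; other drivers use different schemes. The function names and version strings are illustrative.

```python
def major_version(version: str) -> int:
    """Extract the leading integer from a dotted version string."""
    return int(version.strip().split(".")[0])


def versions_match(browser_version: str, driver_version: str) -> bool:
    """Return True when browser and driver share the same major version."""
    return major_version(browser_version) == major_version(driver_version)


# Illustrative version strings, not recommendations.
pinned_ok = versions_match("126.0.6478.126", "126.0.6478.61")
drifted = versions_match("127.0.6533.72", "126.0.6478.61")
```

Failing the pipeline early on a mismatch gives a clearer signal than hundreds of session-creation errors.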

Observability closes the loop. Centralized log aggregation and trace correlation tie a failing Selenium command to the exact microservice request that returned a 500, shortening root-cause analysis. When your CI server pushes results into a shared dashboard, product owners see the state of quality in real time, not at the end of a release sprint.

Scaling, therefore, is less about adding hardware and more about codifying process: immutable infrastructure, versioned dependencies, artifact retention, and feedback that reaches humans quickly enough to influence the next commit. Enterprises that treat these capabilities as first-class citizens release faster, recover from failures sooner, and preserve developer confidence, even as AI tooling keeps pushing commit velocity higher.

When to DIY and When to Outsource: Selenium as a Strategic Lever

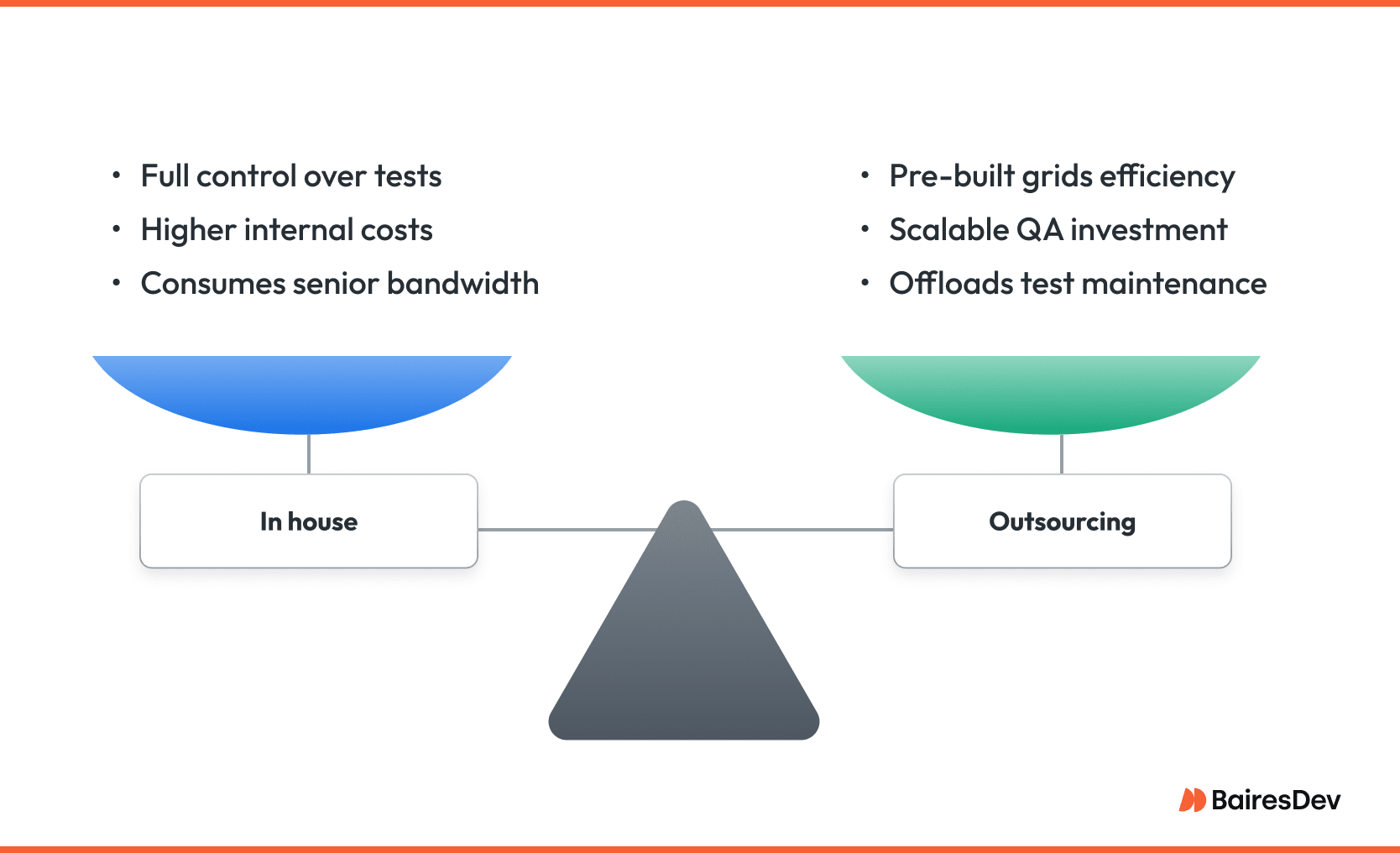

Owning a Selenium stack gives engineering leaders direct control over test coverage, data privacy, and release timing. That control has a cost that rises with every new browser version, feature flag, or regulatory audit. Teams must provision infrastructure, run test scripts, patch drivers, refactor flaky selectors, and educate new hires who often rotate before the suite matures.

Each of those tasks pulls senior engineers away from revenue work.

Outsourcing shifts the burden to specialists who live inside quality metrics every day. A partner brings pre-built grids, hardened selector patterns, and dashboarding that plugs into existing CI systems in a single sprint. More important, they absorb maintenance debt by refactoring locators before flake reaches your pipeline. The business case is strongest when the product roadmap is dense, the front-end changes weekly, or compliance rules demand traceable evidence of every executed test.

A hybrid approach is common. Core smoke tests stay in-house for rapid feedback, while a partner expands regression depth and manages the infrastructure that keeps parallel runs under ten minutes. This model preserves strategic knowledge inside your team and reduces capital spend on servers and headcount. It also turns quality into an operating expense that scales with release velocity instead of a fixed cost that grows every quarter.

Final Takeaway: Testing Requires Craft, Infrastructure, and Ownership

Selenium remains the most versatile browser automation framework because it speaks directly to Chrome, Firefox, Safari, and every derivative that matters in enterprise environments. The rise of AI-driven development has not lessened its value. Quite the opposite: it has increased the volume of code that must be exercised before every merge.

Success depends on disciplined abstractions, predictable infrastructure, and fast feedback. Whether those capabilities live inside your team or with a trusted QA partner, the mandate is the same: deliver stable software at the pace of modern, AI-augmented development without sacrificing user trust.

Frequently Asked Questions

How much parallelism is realistic on an internal Selenium Grid?

With container orchestration, most teams sustain fifty to one hundred concurrent sessions before I/O limits appear; beyond that, cloud grids or hardware sharding become cost-effective.

Does headless mode hide rendering bugs that occur in full browsers?

Headless Chrome and Firefox share the same rendering engine as their headed versions, so functional parity is high. Visual anomalies still require screenshot or pixel comparison tools.

How often should we upgrade browser drivers in production pipelines?

Most teams pin drivers to the browser version used in staging, then schedule monthly updates behind a feature flag to catch breaking changes before they reach users.

When does outsourcing Selenium testing make financial sense?

If the cost of maintaining grids and refactoring selectors exceeds a single QA engineer’s salary, or if release cadences outpace in-house capacity, a managed service usually lowers total cost of ownership.

How do we prove compliance when auditors request evidence of test execution?

Store test artifacts centrally, including logs, screenshots, and versioned driver binaries, and expose them through immutable reports that map each test to the commit and environment under audit.
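As one possible shape for such a report, the sketch below builds a JSON manifest that ties a test to its commit and environment and records a SHA-256 digest for each artifact, so later tampering is detectable. The function name, field names, and sample values are hypothetical. Making the stored manifest immutable is left to your storage layer.

```python
import hashlib
import json
from pathlib import Path
from typing import Dict, Iterable


def build_evidence_manifest(
    test_name: str,
    commit: str,
    environment: str,
    artifacts: Iterable[Path],
) -> str:
    """Return a JSON manifest linking a test run to hashed artifacts."""
    digests: Dict[str, str] = {}
    for path in artifacts:
        # Hash the file contents so any later modification changes the digest.
        digests[path.name] = hashlib.sha256(path.read_bytes()).hexdigest()
    record = {
        "test": test_name,
        "commit": commit,
        "environment": environment,
        "artifacts_sha256": digests,
    }
    # sort_keys keeps the output stable, which simplifies diffing and signing.
    return json.dumps(record, sort_keys=True, indent=2)
```

An auditor can recompute the digests from the archived files and compare them with the manifest to confirm the evidence is unchanged.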