Most content teams are facing the same pressure: publish more, in more formats, without adding headcount. Generative AI has made that lean approach feasible, and a growing share of what gets published today is either drafted or rewritten by an LLM rather than a human.

According to a report published by the SEO firm Graphite, over 50% of newly published pages on the internet feature AI-generated content. A separate report by Ahrefs found that 74.2% of new content is at least partially generated using artificial intelligence.

This shift creates real opportunity. AI content generation lets teams use content more aggressively as part of their business strategy, whether for marketing, process documentation, or user education. Teams that were previously constrained by budget or capacity can suddenly consider much larger content programs.

It also introduces new forms of risk. Misusing AI-generated content can damage the company’s brand or introduce security and compliance issues. The ubiquity of AI means your competitors have access to the same tools, so volume is no longer a differentiator. The advantage comes from how well you control quality and voice at scale.

This article looks at both the opportunities and risks associated with AI and content generation. Once we understand the risk–reward trade-off, we’ll explore practical ways to build an AI content practice and specific methods for scaling both the quality and quantity of AI-generated content.

We’ll start by examining why companies want to use AI to generate content in the first place.

Using AI to Scale the Content Creation Process

Almost every business can benefit from a well-executed content strategy, and AI makes that strategy almost universally accessible by letting businesses scale along two dimensions: quantity and quality.

- Quantity scaling should be relatively intuitive. Generative AI models produce longer and more complex outputs with each new model generation. You can get relatively convincing content by simply specifying a topic, a writing style, and some proprietary data to guide the LLM.

- Quality scaling is a less-explored but equally exciting approach. Good writers are hard to come by, especially in fields like technical writing, where the required blend of effective communication and technical expertise makes the talent pool relatively small. A well-tuned AI system can serve as a writing assistant or a research agent, filling in gaps in a writer’s expertise and allowing writers to produce more sophisticated content.

| Dimension | Quantity Scaling | Quality Scaling |
| --- | --- | --- |
| Primary goal | Publish more content, faster | Improve depth, clarity, and usefulness of each piece |
| Main lever | Automate drafting, outlining, and repurposing | Use AI as a research partner and editorial assistant |
| Typical workflows | Generate first drafts, variants, summaries, and snippets | Refine arguments, fill knowledge gaps, improve structure and framing |
| Constraints | Review capacity, SEO risk, brand consistency | Availability of strong editors and subject-matter reviewers |
| Success metrics | Time-to-first-draft, content volume per month | Reduced rework, expert satisfaction, engagement and conversion rates |
| Biggest failure mode | High-volume “AI slop” that erodes trust | Polished but shallow content that still lacks real insight |

Beyond generation, AI can act as a force multiplier for existing content. Teams use it to repurpose long-form pieces into multiple formats for different channels and audiences. It’s equally valuable for localization, adapting content for different markets while preserving technical accuracy, and for content refresh, updating documentation and older SEO articles without full rewrites. These applications extend the ROI of content you’ve already invested in creating.

As exciting as these opportunities are, however, there are serious risks that have to be accounted for and controlled when generating content with LLMs.

The Risks of AI-Generated Content

While AI has a lot of potential in content generation, AI tools applied thoughtlessly can be quite detrimental to a brand. Let’s briefly examine two major risks: hallucinations and brand dilution.

Hallucinations

One of the most salient challenges in AI-generated content is hallucination. While recent models have become much better at relying on the grounding data provided, hallucination remains a risk in situations where the grounding data is insufficient or where the line between factuality and creativity is blurry.

This is a particularly grave risk in professional communication like advertising, where factually inaccurate claims can have legal consequences, or in contexts like technical documentation, where hallucinated content can potentially cause system failure.

Brand Dilution

Another hidden risk of AI content generation is brand dilution, which happens when the output lacks a distinctive brand voice. This is often the result of human creativity being inserted too late into the process. When a draft is mostly written by AI and only edited afterwards, the tone of the article is set before it ever reaches a human editor.

This risk is often exacerbated by safeguards put in place against hallucinations, since prompts intended to ground the output can often result in an article that is overly factual and light on the kind of human embellishments that give content its distinctive brand voice. A diluted brand is much easier to displace, since a competitor can easily flood the space with similar content. Brand voice issues can also erode consumer confidence, since people might perceive the brand as “generating AI slop” and choose to disengage.

Beyond content quality, there are also operational and strategic risks to consider.

- Data security becomes a concern when teams feed proprietary information or sensitive customer data into external AI systems without proper safeguards.

- Search engines are also evolving their detection capabilities. While penalties aren’t universal yet, relying heavily on AI-generated content carries SEO risk as algorithms improve at identifying and potentially downranking it.

- Quality control becomes a challenge. When AI enables 10x content output, reviewing everything becomes impractical. Errors, inconsistencies, and tone problems slip through because editorial teams can’t keep pace with the volume.

Now that we understand the basic benefits and risks, let’s think about specific methodologies that amplify the benefits and control the risks. Choosing the correct method starts with understanding the needs of your content space.

Assess Your Content Space

Different content spaces have dramatically different AI engineering needs. For planning purposes, content can usually be divided into two categories: factual and editorial.

Factual Content

Factual content refers to pages like technical documentation, policy guidelines, and knowledge-base articles. These pages have very little space for editorializing, and a strong emphasis is placed on current and accurate information.

With factual content, having a strong data practice and fact-checking pipeline is especially important. Therefore, pay special attention to the factual grounding section of this article, where we will go over popular retrieval-augmented generation (RAG) techniques that minimize hallucination and give the model access to an up-to-date knowledge base.

Editorial Content

Editorial content is the flip side of factual content. These pages tend to be more focused on putting forward an opinion or a product, rather than simply transmitting information. These articles still need to be factually accurate, but there is a stronger emphasis on style, persuasiveness, and a distinctive voice.

If you’re generating more editorial content, pay particular attention to the prompt engineering section of this article, where we will go over techniques that help editors and prompters get the most out of AI models.

Of course, almost every use case involves a combination of the two. In an ideal world, we would have both factual groundedness and a distinctive brand voice. However, it is a good exercise to classify your content so that you can more easily assess how much work is needed to get a system to an adequate level of performance.

Now that you have classified your content, we can look at methodologies for scaling your content creation pipeline.

Building a Factually-Grounded AI Content Production Pipeline

When using AI to generate content, it is crucial to minimize hallucinations. To ensure this, you must build a robust data practice.

Your approach should follow the best practices of retrieval-augmented generation (RAG). Supporting data around a topic should be vetted, processed, and stored in a way that allows it to be retrieved reliably and accurately.

One of the key tasks of an AI content generation pipeline is the retrieval of passages and supporting materials related to the topic at hand. Two popular technologies here are vector databases and knowledge graphs, both with unique features that support data management and accurate retrieval.

Specifically, vector databases rely on “vector embeddings”, which are numerical representations of the semantic meanings contained in a particular document, paragraph, or sentence.

The distance between two vectors, commonly measured with cosine similarity or Euclidean distance, roughly corresponds to how similar the underlying passages are semantically. Therefore, a user can retrieve the k most relevant passages around a topic by querying for the k vectors closest to the query.
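
As a rough illustration of nearest-vector retrieval, here is a minimal sketch in plain Python. The three-dimensional "embeddings" and passage titles are invented for the example; a real system would get embeddings from an embedding model and store them in a vector database rather than a list.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query_vec, passages, k):
    """Return the text of the k passages most similar to query_vec."""
    ranked = sorted(
        passages,
        key=lambda p: cosine_similarity(query_vec, p["embedding"]),
        reverse=True,
    )
    return [p["text"] for p in ranked[:k]]

# Hand-made toy embeddings (assumption: real ones have hundreds of dimensions).
PASSAGES = [
    {"text": "How to rotate API keys", "embedding": [0.9, 0.1, 0.0]},
    {"text": "Resetting a user password", "embedding": [0.7, 0.3, 0.1]},
    {"text": "Quarterly brand guidelines", "embedding": [0.0, 0.2, 0.9]},
]

query = [1.0, 0.2, 0.0]  # pretend embedding of a credential-related question
print(top_k(query, PASSAGES, k=2))
```

The two security-related passages rank ahead of the brand guidelines because their vectors point in nearly the same direction as the query.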

Knowledge graphs, on the other hand, build a graph-structured representation of the data, with entities as nodes and relationships as edges. Because these relationships are stored explicitly, it is easier to reason about how entities relate to one another.
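
To make the idea concrete, here is a minimal sketch that stores facts as (subject, relation, object) triples in a plain Python list. The product names and relations are hypothetical, and a production system would typically use a dedicated graph database rather than list scans.

```python
# Each fact is an explicit (subject, relation, object) triple.
# All entities and relations here are invented for illustration.
TRIPLES = [
    ("Widget Pro", "has_feature", "offline mode"),
    ("Widget Pro", "supports_os", "Linux"),
    ("Widget Pro", "supports_os", "macOS"),
    ("Widget Lite", "supports_os", "macOS"),
    ("offline mode", "introduced_in", "v2.3"),
]

def objects_of(subject, relation, triples=TRIPLES):
    """All objects linked to `subject` by `relation`."""
    return [o for s, r, o in triples if s == subject and r == relation]

def subjects_with(relation, obj, triples=TRIPLES):
    """All subjects linked to `obj` by `relation`."""
    return [s for s, r, o in triples if r == relation and o == obj]

def two_hop(subject, rel1, rel2, triples=TRIPLES):
    """Follow rel1 from subject, then rel2 from each intermediate entity."""
    results = []
    for mid in objects_of(subject, rel1, triples):
        results.extend(objects_of(mid, rel2, triples))
    return results

print(objects_of("Widget Pro", "supports_os"))
print(two_hop("Widget Pro", "has_feature", "introduced_in"))
```

The two-hop query answers a question like "in which version did Widget Pro's features first appear?" by following explicit edges, which is the kind of precise lookup that similarity search alone does not guarantee.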

Whereas vector databases excel at quickly retrieving a large, diverse set of supporting data, knowledge graphs allow for more precise, structured data retrieval, making them more suitable for AI content where highly specific facts are required.

In addition to RAG, another technique worth mentioning is “LLM-as-a-judge”. By crafting specialized prompts and using powerful reasoning models, one can build a pipeline of robust checks that catch many potential issues automatically. Two popular checks are groundedness, where the LLM is asked to decide whether the output comes entirely from the supplied context, and relevance, where the LLM determines whether the output adheres to the content requirements.

While not a substitute for human editors reviewing the output, judicious use of LLM-as-a-judge techniques can make editors much more productive by automating certain content checks and flagging glaring mistakes.
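
A groundedness check of this kind might look roughly like the sketch below. The prompt wording, the PASS/FAIL convention, and the `call_llm` parameter are all assumptions made for illustration; `call_llm` stands in for whatever model client your stack uses, and `fake_judge` replaces a real model so the example runs offline.

```python
from typing import Callable

GROUNDEDNESS_PROMPT = """You are a strict fact-checking judge.
Context:
{context}

Draft:
{draft}

Is every factual claim in the draft supported by the context?
Answer with exactly one word on the first line: PASS or FAIL.
Then list any unsupported claims."""

def judge_groundedness(draft: str, context: str, call_llm: Callable[[str], str]) -> dict:
    """Ask a judge model whether `draft` is fully supported by `context`.

    `call_llm` is a hypothetical hook: it takes a prompt string and
    returns the model's text reply.
    """
    reply = call_llm(GROUNDEDNESS_PROMPT.format(context=context, draft=draft))
    lines = reply.strip().splitlines()
    verdict = lines[0].strip().upper() if lines else ""
    return {
        # Anything other than an explicit PASS is treated as a failure
        # so that ambiguous replies are routed to a human editor.
        "passed": verdict == "PASS",
        "notes": "\n".join(lines[1:]).strip(),
    }

def fake_judge(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs without network access."""
    return "FAIL\n- The draft claims a 99.99% uptime guarantee not found in the context."

result = judge_groundedness(
    draft="Our service guarantees 99.99% uptime.",
    context="The service is hosted in two regions.",
    call_llm=fake_judge,
)
print(result["passed"], "|", result["notes"])
```

Defaulting to failure on any unclear reply is a deliberate choice here: the judge is meant to reduce the editor's workload, not to approve content on its own.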

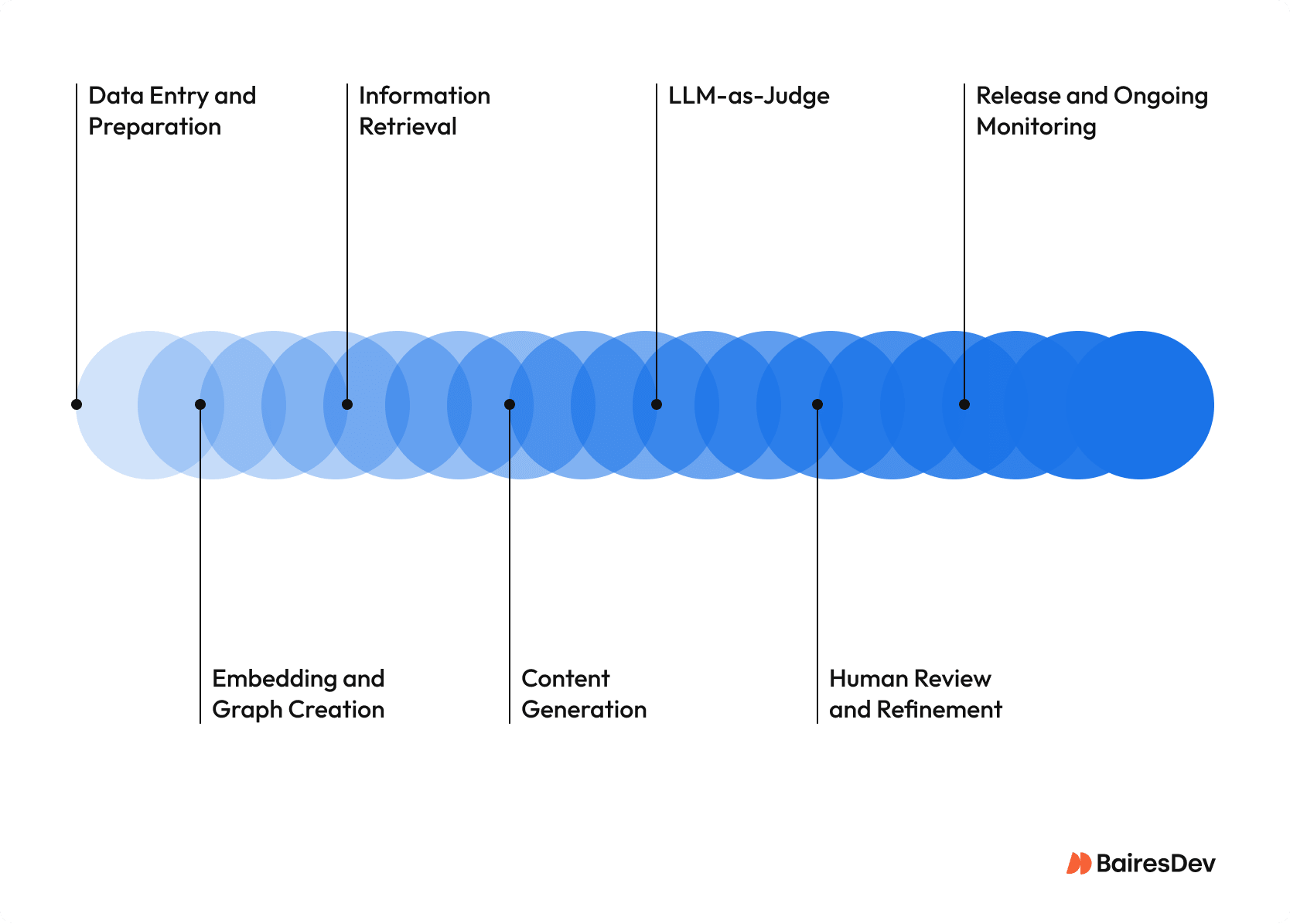

| Stage | What happens | Key questions | Primary owner |
| --- | --- | --- | --- |
| 1. Source & governance | Decide which documents, pages, and systems are “source of truth” for the model. | What content do we trust? What’s in or out of scope for AI use? | Content lead / SMEs |
| 2. Ingestion & preprocessing | Collect, clean, and chunk content into model-friendly units with metadata. | How do we split and tag content so it’s retrievable and maintainable? | AI / platform engineering |
| 3. Indexing & retrieval (RAG layer) | Build embeddings / vector indexes and optionally a knowledge graph. | How do we find the right passages reliably and quickly? | AI / platform engineering |
| 4. LLM generation | Use prompts + retrieved context to draft or update content. | What instructions and constraints should the model follow? | Prompt engineer / editor |
| 5. Automated checks (LLM-as-a-judge) | Run groundedness, relevance, and basic quality checks on the output. | Does this match the context and requirements well enough to review? | AI / platform engineering |
| 6. Human editorial review | Editors and SMEs fact-check, adjust tone, and sign off for publication. | Is this accurate, on-brand, and safe to publish? | Editors / subject-matter experts |
| 7. Monitoring & feedback | Track issues, update data and prompts, and refine the pipeline over time. | Where are errors coming from, and how do we fix them systematically? | Content lead + AI engineering |
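
The chunking step in stage 2 of the table above can be sketched as follows. This is one simple strategy (fixed-size word windows with overlap), chosen for brevity; the window sizes, metadata fields, and the `handbook/security.md` path are illustrative assumptions, and many teams split on headings or sentences instead.

```python
def chunk_document(text, source, max_words=50, overlap=10):
    """Split text into overlapping word windows, each tagged with metadata."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    chunks = []
    step = max_words - overlap
    for start in range(0, len(words), step):
        window = words[start:start + max_words]
        chunks.append({
            "text": " ".join(window),
            "source": source,      # where the chunk came from
            "start_word": start,   # position in the source, useful for citations
        })
        if start + max_words >= len(words):
            break  # this window already reached the end of the document
    return chunks

# A synthetic 120-word document: w0 w1 ... w119
doc = " ".join(f"w{i}" for i in range(120))
chunks = chunk_document(doc, source="handbook/security.md")  # hypothetical path
print(len(chunks), [c["start_word"] for c in chunks])
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, and the metadata lets editors trace a retrieved passage back to its source.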

Now that we have covered techniques for ensuring AI-generated content is grounded and factual, let’s look at how to give your AI-written content a unique voice.

Giving Your AI-Driven Content a Unique Voice

In order to give your content a more compelling voice, a well-designed editorial process is a must. However, there are a few differences between a traditional content studio and an AI-driven one.

In both organizations, the expertise of the editor is paramount. In addition to traditional content editing skills, a good editor in an AI content studio must also be an expert prompt engineer.

When AI-generated content needs editing, the editor should aim to change not just the content but also the prompt that created it. Iterating on the content generation prompts in this way lets the quality of the AI pipeline improve with each production run.

Let’s review some of the most effective techniques for modifying those prompts.

Iterative Prompting

The first and most foundational technique is iterative prompting, which requires the editor to think almost like a programmer, making small changes to prompts and testing the effects along the way. This is especially important for prompts that concern voice and creativity, since they can often conflict with other instructions, or make the model more prone to hallucinations.

To make iteration easier, the prompts should be well-structured, composable, and supported by AI engineers in a way that allows editors to simulate production environments as much as possible.
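
One way to make prompts structured and composable is to assemble them from named sections, so an editor can change one section and compare results. The sketch below is a hypothetical design, not a standard library; the `PromptTemplate` class, section names, and the "Acme" brand are invented for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class PromptTemplate:
    """A prompt assembled from named sections that can be swapped independently."""
    sections: dict = field(default_factory=dict)

    def with_section(self, name: str, text: str) -> "PromptTemplate":
        """Return a copy with one section replaced, leaving the original untouched."""
        return PromptTemplate({**self.sections, name: text})

    def render(self, **variables) -> str:
        """Join the sections in order and fill in {placeholders}."""
        body = "\n\n".join(f"## {name}\n{text}" for name, text in self.sections.items())
        return body.format(**variables)

base = PromptTemplate({
    "Role": "You are a staff writer for {brand}.",
    "Voice": "Write in a calm, precise tone.",
    "Task": "Draft an introduction about {topic}.",
})

# One small, isolated change per iteration, as a programmer would make.
variant = base.with_section("Voice", "Write in an upbeat, conversational tone.")

print(variant.render(brand="Acme", topic="API key rotation"))
```

Because `with_section` returns a copy, the baseline prompt stays intact and both versions can be run side by side against the same inputs.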

Meta Prompting

Once we hit the limits of hand-prompting, it is time to move on to meta-prompting, which uses LLMs to create prompts. Many modern LLMs have specific training around prompting, because many AI applications today have an optimization layer where user instructions are translated to more detailed prompts.

We can take advantage of this functionality by showing the LLM a prompt and asking something like “How can we modify the prompt so the resulting content is more energetic?”
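
A meta-prompt of this kind can be as simple as a template that wraps the existing prompt and states the goal. The wording below is an assumption for illustration, not a recommended canonical form, and the resulting string would be sent to whichever model you use.

```python
META_PROMPT = """You are an expert prompt engineer.
Below is a prompt we use to generate content.

<prompt>
{prompt}
</prompt>

Goal: {goal}

Rewrite the prompt so its output better meets the goal.
Change as little as possible and explain each change in one line."""

def build_meta_prompt(prompt: str, goal: str) -> str:
    """Wrap an existing prompt in instructions asking a model to improve it."""
    return META_PROMPT.format(prompt=prompt, goal=goal)

current = "Write a 200-word product update about our new dashboard."
print(build_meta_prompt(current, "Make the resulting content more energetic."))
```

Asking for minimal changes with a one-line explanation each keeps the model's rewrite reviewable, which matters when editors iterate on prompts in small steps.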

The model’s suggestions can then be used as a starting point for further iteration.

Chain-of-Thought and Tree-of-Thought Prompting

During this process, it is also a good idea to use advanced prompt engineering techniques like chain-of-thought prompting, which asks the model to reason step by step, or even tree-of-thought prompting, which has it explore several lines of reasoning, to give you more insights and options on how to proceed.

Few-Shot Prompting

Sometimes, though, companies make stylistic choices that are just too hard to put into a prompt. In those cases, it might make sense to use few-shot prompting. Few-shot prompting involves showing the AI several examples of the desired output and asking it to mimic them. While often associated with reasoning and logic-based workloads, this technique is also highly effective as creative direction, since some principles are easier to show than to describe.
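
As a minimal sketch, a few-shot prompt can be assembled from pairs of plain and on-brand text. The release notes and their rewrites below are invented examples; in practice you would use pieces your editors have already approved.

```python
def build_few_shot_prompt(instruction, examples, new_input):
    """Assemble a prompt that shows worked examples before the real input."""
    parts = [instruction.strip(), ""]
    for i, (source, styled) in enumerate(examples, start=1):
        parts += [f"Example {i}", f"Input: {source}", f"Output: {styled}", ""]
    # End on an open "Output:" so the model continues in the demonstrated style.
    parts += [f"Input: {new_input}", "Output:"]
    return "\n".join(parts)

# Invented (plain text, brand-voice text) pairs for illustration.
EXAMPLES = [
    ("We added dark mode.", "Night owls, rejoice: dark mode has landed."),
    ("Exports are faster now.", "Blink and you'll miss it: exports just got quicker."),
]

prompt = build_few_shot_prompt(
    "Rewrite each release note in our brand voice.",
    EXAMPLES,
    "We fixed the login bug.",
)
print(prompt)
```

The examples carry the stylistic direction, so the instruction itself can stay short.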

By using these techniques, you can scale the quality of your content pipeline, so that your brand is strengthened by AI, rather than diluted by it.

Weighing Risk and Opportunity

We’ve looked at why companies are turning to AI for content, where the main risks lie, and how techniques like RAG, LLM-as-a-judge, and structured prompting can raise the quality bar. Taken together, they point to a simple reality: content volume is no longer the bottleneck. At the same time, high-quality content is becoming less of a moat.

The downside is that the same tools that let you move faster also make it easier to scale bad decisions. AI does not fix weak strategy or broken processes. It can help teams execute the wrong content plan more quickly, create more off-brand material, and generate more technical inaccuracies, all of which are expensive to unwind. That’s exactly what many companies discovered in 2024 and 2025, when first-wave AI content experiments led to rework, internal backlash, or loss of audience trust.

While most concerns are valid, and the risks are very real, the industry has come a long way in recent years. Content leaders have adjusted their workflows to minimize risk, engineers have fine-tuned their systems, and the models themselves have improved.

As we move into 2026, the real differentiator won’t be who uses AI for content, since everyone does, but who can consistently turn AI output into high-quality content instead of adding to the growing pile of AI slop.