For years, the answer to any hard problem in AI was simple: throw more GPUs at it. That era has reached its end—not because GPUs failed, but because the questions we’re asking now are profoundly more specific.

We’re past just asking for raw TFLOPS. We’re on the hook for latency low enough to hold a real-time conversation with a customer, which often means serving each request at a batch size of one. You have to scrutinize the punishing economics of scaling inference on hardware where a single Nvidia H100 (SXM) can draw up to 700 watts, which makes total cost of ownership (TCO) a board-level concern.

This is why the one-size-fits-all approach is fracturing. The GPU, once the singular tool, is now being complemented by custom AI chips: specialist designs like Groq’s LPU, an architecture that can run a 70-billion-parameter model at hundreds of tokens per second (TPS). Why? Because it was designed for that one job and nothing else.

This isn’t some attempt to dethrone Nvidia. It’s just industrial specialization. The kind that happens in any maturing field.

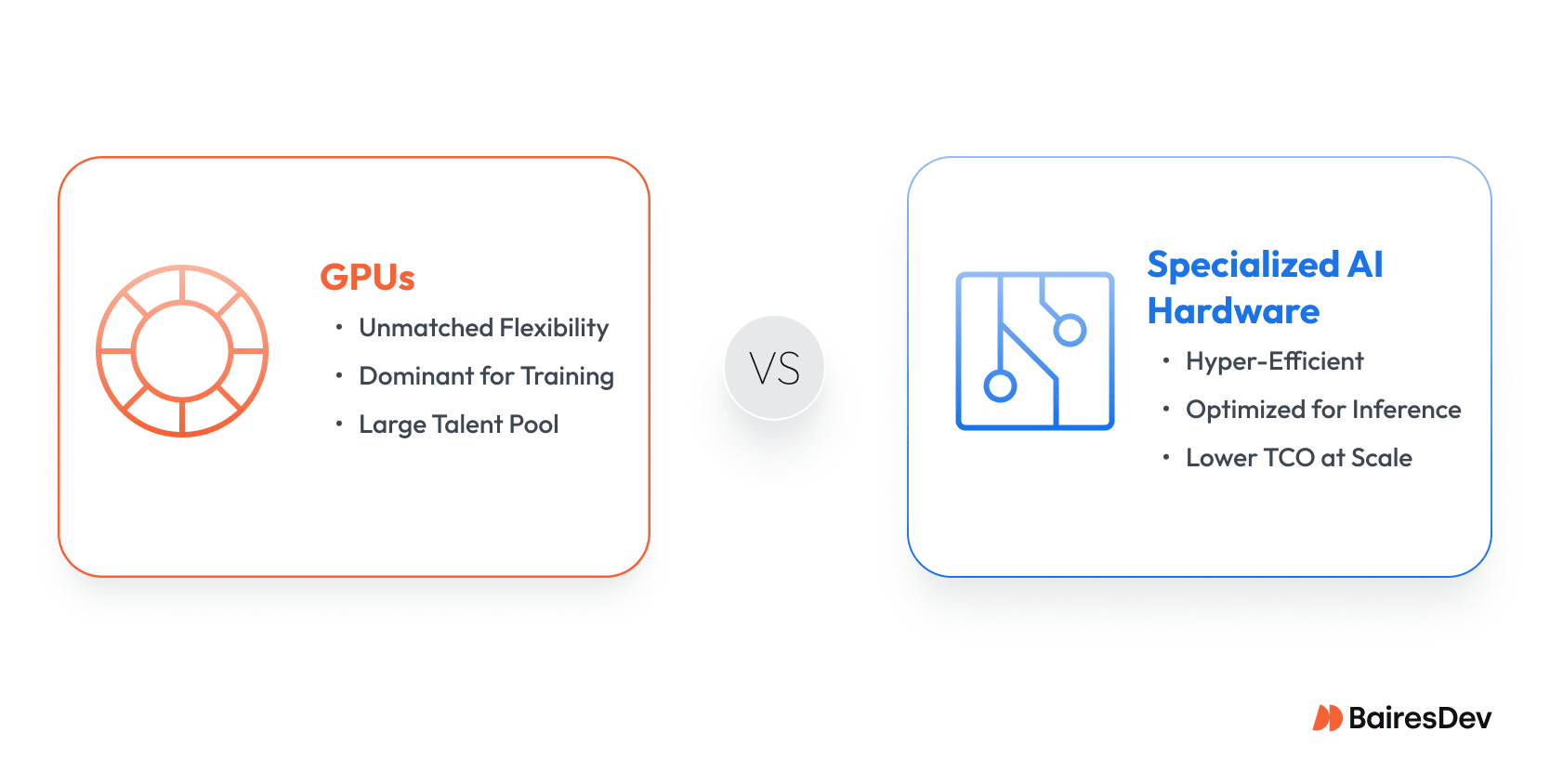

The Foundation: Giving the GPU Its Due

Before we get to the specialist AI hardware companies, you have to give the foundational platform its due. The GPU, specifically a flagship like Nvidia’s H100 with its 989 TFLOPS of peak FP16 performance (without sparsity), is still the undisputed sovereign of AI model training. Period.

Its flexibility is the bedrock of deep learning, and the CUDA ecosystem is a ridiculously deep moat of tools and expertise. An unshakable advantage in R&D and discovery. Just ask AMD and Intel. For the messy, brute-force work of training, that firehose of general-purpose compute is exactly what you need, and Nvidia reigns supreme.

But the physics of inference is a different game. The metric that matters is often not TFLOPS. It’s memory bandwidth. How fast can you feed the model’s weights to the chip? Generating one token at a time means streaming essentially all of the model’s weights from memory for every token, so the H100’s 3.35TB/s of HBM bandwidth, while immense, becomes the real bottleneck.
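Why does bandwidth beat TFLOPS here? A back-of-envelope sketch makes it concrete. It assumes FP16 weights (2 bytes per parameter), roughly 2 FLOPs per parameter per generated token, and no KV-cache traffic or kernel overheads, and it treats the whole model as if it sat behind one H100's HBM even though a 140 GB model would really need at least two 80 GB cards. The function name `decode_bounds` is hypothetical.

```python
# Batch-size-one decode: compare time to stream weights vs. time to compute.
# Simplified: ignores KV-cache reads, activations, kernel overheads, and the
# fact that a 140 GB FP16 model needs at least two 80 GB H100s.

H100_HBM_BYTES_PER_S = 3.35e12   # H100 SXM HBM3 bandwidth (figure from the text)
H100_FP16_DENSE_FLOPS = 989e12   # dense FP16 peak (figure from the text)


def decode_bounds(params: float, bytes_per_param: float,
                  bandwidth: float = H100_HBM_BYTES_PER_S,
                  peak_flops: float = H100_FP16_DENSE_FLOPS) -> dict:
    """Lower bounds on per-token time from memory traffic and from math."""
    weight_bytes = params * bytes_per_param
    flops_per_token = 2 * params              # ~1 multiply + 1 add per weight
    t_mem = weight_bytes / bandwidth          # every weight read once per token
    t_compute = flops_per_token / peak_flops
    return {
        "t_mem_s": t_mem,
        "t_compute_s": t_compute,
        "max_tokens_per_s": 1.0 / max(t_mem, t_compute),
        "memory_bound": t_mem > t_compute,
    }


est = decode_bounds(params=70e9, bytes_per_param=2)   # 70B model in FP16
print(f"memory time per token:  {est['t_mem_s'] * 1e3:.1f} ms")
print(f"compute time per token: {est['t_compute_s'] * 1e3:.2f} ms")
print(f"ceiling: {est['max_tokens_per_s']:.0f} tokens/s "
      f"(memory-bound: {est['memory_bound']})")
```

With these inputs the memory term is about 42 ms per token against roughly 0.14 ms of arithmetic, so the ceiling lands near 24 tokens per second per stream: the tensor cores spend most of their time waiting on HBM. Batching raises aggregate throughput because one pass over the weights serves many requests, but a single real-time conversation cannot exploit that.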

That’s the chink in the armor. A specific, predictable vulnerability where a specialized tool is designed to win. The architects we’re about to examine didn’t just notice this; they bet their companies and reputations on it.

“People mistake this for a hardware war, but it’s fundamentally an economics problem,” said Fuad Abazovic, Principal Analyst, ACAnalysis. “Nvidia’s GPU, backed by its CUDA software fortress, is the undisputed king of training. These upstarts aren’t trying to assault the fortress head-on. They’re exploiting a different economic model: the OPEX-driven world of inference at scale.”

Abazovic went on to point out that the upstarts have built specialized tools designed to win on metrics like performance-per-watt and a lower total-cost-per-query.

“Their bet is simple: at scale, the company with the most efficient economics per token wins, and a specialized tool will always be more efficient than a general-purpose one.”
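To make "economics per token" concrete, here is a minimal cost model. It is a sketch under stated assumptions: it counts only electricity and straight-line hardware depreciation, leaves out cooling, networking, software, and staff, and the `ServingNode` figures in the usage lines are placeholders rather than vendor numbers.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365


@dataclass
class ServingNode:
    """Hypothetical inference node; every field is an illustrative input."""
    name: str
    power_kw: float        # average wall power, drawn whether busy or idle
    tokens_per_s: float    # sustained output throughput when busy
    capex_usd: float       # purchase price of the node
    lifetime_years: float  # straight-line depreciation period


def cost_per_million_tokens(node: ServingNode, usd_per_kwh: float,
                            utilization: float = 1.0) -> float:
    """Energy plus amortized hardware cost per one million output tokens.

    Power and depreciation accrue every hour; only the `utilization`
    fraction of those hours produces tokens. Cooling, networking,
    software, and staff costs are deliberately left out.
    """
    tokens_per_hour = node.tokens_per_s * 3600 * utilization
    energy_usd_per_hour = node.power_kw * usd_per_kwh
    capex_usd_per_hour = node.capex_usd / (node.lifetime_years * HOURS_PER_YEAR)
    return (energy_usd_per_hour + capex_usd_per_hour) / tokens_per_hour * 1e6


# Placeholder numbers only; swap in quotes and benchmarks from your own evaluation.
node = ServingNode("example", power_kw=10.0, tokens_per_s=5_000,
                   capex_usd=300_000, lifetime_years=4)
for u in (1.0, 0.5):
    print(f"utilization {u:.0%}: ${cost_per_million_tokens(node, 0.10, u):.2f} per 1M tokens")
```

In this model, halving utilization doubles the cost per token, which is one reason per-token economics depend on keeping hardware busy as much as on raw efficiency.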

Demand for inference is expected to increase significantly over the next eighteen months, pushing trained problem-solving models into prime time. Companies are expected to introduce inference-focused hardware that delivers better performance per watt for every token generated.

“Enterprise customers will heavily rely on Time to First Token (TTFT) to provide users with a more responsive experience,” Abazovic told BairesDev.
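TTFT is easy to confuse with overall latency. The minimal client-side sketch below separates the two, assuming only that a serving API hands you tokens through an iterator as they stream in. `fake_stream` is a hypothetical stand-in, not any vendor's SDK.

```python
import time
from typing import Iterable, Iterator, Optional


def measure_stream(tokens: Iterable[str]) -> dict:
    """Client-side timing of a token stream.

    Works with any iterator that yields tokens as they arrive; the timer
    starts before the first token is requested.
    """
    start = time.perf_counter()
    ttft: Optional[float] = None
    count = 0
    for _ in tokens:
        if ttft is None:
            ttft = time.perf_counter() - start   # time to first token
        count += 1
    total = time.perf_counter() - start
    decode_s = total - (ttft or 0.0)
    rate = (count - 1) / decode_s if count > 1 and decode_s > 0 else None
    return {"ttft_s": ttft, "total_s": total, "tokens": count,
            "decode_tokens_per_s": rate}


def fake_stream(n: int, first_delay: float, per_token: float) -> Iterator[str]:
    """Hypothetical stand-in for a streaming endpoint: a prefill pause,
    then a steady stream of tokens."""
    time.sleep(first_delay)
    for i in range(n):
        if i:
            time.sleep(per_token)
        yield f"tok{i}"


stats = measure_stream(fake_stream(20, first_delay=0.05, per_token=0.005))
print(f"TTFT {stats['ttft_s'] * 1e3:.0f} ms, total {stats['total_s'] * 1e3:.0f} ms, "
      f"{stats['tokens']} tokens")
```

In a chat product, TTFT largely reflects queueing and prefill (processing the prompt), while the decode rate governs how quickly the rest of the answer streams in. A platform can excel at one and not the other, so it is worth measuring both.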

This is the core calculation driving the strategic shift for companies evaluating their long-term reliance on generic infrastructure from the major cloud providers.

The Architectural Bets: A Study in Strategic Trade-offs

Choosing one of these new architectures isn’t a simple procurement decision. It’s a long-term commitment to a philosophy of computation.

Each of these companies has made a radical, defensible bet on what truly matters for AI inference. Understanding the second-order effects (the real-world operational headaches, the hidden software costs, the trade-offs) is what separates a smart investment from a very expensive mistake.

Groq: The Bet on Deterministic Speed

The most ascetic of these philosophies belongs to Groq, founded by Jonathan Ross, a key architect behind Google’s original Tensor Processing Unit (TPU).

Groq’s chip design is a testament to the pursuit of pure, unadulterated speed through simplicity. The core of their Language Processing Unit (LPU)? A deterministic, cacheless design. They ripped out the parts of modern chips that create performance variability—things like multi-level caches and complex hardware schedulers—to deliver perfectly predictable latency. Every single time.

| Spec | Groq LPU (GroqChip) | NVIDIA H100 |
| --- | --- | --- |
| Architecture | Deterministic, compiler-scheduled LPU | GPU with dynamic scheduling |
| Process node | ~14 nm (GlobalFoundries) | TSMC 4N (4 nm-class) |
| On-chip memory | ~230 MB SRAM | 50 MB L2 cache |
| Memory bandwidth (primary) | ~80 TB/s on-die SRAM | ~3.35 TB/s HBM3 |
| External memory | No HBM; models sharded across LPUs | 80 GB HBM3 |

This works because their compiler orchestrates every data movement in advance, feeding the compute units from 230MB of on-chip SRAM with an astonishing 80TB/s of on-die memory bandwidth. That’s roughly 24 times the H100’s 3.35TB/s of HBM bandwidth, which is itself around an order of magnitude more than the memory bandwidth of the MacBook or ThinkPad you are reading this on.

This purity makes Groq untouchable at batch-size-of-one inference. The holy grail for real-time AI.

The Software Equation: A Matter of Trust

But it’s not just custom silicon. The whole thing hinges on the Groq Compiler. It’s the brains of the operation. It ingests a standard model and does the brutally complex work of pre-planning every instruction.
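What does "pre-planning every instruction" buy you? The toy below is a textbook as-soon-as-possible (ASAP) scheduler over a dataflow graph with fixed, known latencies. It is not Groq's compiler. The op names and cycle counts are hypothetical, and the sketch ignores resource conflicts, memory placement, and chip-to-chip links, all of which a real compiler must also plan. It does show the key property: when every latency is known ahead of time, the program's finish time is known before it runs.

```python
from graphlib import TopologicalSorter

# Hypothetical dataflow graph: op -> (latency in cycles, ops it waits on).
GRAPH = {
    "load_w": (4, []),
    "load_x": (4, []),
    "matmul": (8, ["load_w", "load_x"]),
    "bias":   (1, ["matmul"]),
    "gelu":   (2, ["bias"]),
    "store":  (4, ["gelu"]),
}


def static_schedule(graph: dict) -> tuple[dict, int]:
    """Assign every op a fixed start cycle at compile time (ASAP).

    Assumes unlimited functional units, so each op starts as soon as
    all of its inputs have finished.
    """
    deps = {op: set(parents) for op, (_, parents) in graph.items()}
    start: dict = {}
    for op in TopologicalSorter(deps).static_order():
        parents = graph[op][1]
        start[op] = max((start[p] + graph[p][0] for p in parents), default=0)
    makespan = max(start[op] + graph[op][0] for op in graph)
    return start, makespan


schedule, cycles = static_schedule(GRAPH)
for op, cycle in sorted(schedule.items(), key=lambda kv: kv[1]):
    print(f"cycle {cycle:>2}: {op}")
print("finishes at cycle", cycles)   # fixed by construction, run after run
```

On a GPU the same graph would still run correctly, but cache hits and misses and runtime scheduling decisions can shift each op's start time from run to run. Removing those sources of variation is what lets a statically scheduled chip promise the same latency every time.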

For AI teams, this is a totally different way of working. The engineering challenge shifts from runtime tweaking to trusting the compiler’s output. The huge benefit is a dramatic reduction in unpredictability. The risk? You’re placing a profound level of trust in a proprietary, black-box compiler. This isn’t the sprawling, messy, community-supported world of CUDA. It’s a scalpel. And scalpels can be scary, especially in untrained hands.

The decision to use it is a bet that its raw performance outweighs the cost of leaving a familiar ecosystem.

- Groq’s Strategic Bet: That for any interactive service, predictable latency is the only economic driver that matters.

- The Core Trade-off: Sacrificing a large external memory pool (no HBM) for spectacular on-die speed, while requiring a complete buy-in to their proprietary compiler.
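The "no HBM" trade-off is easy to quantify. A rough lower bound on chip count is the weight footprint divided by per-chip SRAM. This sketch assumes weights are spread evenly across chips and, by default, ignores SRAM needed for activations and KV cache. The `usable_fraction` knob is a hypothetical parameter for reserving some of that space.

```python
import math

SRAM_PER_LPU_BYTES = 230e6   # ~230 MB on-chip SRAM per GroqChip (from the table)


def min_lpus_for_weights(params: float, bytes_per_param: float,
                         usable_fraction: float = 1.0) -> int:
    """Lower bound on chips needed just to hold the weights in SRAM.

    `usable_fraction` is a hypothetical knob for SRAM reserved for
    activations, KV cache, and buffers; 1.0 gives weights every byte.
    """
    weight_bytes = params * bytes_per_param
    return math.ceil(weight_bytes / (SRAM_PER_LPU_BYTES * usable_fraction))


for label, bytes_per_param in (("FP16", 2), ("INT8", 1)):
    print(f"70B params @ {label}: at least "
          f"{min_lpus_for_weights(70e9, bytes_per_param)} LPUs")
```

Under these assumptions a 70B-parameter model needs at least 609 chips at FP16 and 305 at INT8 before any SRAM is set aside for activations. That is the concrete meaning of "requiring more chips for the largest models."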

Cerebras: The Bet on Massive Integration

In stark contrast to Groq, Cerebras is a bet on maximalist, brute-force integration. Their argument: the real bottleneck isn’t on the chip, it’s between the chips.

So they eliminated the network. The Wafer-Scale Engine 3 (WSE-3) is a monolithic processor with 900,000 AI cores on one piece of custom silicon, providing 21 petabytes per second of on-chip memory bandwidth. Not worth comparing to a flagship GeForce, let alone your 13-inch laptop.

| Spec | Cerebras WSE-3 | NVIDIA H100 (Hopper) |
| --- | --- | --- |
| Architecture | Wafer-scale monolithic AI accelerator with on-wafer mesh fabric; compiler-managed execution | General-purpose GPU with dynamic schedulers and caches |
| Process node | TSMC 5 nm | TSMC 4N (4 nm-class) |
| Chip size | 46,225 mm² | 814 mm² |
| Cores | 900,000 | 16,896 FP32 + 528 Tensor |
| On-chip memory | 44 GB | 0.05 GB |
| Memory bandwidth | 21 PB/s | 0.003 PB/s |
| Fabric bandwidth | 214 Pb/s (petabits) | 0.0576 Pb/s (petabits) |

It’s an engineering marvel and a master class in design that pushes the absolute limits of modern chip manufacturing. For workloads that fit on the wafer, it effectively eliminates the latency and power overhead of inter-chip communication. The buy-in here is operational. A single CS-3 system is power-dense (up to 23kW) and needs the data center infrastructure to match.

The compelling counter-argument, though, is consolidation. One of these systems can replace an entire cluster of GPU servers, which dramatically simplifies your network topology and MLOps. A big deal.

The Software Equation: Taming Complexity

Programming almost a million cores is not a trivial problem. Cerebras abstracts this away with its Cerebras Software Language (CSL). The software makes the whole wafer look like a single, massive inference accelerator to the developer. Your engineers aren’t managing hundreds of GPUs and their interconnects; they’re writing code for one device.

That’s the core value prop for your VP of Engineering: a radical simplification of the programming model for huge AI. It means faster development cycles, maybe even a reduced need for that one impossible-to-hire distributed systems guru. The trade-off is the same old story—it’s a proprietary stack. You’re betting that the operational simplicity is worth the lock-in.

- The Strategic Bet: That integrating a whole cluster onto a single wafer is the key to performance and energy efficiency.

- The Core Trade-off: Higher power density per custom chip in exchange for eliminating the complexities of a distributed system, all managed by their proprietary CSL software.

SambaNova: The Bet on Future Complexity

Navigating a middle path is SambaNova. Their approach is arguably the most adaptable, designed for the messy, complex future of AI workloads. Their Reconfigurable Dataflow Architecture (RDA) and a clever three-tiered memory hierarchy create a system that’s less a fixed processor and more a fluid hardware pipeline, reconfigured by software for each model.

| Spec | SambaNova SN40L RDU (RDA) | NVIDIA H100 (Hopper) |
| --- | --- | --- |
| Architecture | Compiler-scheduled reconfigurable dataflow accelerator (dual-die RDU) | General-purpose GPU with dynamic schedulers & caches |
| Process node | TSMC 5 nm (5FF), CoWoS chiplet package | TSMC 4N (4 nm-class) |
| On-chip memory | ~520 MB distributed SRAM (PMUs) | 50 MB L2 cache |
| Memory hierarchy | 3 tiers: on-chip SRAM + 64 GB HBM (co-packaged) + up to 1.5 TiB DDR attached to accelerator | 80 GB HBM3 on-package (host DDR accessed via CPU/PCIe/NVLink) |
| Primary memory bandwidth | On-chip: hundreds of TB/s (aggregate SRAM bandwidth) | HBM3: ~3.35 TB/s (SXM) |

This lets them hold multiple large models in memory and switch between them in microseconds. They’re optimizing for tomorrow’s agentic systems and Composition of Experts (CoE) frameworks.
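A three-tier hierarchy is, at heart, a caching problem: keep the models you need next in the fast tier and page the rest from the big one. The sketch below models only the HBM and DDR tiers (on-chip SRAM holds the working set of whichever model is running) and uses a plain least-recently-used policy. `TieredModelStore` is a hypothetical illustration, not SambaNova's actual runtime.

```python
from collections import OrderedDict


class TieredModelStore:
    """Toy model-residency cache: every model lives in a large DDR tier, and
    the most recently used ones are also copied into a smaller HBM tier.
    Sizes are in GB. This is a generic LRU policy, not SambaNova's."""

    def __init__(self, hbm_gb: float, ddr_gb: float):
        self.hbm_gb = hbm_gb
        self.ddr_gb = ddr_gb
        self.ddr = {}              # name -> size for every registered model
        self.hbm = OrderedDict()   # name -> size, least recently used first

    def register(self, name: str, size_gb: float) -> None:
        if size_gb > self.hbm_gb:
            raise ValueError(f"{name} can never fit in the HBM tier")
        if sum(self.ddr.values()) + size_gb > self.ddr_gb:
            raise MemoryError(f"{name} does not fit in the DDR tier")
        self.ddr[name] = size_gb

    def activate(self, name: str) -> bool:
        """Make a model HBM-resident; return True on a hit (no copy needed)."""
        if name in self.hbm:
            self.hbm.move_to_end(name)          # now most recently used
            return True
        size = self.ddr[name]                   # KeyError if never registered
        while sum(self.hbm.values()) + size > self.hbm_gb:
            self.hbm.popitem(last=False)        # evict least recently used
        self.hbm[name] = size                   # stands in for a DDR -> HBM copy
        return False


# Capacities loosely follow the table (64 GB HBM, 1.5 TiB DDR); model sizes are made up.
store = TieredModelStore(hbm_gb=64, ddr_gb=1536)
for expert in ("coder", "sql", "summarizer"):
    store.register(expert, size_gb=30)
hits = [store.activate(m) for m in ("coder", "sql", "coder", "summarizer", "sql")]
print(hits)             # [False, False, True, False, False]
print(list(store.hbm))  # ['summarizer', 'sql']
```

The more models an agentic pipeline bounces between, the more a large, directly attached DDR tier matters: a miss costs a DDR-to-HBM copy rather than a trip through host memory or storage.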

The Software Equation: A Full-Stack Approach

The linchpin for all this is SambaFlow. It’s the most ambitious of these software stacks, a true “model-to-hardware” compiler. It analyzes a model’s compute graph and physically maps the dataflow onto the chip’s reconfigurable units.

Adopting SambaNova isn’t like buying a component; it’s adopting a full-stack, opinionated platform. The learning curve for your team will be steeper. No doubt about it. They have to learn to think in this dataflow paradigm. The prize, however, is the unique ability to run a whole portfolio of different AI models on one platform at high utilization—a very compelling economic model for any company that sees generative AI as a core business function.

- The Strategic Bet: That the future isn’t one giant model, but a dynamic interplay of many specialized models that require architectural flexibility.

- The Core Trade-off: A steeper learning curve for the proprietary SambaFlow paradigm, in exchange for the versatility to run diverse generative AI models on a single, efficient platform.

Comparison of Specialized AI Hardware Architectures

| Feature | Groq | Cerebras | SambaNova |
| --- | --- | --- | --- |
| Core Architecture | Deterministic, Single-Core LPU | Wafer-Scale Integration (WSE) | Reconfigurable Dataflow Architecture (RDA) |
| Primary Strength | Ultra-Low Latency | Massive Throughput | Architectural Flexibility |
| Ideal Use Case | Real-time conversational AI, applications requiring instant response (batch-of-one). | Large-scale scientific modeling, batch processing massive datasets, training large models. | Complex, multi-model agentic systems, Composition of Experts (CoE), high-utilization multi-tenant platforms. |
| Key Trade-off | Sacrificing memory capacity for speed, requiring more chips for the largest models. | High power density and physical footprint per unit in exchange for simplified system complexity. | Steeper learning curve for a complex software paradigm in exchange for long-term versatility. |
| Software Stack | Groq Compiler: A proprietary, “black-box” compiler that pre-plans all execution. | Cerebras Software Language (CSL): A proprietary stack that abstracts the wafer into a single device. | SambaFlow: A “model-to-hardware” compiler that reconfigures the chip for each specific model. |

Expanding the Toolbox: Interconnects, the Edge, and Open Standards

Beyond the main high-performance computing (HPC) architectures, a second wave of specialization is under way, with AI hardware companies solving other critical parts of the AI puzzle: the data bottlenecks between systems, the push for open standards, and the unique demands of the edge. Each needs its own distinct software approach.

Celestial AI: The Bet on Photonic Interconnects

Even with the fastest processor, you’re ultimately gated by how fast you can move data. Electrical interconnects are hitting a wall. Celestial AI is tackling this with its photonic fabric technology. Using light instead of electricity to move data between chips. Higher bandwidth, lower power.

The Software Equation: Enabling Disaggregation

Their software layer is designed to make the photonic hardware invisible. It presents an optically-linked cluster of processors and memory as one unified system.

This is the holy grail of disaggregation. For your MLOps teams, it means they can scale compute and memory resources independently, building a virtual system that’s perfectly sized for the job, no longer constrained by the physical layout of a server. The software handles all the complex routing over the light-based fabric. The bet is that by solving the interconnect problem, Celestial AI enables a more efficient and flexible data center architecture.
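The "stranded resources" problem that disaggregation targets can be shown with simple arithmetic. Everything here is hypothetical: the job size, the server shape (8 accelerators with 80 GB each, counting only accelerator memory), and the 256 GB pooled memory module. The sketch says nothing about how Celestial AI's fabric actually allocates resources.

```python
import math


def coupled_nodes(mem_gb: float, accels: int,
                  node_mem_gb: float, node_accels: int) -> int:
    """Fixed servers bundle memory with accelerators, so you buy enough
    nodes to satisfy whichever resource runs out first."""
    return max(math.ceil(mem_gb / node_mem_gb), math.ceil(accels / node_accels))


def stranded_accels(mem_gb: float, accels: int,
                    node_mem_gb: float, node_accels: int) -> int:
    """Accelerators paid for but idle because the job needed memory, not compute."""
    return coupled_nodes(mem_gb, accels, node_mem_gb, node_accels) * node_accels - accels


def pooled(mem_gb: float, accels: int, mem_module_gb: float) -> tuple[int, int]:
    """Disaggregated: provision memory modules and accelerators independently."""
    return math.ceil(mem_gb / mem_module_gb), accels


# Hypothetical memory-heavy job: 2,000 GB of state, only 4 accelerators of math.
job = dict(mem_gb=2_000, accels=4)
print("coupled nodes:", coupled_nodes(**job, node_mem_gb=640, node_accels=8))
print("idle accelerators:", stranded_accels(**job, node_mem_gb=640, node_accels=8))
print("pooled (memory modules, accelerators):", pooled(**job, mem_module_gb=256))
```

With fixed servers this job buys four nodes and leaves 28 of 32 accelerators idle just to reach the memory it needs; with pooled resources it takes eight memory modules and exactly four accelerators.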

- The Strategic Bet: The next performance leap won’t come from the processor. It will come from eliminating the data bottleneck between chips with optics.

- The Core Trade-off: Adopting a complex new interconnect technology for the ability to scale AI clusters with far greater efficiency.

Tenstorrent: The Bet on Open Standards

While most AI hardware innovators build proprietary software, Tenstorrent is betting the farm on the opposite: openness. Led by veteran chip architect Jim Keller, the company is building its AI processors on the open-standard RISC-V architecture and is all-in on a completely open-source software stack. A direct assault on Nvidia’s CUDA moat.

The Software Equation: A Community-Driven Moat

Tenstorrent’s whole strategy rests on the belief that an open-source community can out-innovate a closed one. The bet is that the freedom of an open stack will attract developers tired of being locked into one company’s roadmap.

For your team, this means unprecedented access to everything, from the compiler to the firmware, allowing deep customization. The risk is clear. You’re taking on a younger, less mature ecosystem without the safety net of a big corporation like Google or Nvidia. The choice for Tenstorrent is a long-term bet on the power of open source to create more flexible next-generation AI infrastructure.

- Keller’s Big Bet: Enterprises are tired of proprietary ecosystems and will choose open, customizable solutions to avoid strategic risk.

- The Trade-off: Giving up the stability of a mature, closed ecosystem for the long-term strategic freedom that comes with an open standard.

Hailo: The Bet on High-Performance Edge AI

While the data center gets the headlines, a huge number of AI applications have to run locally, on devices where power and space are tight. Hailo has established a leading position here. Their specialized processors, like the Hailo-8 and Hailo-15, are designed to deliver high-performance inference in a tiny, low-power package, thanks to their “Structure-Defined Dataflow Architecture.”

The Software Equation: Developer-First Tooling

Hailo gets that engineers working on edge devices—in cars, factories, whatever—care about a smooth workflow more than anything. Their software suite is a comprehensive, “developer-first” toolkit.

It has a profiler, a model zoo, and application suites to speed up development. It’s built to integrate with standard AI frameworks, letting teams take models trained in the cloud and compile them for the Hailo chip with minimal friction. Their bet is that by providing the best developer experience, they can become the default choice for the high-performance edge.

- The Strategic Bet: That a huge slice of future AI value will be created at the edge, requiring specialized, ultra-efficient processors to optimize performance and reduce costs.

- The Core Trade-off: Focusing on a narrower range of AI models (mostly for vision) in exchange for world-class performance-per-watt that is simply out of reach for data center hardware.

Building Your Heterogeneous Compute Strategy

The era of a one-size-fits-all approach to AI hardware is over. The emergence of this diverse, specialized ecosystem isn’t fragmentation; it’s maturation. The comfortable simplicity of defaulting to a GPU for every task is being replaced by a more demanding strategic imperative: building a heterogeneous compute strategy.

The key takeaway is not that one of these companies will “kill” the GPU. It’s that the GPU’s own success has created a spectrum of workloads so demanding they require their own purpose-built instruments. The semiconductor industry will adapt and rise to the challenge. The question for any enterprise leader is no longer if you should look beyond your current vendor, but which of these new architectural philosophies aligns with your most critical, value-generating AI services.

Is your competitive advantage tied to the latency of a customer-facing agent? Is it in the massive-scale processing of your proprietary data? Or is it your ability to deploy the complex, multi-model systems that will define the next frontier of automation?

There is now a specialized tool for each of these jobs. The ultimate challenge is no longer about procurement. It’s about precise, strategic alignment. Choosing the right tool from the toolbox, and knowing when to use it, will be the defining characteristic of the companies that lead in the next decade of AI.

Frequently Asked Questions

Why is Nvidia’s hardware so dominant for AI training?

Nvidia’s dominance comes from more than just hardware; it’s built on CUDA, their mature and extensive software ecosystem. This deep library of tools and developer familiarity creates a powerful “moat” that is difficult for competitors to overcome.

What are AI chips? How are they different from CPUs and GPUs?

AI chips, or AI accelerators, are specialized processors designed to handle the massive computational demands of artificial intelligence. CPUs devote their silicon to a relatively small number of powerful cores optimized for fast, largely sequential execution of general-purpose code; GPUs and AI chips instead use massively parallel architectures to perform thousands of similar calculations at once. Specialized AI chips take this further, often with hardware specifically designed for matrix math, the core of machine learning and AI workloads.
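Why does matrix math parallelize so well? Every element of a matrix product is its own dot product, independent of all the others. The pure-Python sketch below (helper names are hypothetical) computes the same product serially and as a bag of independent tasks. Python threads will not actually make it faster, because the GIL serializes pure-Python arithmetic; the point is the structure that parallel hardware exploits.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """One output element: an independent multiply-accumulate chain."""
    return sum(a * b for a, b in zip(row, col))


def matmul_serial(A, B):
    cols = list(zip(*B))                       # columns of B
    return [[dot(r, c) for c in cols] for r in A]


def matmul_parallel(A, B, workers=4):
    """Same result, computed as a bag of independent tasks.

    Python threads won't speed this up (the GIL serializes pure-Python
    math); the point is that no output cell depends on any other.
    """
    cols = list(zip(*B))
    cells = [(i, j) for i in range(len(A)) for j in range(len(cols))]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        values = list(pool.map(lambda ij: dot(A[ij[0]], cols[ij[1]]), cells))
    n = len(cols)
    return [values[i * n:(i + 1) * n] for i in range(len(A))]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul_serial(A, B))    # [[19, 22], [43, 50]]
print(matmul_parallel(A, B))  # [[19, 22], [43, 50]]
```

A GPU or AI accelerator does the same thing with thousands of hardware lanes, and dedicated matrix units go further by computing whole tiles of these dot products per instruction.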

What’s the difference between a chip for AI training versus one for inference?

Training requires massive, sustained compute power to build an AI model from scratch, often taking days or weeks. Inference is the much faster process of running an already-trained model to get a real-world answer, prioritizing speed and efficiency.
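The gap in scale is easy to put in numbers using two widely used rules of thumb from the scaling-law literature: training costs roughly 6 FLOPs per parameter per training token, and inference roughly 2 FLOPs per parameter per generated token. The sketch applies them to a hypothetical 70B-parameter model trained on 2 trillion tokens. It ignores attention and other overheads. The 40% utilization is an assumption; the 989 TFLOPS peak is the H100 figure quoted earlier.

```python
def training_flops(params: float, train_tokens: float) -> float:
    """Rule of thumb: ~6 FLOPs per parameter per training token
    (forward pass plus backward pass)."""
    return 6 * params * train_tokens


def inference_flops_per_token(params: float) -> float:
    """Rule of thumb: ~2 FLOPs per parameter per generated token (forward only)."""
    return 2 * params


def accelerator_days(total_flops: float, peak_flops: float = 989e12,
                     utilization: float = 0.4) -> float:
    """Days on one accelerator at an assumed fraction of peak throughput."""
    return total_flops / (peak_flops * utilization) / 86_400


N, D = 70e9, 2e12   # hypothetical: 70B parameters trained on 2T tokens
train = training_flops(N, D)
per_token = inference_flops_per_token(N)
print(f"training:  {train:.1e} FLOPs, ~{accelerator_days(train):,.0f} H100-days at 40% of peak")
print(f"inference: {per_token:.1e} FLOPs per generated token")
print(f"training compute = generating {train / per_token:.1e} tokens")
```

Training is one enormous, sustained job; inference is a tiny job repeated millions of times. That is why latency and cost per token, not peak TFLOPS, dominate hardware choices for inference.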

How do we decide between GPUs and specialized accelerators?

Match the platform to the job. If your revenue depends on batch-size-one latency for interactive agents, look at Groq-style designs. If your bottleneck is scale and cluster complexity, evaluate wafer-scale systems like Cerebras. If you expect a portfolio of models and agentic workflows, consider SambaNova. If your needs are broad or research heavy, GPUs remain the default.
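For teams that want to make this rule of thumb explicit in a planning tool or review checklist, it encodes naturally as a few flags. This is a hypothetical sketch of the heuristic above and nothing more. A real evaluation would add benchmarks on your own models, cost quotes, and software-migration effort.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical workload profile mirroring the questions above."""
    batch_one_latency_drives_revenue: bool = False
    bottleneck_is_cluster_scale: bool = False
    portfolio_of_models_or_agents: bool = False


def shortlist(w: Workload) -> list[str]:
    """Turn the rule of thumb into a starting shortlist, not a verdict."""
    picks = []
    if w.batch_one_latency_drives_revenue:
        picks.append("Groq-style deterministic inference")
    if w.bottleneck_is_cluster_scale:
        picks.append("wafer-scale system (Cerebras)")
    if w.portfolio_of_models_or_agents:
        picks.append("reconfigurable dataflow (SambaNova)")
    return picks or ["GPUs (the default for broad or research-heavy needs)"]


print(shortlist(Workload(batch_one_latency_drives_revenue=True,
                         portfolio_of_models_or_agents=True)))
print(shortlist(Workload()))
```

A workload can trip several flags at once, which is the heterogeneous-compute point: the answer is often more than one platform.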

How important are foundries to the AI hardware industry?

Chip manufacturing is the absolute bedrock of the AI hardware industry. A chip design is theoretical until it can be fabricated on an advanced process node. The capabilities of foundries, primarily TSMC and Samsung Foundry, dictate the final performance and energy efficiency of every GPU and AI chip. Access to leading-edge nodes is a major competitive advantage, making foundry capacity a critical chokepoint for innovation.