AWS certifications used to be a reliable way to identify genuine delivery partners. In 2025, that signal has evaporated. With more than a million active credentials in circulation, nearly every vendor on your shortlist will show you the same wall of badges.

The smarter approach is to skip badge counting and test whether the proposed teams can actually deliver production workloads.

This playbook uses two quick filters to make that happen: coverage fit and operational proof. The first treats certifications as a baseline and checks whether they map to your workloads with enough redundancy, rather than counting badges. The second focuses on what matters most, which is what happens when systems go live. Together, they keep your selection process decisive while accounting for the constant evolution of AWS services and the certification catalog.

When Everyone Has Badges, Signal Evaporates

As of January 2025, there were 1.42 million active AWS certifications held by 1.05 million people. Counting badges now tells you nothing beyond whether a vendor pays for exam prep. Associate certifications are standard. Professional-level certifications are increasingly common. Even small shops coach their engineers through exams just to hit baseline expectations in their proposals.

And of course, AWS keeps updating the catalog. The September 2025 CloudOps exam replaced the AWS Certified SysOps Administrator credential. The retirement of the Data Analytics Specialty pushed teams toward the newer Data Engineer exam. Serious AI partners now hold the AI Practitioner and ML Engineer certifications, and many are preparing for the GenAI beta exam scheduled for November 18th.

When every vendor can display the same certification counts, you need to examine what happens when the people behind those badges meet real production environments and have to demonstrate genuine cloud expertise.

What Happens When You Bet on Badge Theater

A vendor might show 50 certifications across the team, but if those credentials are concentrated on a few devs or skewed toward outdated exams, you risk buying into theater.

When the certified architect leaves mid-project, you discover the remaining team doesn’t understand your design. The risk compounds when certifications substitute for hands-on experience. A team can pass every relevant exam but still lack the experience necessary to identify failure modes specific to your workload.

These gaps share two root causes: misaligned coverage and zero operational proof. Both problems are avoidable if you put vendors through two tests.

The Two Tests That Still Differentiate Partners

The first test evaluates coverage fit. You map your upcoming workloads to current, redundant certifications across architecture, cloud operations, security, data, and AI. Then you make the vendor show you the actual people behind each badge. Any gap or single point of failure ends the conversation before discovery begins.

The second test requires operational proof. Partners only advance when they produce dated artifacts like release ledgers, OPS10-aligned postmortems, Control Tower exports, and Cost Anomaly Detection alerts that align with current AWS guidance.

Test One: Match Coverage to Workloads, Not Badge Counts

Before anyone touches production, you need to see how the vendor spreads credentials across the team, plans for knowledge redundancy, and accounts for upcoming certification changes.

What Real Coverage Looks Like

Architectural credibility starts with two engineers holding the AWS Certified Solutions Architect Associate credential for every major workload, plus at least one AWS Certified Solutions Architect Professional leading regulated or complex migrations. Pair them with an AWS Certified DevOps Engineer Professional so designs actually translate into production pipelines.

Operations, security, data, and AI domains need the same redundancy. Look for two people holding the new CloudOps certification to handle managed services and cloud operations, a dedicated Security Specialty holder for regulated environments, and Data Engineer holders now that the Data Analytics Specialty has retired. For AI work, look for holders of both the AI Practitioner and ML Engineer certifications.

This baseline ensures the team can absorb turnover and vacation schedules without stalling your workstreams.

Mapping Workstreams to Actual People

Abstract badge counts collapse when you map initiatives to specific humans.

Migrations and platform builds require AWS Certified Solutions Architect Associate, AWS Certified Solutions Architect Professional, and DevOps Engineer Professional holders to design landing zones, govern infrastructure as code, and keep releases predictable. Day-two reliability demands CloudOps coverage so teams can operate multi-account environments without leaning on your internal site reliability engineers.

Data, AI, and regulated workstreams carry their own requirements. When a vendor mentions the Data Engineer certification, ask who maintains pipelines and manages data storage. When they cite the ML Engineer credential, ask who handles model drift. When they reference the Security Specialty, ask which person responds to audit requests and manages security practices.

A clear staffing grid makes red flags visible immediately. Vague answers signal trouble.
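You can keep the staffing grid as data instead of a slide. The Python sketch below is a minimal version built on assumptions. It uses a hypothetical roster format that maps each named person to the set of certifications they hold. The per-workstream minimums are also hypothetical and echo the baseline described above. The certification labels are shorthand, not official exam names. The sketch flags workstreams that fall short and any required certification that exactly one person holds.

```python
from collections import defaultdict

# Hypothetical minimum number of distinct named holders per certification,
# per workstream. Adjust to your own workloads; these are not AWS rules.
REQUIREMENTS = {
    "migration": {"SA Associate": 2, "SA Professional": 1, "DevOps Professional": 1},
    "day-two operations": {"CloudOps": 2},
    "regulated security": {"Security Specialty": 1},
    "data pipelines": {"Data Engineer": 1},
}


def holders_by_cert(roster):
    """Invert {person: {certs}} into {cert: {people}}."""
    holders = defaultdict(set)
    for person, certs in roster.items():
        for cert in certs:
            holders[cert].add(person)
    return holders


def coverage_gaps(roster, requirements):
    """Return {workstream: [(cert, have, need), ...]} for every shortfall."""
    holders = holders_by_cert(roster)
    gaps = {}
    for workstream, needs in requirements.items():
        short = [
            (cert, len(holders[cert]), need)
            for cert, need in needs.items()
            if len(holders[cert]) < need
        ]
        if short:
            gaps[workstream] = short
    return gaps


def single_points_of_failure(roster, requirements):
    """Required certifications that exactly one named person holds."""
    holders = holders_by_cert(roster)
    required = {cert for needs in requirements.values() for cert in needs}
    return sorted(cert for cert in required if len(holders[cert]) == 1)


# Placeholder names; substitute the vendor's actual proposed roster.
roster = {
    "Engineer A": {"SA Associate", "SA Professional"},
    "Engineer B": {"SA Associate", "DevOps Professional"},
    "Engineer C": {"CloudOps", "Security Specialty"},
}
print(coverage_gaps(roster, REQUIREMENTS))
print(single_points_of_failure(roster, REQUIREMENTS))
```

With this three-person roster, the grid shows two shortfalls: one CloudOps holder where day-two operations needs two, and no Data Engineer at all. It also shows four certifications that each rest on a single person. Either finding ends the conversation under Test One.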

Catching Coverage Theater Early

Press for specifics in the first meeting and watch how vendors respond. Coverage theater reveals itself quickly when you ask direct questions.

A vendor might promise 80 active certifications across a 10-person team yet be unable to match specific names, Solutions Architect Associate exam codes, and expiration dates to your project roster. Their data lead might still reference the retired specialty instead of acknowledging the retirement notice and explaining what changed in the new exam. They might pitch generative AI accelerators without a single AI Practitioner certification, no enrollment in the GenAI beta, and no awareness of the shifts outlined in the portfolio update.

When coverage gaps surface, shift immediately to operational proof. Only concrete evidence shows whether a team can actually deliver.

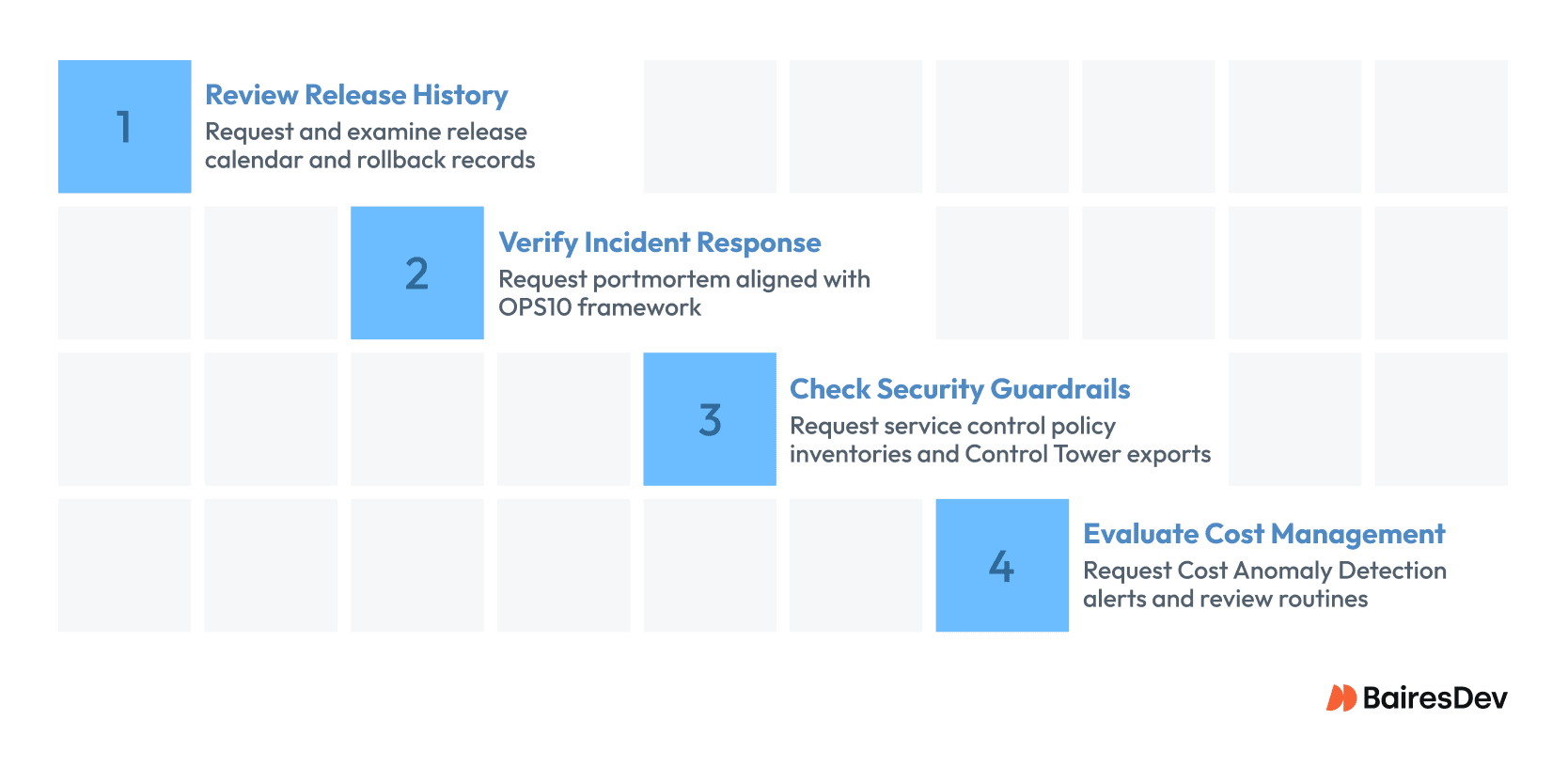

Test Two: Examine Production Proof Before Signing

Operational proof reveals how a partner performs when systems are live. Request artifacts from the last 90 days covering releases, incidents, guardrails, and cost management before procurement issues a purchase order. The teams that produce evidence immediately are the ones you want running your workloads.

Review Recent Release History

Ask for a release calendar showing scope, change windows, and rollback records. Strong partners reference the April 2025 Well-Architected update in their documentation and demonstrate how DevOps Engineer Professional holders governed approvals and managed deployment practices.

Examine one rollback in detail. If they can explain which guardrail stopped the deployment and how long recovery took, you’ve confirmed real change control. This sets up the conversation about incident response.
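A rollback review is easier when the release ledger is structured. This minimal sketch assumes a hypothetical ledger format. Each release has an `id`, a `deployed_at` timestamp, an optional `rolled_back_at` timestamp, and an optional `guardrail` naming what stopped the deployment. None of these field names come from an AWS tool. The sketch keeps releases from the last 90 days. It reports recovery time, measured here from deployment to completed rollback, and marks any rollback that nobody attributed to a guardrail.

```python
from datetime import datetime, timedelta


def recent_releases(ledger, as_of, window_days=90):
    """Keep releases deployed within the review window ending at as_of."""
    cutoff = as_of - timedelta(days=window_days)
    return [r for r in ledger if cutoff <= r["deployed_at"] <= as_of]


def rollback_summary(releases):
    """Return (release id, minutes to recover, guardrail) for each rollback."""
    summary = []
    for r in releases:
        if r.get("rolled_back_at") is None:
            continue  # release stayed in place
        minutes = (r["rolled_back_at"] - r["deployed_at"]).total_seconds() / 60
        summary.append((r["id"], minutes, r.get("guardrail") or "UNEXPLAINED"))
    return summary


# Hypothetical ledger entries for illustration only.
ledger = [
    {"id": "rel-101", "deployed_at": datetime(2025, 9, 10, 14, 0),
     "rolled_back_at": datetime(2025, 9, 10, 14, 25), "guardrail": "canary alarm"},
    {"id": "rel-102", "deployed_at": datetime(2025, 9, 20, 9, 0), "rolled_back_at": None},
    {"id": "rel-050", "deployed_at": datetime(2025, 5, 1, 9, 0),
     "rolled_back_at": datetime(2025, 5, 1, 11, 0)},
]
print(rollback_summary(recent_releases(ledger, as_of=datetime(2025, 10, 1))))
# [('rel-101', 25.0, 'canary alarm')]
```

The May rollback falls outside the 90-day window. Had it been in scope, it would show up as `UNEXPLAINED`, which is exactly the kind of gap to press on.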

Verify Incident Response Capabilities

Request a postmortem aligned with the OPS10 framework that identifies action owners and shows closure dates. Real operators maintain this evidence in their incident tooling, not slide decks.
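Once a postmortem's action items are exported from the incident tool, checking them takes only a few lines. This sketch assumes a hypothetical export in which each action item has an `id`, an `owner`, and a `closed_on` date, with `None` while the item is open.

```python
from datetime import date


def unowned_or_open(action_items):
    """IDs of action items with no named owner or no closure date."""
    return [
        item["id"]
        for item in action_items
        if not item.get("owner") or item.get("closed_on") is None
    ]


def closure_rate(action_items):
    """Fraction of action items that carry a closure date."""
    if not action_items:
        return 0.0
    closed = sum(1 for item in action_items if item.get("closed_on") is not None)
    return closed / len(action_items)


# Hypothetical action items from one postmortem.
items = [
    {"id": "AI-1", "owner": "Engineer A", "closed_on": date(2025, 9, 3)},
    {"id": "AI-2", "owner": "", "closed_on": date(2025, 9, 5)},
    {"id": "AI-3", "owner": "Engineer B", "closed_on": None},
]
print(unowned_or_open(items))         # ['AI-2', 'AI-3']
print(round(closure_rate(items), 2))  # 0.67
```

An item that was closed without a named owner still counts as a gap here, because the point is to see who closed each action.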

Meet the people who rotate on-call and ask how they handle IAM lockouts, KMS key issues, or EKS API throttling, using the scenarios covered in the CloudOps certification. If the walkthrough stalls here, expect the security review to reveal similar gaps in their technical skills.

Check Security Guardrails and Control Tower Configuration

Request service control policy inventories and Control Tower exports that incorporate the 2024 Resource Control Policy launch features and the 2025 release notes. Preventive, detective, and proactive controls should run across every account in scope, not just a demonstration environment.

Confirm how they detect drift and which Security Specialty holder owns the response. Manual spreadsheets or missing dual-stack coverage signal the same weaknesses you’ll see in financial operations.
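Account-by-account coverage is easy to check from an export. Control Tower classifies controls by behavior as preventive, detective, or proactive. The record layout below (`account`, `control`, `behavior`, `drifted`) is a hypothetical flattening of whatever export the vendor provides, and the account and control IDs are placeholders. This sketch lists in-scope accounts that lack any of the three behaviors and any control reported as drifted.

```python
REQUIRED_BEHAVIORS = {"PREVENTIVE", "DETECTIVE", "PROACTIVE"}


def guardrail_findings(export, accounts_in_scope):
    """Return ({account: [missing behaviors]}, [(account, control) drifted])."""
    seen = {account: set() for account in accounts_in_scope}
    drifted = []
    for record in export:
        account = record["account"]
        if account not in seen:
            continue  # ignore accounts outside the engagement scope
        seen[account].add(record["behavior"])
        if record.get("drifted"):
            drifted.append((account, record["control"]))
    missing = {
        account: sorted(REQUIRED_BEHAVIORS - behaviors)
        for account, behaviors in seen.items()
        if REQUIRED_BEHAVIORS - behaviors
    }
    return missing, drifted


# Placeholder export: the demo account looks complete, the others do not.
export = [
    {"account": "111111111111", "control": "ctl-a", "behavior": "PREVENTIVE"},
    {"account": "111111111111", "control": "ctl-b", "behavior": "DETECTIVE"},
    {"account": "111111111111", "control": "ctl-c", "behavior": "PROACTIVE", "drifted": True},
    {"account": "222222222222", "control": "ctl-a", "behavior": "PREVENTIVE"},
]
missing, drifted = guardrail_findings(export, ["111111111111", "222222222222", "333333333333"])
print(missing)
print(drifted)
```

An account that never appears in the export is reported as missing all three behaviors. This surfaces the "demonstration environment only" pattern described above.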

Evaluate Cost Management Discipline

Request anonymized Cost Anomaly Detection alerts that reflect the May 2025 alerting update and the July 2025 model update. Look for owner assignment, time to resolution, and documented follow-up actions that demonstrate cost optimization proficiency.

Then probe their routines. Who reviews budgets? How often? Which DevOps Engineer Professional or CloudOps holder approves corrective steps? Weak answers here usually signal missing remediation discipline downstream.
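You can compute owner assignment and time to resolution directly from the anonymized alerts. This sketch assumes the vendor flattens each alert into a record with a hypothetical `id`, `owner`, `raised_at`, and `resolved_at`. Cost Anomaly Detection does not necessarily emit records in this shape, so treat the field mapping as your own.

```python
from datetime import datetime
from statistics import median


def alert_discipline(alerts):
    """Summarize owner assignment and resolution time for anomaly alerts."""
    if not alerts:
        return {"owner_rate": 0.0, "median_hours_to_resolve": None, "unresolved": []}
    hours = [
        (a["resolved_at"] - a["raised_at"]).total_seconds() / 3600
        for a in alerts
        if a.get("resolved_at") is not None
    ]
    return {
        "owner_rate": sum(1 for a in alerts if a.get("owner")) / len(alerts),
        "median_hours_to_resolve": median(hours) if hours else None,
        "unresolved": [a["id"] for a in alerts if a.get("resolved_at") is None],
    }


# Hypothetical, already-anonymized alerts.
alerts = [
    {"id": "anom-1", "owner": "FinOps lead",
     "raised_at": datetime(2025, 9, 1, 8), "resolved_at": datetime(2025, 9, 1, 14)},
    {"id": "anom-2", "owner": "",
     "raised_at": datetime(2025, 9, 5, 8), "resolved_at": datetime(2025, 9, 6, 8)},
    {"id": "anom-3", "owner": "CloudOps engineer",
     "raised_at": datetime(2025, 9, 9, 8), "resolved_at": None},
]
print(alert_discipline(alerts))
```

The median is used instead of the mean so that a single long-running anomaly does not hide an otherwise fast routine, or the reverse.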

Recognize Warning Signs Early

Even credible partners show gaps, and three patterns surface quickly. Release documentation anchored to guidance older than the April 2025 Well-Architected update signals stale processes. Control Tower exports that lack the 2024 Resource Control Policy controls or the changes in the 2025 release notes reveal abandoned landing zones.

Remediation trackers that ignore the 45 percent high-risk-issue remediation threshold in the Well-Architected funding criteria show that the partner struggles to close high-risk issues.

Catch these warning signs and you’ll recognize the same patterns earlier in the sales cycle.
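The 45 percent check is simple arithmetic over the remediation tracker. This sketch assumes a hypothetical list of high-risk issues, each marked `remediated` or `open`. Notice how close a tracker can sit to the line.

```python
THRESHOLD = 0.45  # 45 percent of high-risk issues remediated


def remediation_rate(hris):
    """Fraction of high-risk issues marked remediated."""
    if not hris:
        raise ValueError("no high-risk issues recorded; request the review itself")
    done = sum(1 for h in hris if h["status"] == "remediated")
    return done / len(hris)


def meets_threshold(hris, threshold=THRESHOLD):
    return remediation_rate(hris) >= threshold


# Hypothetical tracker: 4 of 9 remediated (about 44%) misses the bar,
# while 5 of 11 (about 45.5%) clears it.
tracker = [{"id": f"HRI-{i}", "status": "remediated" if i < 4 else "open"} for i in range(9)]
print(meets_threshold(tracker))  # False
tracker += [{"id": "HRI-9", "status": "remediated"}, {"id": "HRI-10", "status": "open"}]
print(meets_threshold(tracker))  # True
```

An empty tracker raises an error instead of passing. No recorded high-risk issues usually means no review took place, not a perfect score.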

A Vendor Diligence Playbook for Amazon Web Services

Coverage fit and operational proof provide the foundation for vendor evaluation, but applying them consistently across multiple candidate teams takes a little structure. This playbook converts those two tests into a repeatable process you can complete in under a week. Each step functions as a gate, and vendors that fail any one of them are dropped immediately.

The process moves through four phases: structured questioning, artifact collection, rubric-based scoring, and verification testing. This enables rigorous, parallel evaluation and can cut evaluation cycles by two or more days.

Ask Five Production-Proof Questions

Open each vendor session with identical prompts to enable direct comparison. These questions surface both coverage fit and operational proof simultaneously.

- Which named engineers held the AWS Certified Solutions Architect, AWS Certified DevOps Engineer, and Security Specialty certifications on projects similar to ours?

- Show the change record that applied the April 2025 Well-Architected update and explain how you handled rollback.

- Walk through your most recent postmortem aligned with the OPS10 framework and identify who closed each action item.

- Provide a Control Tower export showing Resource Control Policy coverage described in the 2025 release notes.

- Share a Cost Anomaly Detection alert triggered after the July 2025 model update and document the response time you achieved.

Responses citing specific names, dates, and systems indicate genuine operational history and hands-on experience. Vague generalities or marketing language forecast evidence collection difficulties ahead.

Collect Artifacts That Confirm Readiness

Following initial conversations, request focused documentation that proves both coverage depth and operational capability. The list should include:

- Credly verification links with exam codes and expiration dates

- A 90-day release ledger

- Two postmortems tagged to the OPS10 framework

- Current Control Tower exports

- Financial operations dashboards showing alert closure rates

Vendors operating at scale should share this material within 24 hours and introduce you to the teams that produced it.

Delays, excessive redaction, or difficulty assembling basic operational artifacts suggest weak delivery capability. These responses should influence scoring decisions even when documentation does arrive.
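You can track turnaround alongside the checklist. This sketch assumes you log when the request went out and when each artifact arrived. The artifact keys are hypothetical labels for the five items listed above, and the 24-hour window comes from the text.

```python
from datetime import datetime, timedelta

# Hypothetical keys for the five requested artifacts.
REQUIRED_ARTIFACTS = (
    "credly_links",
    "release_ledger_90d",
    "ops10_postmortems",
    "control_tower_export",
    "finops_dashboard",
)


def artifact_status(requested_at, received, window=timedelta(hours=24)):
    """Return (missing, late) artifact keys given {key: arrival datetime}."""
    missing = [a for a in REQUIRED_ARTIFACTS if a not in received]
    late = [
        a for a in REQUIRED_ARTIFACTS
        if a in received and received[a] - requested_at > window
    ]
    return missing, late


requested = datetime(2025, 10, 6, 9, 0)
received = {
    "credly_links": datetime(2025, 10, 6, 15, 0),
    "release_ledger_90d": datetime(2025, 10, 7, 8, 0),
    "ops10_postmortems": datetime(2025, 10, 8, 10, 0),
    "control_tower_export": datetime(2025, 10, 7, 9, 0),
}
print(artifact_status(requested, received))
# (['finops_dashboard'], ['ops10_postmortems'])
```

The sketch records lateness separately from absence because slow or partial delivery should still weigh on scoring even after the documents arrive.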

Score Using a Proofed Coverage Rubric

The rubric below maintains consistency across multiple reviewers and vendor candidates. Each category combines coverage indicators with evidence, scoring on a zero-to-three scale.

A score of three represents full redundancy plus current operational artifacts, two shows adequate coverage with some proof gaps, one indicates single points of failure or outdated evidence, and zero reflects missing coverage or no proof.

| Category | Coverage Indicator | Proof Evidence | Score |
| --- | --- | --- | --- |
| Architecture | Dual Solutions Architect presence at Associate and Professional levels | Releases tied to the April 2025 Well-Architected update | 0–3 |
| Operations | Redundant CloudOps and DevOps Engineer Professional coverage | Postmortems with closed action items | 0–3 |
| Security | Dedicated Security Specialty holders with backfill capacity | Control Tower exports reflecting the 2024 RCP launch and 2025 release notes | 0–3 |
| Data & AI | Data Engineer and ML Engineer depth plus a GenAI beta plan | Pipeline audits showing monitoring and drift management | 0–3 |
| Financial Operations | Named owners with supporting credentials | Alerts reflecting the May 2025 alerting update; at least 45% high-risk-issue remediation per the Well-Architected funding criteria | 0–3 |

Total scores below 11 (out of a possible 15) trigger automatic elimination. Vendors scoring 11 or higher advance to verification testing within two business days.
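Tallying the rubric is mechanical, and that is the point. This sketch encodes the five categories and the below-11 elimination rule. The rubric does not say how to reconcile several reviewers, so taking the lower median per category is an assumption, chosen to keep scores whole numbers and slightly conservative.

```python
from statistics import median_low

CATEGORIES = ("Architecture", "Operations", "Security", "Data & AI", "Financial Operations")
ELIMINATION_BELOW = 11  # out of a maximum of 5 categories x 3 points = 15


def validate(sheet):
    """Reject score sheets with missing categories or out-of-range scores."""
    missing = [c for c in CATEGORIES if c not in sheet]
    if missing:
        raise ValueError(f"unscored categories: {missing}")
    for category in CATEGORIES:
        if sheet[category] not in (0, 1, 2, 3):
            raise ValueError(f"{category} score must be 0-3, got {sheet[category]}")


def consensus(sheets):
    """Combine reviewers by taking the lower median per category (an assumption)."""
    for sheet in sheets:
        validate(sheet)
    return {c: median_low([s[c] for s in sheets]) for c in CATEGORIES}


def decision(sheet):
    """Return ('advance' or 'eliminate', total score)."""
    validate(sheet)
    total = sum(sheet[c] for c in CATEGORIES)
    return ("advance" if total >= ELIMINATION_BELOW else "eliminate"), total


# Hypothetical score sheets from three reviewers for one vendor.
r1 = {"Architecture": 3, "Operations": 2, "Security": 2, "Data & AI": 2, "Financial Operations": 2}
r2 = {"Architecture": 3, "Operations": 3, "Security": 2, "Data & AI": 1, "Financial Operations": 2}
r3 = {"Architecture": 2, "Operations": 2, "Security": 1, "Data & AI": 2, "Financial Operations": 1}
print(decision(consensus([r1, r2, r3])))  # ('advance', 11)
```

One point lower in any category would drop this vendor to 10 and out of the process. That is why the rubric works best when each score is tied to a specific artifact.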

Run a 48-Hour Verification Sprint

The first day validates every certification ID against Credly, LinkedIn, and AWS Partner Portal records. Confirm that expiration dates and exam codes match the proposed team roster. Mismatches or missing credentials at this stage eliminate vendors immediately.
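Day one of the sprint amounts to a join between the proposed roster and the records you verified. This sketch assumes you have already looked up each credential and typed the results into a dictionary. It does not call any Credly, LinkedIn, or AWS API. The exam codes and people are illustrative placeholders. Checking that each credential stays valid through the planned project end date is an added assumption beyond matching the roster.

```python
from datetime import date


def verify_roster(claimed, verified, project_end):
    """Return (person, certification, problem) for each claim that fails a check.

    claimed:  list of (person, certification, exam_code) from the proposal.
    verified: {(person, certification): {"exam_code": str, "expires": date}}
              compiled by hand from the credential lookups.
    """
    problems = []
    for person, cert, exam_code in claimed:
        record = verified.get((person, cert))
        if record is None:
            problems.append((person, cert, "not found"))
        elif record["exam_code"] != exam_code:
            problems.append((person, cert, "exam code mismatch"))
        elif record["expires"] < project_end:
            problems.append((person, cert, "expires before project end"))
    return problems


claimed = [
    ("Engineer A", "Solutions Architect Associate", "SAA-C03"),
    ("Engineer B", "DevOps Engineer Professional", "DOP-C02"),
    ("Engineer C", "Security Specialty", "SCS-C02"),
]
verified = {
    ("Engineer A", "Solutions Architect Associate"): {"exam_code": "SAA-C03", "expires": date(2027, 3, 1)},
    ("Engineer B", "DevOps Engineer Professional"): {"exam_code": "DOP-C01", "expires": date(2026, 8, 1)},
}
for problem in verify_roster(claimed, verified, project_end=date(2026, 6, 30)):
    print(problem)
```

Under the playbook's rules, either of the two findings here eliminates the vendor at this stage.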

The second day requires sandbox demonstrations where vendors show a recent deployment, demonstrate Control Tower drift remediation, and review actual Cost Anomaly Detection alert handling. Vendors hiding behind non-disclosure agreements during verification lack readiness for production partnership.

Close the sprint with reference calls to organizations matching your industry or workload profile. Ask how quickly the partner recovered from their most recent incident and whether the team remained stable over time.

Consistent answers strengthen vendor scores. Evasive responses or reluctance to connect references should close the evaluation file.

Making the Playbook Work

This four-phase approach transforms subjective vendor selection into an evidence-based process that surfaces both coverage gaps and operational weaknesses before contracts get signed. The structured format enables faster decision-making while reducing the risk of selecting partners who look strong on paper but fail in production.

Organizations running this playbook can expect improved vendor performance and stronger alignment between certified capabilities and delivery outcomes. The key is treating each phase as a genuine gate rather than a formality, and moving decisively when vendors fail to meet evidence standards.

From Badge Counting to Evidence-Based Selection

The certification explosion has fundamentally changed AWS vendor evaluation. Organizations that adapt their selection processes to this new reality will build partnerships capable of sustaining production workloads through platform evolution and operational stress.

This means treating badges as a baseline rather than a differentiator. It entails demanding operational proof alongside coverage assessments and moving decisively based on evidence rather than marketing.

Those clinging to certification as a primary selection criterion will continue learning expensive lessons about the gap between exam passage and operational excellence.