The model race is over. The AI deployment race is here. When moving a model from a proof-of-concept to a live production environment, the question is no longer “who’s best?” but “what fits your business?”

In this article, we break down the major AI inference platforms by model availability, ease of use, throughput, quotas, and total cost, helping you choose a reliable partner.

The State of Inference-as-a-Service Platforms in 2025

AI seemed to be heading towards a winner-take-all game where economies of scale ruthlessly drove out smaller, less capable models. As of late 2025, the landscape is dramatically different. Large foundation labs are still dominant, but smaller, open-source models are increasingly competing with large, closed-source models for market position.

These open-source AI models gave rise to a number of Inference-as-a-Service offerings, creating real competition in the AI landscape and offering businesses more choice and control.

For illustration purposes, we will evaluate three groups of inference providers:

- Cloud Providers (Azure, AWS, and GCP),

- Foundation Labs (OpenAI, Anthropic, and Perplexity),

- Specialist Providers (Replicate and Hugging Face).

This is by no means an exhaustive list, but you will find that most providers fall into one of these categories.

We will first look at the non-negotiables that can make certain inference providers incompatible with your project’s goals, then look at the softer requirements that could bias you towards one provider or the other.

Finally, we will put everything together in a comparison table, so you can apply our framework to choose a provider that is the best fit for your project.

The Non-Negotiables

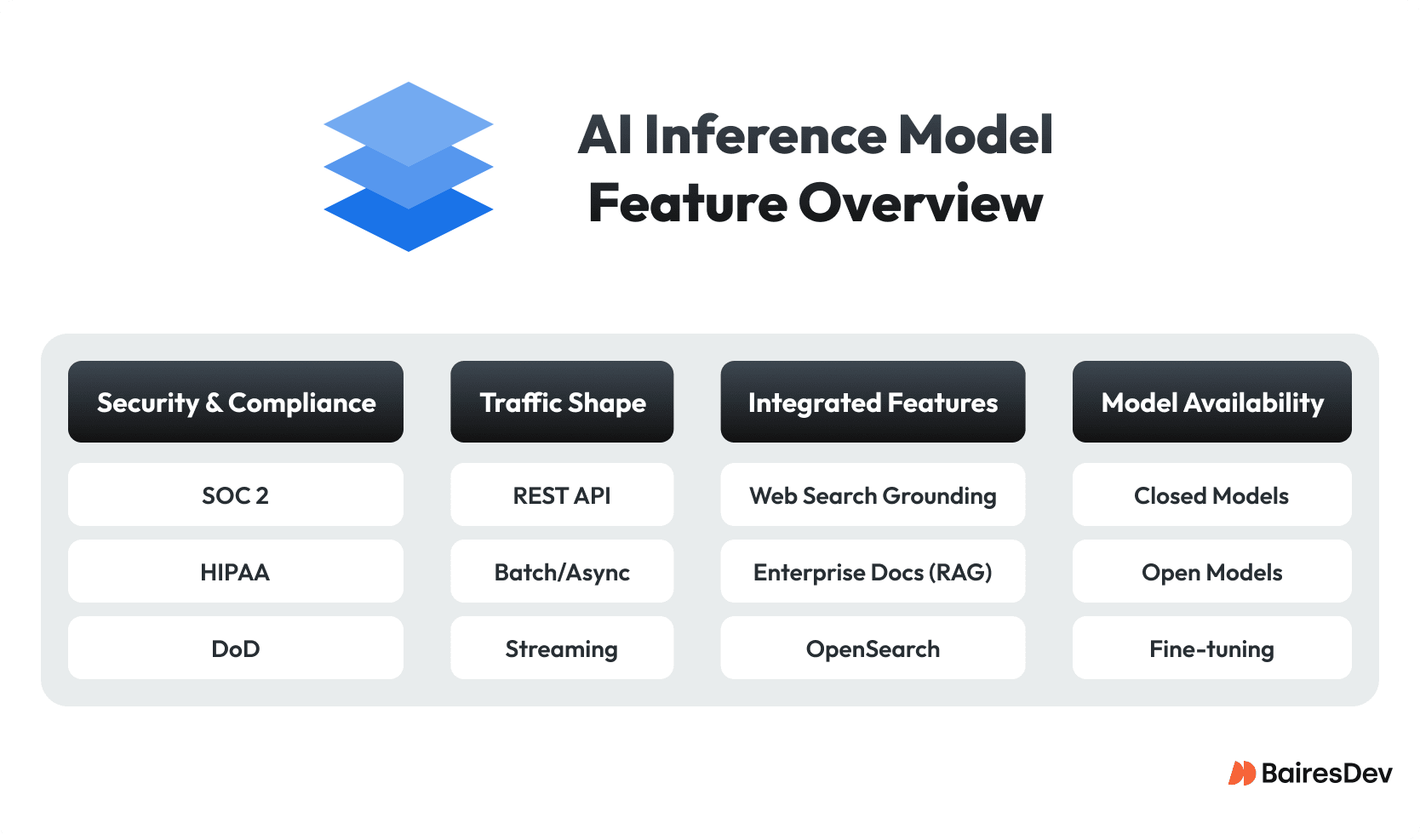

Because inference providers are often focused on specific segments of the market, they may choose to prioritize certain features over others. Some of these can be non-negotiable requirements for your project, and focusing on these features can help you narrow down your choices fairly quickly.

Model Availability: Closed Source, Open Source, and Fine-Tuned Models

One of the first make-or-break questions when choosing an inference provider is “Does the platform offer the model I want?”

For example, maybe you’re looking to run a closed-source model like Google Gemini. In that case, you will be forced to use Google Cloud Platform, since other providers do not offer this model for AI inference.

Other times, you might be deploying open-weight models like Llama 4 or DeepSeek R1. Here you have many more options, since most inference platforms have access to the model weights as well as the infrastructure necessary to serve these pre-trained models.

Another aspect of model availability is fine-tuned models. If you need a model that is fine-tuned on proprietary data, you will want to check if the platform offers an easy way for you to tune and host your custom model. OpenAI, for example, offers fine-tuning for most of its models, whereas Anthropic's fine-tuning support is far more limited.

Integrated Features: Search and Grounding Data

Integrated features are often overlooked, and many teams only consider them late in the provider selection process.

Nowadays, many AI applications rely on features that go beyond the large language model itself, and each platform offers a vastly different set of integrated features along with its LLM offerings.

For example, if you need the model to search the internet and cite its sources in-line, you might consider Perplexity (included with their base offering), Google Cloud Platform (through the “grounding with Google Search/Vertex AI Search” feature), or Microsoft Azure (through the “grounding with Azure AI Search/On Your Data” feature).

Alternatively, you might need the model to access proprietary documents. In those situations, you could focus on Azure for its “OpenAI on Your Data” feature, which offers by far the easiest way to connect an LLM to a large set of proprietary data.

A close second would be AWS, which allows you to integrate OpenSearch with models running on Amazon Bedrock.

Specialist services like Replicate and Hugging Face would not be well-suited if you are looking for a turnkey solution, since you would have to provide your own infrastructure for hosting, indexing, and searching through your proprietary data.

Traffic Shape: REST, batched, streaming

Another important consideration is the shape of your traffic.

A common architecture is the Agent Swarm, where multiple agents run inferences in parallel on their piece of the task before handing results off to each other. Agent Swarms usually run inferences through a REST-like interface and trigger the next step in the agent workload. In that case, you will want to check the inference provider to make sure your model can be deployed easily as a REST API.
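To make the fan-out pattern concrete, here is a minimal sketch of a swarm step in Python. The `call_model` function is a hypothetical stand-in for a single REST inference call (it is not any provider's API), and the task names are made up for illustration. In a real system it would POST the prompt to your provider's endpoint.

```python
from concurrent.futures import ThreadPoolExecutor


def call_model(prompt: str) -> str:
    """Hypothetical stand-in for one REST inference call.

    A real implementation would POST the prompt to the provider's endpoint.
    """
    return f"result for: {prompt}"


def run_swarm(subtasks, max_workers=4):
    """Run each agent's subtask in parallel and return results in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves the order of the inputs.
        return list(pool.map(call_model, subtasks))


def hand_off(results):
    """Combine the agents' outputs into the prompt for the next step."""
    return call_model(" | ".join(results))


subtasks = ["summarize section 1", "summarize section 2", "summarize section 3"]
partials = run_swarm(subtasks)
final = hand_off(partials)
print(final)
```

Because every step is an independent request and response, this pattern works with any provider that exposes the model behind a plain REST endpoint.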

If your AI workload is not time sensitive, you might consider running your inference in a batched fashion, where you would be optimizing for cost at the expense of immediacy. If this option is important to you, use the major cloud providers (Azure, AWS, GCP), since they offer batch processing with significant discounts, allowing for greater cost efficiency.

Foundation labs are split on this feature, with OpenAI and Anthropic offering discounted options and Perplexity offering none. Specialist providers, which have more limited access to idle GPU infrastructure, generally do not offer a batch processing discount.
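The batch trade-off can be sketched as a small routing rule: send a job to batch only if its deadline can absorb the batch turnaround, then apply the discount. The 24-hour turnaround, the 50% discount, and the token price below are placeholder assumptions, not figures from any specific provider, so substitute the values from your provider's pricing page.

```python
def choose_mode(deadline_hours: float, batch_turnaround_hours: float = 24.0) -> str:
    """Pick batch only when the job can wait for the assumed batch turnaround."""
    return "batch" if deadline_hours >= batch_turnaround_hours else "realtime"


def job_cost(tokens: int, price_per_million: float, mode: str,
             batch_discount: float = 0.5) -> float:
    """Cost in USD for a job; batch_discount is an assumed fraction off list price."""
    base = tokens / 1_000_000 * price_per_million
    return base * (1 - batch_discount) if mode == "batch" else base


# Hypothetical job: 2M tokens at an assumed $3.00 per million tokens.
for deadline in (1, 48):
    mode = choose_mode(deadline)
    print(f"deadline {deadline}h -> {mode}, cost ${job_cost(2_000_000, 3.0, mode):.2f}")
```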

Finally, if you’re running something like a voice chat application where the output needs to be streamed instead of returned at the end of an inference run, you will also want to look at the traffic shape on offer. Because of the increasing popularity of voice and real-time natural language processing applications, most cloud providers and foundation labs provide streaming options.

Notably, however, Hugging Face’s inference offerings make it relatively difficult to run streaming workloads.
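To illustrate why streaming matters for real-time applications, the sketch below simulates a streamed response and measures the time to the first token against the time to the full response. `fake_token_stream` is a hypothetical stand-in for a provider's streaming response, and the per-token delay is an arbitrary assumption.

```python
import time
from typing import Iterator


def fake_token_stream(text: str, delay_s: float = 0.01) -> Iterator[str]:
    """Hypothetical stand-in for a provider's streaming response."""
    for token in text.split():
        time.sleep(delay_s)  # simulate per-token generation latency
        yield token


def consume(stream: Iterator[str]):
    """Handle tokens as they arrive; record time to first token and total time."""
    start = time.perf_counter()
    first_token_s = None
    tokens = []
    for token in stream:
        if first_token_s is None:
            first_token_s = time.perf_counter() - start
        tokens.append(token)  # a voice app would forward this chunk onward here
    total_s = time.perf_counter() - start
    return tokens, first_token_s, total_s


tokens, ttft, total = consume(fake_token_stream("streaming lets the user hear words early"))
print(f"{len(tokens)} tokens, first after {ttft:.3f}s, all after {total:.3f}s")
```

With a non-streaming endpoint, the user would wait for the full duration before hearing anything, which is why time to first token is the number to watch for voice workloads.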

Security and Compliance: ISO, SOC 2, HIPAA, FERPA, DoD

You will want to consider security and compliance assurances provided by your inference provider.

For example, healthcare companies that handle protected health information (PHI) will often require a signed Business Associate Agreement (BAA) and HIPAA compliance, education companies that handle sensitive student information might need to follow FERPA guidelines, and defense contractors might need DoD authorizations. Many other industry verticals also have their own security requirements and certifications.

Enterprise-grade providers like AWS, Azure, and Google Cloud have generally been audited against the major security standards, and further offer enterprise licenses and BAAs that contractually protect the privacy of your data and of your model weights.

Other providers, like Hugging Face, are more geared towards the open source community and therefore do not offer BAAs, even if HIPAA-compliant applications can theoretically be built on top of their platform.

Foundation labs tend to offer more security certifications than specialist providers, but lack the comprehensive offerings of the major cloud providers.
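Taken together, the non-negotiables act as a filter that you apply before comparing anything else. The sketch below shows one way to encode that filter. The `Provider` fields and the three entries are a simplified illustration based on the observations in this article, not an authoritative capability matrix, so verify each flag against the provider's current documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    name: str
    models: frozenset      # model families offered
    batch_discount: bool   # discounted batch processing available
    streaming: bool        # streaming output readily available
    baa: bool              # signs a Business Associate Agreement


# Illustrative entries only; verify against each provider's current docs.
PROVIDERS = [
    Provider("Google Cloud", frozenset({"gemini", "open-source"}), True, True, True),
    Provider("AWS", frozenset({"claude", "open-source"}), True, True, True),
    Provider("Hugging Face", frozenset({"open-source"}), False, False, False),
]


def shortlist(providers, model, need_batch=False, need_streaming=False, need_baa=False):
    """Keep only providers that satisfy every hard requirement."""
    return [
        p.name
        for p in providers
        if model in p.models
        and (p.batch_discount or not need_batch)
        and (p.streaming or not need_streaming)
        and (p.baa or not need_baa)
    ]


print(shortlist(PROVIDERS, "open-source", need_baa=True))
print(shortlist(PROVIDERS, "gemini"))
```

Only the providers that survive this filter need to be compared on the softer criteria.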

The Nice-to-Haves

Now that the non-negotiables are out of the way, let’s look at some of the “softer” requirements.

Ease of Setup

A project stalled by infrastructure setup is frustrating, and it creates real risk for AI applications with many stakeholders, each with their own requirements and specializations.

To minimize the cost of setting up your inference provider, look for managed inference platforms that are simple to set up, easy to use, and backed by excellent support and documentation.

Labs Lead the Way

Foundation labs lead the way in ease of use. OpenAI and Anthropic compete for developer attention. As a result, they offer an extremely straightforward setup, and a user can usually start running inference with ready-to-use code in as little as five minutes.

What’s more, these AI platforms are excellent at generating example code for using their respective APIs, meaning documentation access is a breeze.

Specialist Providers are Dev-Friendly

A close second are the specialist providers like Replicate and Hugging Face, whose documentation tends to be frequently updated. However, because their feature set is a bit more complex than that of the foundation labs, setting up with these platforms can take longer.

Teams often report being able to run their first inference in around 30 minutes with Replicate and Hugging Face, compared to under 10 minutes for most foundation labs.

Cloud Providers Can Lag Behind

If foundation labs are quick to get up and running, the larger cloud providers are the most cumbersome of the three groups. All three cloud providers (Azure, AWS, and GCP) have sprawling and overlapping AI inference services, often with components that are tied to other services. Setup takes real work, although most developers should be able to navigate the documentation.

AWS can be especially difficult for the uninitiated, since using AWS effectively requires expertise in multiple AWS services like S3, IAM, EC2, and CloudWatch. A notable exception to this pattern is GCP’s Gemini API, which offers a simplified setup experience akin to that of foundation labs like OpenAI and Anthropic.

However, this challenge is largely nullified if the team has access to a cloud architect who can navigate these complexities.

Cost Model

Another factor to consider is the cost model. Depending on your usage pattern and budget constraints, choosing a provider with the wrong cost model can erode your ROI.

Cost Per Token

The most popular pricing model is cost-per-token, which was pioneered by OpenAI. Its biggest advantage is predictability, since users can estimate the number of tokens a workload will consume and decide whether a particular inference is worth running. This model is also great for projects in the pilot stage, where usage may be sporadic.
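As a rough illustration of that predictability, the sketch below estimates spend from token counts alone. The prices and traffic figures are hypothetical placeholders, not any provider's actual rates, and we assume input and output tokens are priced separately per million tokens.

```python
def request_cost(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Cost in USD of one request, given prices per million tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


def monthly_cost(requests_per_day, avg_input_tokens, avg_output_tokens,
                 input_price_per_m, output_price_per_m, days=30):
    """Projected monthly spend for a steady workload."""
    per_request = request_cost(avg_input_tokens, avg_output_tokens,
                               input_price_per_m, output_price_per_m)
    return per_request * requests_per_day * days


# Hypothetical prices, not any provider's actual rates.
INPUT_PRICE_PER_M = 1.00
OUTPUT_PRICE_PER_M = 4.00

estimate = monthly_cost(10_000, 1_500, 500, INPUT_PRICE_PER_M, OUTPUT_PRICE_PER_M)
print(f"Estimated monthly spend: ${estimate:,.2f}")
```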

The downside of cost-per-token is the disconnect between model inference cost and the underlying hardware cost, since there often isn’t a 1-to-1 relationship between tokens processed and hardware costs incurred. Because the provider has to absorb that mismatch, not every provider can offer this pricing.

In fact, this model is mostly offered by foundation labs like OpenAI and Anthropic, as well as by Google through its Gemini API. A notable exception is Azure AI Foundry, which offers cost-per-token billing for popular open-source AI models.

Price Per Hardware Second

Another popular cost model is price-per-hardware-second. While this makes the inference cost quite a bit less predictable, the pricing model is actually more transparent, giving users finer-grained control over the cost-performance tradeoff.

This pricing model, though, is much more suited to mature AI projects, where the usage patterns are much more established. Services like Replicate and Hugging Face, as well as major cloud providers, offer this pricing model. One notable distinction is that, while Replicate and Hugging Face only offer managed hardware billable by the second, Azure, Google, and AWS offer both managed and reserved capacity.
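The cost-performance tradeoff becomes clearer once hardware time is converted into an effective price per million tokens. The sketch below does that conversion and finds the utilization at which time-billed hardware matches a per-token price. The hardware rate, throughput, and per-token price are all hypothetical, and we assume idle time on the hardware is still billed.

```python
def cost_per_million_tokens(price_per_second, tokens_per_second, utilization):
    """Effective USD per million tokens on hardware billed by the second."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    useful_tokens_per_second = tokens_per_second * utilization
    return price_per_second / useful_tokens_per_second * 1_000_000


def break_even_utilization(price_per_second, tokens_per_second, per_token_price_per_m):
    """Utilization above which time-billed hardware beats per-token pricing."""
    return price_per_second * 1_000_000 / (tokens_per_second * per_token_price_per_m)


# Hypothetical figures: $0.001 per second, 1,000 tokens/s, $2.00 per million tokens.
print(f"${cost_per_million_tokens(0.001, 1000, 1.0):.2f} per million at full load")
print(f"${cost_per_million_tokens(0.001, 1000, 0.25):.2f} per million at 25% load")
print(f"break-even utilization: {break_even_utilization(0.001, 1000, 2.0):.0%}")
```

This is why per-hardware-second pricing favors mature projects: the more predictable and sustained your traffic, the closer you run to full utilization.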

Performance, Scalability, and Reliability

Last but not least, we will want to consider the performance, scalability, and reliability of each provider.

Cloud Providers Reign Supreme

Cloud providers like Azure, AWS, and GCP truly shine in this regard. Not only do they have access to vast computing resources and optimized infrastructure, but they often offer SLAs that guarantee an extremely high level of reliability for all of their services, including their AI inference.

Additionally, each platform offers its own tools for auto-scaling and fleet management, so the offerings can easily grow with your project.
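When comparing SLAs, it helps to translate an uptime percentage into the downtime it actually permits. The sketch below does this for a 30-day month. The percentages are generic examples, not the committed SLA of any provider named here.

```python
def allowed_downtime_minutes(uptime_percent: float, days: int = 30) -> float:
    """Minutes of downtime permitted per period at a given uptime target."""
    total_minutes = days * 24 * 60
    return (1 - uptime_percent / 100) * total_minutes


# Generic example targets, not any provider's published SLA.
for target in (99.0, 99.9, 99.99):
    minutes = allowed_downtime_minutes(target)
    print(f"{target}% uptime allows about {minutes:.1f} minutes of downtime per 30 days")
```

Each additional nine cuts the allowance by a factor of ten: a 99.9% target permits roughly 43 minutes of downtime in a 30-day month, while 99.99% permits only about 4.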

Foundation Labs Are Laser-Focused

Cloud providers are followed closely by foundation labs. While foundation labs don’t have access to the same level of resources, their limited offering means they can focus all of their resources on making sure their inference infrastructure is operating smoothly.

However, when GPU resources get scarce, these labs have been known to restrict access to their inference services, making scalability a concern. Additionally, foundation labs often lack the smart scaling solutions offered by cloud providers, making it harder to access economies of scale.

Specialist Providers are Good, But…

Specialist providers like Replicate and Hugging Face offer solid reliability and performance, but cannot match the SLA-backed guarantees of cloud providers. They are reliable and scalable enough for most AI projects, but their uptime falls short of the near-constant availability of the cloud providers and the foundation labs.

During periods of GPU scarcity, they’re often the first to lose access to much-needed compute capacity. Therefore, when dealing with mission-critical workloads, it is best to stick with a major cloud provider rather than going with a specialist.

Comparing the Top AI Inference Providers in 2025

To help you apply the framework from this article, the following tables summarize our findings.

Cloud Providers

The major cloud providers, Azure, AWS, and Google Cloud, are the established incumbents in the AI space. They are the ideal choice for large-scale enterprise deployments.

| Feature | Azure | AWS | Google Cloud |
| --- | --- | --- | --- |
| Model Availability | OpenAI GPT models, Open Source | Open Source, Claude | Gemini, Open Source |
| Integrated Features | Azure AI Search, Document Search | Amazon OpenSearch, Bedrock Knowledge Bases | Google Search |
| Fine Tuning | Integrated | Separate | Integrated |
| Batch Processing | Offered | Offered | Offered |
| Security | Comprehensive (SOC, HIPAA, etc.) | Comprehensive (SOC, HIPAA, etc.) | Comprehensive (SOC, HIPAA, etc.) |
| Setup Time | ~1 hour | ~1 to 3 hours | ~1 to 2 hours |
| Cost Model | Per-Token & Per-Second | Per-Second | Per-Token & Per-Second |
| Reliability | Extremely Reliable | Extremely Reliable | Extremely Reliable |

Foundation Labs

Foundation labs like OpenAI, Anthropic, and Perplexity are the primary creators of today’s leading models. Their platforms are defined by an excellent developer experience and rapid setup, offering the most direct and optimized access to their flagship closed-source models.

| Feature | OpenAI | Anthropic | Perplexity |
| --- | --- | --- | --- |
| Model Availability | GPT models | Claude | Perplexity Sonar |
| Integrated Features | DIY | DIY | Perplexity Search |
| Fine Tuning | Integrated | N/A | N/A |
| Batch Processing | Offered | Offered | N/A |
| Security | SOC 2 | SOC, ISO, HIPAA | SOC 2 |
| Setup Time | 5 Minutes | 5 Minutes | 5 Minutes |
| Cost Model | Per-Token | Per-Token | Per-Token |
| Reliability | Highly Reliable | Highly Reliable | Highly Reliable |

Specialist Providers

Specialist providers such as Replicate and Hugging Face cater primarily to the developer and open-source communities. Their key strengths are flexibility and access to a vast and diverse range of open-source models.

| Feature | Replicate | Hugging Face |
| --- | --- | --- |
| Model Availability | Open Source | Open Source |
| Integrated Features | DIY | DIY |
| Fine Tuning | Integrated | Integrated |
| Streaming | Offered | Difficult |
| Security | SOC 2 | SOC 2 |
| Setup Time | ~15 minutes | <1 hour |
| Cost Model | Per-Hardware-Second | Per-Hardware-Second |
| Reliability | Mostly Reliable | Mostly Reliable |

Bonus: AI Inference Hardware Providers

One category of inference providers we didn’t cover is hardware innovators.

These providers function similarly to specialist providers, but instead of renting GPU-seconds, you rent access to specialized computing infrastructure that is optimized for certain workloads. Because they have a narrower use case, we chose to omit them from the main comparison.

However, if you’re looking for deeper control over your hardware stack, as well as fine-grained optimization, these providers are worth examining:

- Groq specializes in low-latency inference, catering to companies developing real-time, high-speed applications. By using Language Processing Units (LPUs), their proprietary computing architecture, they achieve inference speed that traditional GPUs struggle to match.

- SambaNova offers an integrated approach that can run both training and inference for massive models. SambaNova’s Reconfigurable Dataflow Unit (RDU) systems are available on-premises, as a cloud service, and in hybrid cloud configurations for maximum flexibility.

- Cerebras Systems offers a unique hardware innovation known as the Wafer-Scale Engine (WSE), a supercomputer etched on a chip the size of a dinner plate. By putting the whole system on a chip, the WSE eliminates the latency associated with moving data between GPUs and memory. These chips are well-suited for extremely demanding, time-sensitive workloads.

In case you would like to learn more, we already covered these inference hardware upstarts on our blog.

While these platforms may offer less model flexibility than a specialist provider, they can be a tempting choice for AI workloads whose competitive advantage depends on best-in-class speed or the ability to run exceptionally large models.

| Feature | Cerebras | Groq | SambaNova |
| --- | --- | --- | --- |
| Model Availability | Open-weight LLMs; custom endpoints | Popular OSS/closed via partnerships; LLM/STT/TTS | OSS models + enterprise suites |
| Integrated Features | Managed inference API; private cloud; large shared clusters | GroqCloud API; playground; integrations | SambaCloud (hosted) + SambaStack (on-prem) |
| Fine Tuning | Supports custom models/endpoints | Not primary; focuses on optimized serving | Enterprise fine-tune options (suite) |
| Streaming | Yes (low-latency focus) | Yes (real-time focus) | Yes |
| Security | Enterprise contracts; cloud + on-prem | Enterprise tiers; cloud | Enterprise/private deployments |
| Cost Model | Per-token claims and dedicated capacity pricing | Transparent per-token pricing; high TPS | Subscription/enterprise pricing; dedicated capacity |
| Reliability | Hosted cloud + dedicated/on-prem options | Hosted cloud; public status/pricing pages | Hosted or private; enterprise support |

Growing, Scaling, and Optimizing Your Inference

Choosing an inference provider can seem like a daunting task, and it is. In a nascent, fast-changing market where new features arrive constantly, the choices and options can be overwhelming.

Partnering with experts through AI development services can help you navigate these complexities, ensuring you select solutions that align with your goals and scale effectively.

Whichever route you take, focusing on your project’s needs, aligning with your team’s expertise, and weighing trade-offs like cost, scalability, and potential vendor lock-in will let you make an informed decision that serves your organization throughout the project’s lifecycle.