Executive Brief

Software teams are racing to integrate AI into delivery, yet most fail to convert these investments into measurable gains in revenue and system reliability.

Most organizations are stuck in the middle: widespread pilots and significant spend, yet a change management process that lags behind. Engineering leaders recognize the pattern: duplicated efforts, conflicting guidance, and senior engineers quietly routing around untrusted tools.

This article lays out a structured approach connecting AI adoption, organizational change, and engineering systems into a testable change management strategy. It focuses on what leaders need: predictable shipping, fewer surprises, and a defensible line from a proposed change to measurable outcomes.

The AI Burden on Engineering Leadership

The macro context increases pressure on management. Private AI investment in the United States exceeded one hundred billion dollars in 2024, according to the 2025 Stanford AI Index. More than ninety percent of companies expect to increase AI spend over the next three years, based on research from McKinsey’s 2025 AI in the Workplace report. According to Gartner, global IT market spending on AI is projected to rise sharply through 2026 and beyond.

Worldwide AI spending 2024-2026, all figures in millions of US dollars, Gartner, September 2025

| Market | 2024 | 2025 | 2026 |
| --- | ---: | ---: | ---: |
| AI Services | 259,477 | 282,556 | 324,669 |
| AI Application Software | 83,679 | 172,029 | 269,703 |
| AI Infrastructure Software | 56,904 | 126,177 | 229,825 |
| GenAI Models | 5,719 | 14,200 | 25,766 |

Meanwhile, sectors with high AI exposure are realizing productivity growth nearly five times higher than those with low exposure, as highlighted by PwC’s 2024 Global AI Jobs Barometer. Your competitors are no longer experimenting on the margins; they are redefining their strategy around these capabilities.

For senior leaders, this shifts AI from an “interesting tool” to a “core capability.”

Organizations need AI-driven change management strategies that align architecture, people, and governance. Scattering AI across teams will not produce organic organizational change. You need predictable pathways that tie every change initiative into CI/CD and platform governance while providing a defensible ROI model for the board and risk owners.

The risk? Ad hoc AI initiatives that erode trust and create hidden dependencies beyond your control.

Implemented correctly, AI-driven change management becomes a growth lever. Good initiatives can compress cycle times, improve productivity, and strengthen company culture by making responsible experimentation normal. Implemented poorly, the lack of a coherent change strategy creates bottlenecks, confuses ownership, and introduces failure modes that take quarters to untangle.

The management practices you adopt today will decide whether AI becomes your fastest growth lever or your most expensive liability.

Why AI Change Management is Hard

AI is driving a structural shift that demands a new playbook for change management. Analysts call this “a cognitive industrial revolution,” a transformation reshaping work and talent models (IEEE Transmitter). You see it in the daily experience of your teams: more cross-functional dependencies, more tools, and higher expectations from business leaders.

Despite aggressive investment, many organizations fail to scale AI because change management breaks down. Only a small percentage of organizations classify their AI deployments as mature, according to McKinsey.

Most companies remain in early, fragmented stages. They have pockets of success without a change management strategy to align AI investments with business processes, technical constraints, and risk tolerance. That gap becomes an architectural risk surface.

Talent pressure complicates managing organizational change.

One-third of technical projects already stall due to skills shortages. Simultaneously, executives expect a large portion of the workforce will need reskilling, according to IBM’s AI upskilling insights.

AI compounds this with added cognitive demands: understanding model failure modes, designing prompts, and detecting drift. If you do not connect AI adoption to professional development, you get improvisation, which leads to inconsistent internal processes, unsanctioned AI use, and unpredictable behaviors that undermine governance.

Regulation makes AI governance non-negotiable for any change management plan. The EU AI Act sets expectations for high-risk AI, and the NIST AI Risk Management Framework provides a structure to create trustworthiness. These frameworks influence what must be logged and how human oversight is enforced. A change management strategy that ignores them will eventually collide with compliance audits.

Picture a mid-sized firm rolling out AI coding assistants team by team, without a unified change management plan.

Early wins appear, but only in isolated teams, and they soon give way to chaos. Some squads embrace AI while others reject it. Incident reviews uncover AI-driven misconfigurations. Leadership teams can’t link the change initiative to deployment speed or defect rates. This is a basic example of an organization “doing AI” while failing to manage change effectively.

Criteria and Requirements

Successful AI change management hinges on four interconnected areas: planning, communication, implementation, and reinforcement. Each touches technical systems and organizational change management practices.

Planning and Scope Definition

Planning starts by identifying which business and internal processes are in scope. Identify affected teams and map AI tools to repositories, data sets, and CI/CD stages early to avoid integration surprises. Aligning this phase with the NIST AI Risk Management Framework helps translate generic principles into specific design constraints. This is where you decide what successful change looks like and how to measure it.
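One way to make the mapping described above concrete is a small inventory that records, per AI tool, the repositories, data sets, and CI/CD stages it touches. The sketch below is only an illustration: `ToolScope`, `unmapped_repositories`, and every tool, repository, and stage name are hypothetical and do not come from any specific platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolScope:
    """Hypothetical record of what a single AI tool is allowed to touch."""
    tool: str
    repositories: frozenset
    data_sets: frozenset
    ci_stages: frozenset


def unmapped_repositories(all_repos, scopes):
    """Return in-scope repositories that no tool scope covers yet."""
    covered = set().union(*(s.repositories for s in scopes))
    return sorted(set(all_repos) - covered)


# Illustrative inventory; all names are made up.
scopes = [
    ToolScope(
        tool="code-assistant",
        repositories=frozenset({"billing-api", "web-app"}),
        data_sets=frozenset({"source-code"}),
        ci_stages=frozenset({"pull_request"}),
    ),
]

gaps = unmapped_repositories(["billing-api", "web-app", "data-pipeline"], scopes)
print(gaps)  # ['data-pipeline']
```

A gap list like this gives the planning phase a concrete question to answer for each repository: is it intentionally out of scope, or has nobody decided yet?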

Role-Aware Communication

Communication must be precise and role-specific, forming the core of your strategy.

- Engineers: test coverage and failure modes.

- Product leaders: capability limits and time to market.

- Business leaders: risk and cost.

Segmenting your approach communicates the change initiative in terms that make sense for each group, reducing accidental resistance.

Technical Implementation

Implementation is where strategy turns into code, led by change managers and architects. You embed AI services into the delivery chain, define model lifecycles, and ensure developers use tools safely. You must define boundaries: allowed use cases, data usage, and required human review.

Skipping these details during the change process creates brittle workflows that Ops inherits and struggles to maintain.
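The three boundaries named above (allowed use cases, data usage, and required human review) can be written down as an explicit policy check instead of living in a wiki page. The following is a minimal sketch under stated assumptions: `AiUsagePolicy`, `AiRequest`, `evaluate`, and the example use cases and data classes are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AiUsagePolicy:
    """Hypothetical policy describing where an AI tool may be used."""
    allowed_use_cases: frozenset
    blocked_data_classes: frozenset
    review_required_for: frozenset


@dataclass(frozen=True)
class AiRequest:
    """A proposed use of the tool and the classes of data it would see."""
    use_case: str
    data_classes: frozenset = frozenset()


def evaluate(policy, request):
    """Return 'deny', 'allow_with_review', or 'allow' for a proposed AI use."""
    if request.use_case not in policy.allowed_use_cases:
        return "deny"
    if request.data_classes & policy.blocked_data_classes:
        return "deny"
    if request.use_case in policy.review_required_for:
        return "allow_with_review"
    return "allow"


# Illustrative policy; the categories are assumptions, not a recommendation.
policy = AiUsagePolicy(
    allowed_use_cases=frozenset({"boilerplate", "test_generation", "infra_config"}),
    blocked_data_classes=frozenset({"customer_pii", "secrets"}),
    review_required_for=frozenset({"infra_config"}),
)

print(evaluate(policy, AiRequest("boilerplate")))                          # allow
print(evaluate(policy, AiRequest("infra_config")))                         # allow_with_review
print(evaluate(policy, AiRequest("boilerplate", frozenset({"secrets"}))))  # deny
```

Keeping the policy as data makes it reviewable and versioned like any other change, which is the point of defining boundaries before rollout.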

Reinforcement and Drift Management

Reinforcement ensures continuous improvement. AI that is not monitored becomes misaligned. Reinforcement means measuring adoption, usage, and quality, then updating training and adjusting guardrails based on incidents.

Without reinforcement, expect the usual pattern: early enthusiasm, then drift, and finally abandonment.

Assign clear ownership for each stage.

| Stage | Primary Owner Group | Focus for Engineering Leaders |
| --- | --- | --- |
| Planning | Leadership team | Technical scope, risk, architecture alignment |
| Communication | Change managers | Expectations, roles, integration impact |
| Implementation | Architecture + Delivery | CI/CD integration, model guardrails, telemetry |
| Reinforcement | Ops + People Leadership | Metrics, feedback loops, training updates |

This table prevents the failure mode where everyone assumes “someone else” is handling change communications or providing adequate training.

Business Impact + ROI Model

AI investments only earn executive trust when linked to hard, measurable outcomes. Your change management plan must connect productivity and stability to financial performance.

Productivity is the first, and easiest, signal of effective change management. Developers using AI assistants often complete boilerplate generation or pattern searches faster. However, raw speed is useless if it increases rework. With guardrails, you see sustained improvements in change lead time. Without guardrails, early gains collapse into outages and costly rework.

Measure what matters. Use the AUP hierarchy (Adoption, Utilization, Proficiency), followed through to Outcomes, to connect activity to business results.

| Metric Category | What You Measure | Link to Outcomes |
| --- | --- | --- |
| Adoption (A) | % of users enabled | Signals readiness for organizational change |
| Utilization (U) | Frequency of usage | Predicts compression of delivery cycles |
| Proficiency (P) | Quality of outputs/defect rate | Indicates stability and release confidence |
| Outcomes (O) | Time to market / EBIT | Validates ROI and long-term viability |

Then, map outcomes to DORA metrics such as deployment frequency and change failure rate. Proficiency drives DORA success: high proficiency means faster, safer releases; low proficiency means incidents, even with heavy tool usage.
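The two DORA metrics named above can be computed from a plain list of deployment records. This is a rough sketch, assuming each deployment is tagged with whether it led to an incident or rollback; the `Deployment` class and the sample data are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Deployment:
    day: date
    caused_failure: bool  # True if the deploy led to an incident or rollback


def deployment_frequency(deploys, period_days):
    """Average number of deployments per day over the reporting period."""
    if period_days <= 0:
        raise ValueError("period_days must be positive")
    return len(deploys) / period_days


def change_failure_rate(deploys):
    """Share of deployments that caused a failure in production."""
    if not deploys:
        return 0.0
    return sum(d.caused_failure for d in deploys) / len(deploys)


# Illustrative week of deployments; the data is made up.
deploys = [
    Deployment(date(2025, 1, 6), False),
    Deployment(date(2025, 1, 8), True),
    Deployment(date(2025, 1, 9), False),
    Deployment(date(2025, 1, 10), False),
]

print(change_failure_rate(deploys))  # 0.25
```

Comparing these numbers before and after an AI rollout, for the same teams and services, is what turns tool usage into an outcome claim.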

ROI also depends on professional development. A large share of your workforce needs new technical skills: prompt design, data literacy, and AI-aware code review. Research from IBM highlights the scale of this reskilling. If change projects lack professional development paths, you create a two-speed organization: experts carrying the load versus a sidelined majority. This slows delivery and undermines future change.

Consider a company rolling out AI-assisted code review as a change initiative. Leaders measure Adoption (tool access), Utilization (suggestions in PRs), Proficiency (accepted suggestions/defect impact), and Outcomes (DORA metrics).

Over time, data reveals where training works and where integration needs refinement. Future change initiatives benefit from this evidence rather than starting from zero.
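For the code review example above, the first three measures reduce to simple ratios. The sketch below assumes per-engineer counts are available and uses the suggestion acceptance rate as a stand-in for Proficiency, which is a simplification since it ignores defect impact. `ReviewerStats`, `aup_summary`, and the sample figures are hypothetical. Outcomes are left out because the article measures them with DORA metrics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewerStats:
    """Hypothetical per-engineer counts for one reporting period."""
    has_access: bool
    prs_reviewed: int
    prs_with_ai_suggestions: int
    suggestions_shown: int
    suggestions_accepted: int


def ratio(numerator, denominator):
    """Safe division that returns 0.0 when there is no data."""
    return numerator / denominator if denominator else 0.0


def aup_summary(stats):
    """Compute Adoption, Utilization, and Proficiency as fractions in [0, 1]."""
    enabled = [s for s in stats if s.has_access]
    return {
        # Adoption: share of engineers with tool access.
        "adoption": ratio(len(enabled), len(stats)),
        # Utilization: share of reviewed PRs that included AI suggestions.
        "utilization": ratio(
            sum(s.prs_with_ai_suggestions for s in enabled),
            sum(s.prs_reviewed for s in enabled),
        ),
        # Proficiency (proxy): share of shown suggestions that were accepted.
        "proficiency": ratio(
            sum(s.suggestions_accepted for s in enabled),
            sum(s.suggestions_shown for s in enabled),
        ),
    }


# Illustrative team; the numbers are made up.
team = [
    ReviewerStats(True, 20, 10, 40, 10),
    ReviewerStats(True, 10, 5, 20, 5),
    ReviewerStats(False, 10, 0, 0, 0),
    ReviewerStats(True, 10, 0, 0, 0),
]

summary = aup_summary(team)
print(summary)  # {'adoption': 0.75, 'utilization': 0.375, 'proficiency': 0.25}
```

Here adoption is high while utilization and proficiency lag, the kind of gap that points at training or integration work rather than more licenses.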

What Good Looks Like

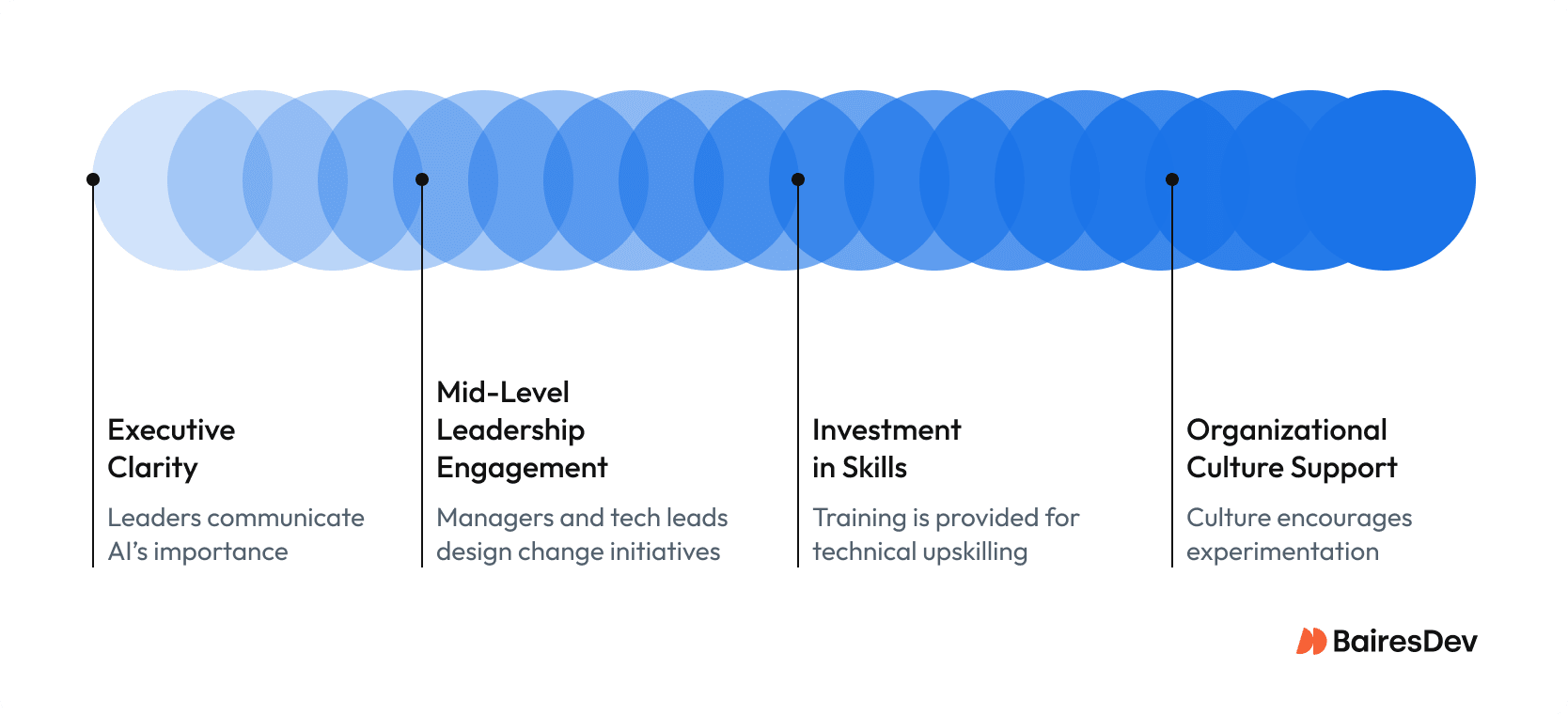

In mature organizations, AI-driven change management isn’t ad hoc; it’s a disciplined system built on four enablers.

- First, executive clarity is sustained. Senior leaders consistently explain why AI is important and spell out goals and non-negotiable constraints. They acknowledge tradeoffs, too. For engineers, honest rationale is more credible than hype, making it easier to accept disruption.

- Second, mid-level leadership is active. Engineering managers and tech leads help design change initiatives. They identify high-value starting points and team-level risks. When these leaders support the change effort, they become effective change agents.

- Third, the organization invests in technical skills and human dynamics. Training covers safe tool usage and how to question AI outputs. It addresses communication and psychological safety, critical when organizational change affects identity. Teams that feel safe raising concerns fix problems earlier.

- Fourth, organizational culture supports experimentation with guardrails. Teams can pilot workflows but must operate within defined boundaries for data and security. These guardrails allow innovation to scale without chaos that could undermine the organization’s culture.

A global engineering organization executing a change initiative illustrates this.

They pilot AI-assisted development in three areas using a template covering scope, risks, and business processes. Opinion leaders ensure buy-in. Telemetry feeds a dashboard showing AUP metrics. Successful patterns are codified into playbooks, while failed experiments are documented as learning.

The result? An organization that scales AI predictably by turning experiments into repeatable success.

Implementation Realities: What Leaders Must Get Right

AI-driven change management unfolds under real-world constraints. Senior leaders must integrate AI while still shipping features, maintaining compliance, and managing risk.

Integration over Isolation: AI must integrate into existing governance. Creating a parallel universe for change projects fails. Adapt portfolio governance and security processes to account for AI risks. This keeps decision rights clear and ensures organizational transformation aligns with reality.

Resistance as Signal: Expect resistance and treat it as a vital data point. Engineers worry about tooling reliability, managers worry about fairness in metrics, and interns fear being replaced by AI systems. When business leaders treat stakeholder concerns as bug reports to be triaged and fixed, they can overcome resistance and create resilient plans.

Explicit Ownership: Assign responsibilities for each change initiative. You need an executive sponsor, a manager for change processes, and a technical owner for architecture. Unclear roles cause the change initiative to tilt too far toward technology or politics.

Sequencing: Sequencing matters when implementing change. Rolling out strategy, tools, and processes simultaneously guarantees overload. Leaders who use a preparation phase, run constrained pilots, and scale based on empirical evidence succeed more often. Move in stages that can be measured and corrected.

Telemetry as Truth: You will not have complete certainty. AI behavior is not fully predictable. Instrumentation is critical. Log AI usage, overrides, and performance impacts. This data informs training, process improvement, and investment choices.
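Logging AI usage, overrides, and performance impacts can start with one structured event per AI interaction. The sketch below uses only the Python standard library. The `AiUsageEvent` fields, the action names, and the `ai_telemetry` logger name are assumptions for illustration, not a standard schema.

```python
import json
import logging
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

logger = logging.getLogger("ai_telemetry")  # hypothetical logger name


@dataclass(frozen=True)
class AiUsageEvent:
    tool: str
    team: str
    action: str       # e.g. "suggestion_accepted" or "suggestion_overridden"
    latency_ms: int   # rough performance signal for the interaction


def to_record(event, now=None):
    """Serialize one event as a JSON line with a UTC timestamp."""
    now = now or datetime.now(timezone.utc)
    payload = {"timestamp": now.isoformat(), **asdict(event)}
    return json.dumps(payload, sort_keys=True)


def log_event(event):
    """Emit the event through the standard logging pipeline."""
    logger.info(to_record(event))


def override_rate(events):
    """Share of accept/override decisions where a human overrode the AI."""
    decided = [
        e for e in events
        if e.action in ("suggestion_accepted", "suggestion_overridden")
    ]
    if not decided:
        return 0.0
    overridden = sum(e.action == "suggestion_overridden" for e in decided)
    return overridden / len(decided)


# Illustrative events; the values are made up.
events = [
    AiUsageEvent("code-assistant", "payments", "suggestion_accepted", 120),
    AiUsageEvent("code-assistant", "payments", "suggestion_overridden", 300),
]
print(override_rate(events))  # 0.5
```

A rising override rate for one team or tool is an early signal to revisit training or guardrails before it shows up in incident reviews.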

Strategic Takeaways

AI-driven change management is no longer optional. It’s a core leadership discipline at the intersection of technology, talent, and governance.

- Connect to Strategy: Connect every change initiative to business strategy. If you cannot explain how a proposed change supports revenue, risk, or time to market, question its existence. This filter prevents “interesting” but low-value work.

- Invest in the Middle: Executives set direction; ICs innovate. Middle managers translate strategic goals into practice. If they are not aligned and resourced, organizational change stalls.

- Metrics as Instruments: Use AUP and DORA metrics to view how change processes unfold. Used well, they highlight bottlenecks. Used poorly, they create fear and gaming.

- Psychological Safety: Build safety within your company culture. Teams must feel safe reporting “wrong” AI outputs. Without safety, silent failures accumulate into incidents.

- Portfolio Management: Manage change as a portfolio, not disconnected change projects. Reuse patterns and standardize guardrails to build institutional memory.

AI-driven change management strategies require disciplined execution and architectural awareness. Leaders who master these realities will turn AI-driven change from chaos into a competitive advantage.