Testing is vital to the success of every software development project. With a multitude of different web browsers, operating systems, and devices for users to choose from, cross-browser testing is the only way of ensuring web apps deliver a good user experience across all platforms, without fail.

Cross-browser testing requires a significant amount of effort from developers and testers. However, automation can help. With the right cross-browser testing tools, you can make testing more effective and efficient. The result is more reliable software that’s easier to maintain and provides a better experience.

Understanding Cross-Browser Testing

Cross-browser testing is an essential part of a comprehensive testing process. As more users turn to web apps for work and entertainment, engineers have to ensure their products deliver a consistent experience on all platforms—from the company ThinkPad to the kids’ iPad.

Why is Cross-Browser Testing so Important?

Cross-browser testing helps development teams deliver a consistent experience regardless of the user’s choice of browser or device. It allows engineers to preemptively tackle compatibility issues before apps ever reach the intended audience. Cross-browser testing gives developers a chance to identify and rectify layout, functionality, and performance discrepancies. It helps avoid pitfalls created by the mind-boggling variety of devices used today.

Twenty years ago, web developers needed to test their products on a couple of operating systems and a handful of screen sizes. Today, they need to account for everything from a 6″ smartphone to a 65″ TV, running dozens of different browsers and platforms.

The end result is worth it, though. Thoroughly tested web applications offer a good user experience and improve retention rates, fostering a sense of trust between users and the product. This encourages further engagement. Poor cross-platform performance fragments the user experience and drives visitors away.

Google Chrome ranks as the most popular web browser in 2025, with a market share of about 66%. However, many users rely on other browsers such as Safari, Edge, and Firefox. It’s important to confirm that your app works just as well on these browsers, across multiple devices.

The Challenges of Cross-Browser Testing

It isn’t difficult to see why development teams both appreciate and dread cross-browser testing. It introduces complex challenges that QA teams and developers must navigate to ensure functionality and a good user experience, regardless of platform. A structured testing process can streamline the work by providing a clear methodology for addressing compatibility issues. Automation tools help, too, but cross-browser testing still requires a lot of technical expertise and hard work.

Layout discrepancies are among the most prevalent issues in this type of testing. Visual inconsistencies frustrate users by hurting a site’s usability and accessibility while also detracting from its overall aesthetic appeal. The result? Users choose competing services that offer a superior experience.

Functionality bugs are another complication. Features that work seamlessly in one browser or device may malfunction in others due to the different interpretations of web standards. Performance variations also create a challenge, with the same website loading at slower speeds or apps responding more sluggishly on certain browsers and devices.

From desktops running Windows and Android phones, to iPads and Chromebooks, each combination has the potential to introduce unique challenges.

Thankfully, cross-browser testing is no longer solely a manual process. Test automation tools allow QA teams to rapidly execute tests across multiple environments, saving time and reducing human error. These tools can connect with other development tools to enhance productivity, and they support a broad range of devices and browsers. Some tools also provide debugging capabilities, helping teams identify and resolve problems quickly.

Automation in Cross-Browser Tests

Automation is the backbone of cross-browser testing. Manual testing, in contrast, requires considerable time, effort, and resources. It also leaves more room for human error. Automation improves the accuracy and efficiency of the cross-browser testing process while reducing the need for manual labor.

With the aid of automation, QA teams can create and run comprehensive test suites, ensuring that navigation, layout rendering, form submissions, and other functionality work as expected. Since the process is scripted and automated, it ensures consistency and repeatability. This allows for greater scalability and coverage. Teams can execute near-identical tests on dozens of browser and device combinations. With no human in the loop, the test results are consistent and comparable.
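The idea of running one scripted check across many browser and device combinations can be sketched in a few lines of Python. This is a minimal illustration, not a real framework integration: `Environment`, `build_matrix`, `run_suite`, and the `form_submits` stand-in are hypothetical names, and a real suite would drive a browser through a tool such as Selenium or Playwright instead of returning a hard-coded result.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable


@dataclass(frozen=True)
class Environment:
    """One browser/device combination in the test matrix."""
    browser: str
    device: str


def build_matrix(browsers: list[str], devices: list[str]) -> list[Environment]:
    """Return every browser/device combination to test."""
    return [Environment(b, d) for b, d in product(browsers, devices)]


def run_suite(
    matrix: list[Environment], check: Callable[[Environment], bool]
) -> dict[Environment, bool]:
    """Run the same scripted check in each environment and record pass/fail."""
    return {env: check(env) for env in matrix}


def form_submits(env: Environment) -> bool:
    """Hypothetical stand-in for a real form-submission test.

    It pretends the form breaks in exactly one combination.
    """
    return not (env.browser == "safari" and env.device == "tablet")


matrix = build_matrix(["chrome", "safari", "firefox"], ["desktop", "tablet"])
results = run_suite(matrix, form_submits)
failures = [env for env, passed in results.items() if not passed]
print(f"{len(matrix)} environments tested, {len(failures)} failing")
```

Because every environment runs the identical check, a failure points at the environment rather than at differences in how the test was carried out.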

Development teams typically use widely adopted open-source automation frameworks such as Selenium, Cypress, and Playwright for cross-browser testing.

Testing tools are typically designed to integrate into continuous integration and continuous delivery (CI/CD) pipelines, further streamlining the development process.

All these benefits add up. Automation doesn’t just save time and money; it also enables faster release cycles, better quality assurance, and an improved user experience.

Integration with Development Tools

CI/CD pipelines are among the most common integration points for cross-browser testing tools.

Integration means that automated cross-browser tests are executed every time developers push new code to the repository. The changes can be tested in real time, allowing teams to address issues quickly.

Connecting version control systems with cross-browser testing tools gives devs the ability to test code commits and branch merges for usability. Allowing only code that has been tested across various browsers and platforms into the production codebase streamlines subsequent testing.
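A merge gate of this kind can be approximated with a small script that a pipeline runs after the cross-browser job finishes. The sketch below is an assumption about how such a gate might look: the `REQUIRED` environment names and the `merge_allowed` and `exit_code` helpers are hypothetical, and the results dictionary stands in for whatever report your testing tool produces.

```python
import sys

# Hypothetical set of environments a commit must pass before it can merge.
REQUIRED = {"chrome-desktop", "safari-ios", "firefox-desktop", "edge-desktop"}


def merge_allowed(
    results: dict[str, bool], required: set[str] = REQUIRED
) -> tuple[bool, list[str]]:
    """Allow a merge only if every required environment ran and passed.

    Returns (allowed, blocking), where blocking lists the environments
    that failed or did not report a result at all.
    """
    blocking = sorted(env for env in required if not results.get(env, False))
    return (not blocking, blocking)


def exit_code(results: dict[str, bool]) -> int:
    """Translate the gate decision into a process exit code for CI."""
    allowed, blocking = merge_allowed(results)
    if not allowed:
        print("Merge blocked by:", ", ".join(blocking), file=sys.stderr)
    return 0 if allowed else 1


# Example report: Safari on iOS failed and Edge never reported.
report = {"chrome-desktop": True, "safari-ios": False, "firefox-desktop": True}
print(merge_allowed(report))
```

In a pipeline step, the script would end with `sys.exit(exit_code(report))` so that a non-zero exit code fails the build. Treating a missing result as a failure is a deliberate choice here: an environment that silently did not run should not count as tested.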

Some tools can be integrated into project management suites to give all stakeholders a glance at the testing process.

With a multitude of tools flooding the market, there are plenty of cross-browser testing solutions to choose from, and most offer a free trial.

How Comprehensive Testing Delivers Value

Cross-browser testing is an essential step in ensuring that your website or application works seamlessly across different browsers and operating systems, delivering the best possible user experience.

Whether your user is a student on a Chromebook or a professional rocking the latest MacBook Pro, consistent functionality and appearance must be ensured. Cross-browser tests help products deliver a good user experience by:

- Ensuring Compatibility: Cross-browser testing verifies that your website or application behaves consistently across all relevant browsers, devices, and operating systems. This is vital in today’s fragmented digital landscape, where users access content from a variety of platforms.

- Improving User Experience: A site that functions properly and renders consistently builds trust and engagement. What’s the use of attracting customers to your e-commerce platform if they will abandon their carts in frustration due to a poor experience? Addressing inconsistencies helps ensure a reliable user journey and helps retention.

- Reducing Bugs and Fixes: Identifying compatibility problems early on prevents flaws from reaching production. This means users encounter fewer bugs and development teams spend less time on bug fixes and maintenance.

- Improving Efficiency: Automation speeds up quality assurance, reduces time spent on manual tests and post-release bug fixes, and accelerates the development cycle. When teams spend less time on unproductive tasks, they can focus on innovation: adding new features and improving existing ones.

- Driving Retention and SEO Performance: A consistent and polished user experience results in better user satisfaction. When people scroll, they expect speed and responsiveness. If your product doesn’t deliver, they won’t stick around. Good performance also improves SEO. Better performance, reduced bounce rates, and longer session times are important signals for search engines. The better your site is, the better it will rank.

Choosing the Right Cross-Browser Testing Tool

When choosing cross-browser testing tools, focus on core capabilities and key features rather than nice-to-have features. Keep it simple and focus on the following:

- Browser and Device Coverage: Shortlist tools that support the platforms you are targeting. All of them will cover the major browsers, but you may need to check whether they support older browser versions or the underpowered hardware common in some industries (e.g., education).

- Integration and Automation: Cutting-edge features won’t help if tools don’t integrate with your workflow. Make sure the tool supports your automation framework of choice, the necessary CI/CD tools, version control systems, and so on.

- Debugging and Reporting: Check if the tool’s test reporting and logging abilities and standards are aligned with your requirements and workflow.

Do not focus on vendor checklists and dozens of extras your team will rarely use. Skip the marketing, prioritize alignment.
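One way to keep a comparison anchored to the three criteria above is a simple weighted score. The sketch below is purely illustrative: the weights, the 1 to 5 ratings, and the names "Tool A" and "Tool B" are made-up placeholders, not assessments of any real product.

```python
# Hypothetical weights reflecting how much each criterion matters to a team.
WEIGHTS = {"coverage": 5, "integration": 3, "reporting": 2}


def score(ratings: dict[str, int], weights: dict[str, int] = WEIGHTS) -> float:
    """Weighted average of 1-5 ratings; a missing criterion raises KeyError."""
    return sum(weights[c] * ratings[c] for c in weights) / sum(weights.values())


# Placeholder ratings a team might assign after a trial period.
candidates = {
    "Tool A": {"coverage": 5, "integration": 3, "reporting": 4},
    "Tool B": {"coverage": 4, "integration": 5, "reporting": 3},
}

# Rank candidates from best to worst weighted score.
ranked = sorted(candidates, key=lambda name: score(candidates[name]), reverse=True)
for name in ranked:
    print(f"{name}: {score(candidates[name]):.1f}")
```

Only criteria listed in `WEIGHTS` count toward the score, so extras from a vendor checklist cannot inflate a tool's ranking.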

Leading Cross-Browser Testing Tools Compared

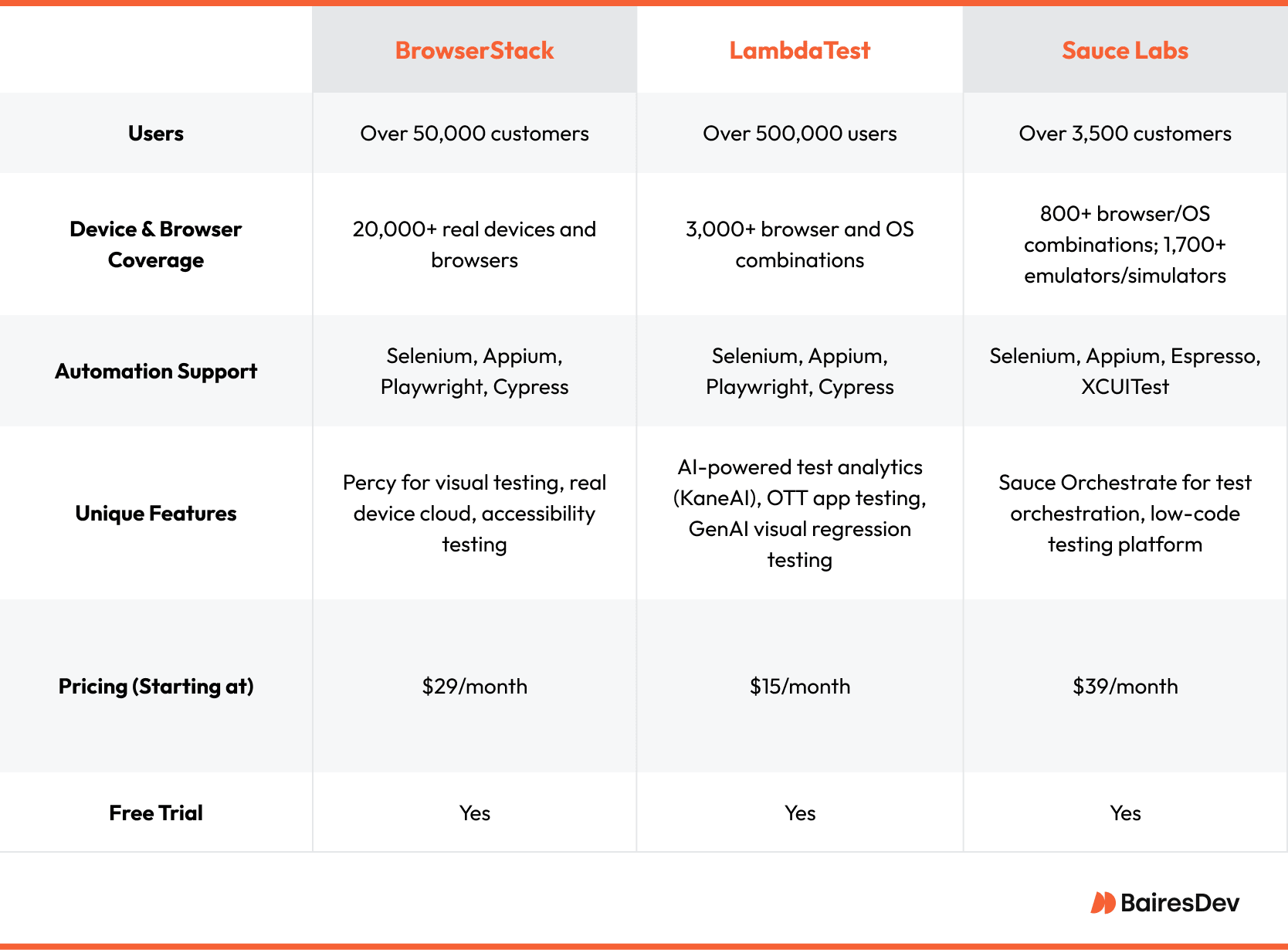

For brevity, we will compare just three leading cross-browser testing platforms: BrowserStack, LambdaTest, and Sauce Labs.

All three support industry-standard automation frameworks and CI/CD integrations. However, they differ in pricing and in support for certain technologies, and they tend to cater to different markets.

BrowserStack leads the pack in terms of revenue and users, and is geared toward enterprise users. LambdaTest should appeal to startups and medium-sized companies, thanks to competitive pricing. Sauce Labs is aiming for a different and more specialized niche, emphasizing automation and orchestration capabilities.

In addition to the three market leaders, a few alternatives are worth considering:

- TestingBot is a lean and affordable testing platform catering to small and mid-sized teams, focused on browser automation.

- HeadSpin targets the opposite side of the spectrum and is largely used by enterprise teams. It claims to offer exceptional performance testing and UX benchmarking capabilities.

- CrossBrowserTesting is now part of the SmartBear suite, and is a good choice for existing SmartBear users.

Best Practices for Cross-Browser Testing

Effective cross-browser testing starts with prioritization. Teams should use analytics and demographics to identify browsers and devices to prioritize for testing. High-priority environments should be tested first, with broader compatibility tests conducted later.
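Prioritization from analytics data can be as simple as sorting environments by traffic and testing the largest ones until a coverage target is reached. The following sketch assumes session counts exported from an analytics tool; the numbers, the environment labels, and the `prioritize` helper are hypothetical.

```python
def prioritize(sessions: dict[str, int], target: float = 0.9) -> list[str]:
    """Pick environments by descending traffic until `target` of sessions is covered."""
    total = sum(sessions.values())
    chosen: list[str] = []
    covered = 0
    for env, count in sorted(sessions.items(), key=lambda kv: kv[1], reverse=True):
        if covered >= target * total:
            break
        chosen.append(env)
        covered += count
    return chosen


# Hypothetical session counts per browser/OS combination.
sessions = {
    "chrome-android": 4200,
    "safari-ios": 2600,
    "chrome-windows": 2100,
    "edge-windows": 600,
    "firefox-windows": 350,
    "samsung-internet": 150,
}

first_wave = prioritize(sessions)                       # test these first
later = [env for env in sessions if env not in first_wave]  # broader pass afterwards
print("High priority:", first_wave)
print("Later:", later)
```

The `target` value is a judgment call: a higher target widens the first wave at the cost of longer test runs.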

Although automated testing improves coverage and speed, real-device testing remains important. It allows teams to identify issues that don’t affect simulated environments. Teams also need to keep their tests updated at all times, accounting for new device releases, OS updates, and the latest browser versions.

Efficient Testing Strategies and Common Challenges

- Identify the browsers, devices, and operating systems used by your intended audience and prioritize them accordingly.

- Use automated testing tools for broad, repeatable test execution, followed by manual testing for user flow validation and visual/UX issues automation may miss.

- Use cloud-based testing platforms to access a wider range of testing environments while reducing infrastructure overhead.

- Gather real user feedback through usability testing and beta sessions, and test for real-world compatibility problems.

Addressing Common Challenges

- Cross-browser automation is complex. Try to use platforms that integrate easily with your CI/CD pipeline and streamline the process as much as possible.

- The number of browser/device combinations is growing, making comprehensive tests impractical. Focus on the most relevant combinations and expand coverage as resources allow.

- Devices, browsers, and operating systems receive regular updates. This means continuous test maintenance is a must. Automated regression testing will help ensure your app stays compatible as the ecosystem evolves.
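The regression check mentioned in the last point boils down to comparing a known-good run with a run taken after an update. Here is a minimal sketch, assuming each run is stored as a mapping from a test label to a pass/fail flag; the labels and the `find_regressions` helper are hypothetical.

```python
def find_regressions(baseline: dict[str, bool], current: dict[str, bool]) -> list[str]:
    """Return tests that passed in the baseline but fail or are missing now."""
    return sorted(
        name
        for name, passed in baseline.items()
        if passed and not current.get(name, False)
    )


# Hypothetical results before and after a browser update.
before_update = {
    "login @ safari": True,
    "checkout @ safari": True,
    "search @ safari": False,  # already failing, so not a new regression
}
after_update = {
    "login @ safari": True,
    "checkout @ safari": False,
    "search @ safari": False,
}

regressions = find_regressions(before_update, after_update)
print("New regressions:", regressions)
```

Separating new regressions from tests that were already failing keeps attention on what the update actually broke.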

Case Studies and Success Stories

- Chegg, an education tech company with millions of worldwide subscribers, utilized BrowserStack’s cross-browser test infrastructure to ensure that their Chegg Student Hub Platform is accessible across a wide range of browser-device-OS combinations.

- eMoney Advisor relied on Sauce Labs to effectively and efficiently scale their mobile, cross-browser, and visual testing to guarantee product quality.

- Airmeet, a virtual events platform, utilized LambdaTest to automate and improve cross-browser testing to ensure that their platform worked seamlessly across technologies for a better user experience.

Development teams have many options available when it comes to choosing a cross-browser testing tool. This important decision, however, doesn’t have a simple answer or best option. Work with your team members to decide what your priorities are in a tool that will help you test across multiple browsers.

By weighing the pros and cons of each option for every project and considering your existing tech stacks and niches, you can find the right tool to automate tests and conduct your assessments more effectively.

FAQ

What are cross-browser tests?

Cross-browser tests involve testing websites, apps, and software across various browsers, operating systems, and devices to identify compatibility and usability issues.

Why is automation important for cross-browser testing?

Automated cross-browser tests help you test more efficiently and effectively across a wider variety of use cases while reducing room for human error.

How do you choose the right tool for cross-browser tests?

Choosing the right cross-browser tool starts with prioritizing compatibility, test automation, cloud-scaling options, and options for real device testing. Every team must find the right tool for their specific needs.

Can cross-browser testing be integrated into continuous integration/continuous deployment (CI/CD) pipelines?

Yes. Teams integrate cross-browser testing into CI/CD pipelines by setting up test runs that are triggered on each code push or merge. This promotes continuous testing and improvement.

What are some common challenges in cross-browser tests, and how can they be addressed?

There are some challenges when you execute test cases in a cross-browser testing environment. They include:

- Too Many Tech Combinations: To resolve this, identify the priority browsers, operating systems, and devices based on user analytics. Test the rest later.

- Updates and Versioning: When configured correctly, automated testing tools can keep tests up to date with little human involvement.

- Infrastructure Maintenance: A cloud-based automated testing tool relieves teams and companies of the need for costly infrastructure.