The International Data Corporation (IDC) estimates that large companies will spend north of $150 billion on GenAI projects by 2027. Most of that money lands in two buckets: cloud cycles and integration work. The second bucket is harder to see because it’s spread across dozens of teams who keep wiring Slack bots to Jira bots to ServiceNow bots, only to discover that each new ‘smart assistant’ lives in its own bubble.

Take a simple example. Your IT service desk rolled out a password-reset bot. A month later, the HR team adopted a shiny “employee self-service” assistant that does, yes, password resets. Both consume tokens. Neither knows what the other just did. Finance notices the duplicate spending only when the monthly AI bill arrives.

That duplication is the fallout of the first wave of enterprise AI. It’s the default when every assistant talks to the company’s systems but not to one another, and the more bots you add, the less efficient the organization becomes.

Why Orchestration, Not Your AI’s IQ, Is The Next Limitation

Most leadership teams have already grasped that adding “more AI” no longer means building one colossal model to do everything. Departments want their own specialists: a triage agent for IT, a product advisor for e-commerce, a policy validation agent for finance. The missing piece is the wiring that lets these specialists coordinate, escalate, and, crucially, explain what they did when auditors show up.

Google’s Agent-to-Agent (A2A) protocol is an early attempt to make that wiring standardized and reusable, much the way standardized power outlets let you plug in anywhere without worrying about the brand of your charger (adapters aside). To quote Google: “The A2A protocol will allow AI agents to communicate with each other, securely exchange information, and coordinate actions on top of various enterprise platforms or applications.”

What A2A Actually Is

Imagine you’ve hired five sharp contractors: HR, IT, finance, legal, and customer service. They each have access to the relevant apps. They’re competent. But they’ve been told never to talk to one another. So HR drafts an offer letter without checking if finance has approved the salary. Customer support spots a bug but can only stick a Post-it on the engineer’s office door. After a few weeks, the team is dealing with missed handoffs, broken workflows, pending audit issues, and some fed-up customers. Everyone is capable on their own; the work just keeps falling through the gaps between them.

That’s where most AI rollouts sit today. Virtual assistants in different corners, no shared memory, no official hand-off channel. A2A defines that channel using a shared message format and a set of rules for how agents should talk to one another. At its core, the protocol handles four things:

- What needs doing (task metadata like type, priority, and deadline)

- Where to look for more detail (context links to relevant data or tools)

- Who’s allowed to do it (authentication tokens with scope-limited access)

- And how to track the whole thing (a task ID that follows the task across agents)

Agents can accept, reject, delegate, or complete tasks based on these messages. They can also report errors or timeout conditions. Since the protocol is stateless (although the tasks themselves maintain state), the agent that receives a task doesn’t need to remember who sent it.
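To make the envelope concrete, here is a minimal Python sketch. It models a task that carries those four pieces of information, plus a handler that accepts or rejects the task using only what’s inside the envelope. The field names, status values, and transition rules are illustrative assumptions, not the schema from the A2A specification, which defines its own task and message objects.

```python
from dataclasses import dataclass, field
from enum import Enum
import uuid


class TaskStatus(Enum):
    # Illustrative lifecycle states (hypothetical; the A2A spec defines its own set).
    SUBMITTED = "submitted"
    ACCEPTED = "accepted"
    REJECTED = "rejected"
    DELEGATED = "delegated"
    COMPLETED = "completed"
    FAILED = "failed"
    TIMED_OUT = "timed_out"


# Hypothetical rules: which status changes are legal from each state.
# States missing from this map (completed, rejected, failed, timed_out) are terminal.
ALLOWED = {
    TaskStatus.SUBMITTED: {TaskStatus.ACCEPTED, TaskStatus.REJECTED, TaskStatus.DELEGATED},
    TaskStatus.ACCEPTED: {TaskStatus.COMPLETED, TaskStatus.FAILED,
                          TaskStatus.TIMED_OUT, TaskStatus.DELEGATED},
    TaskStatus.DELEGATED: {TaskStatus.ACCEPTED, TaskStatus.REJECTED},
}


@dataclass
class TaskEnvelope:
    task_type: str             # what needs doing
    priority: str              # e.g. "high" or "low"
    deadline: str              # ISO 8601 timestamp
    context_links: list[str]   # where to look for more detail
    auth_scopes: set[str]      # who's allowed to do it
    task_id: str = field(default_factory=lambda: str(uuid.uuid4()))  # follows the task
    status: TaskStatus = TaskStatus.SUBMITTED
    history: list[TaskStatus] = field(default_factory=list)

    def transition(self, new_status: TaskStatus) -> None:
        """Move to a new status, refusing transitions the rules don't allow."""
        if new_status not in ALLOWED.get(self.status, set()):
            raise ValueError(f"{self.status.value} -> {new_status.value} not allowed")
        self.history.append(self.status)
        self.status = new_status


def handle(task: TaskEnvelope, can_handle: set[str]) -> TaskEnvelope:
    """A stateless handler: every decision uses only data carried in the envelope."""
    if task.task_type not in can_handle:
        task.transition(TaskStatus.REJECTED)
    else:
        task.transition(TaskStatus.ACCEPTED)
        task.transition(TaskStatus.COMPLETED)
    return task


ticket = TaskEnvelope(
    task_type="password_reset",
    priority="high",
    deadline="2025-07-01T17:00:00Z",
    context_links=["https://helpdesk.example.com/tickets/123"],  # hypothetical URL
    auth_scopes={"identity:reset_password"},
)
handle(ticket, can_handle={"password_reset"})
print(ticket.status.value)  # completed
```

Because the state travels inside the envelope, the handler doesn’t need to know who sent the task. That is the property the paragraph above describes.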

It’s a surprisingly compact envelope, and that simplicity is what makes it useful. Developers don’t need to reinvent a handoff mechanism for every pair of agents. They register each agent, define what kinds of messages it can send or receive, and let A2A take over from there. If it helps, think of it as a service ticket for AI agents.
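In A2A itself, agents advertise what they can do through a published Agent Card. The in-process registry below is a hypothetical stand-in that shows the idea. Each agent declares the task types it accepts, and a router hands each task to an agent that declared it. The class names and the lambda handler are illustrative assumptions, not part of any A2A SDK.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AgentRegistration:
    name: str
    accepts: frozenset[str]             # task types this agent declared it can receive
    handler: Callable[[dict], dict]     # hypothetical stand-in for a network call


class Registry:
    """Hypothetical registry mapping declared task types to agents."""

    def __init__(self) -> None:
        self._agents: list[AgentRegistration] = []

    def register(self, agent: AgentRegistration) -> None:
        self._agents.append(agent)

    def route(self, task: dict) -> dict:
        # Hand the task to the first registered agent that declared its type.
        for agent in self._agents:
            if task["type"] in agent.accepts:
                return agent.handler(task)
        return {"task_id": task["task_id"], "status": "rejected",
                "reason": "no agent accepts this type"}


registry = Registry()
registry.register(AgentRegistration(
    name="it-service-desk",
    accepts=frozenset({"password_reset"}),
    handler=lambda t: {"task_id": t["task_id"], "status": "completed", "by": "it-service-desk"},
))

print(registry.route({"task_id": "t-1", "type": "password_reset"}))
```

Routing by declared task type is also what would let HR’s assistant hand password resets to the IT agent instead of duplicating it.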

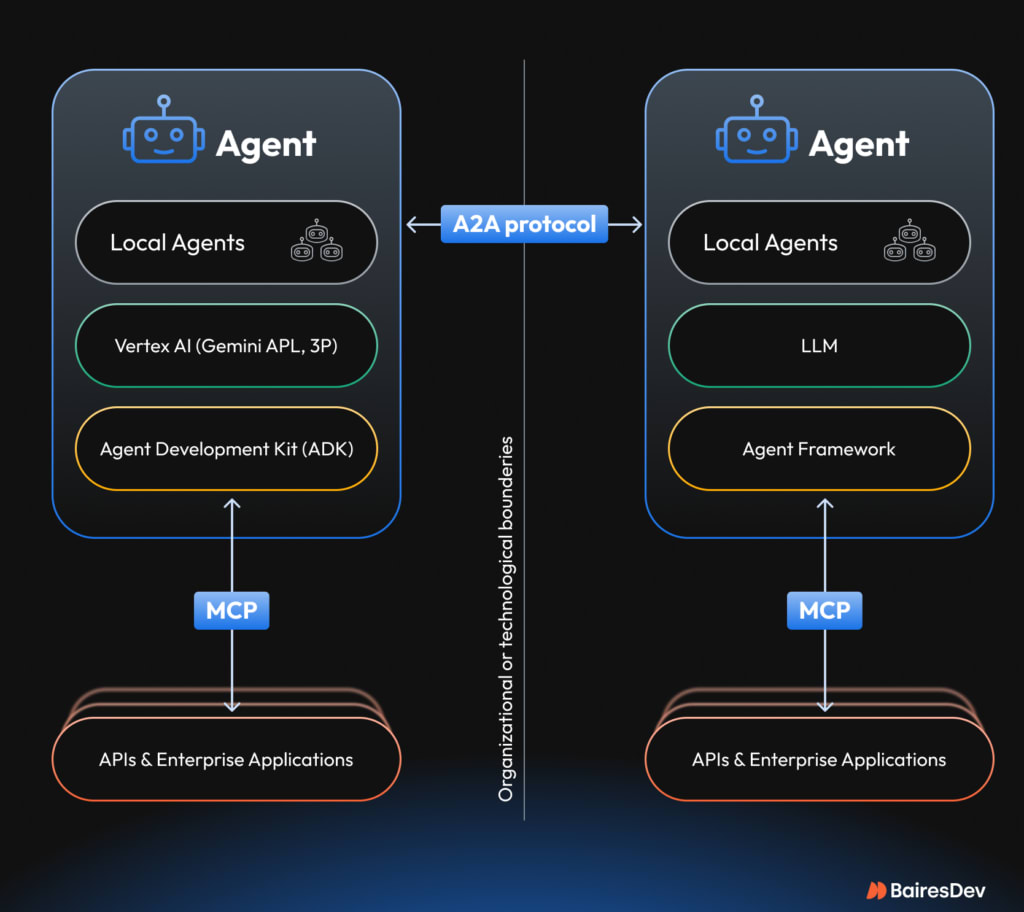

The Difference Between Google’s A2A and Anthropic’s MCP

You may find recent commentary mistakenly framing Google’s A2A and Anthropic’s MCP as competing standards. In practice, they address fundamentally different problems. MCP gives AI-powered applications, not just agents, a standard way to connect to business tools like databases, document stores, or ticketing systems. You can think of it like a USB-C port. It helps models interact with systems through instruction-oriented tasks.

A2A, on the other hand, defines how agents communicate with one another to coordinate goal-oriented work. It’s more like an Ethernet cable, designed for peer-to-peer conversations between agents. One connects AI to tools. The other connects AI agents to each other. They’re not in competition. In fact, Google supports MCP as a community standard while pushing A2A as its own strategic solution for inter-agent collaboration. Most enterprise setups will benefit from having both, since standardized coordination helps reduce the burden of maintaining brittle, custom integrations.

Source: Google A2A Wiki

Why A Senior Exec Should Care Now, Not Next Budget Cycle

First, speed. When agents can escalate or delegate directly, you cut the round-trip time humans spend translating one team’s language into another’s. A support agent can push a crash log straight to an engineering triage agent, which pings the on-call bot.

Second, resilience. Business logic lives in the interaction pattern, not in a single monster prompt. If you sunset a model or swap vendors, you replace one specialist agent, not the entire workflow. The rest of the AI agent grid still talks the same A2A dialect.
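Here is a minimal Python sketch of that idea, with `typing.Protocol` standing in for the shared contract between agents. The two vendor classes and their keyword rules are hypothetical placeholders for real model calls. What matters is that `support_workflow` doesn’t change when one specialist is swapped for another.

```python
from typing import Protocol


class TriageAgent(Protocol):
    """The contract the rest of the workflow depends on (hypothetical)."""

    def triage(self, crash_log: str) -> str: ...


class VendorATriage:
    def triage(self, crash_log: str) -> str:
        # Placeholder for a call to vendor A's model.
        return "p1" if "OutOfMemory" in crash_log else "p3"


class VendorBTriage:
    def triage(self, crash_log: str) -> str:
        # Placeholder for vendor B: different internals, same interface.
        return "p1" if "memory" in crash_log.lower() else "p3"


def support_workflow(agent: TriageAgent, crash_log: str) -> dict:
    # The workflow knows only the interface, never the vendor behind it.
    return {"severity": agent.triage(crash_log), "next": "on-call"}


log_line = "java.lang.OutOfMemoryError at worker-7"
print(support_workflow(VendorATriage(), log_line))
print(support_workflow(VendorBTriage(), log_line))
```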

Third, accountability. Every baton pass should enable an audit trail. Although this isn’t yet a built-in feature of A2A, structured communication in the system could provide regulators with a clear, machine-readable record of system operations. In theory, this would be a valuable tool for ensuring compliance in industries like finance and healthcare.
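Because A2A doesn’t provide an audit trail out of the box, one way to approximate it is to log every handoff as a structured record keyed by the task ID. The sketch below keeps JSON lines in memory. The record fields are our own assumptions, and a real deployment would write to durable, tamper-evident storage.

```python
import json
from datetime import datetime, timezone


class AuditLog:
    """Hypothetical append-only log of handoffs, one JSON record per line."""

    def __init__(self) -> None:
        self.lines: list[str] = []

    def record(self, task_id: str, sender: str, receiver: str, action: str) -> None:
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "task_id": task_id,
            "from": sender,
            "to": receiver,
            "action": action,
        }
        self.lines.append(json.dumps(entry, sort_keys=True))

    def trail(self, task_id: str) -> list[dict]:
        # Reassemble the full path of one task across agents, in order.
        records = (json.loads(line) for line in self.lines)
        return [r for r in records if r["task_id"] == task_id]


audit = AuditLog()
audit.record("t-42", "support-agent", "triage-agent", "delegate")
audit.record("t-42", "triage-agent", "oncall-agent", "delegate")
audit.record("t-99", "hr-agent", "it-agent", "delegate")
for step in audit.trail("t-42"):
    print(step["from"], "->", step["to"], step["action"])
```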

Future Pilot Opportunities for A2A

Although A2A is still early in its rollout, the pattern is promising enough to test in real workflows, especially where multiple agents already exist but don’t yet coordinate. The examples below show how A2A could enable handoffs, alignment, and shared visibility across systems that previously relied on humans to bridge the gap. Keep in mind, however, that implementing these scenarios requires significant agent development and supporting infrastructure.

Adaptive last-mile routing for logistics and transportation

A2A potentially enables real-time agent coordination without human glue. For instance, a traffic-watch agent detects an accident that will delay truck 42. It alerts a routing agent, which checks with a customer-preference agent (“deliver before 6 p.m.?”). If the window is tight, the routing agent coordinates with a dispatch agent to reroute, then leaves a note for the customer-service bot so messages stay consistent. The result is fewer failed deliveries, better fleet utilization, and no human needed to connect the dots. Note that this scenario requires a robust agent ecosystem, integrations that may not yet be standardized, and the right specialists to build them.
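The decision at the heart of that flow is small enough to sketch. Below, the routing agent compares the delayed ETA against the customer’s delivery window and decides whom to message. Every agent here is a hypothetical in-process function, and the 120-minute window is a hard-coded assumption. A real system would send these messages over A2A rather than return them as a list.

```python
from dataclasses import dataclass


@dataclass
class DelayAlert:
    truck_id: int
    delay_minutes: int
    current_eta_minutes: int  # minutes from now until delivery, before the delay


def customer_window_minutes(truck_id: int) -> int:
    # Hypothetical customer-preference agent: "deliver before 6 p.m."
    # expressed as minutes remaining from now. Hard-coded for the sketch.
    return 120


def routing_agent(alert: DelayAlert) -> list[tuple[str, str]]:
    """Return the messages the routing agent would send, as (recipient, body) pairs."""
    new_eta = alert.current_eta_minutes + alert.delay_minutes
    window = customer_window_minutes(alert.truck_id)
    messages = []
    if new_eta > window:
        # The window is at risk: ask dispatch to reroute.
        messages.append(("dispatch", f"reroute truck {alert.truck_id}"))
        note = f"truck {alert.truck_id} rerouted to hold the delivery window"
    else:
        note = f"truck {alert.truck_id} delayed {alert.delay_minutes} min, still on time"
    # Either way, customer service gets the same story the customer will hear.
    messages.append(("customer_service", note))
    return messages


print(routing_agent(DelayAlert(truck_id=42, delay_minutes=45, current_eta_minutes=90)))
```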

Dynamic shelf restocking in-store

A2A could let multiple agents reason over inventory together. For example, a shelf-sensor agent notices that milk in aisle seven is running low faster than forecast. It checks in with a pricing agent, which also sees yogurt moving slowly. Together, they decide on a two-for-one promo that will clear the yogurt before it expires and free cold-chain space for milk. A signage agent updates the digital shelf tags, and supply chain places a smaller reorder. Because the agents reach the decision together, the result is less waste, higher margins, and no store manager frantically skimming supply dashboards to approve markdowns. Achieving this level of coordination will require agent development and real-time data integration.

Real-time room turnover in hospitality

A2A could synchronize task handoffs across operational roles. Imagine that a guest checks out at 9 a.m. instead of at the reserved noon. The checkout bot notifies the housekeeping bot, which pings maintenance to confirm whether the room is ready. If it’s clear, the front-desk bot lists the room for early check-in and upsells an arriving guest. This could significantly improve guest satisfaction, especially for travelers arriving on red-eye flights. Reaching this level of automation is possible, but it would take significant agent development.

Coordinated pre-authorization for hospitals

A specialist referral lands in the EMR (electronic medical record). A scheduling agent flags the case and opens a ticket with an insurance pre-authorization agent. Insurance rules are complicated and messy, but that back-and-forth is exactly what structured handoffs can help organize. Once the pre-authorization is approved (which may involve one or more human-in-the-loop checks), downstream agents for lab prep and patient communication are notified. The patient gets a confirmed appointment with fewer handoffs, less time on the phone, and a lower risk of a procedure being scheduled before the green light.

The benefit isn’t skipping steps. It’s sequencing them more cleanly, so clinical and administrative staff spend less time chasing paperwork and more time on care. A2A’s real-world adoption in healthcare is still nascent, but the opportunity is large. Implementing scenarios like this will require close integration between hospital systems and the agents acting on them.
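The sequencing rule can be expressed as a gate: nothing downstream fires until the pre-authorization is settled. In this hypothetical sketch, `reviewer_decision` stands in for the human-in-the-loop check. The message strings are placeholders, not any real EMR or payer format.

```python
from enum import Enum
from typing import Optional


class PreAuth(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    DENIED = "denied"


def preauth_agent(reviewer_decision: Optional[bool]) -> PreAuth:
    """Hypothetical insurance agent whose answer is gated by a human reviewer.

    None means the human-in-the-loop check hasn't happened yet.
    """
    if reviewer_decision is None:
        return PreAuth.PENDING
    return PreAuth.APPROVED if reviewer_decision else PreAuth.DENIED


def scheduling_agent(case_id: str, reviewer_decision: Optional[bool]) -> list[str]:
    """Notify downstream agents only once the pre-authorization is settled."""
    status = preauth_agent(reviewer_decision)
    if status is PreAuth.APPROVED:
        return [f"lab_prep:{case_id}", f"patient_comms:{case_id}:confirmed"]
    if status is PreAuth.DENIED:
        return [f"patient_comms:{case_id}:needs_follow_up"]
    return []  # still pending: nothing downstream fires, so nothing is booked early


print(scheduling_agent("case-17", None))  # []
print(scheduling_agent("case-17", True))  # ['lab_prep:case-17', 'patient_comms:case-17:confirmed']
```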

Coordinated outage response for utilities

A2A could support distributed responses in time-critical infrastructure. A smart meter reports a sudden outage on one street. An outage-detection agent corroborates it with two other reports and confirms a fault. It immediately notifies a dispatch agent, which finds the closest available crew and sends the job. At the same time, a customer-communication agent texts affected residents with an ETA. A2A keeps these agents talking even though they pull from different data systems and response models. The result is fewer calls to the call center, faster restoration, and more satisfied customers. Like the other scenarios, this requires integration across several systems, and production-grade implementations are still emerging.
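Here is a minimal sketch of the confirm-then-fan-out step. It assumes a fault is confirmed once three reports (the meter plus two corroborating ones) name the same street. The threshold, the crew data, and the message format are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Crew:
    name: str
    km_away: float
    available: bool


CONFIRM_THRESHOLD = 3  # assumption: the meter report plus two corroborating reports


def outage_agent(street: str, reports: list[str], crews: list[Crew]) -> list[tuple[str, str]]:
    """Hypothetical outage-detection agent; returns (recipient, message) pairs."""
    matching = [r for r in reports if r == street]
    if len(matching) < CONFIRM_THRESHOLD:
        return []  # not confirmed yet, so nobody is paged
    available = [c for c in crews if c.available]
    if not available:
        return [("customer_comms", f"outage on {street}; crew ETA pending")]
    crew = min(available, key=lambda c: c.km_away)  # closest available crew
    # Dispatch and customer comms are notified in the same step.
    return [
        ("dispatch", f"send {crew.name} to {street}"),
        ("customer_comms", f"outage on {street}; crew {crew.name} assigned"),
    ]


crews = [Crew("north", 5.0, True), Crew("south", 2.0, True), Crew("east", 1.0, False)]
reports = ["Elm St", "Oak Ave", "Elm St", "Elm St"]
for recipient, message in outage_agent("Elm St", reports, crews):
    print(recipient, "|", message)
```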

What This Means for Your Organization and Hiring Plan

A2A shifts how AI systems are structured. Instead of one generalist assistant jammed with a 6k-token prompt, you design a team of narrowly scoped agents, each with a clear interface. That creates a new kind of design problem: who defines the boundaries, the escalation paths, the fallback when an agent times out?

Roles like AI orchestration lead (or the less grand “agent workflow architect”) may well emerge soon: someone who sketches out which tracks each AI assistant runs on, when to hand off the baton, and how to avoid collisions. Think train dispatcher, not train conductor.

Your engineering team also needs people whose skills span LLM prompts, APIs, and policy enforcement. Building an A2A grid is closer to service choreography than to prompt engineering. Tokens matter, but so do retry logic, idempotency, and trace aggregation.
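The following sketch shows why retry logic and idempotency go together. In this hypothetical example, the receiving agent treats the task ID as an idempotency key. If a response is lost after the work was done, the sender retries, and the receiver returns the stored result instead of creating a second account. The class and function names are ours, not part of any A2A SDK.

```python
import time
from typing import Callable


class ProvisioningAgent:
    """Hypothetical receiving agent that uses the task ID as an idempotency key."""

    def __init__(self) -> None:
        self.results: dict[str, str] = {}  # task ID -> stored result
        self.side_effects = 0              # how many accounts were actually created

    def handle(self, task_id: str) -> str:
        if task_id in self.results:
            # Duplicate delivery: return the stored result and do no new work.
            return self.results[task_id]
        self.side_effects += 1  # stand-in for creating an account
        result = f"account-for-{task_id}"
        self.results[task_id] = result
        return result


def send_with_retry(call: Callable[[], str], attempts: int = 3, base_delay: float = 0.01) -> str:
    """Retry a handoff on ConnectionError with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of retries: surface the failure to a fallback rule
            time.sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable: the loop either returns or re-raises")


agent = ProvisioningAgent()
calls = {"n": 0}


def flaky_handoff() -> str:
    # Simulated network: the first response is lost after the receiver did the work.
    result = agent.handle("task-7")
    calls["n"] += 1
    if calls["n"] == 1:
        raise ConnectionError("response lost in transit")
    return result


print(send_with_retry(flaky_handoff), agent.side_effects)  # account-for-task-7 1
```

Without the idempotency check, the retry would provision a second account. With it, `side_effects` stays at 1.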

On the upside, rollouts get simpler. You can launch a single agent in one department, see if it behaves, and then let it start exchanging messages with a neighboring agent. A few small failures along the way are normal and help you build confidence as you expand.

Another side effect: vendor diversity stops being scary. If the IT bot uses AWS for cost reasons and finance insists on GPT-4o for its audit features, that’s fine. As long as both speak A2A, the message envelope stays the same.

Trade-offs and Things to Keep in Mind

Even the most promising protocols bring some friction, and A2A is no exception. Here are the operational details that determine whether your pilot scales or stalls.

1- The specs are still evolving.

A2A is new and it’s going through updates. Version 0.2 introduced stateless interactions and a Python SDK, which is a big usability step. If your teams build on a version that changes next quarter, something might break. For now, treat it like any emerging tech: isolate your pilots and use it where you can experiment safely. In other words, don’t tie it into payroll just yet.

2- Handoffs add a little delay.

Every AI baton pass adds milliseconds. In most business workflows, like coordinating IT tickets or employee onboarding, it’s a negligible time cost. But if your use case demands real-time speed (think: fraud detection or high-frequency trading), you might still want a single, fast model handling the whole job.

3- Someone needs to manage permissions.

A2A supports fine-grained access controls, which is great for compliance, but it also means someone has to keep that permissions map up to date. If you’re automating across dozens of tools and departments, the work adds up. Most teams don’t have the right tooling in place yet, so it’s worth budgeting time (and people) for this.

4- Security needs more depth.

A2A’s default security model isn’t quite ready for high-stakes payloads like healthcare or finance data. The current guidance includes rotating tokens, scoped permissions, and short-lived consent for multi-step tasks. All doable, but there’s no “just plug it in” moment yet. If you’re piloting in regulated environments, you’ll need a proper review with your security leads and possibly some custom policy wrappers.
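The guidance on scoped permissions and short-lived consent comes down to two checks at every handoff: does the token carry the right scope, and has it expired? The sketch below is a hypothetical illustration. The scope names, the `PERMISSIONS` map, and the TTL are assumptions, and a production system would rely on a vetted identity provider rather than hand-rolled tokens.

```python
import secrets
import time
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ScopedToken:
    scopes: frozenset[str]
    expires_at: float  # Unix timestamp after which the token is useless
    value: str = field(default_factory=lambda: secrets.token_urlsafe(16))


# Hypothetical permissions map: the maximum scopes each agent may ever hold.
PERMISSIONS: dict[str, frozenset[str]] = {
    "onboarding-agent": frozenset({"slack:invite", "email:create"}),
    "finance-agent": frozenset({"ledger:read"}),
}


def issue_token(agent: str, requested: set[str], ttl_seconds: float, now: float) -> ScopedToken:
    """Grant a short-lived token, but only for scopes the permissions map allows."""
    allowed = PERMISSIONS.get(agent, frozenset())
    denied = requested - allowed
    if denied:
        raise PermissionError(f"{agent} may not hold {sorted(denied)}")
    return ScopedToken(scopes=frozenset(requested), expires_at=now + ttl_seconds)


def authorize(token: ScopedToken, needed_scope: str, now: float) -> bool:
    """Check both conditions at each handoff: unexpired and correctly scoped."""
    return now < token.expires_at and needed_scope in token.scopes


now = time.time()
token = issue_token("onboarding-agent", {"email:create"}, ttl_seconds=300, now=now)
print(authorize(token, "email:create", now))        # True
print(authorize(token, "email:create", now + 600))  # False: expired
print(authorize(token, "slack:invite", now))        # False: scope not requested
```

The permissions map is the piece someone has to keep current. Every new agent or tool adds rows to it.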

5- Coordination gets complex at scale.

The moment you move past pilots, choreography becomes real work. Aligning how agents interpret task types, defining fallback behavior, and tracing flows across multiple vendors is closer to systems design than to prompt engineering. New wrinkles in governance and error handling show up too, especially when you combine A2A with a tool-access layer like MCP.

6- Bots can break mid-stream.

Agents can fail in the middle of a task, such as during an IT provisioning workflow. If one agent stalls or crashes, it may leave a user half-onboarded, with access to Slack but no email. That’s annoying. You’ll need fallback rules and some light monitoring to catch these edge cases.
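One common fallback rule is compensation, often called the saga pattern: if a later step fails, undo the steps that already succeeded so nobody is left half-onboarded. This is a general distributed-systems technique, not something A2A provides. The step names and the simulated failure below are hypothetical.

```python
from typing import Callable

# (step name, do action, undo action); a hypothetical shape for the sketch.
Step = tuple[str, Callable[[], None], Callable[[], None]]


def run_with_compensation(steps: list[Step]) -> tuple[bool, list[str]]:
    """Run steps in order; on failure, undo finished steps in reverse order.

    Returns (succeeded, event log).
    """
    done: list[Step] = []
    events: list[str] = []
    for step in steps:
        name, do, _undo = step
        try:
            do()
            done.append(step)
            events.append(f"done:{name}")
        except Exception as exc:
            events.append(f"failed:{name}:{exc}")
            for undo_name, _do, undo in reversed(done):
                undo()
                events.append(f"undone:{undo_name}")
            return False, events
    return True, events


accounts: set[str] = set()


def create_email() -> None:
    # Simulated mid-stream failure of the email-provisioning agent.
    raise TimeoutError("email agent did not respond")


steps: list[Step] = [
    ("slack", lambda: accounts.add("slack"), lambda: accounts.discard("slack")),
    ("email", create_email, lambda: accounts.discard("email")),
]
ok, events = run_with_compensation(steps)
print(ok, accounts)  # False set()
print(events)
```

Automatic rollback isn’t always the right call. For some workflows, pausing and alerting a human is the safer fallback.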

These are the reasons some AI projects quietly fizzle after the demo: coordination, versioning, and reliability didn’t get enough upfront attention. Handle them well, and you’ll have a system that not only works but keeps working under pressure, instead of landing in post-launch purgatory.

The Quiet Shift You Should Plan For

On the surface, A2A is just a message envelope. Behind the scenes, it’s the missing puzzle piece that has engineers genuinely excited, an early Christmas present for developers. It tackles coordination, the ceiling most AI pilots hit after launch. Expect big vendors such as ServiceNow, Salesforce, and Atlassian to add “A2A-compatible” to their release notes over the next year. When that happens, not supporting the protocol will feel like running a website that can’t load in Chrome.

If you’re experimenting with AI in finance, onboarding, customer support, or IT, add A2A to the technical due diligence sheet. It doesn’t lock you into a platform, but it gives you the option to grow an ecosystem that acts less like a swarm of hyperactive bots and more like a team that knows when to pass the ball.

Start small with two agents that complete one handoff. Watch how they interact. If it works, you’ll see faster cycles and better audit trails. If it doesn’t, you’ve learned something valuable without sinking time into a big build.

Either way, the real friction isn’t in what your agents can do individually. It’s in how they hand off work, track progress, and stay aligned. It’s next-level coordination, so you can finally celebrate those efficiency gains you’ve been promised.