Agentic AI has become one of the most anticipated shifts in software. It’s gone from a promising idea to a priority for teams trying to level up their workflows.

We’ve been watching the Agentic AI space since it was just background noise. Now, it’s everywhere. Ninety-three percent of IT leaders are already exploring AI agents, while boardrooms are pushing hard to get agents into production. Yet with the supply of agentic AI models outpacing demand, security worries mounting, and nearly 40% of agentic projects predicted to fail, it’s time to ask two key questions. First, are AI agents really what your operation needs? And second, what does it take to make them work in the real world?

Before talking about tools or timelines, it’s worth discussing in depth what agentic AI is, how it works, what its building blocks are, and whether it makes sense for your business.

What is Agentic AI and How It Works: The Architecture Behind Autonomy

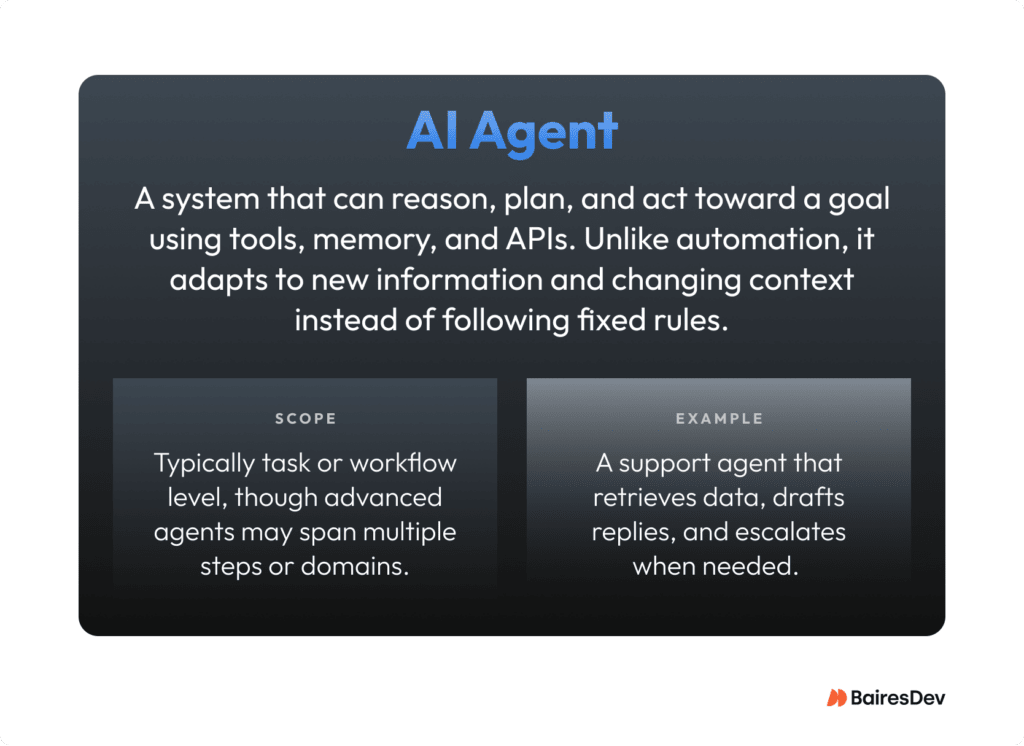

Agentic AI refers to systems that can autonomously plan, act, and adapt toward a goal, not just once, but continuously, with minimal human input. These systems operate with purpose. They’re built on three core principles: autonomy, adaptability, and goal orientation.

Consider agentic AI a network of planners and doers. Each agent is designed with a clear goal, a set of tools it can use, and the ability to act toward that goal without constant oversight. What keeps them from clashing is the underlying structure. This logic tells them when to act, how to share context, and how to stay in sync.

Let’s apply this to a support agent. It can pull relevant customer data from your CRM, draft a personalized response, and loop in a human rep only when the issue falls outside its scope. That’s a big leap from traditional automation, which just follows a fixed script. Agents can adjust, prioritize, and escalate depending on the scenario.

How Agentic AI Works

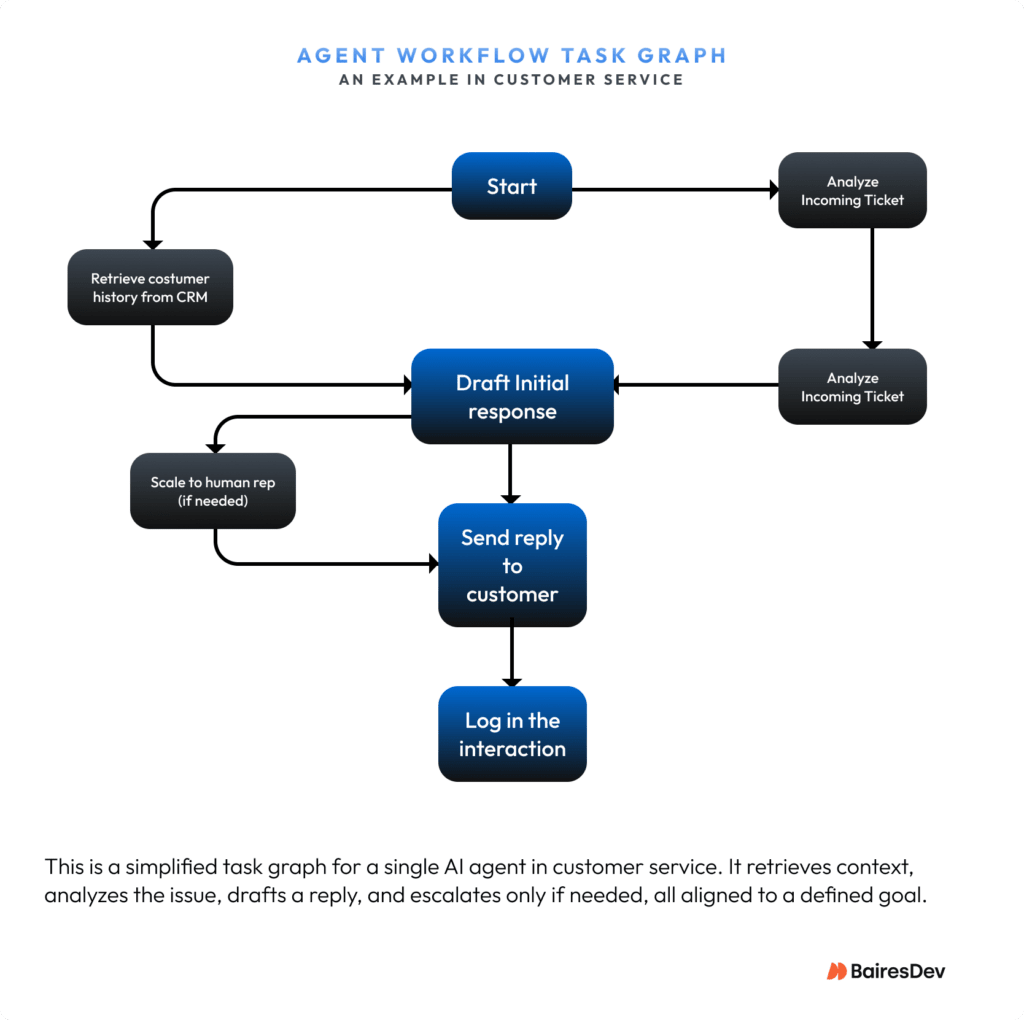

Agentic AI runs on a few core building blocks: states (system conditions), dependencies (task relationships), and goals (desired outcomes). Together, they form what’s known as a task graph, a kind of blueprint that maps out what needs to happen, in what order, and how data flows between steps.

Think of it like managing a large project. The task graph is your project plan. It shows how tasks connect, what stage they’re in, and where things might get stuck. Then there’s the orchestration layer. This is your project manager. It keeps everything moving, tracks who’s doing what, and makes sure each agent plays by the same rules.

A well-designed system keeps this plan dynamic and visible. It knows which tasks are connected, what stage each one is in, and how they’re progressing. That visibility is what makes it possible to trace and debug workflows like any other type of software.

Let’s make it more tangible. Say you’re managing customer onboarding. One agent sends welcome emails, another sets up the account, and a third watches for drop-off signals to trigger reminders. Each agent handles a specific goal. The task graph defines the order. Orchestration makes sure every step happens in sync.
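To make the onboarding example concrete, here is a minimal Python sketch of a task graph. It assumes the account is created first, the welcome email follows, and drop-off monitoring starts last; that order is our assumption, as are the `Task` class, the task names, and the `run_onboarding` function. The standard library's `graphlib.TopologicalSorter` stands in for a real orchestration layer.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter


@dataclass
class Task:
    name: str
    goal: str                          # desired outcome of this step
    depends_on: tuple[str, ...] = ()   # tasks that must finish first
    state: str = "pending"             # pending -> running -> done


def run_onboarding(tasks: dict[str, Task]) -> list[str]:
    """Run each task after its dependencies; return the order that was used."""
    graph = {name: set(task.depends_on) for name, task in tasks.items()}
    order = []
    for name in TopologicalSorter(graph).static_order():
        task = tasks[name]
        task.state = "running"
        # A real agent would act here (create the account, send the email...).
        task.state = "done"
        order.append(name)
    return order


# Hypothetical onboarding graph: one agent per task.
tasks = {
    "create_account": Task("create_account", goal="account exists"),
    "send_welcome_email": Task(
        "send_welcome_email", goal="user is greeted",
        depends_on=("create_account",),
    ),
    "watch_drop_off": Task(
        "watch_drop_off", goal="stalled users get a reminder",
        depends_on=("send_welcome_email",),
    ),
}
order = run_onboarding(tasks)
print(order)
```

Because the dependencies live in data rather than inside each agent, the order and state of every step stay visible, which is what makes the workflow traceable.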

This is how agentic systems plan, act, and adapt, keeping performance steady and costs predictable without constant human oversight.

Architecture Sets the Ground Rules for Scale, Cost, and Stability

Before you launch a single agent, you need to get the architecture right. This is where AI strategy meets engineering. This is where you define how the system is structured, how components talk to each other, and what’s responsible for what.

In agentic systems, good architecture starts with clear boundaries: what each agent can and can’t do, how it accesses tools and data, and how it fits into the bigger picture. If your team can’t summarize an agent’s role in one sentence, the design probably isn’t ready for production.

When those boundaries blur, things get messy fast. Agents overlap, duplicate work, or act on conflicting goals. That might not break the system immediately, but as you try to scale, those overlaps turn into reliability issues, mounting costs, and frustrated teams.

In the rush to get something working, some companies may skip architectural guardrails or bolt on governance after the fact. It works in a demo. Then it breaks under real workloads.

That’s what we call the infrastructure iceberg. It is all the behind-the-scenes complexity that gets ignored at first: how agents authenticate, how they share memory, how changes get audited. The more autonomy you introduce, the bigger that iceberg gets.

Strong architecture plans for this upfront. Engineers define agent boundaries, design shared memory flows, and bake governance into every layer, not just on top. Weak architecture hides complexity. And when complexity is ignored, agentic systems break when you need them to scale.

Architecture Choices That Make or Break Agentic Systems

With the right architecture in place and boundaries clearly drawn, the real test comes down to design. Three choices, in particular, often decide whether an agentic system runs smoothly or runs into trouble.

- Memory & the data plane: This is the system’s collective memory (short and long term) and where that information lives. Think of it as a corporate knowledge management system for your AI. Poor memory design leads to two big problems: agents forget important context, or they retain too much irrelevant data. In a logistics workflow, that might mean forgetting recent delays or duplicating tasks due to outdated state, issues that get more expensive as you scale.

Strong architectures use structured retrieval so agents pull the right data at the right time, keeping responses consistent and efficient. Later, when you measure performance, this shows up in metrics like knowledge retention and utilization, a sign that agents use information effectively instead of relearning it from scratch.

- Single-agent vs. multi-agent: A single agent works like a generalist. It’s easier to manage but limited in scope. A multi-agent system resembles a team of specialists, faster and more capable but harder to coordinate. For something simple, like generating personalized reports, a single agent works. But for something like coordinating routing, fleet maintenance, and customer comms across a logistics network, you’ll want multiple agents collaborating under shared rules.

- Tool use & execution control: Agents constantly reach out to external systems, including APIs, databases, and internal applications, to get work done. That tool use needs guardrails. Without proper execution control, agents can overload systems, trigger the wrong actions, or expose sensitive data. That’s why agent-tool connections need structure. Many teams are moving away from one-off integrations and building centralized layers, often MCP servers, to manage access and enforce compliance.

Your agents are only as effective as the systems they plug into. Build those connections to last.
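As a rough illustration of execution control, the sketch below routes every tool call through one gateway that enforces an allowlist and a per-agent call budget. The `ToolGateway` class, the agent and tool names, and the budget of two calls are all hypothetical; a production layer would add authentication, time-based rate limits, and logging.

```python
from collections import defaultdict
from typing import Any, Callable


class ToolGateway:
    """Single entry point every agent uses to call a tool (hypothetical)."""

    def __init__(self, max_calls_per_agent: int) -> None:
        self._tools: dict[str, Callable[..., Any]] = {}
        self._allowed: dict[str, set[str]] = defaultdict(set)
        self._calls: dict[str, int] = defaultdict(int)
        self._max_calls = max_calls_per_agent

    def register(self, tool: str, func: Callable[..., Any]) -> None:
        self._tools[tool] = func

    def allow(self, agent: str, tool: str) -> None:
        if tool not in self._tools:
            raise KeyError(f"unknown tool: {tool}")
        self._allowed[agent].add(tool)

    def call(self, agent: str, tool: str, **kwargs: Any) -> Any:
        # Guardrail 1: an agent can only use tools it was explicitly granted.
        if tool not in self._allowed[agent]:
            raise PermissionError(f"{agent} may not call {tool}")
        # Guardrail 2: a call budget keeps one agent from overloading a system.
        if self._calls[agent] >= self._max_calls:
            raise RuntimeError(f"{agent} exceeded its call budget")
        self._calls[agent] += 1
        return self._tools[tool](**kwargs)


gateway = ToolGateway(max_calls_per_agent=2)
gateway.register("get_route", lambda origin, dest: f"{origin} -> {dest}")
gateway.register("issue_refund", lambda order_id: f"refunded {order_id}")
gateway.allow("routing_agent", "get_route")

route = gateway.call("routing_agent", "get_route", origin="Depot", dest="Dock 4")
print(route)
```

Here the routing agent can plan routes but would get a `PermissionError` if it tried `issue_refund`, because that tool was never granted to it.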

Let’s tie it all together with a quick example. Say your logistics team starts with a single agent to plan delivery routes. It works fine at first. But once volume grows, the team adds more agents for maintenance and customer updates. Without good memory design, agents forget that certain vehicles are in repair or, worse, double-book routes because task history isn’t shared.
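The failure mode in this example can be sketched in a few lines. The `FleetState` class below is a hypothetical shared store that both the maintenance agent and the routing agent read and write; the vehicle and route names are made up, and a real system would back this with a database and proper locking.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FleetState:
    """Shared state that every agent reads and writes (hypothetical)."""
    in_repair: set[str] = field(default_factory=set)
    assignments: dict[str, str] = field(default_factory=dict)  # route -> vehicle

    def mark_in_repair(self, vehicle: str) -> None:
        self.in_repair.add(vehicle)

    def assign(self, route: str, fleet: list[str]) -> Optional[str]:
        # A route that already has a vehicle is never booked twice.
        if route in self.assignments:
            return self.assignments[route]
        busy = set(self.assignments.values())
        for vehicle in fleet:
            if vehicle not in self.in_repair and vehicle not in busy:
                self.assignments[route] = vehicle
                return vehicle
        return None  # nothing free: the caller should wait or escalate


fleet = ["van-1", "van-2"]
state = FleetState()
state.mark_in_repair("van-1")            # written by the maintenance agent
first = state.assign("route-A", fleet)   # routing agent skips the van in repair
again = state.assign("route-A", fleet)   # repeat request reuses the booking
second = state.assign("route-B", fleet)  # no free vehicle is left
print(first, again, second)
```

The point of the design is that repair status and task history live in one place, so no agent has to remember them on its own.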

With structured retrieval and tools routed through an MCP server, those agents can collaborate cleanly, scale with demand, and stay compliant the whole time.

That brings us to a growing standard behind many of these centralized layers: the Model Context Protocol (MCP).

MCP isn’t just about connecting tools — it’s about doing it safely, scalably, and in a way that supports real-world governance. Here’s what you need to know.

Connecting Agents with MCP

At the system level, you need a way for agents to use tools and data safely. This is where the Model Context Protocol (MCP) comes in as a standard “USB-C for agents.” It lets apps connect to tools, data sources, and workflows through a common interface. Without it, companies end up stitching together fragile one-off integrations that don’t scale. With MCP, you get consistency and governance across every agent interaction.
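To give a feel for that common interface, here is a simplified, in-process sketch of the JSON-RPC 2.0 message shapes MCP uses for listing and calling tools (`tools/list` and `tools/call`). It is not an MCP implementation: it skips the initialization handshake, transports, and schema validation, and a real deployment would use an MCP SDK. The `lookup_customer` tool, its data, and the `handle` function are hypothetical.

```python
import json

# Hypothetical tool registry; a real MCP server would expose tools via an SDK.
TOOLS = {
    "lookup_customer": {
        "description": "Return the plan for a customer id.",
        "inputSchema": {
            "type": "object",
            "properties": {"customer_id": {"type": "string"}},
            "required": ["customer_id"],
        },
        "handler": lambda args: f"customer {args['customer_id']}: plan=pro",
    }
}


def handle(raw_request: str) -> str:
    """Answer one JSON-RPC 2.0 request using MCP-style result shapes."""
    request = json.loads(raw_request)
    reply = {"jsonrpc": "2.0", "id": request["id"]}
    method = request["method"]
    if method == "tools/list":
        reply["result"] = {"tools": [
            {"name": name, "description": tool["description"],
             "inputSchema": tool["inputSchema"]}
            for name, tool in TOOLS.items()
        ]}
    elif method == "tools/call":
        params = request["params"]
        tool = TOOLS.get(params["name"])
        if tool is None:
            reply["error"] = {"code": -32602,
                              "message": f"Unknown tool: {params['name']}"}
        else:
            text = tool["handler"](params.get("arguments", {}))
            reply["result"] = {"content": [{"type": "text", "text": text}],
                               "isError": False}
    else:
        reply["error"] = {"code": -32601,
                          "message": f"Method not found: {method}"}
    return json.dumps(reply)


call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "lookup_customer",
                   "arguments": {"customer_id": "c-42"}}}
response = json.loads(handle(json.dumps(call)))
print(response["result"]["content"][0]["text"])
```

Every tool is described and invoked the same way, so access rules and logging can be applied once at this layer instead of once per integration.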

Major players (including Microsoft) are backing MCP to enable cross-agent collaboration and memory across vendors. For enterprises, MCP is quickly becoming foundational. It brings agent operations in line with the same compliance, observability, and access control you’d expect from any production-grade system.

Orchestration: How Agents Think and Work Together

Once those agents are in place, orchestration becomes the backbone of agentic AI. It’s the layer that manages how agents communicate, share context, and coordinate tasks without colliding or duplicating work. It decides who acts when, how outputs become inputs, and how progress is tracked.

In well-designed systems, orchestration is stateful. That means it keeps track of what each agent is doing, what it depends on, and how far it’s progressed. That’s what keeps workflows like customer triage or supply chain updates running smoothly, even when things shift midstream.

We’re also seeing a new role emerge: the Orchestration Engineer. This person designs and maintains agent workflows, defines how information moves, and sets the rules for evaluation. For business leaders, it’s an early signal that managing AI systems is starting to look a lot like managing digital teams.

Agents Decide Their Next Move Through Reasoning

Reasoning is what enables agents to think through their next step. It’s where they plan, evaluate, and adjust, especially when the path forward isn’t obvious. Many agents today use LLMs (large language models) to power this layer. These models help the agent understand context, break down complex tasks, and adapt when things don’t go as expected.

Take our onboarding example. If a user skips email verification, the agent uses reasoning to decide what to do. Should it send a reminder, pause the flow, or escalate to support? Orchestration manages the workflow; reasoning helps the agent choose the right move within it.
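The split between orchestration and reasoning can be sketched as follows. The reasoner here is a rule-based stub standing in for an LLM call, and its thresholds (three reminders, 72 hours) are invented for illustration, as are the `OnboardingContext` fields and action names. The workflow side validates whatever the reasoner returns before acting on it.

```python
from dataclasses import dataclass
from typing import Callable

ALLOWED_ACTIONS = {"send_reminder", "pause_flow", "escalate_to_support"}


@dataclass
class OnboardingContext:
    email_verified: bool
    reminders_sent: int
    hours_since_signup: float


def stub_reasoner(ctx: OnboardingContext) -> str:
    """Stand-in for an LLM call; the thresholds are invented for illustration."""
    if ctx.reminders_sent == 0:
        return "send_reminder"
    if ctx.reminders_sent < 3 and ctx.hours_since_signup < 72:
        return "pause_flow"
    return "escalate_to_support"


def next_action(ctx: OnboardingContext,
                reasoner: Callable[[OnboardingContext], str]) -> str:
    """Workflow step: ask the reasoner, then validate its answer."""
    if ctx.email_verified:
        return "continue_flow"  # nothing to decide
    action = reasoner(ctx)
    # Never execute an action the workflow does not recognize.
    return action if action in ALLOWED_ACTIONS else "escalate_to_support"


new_user = OnboardingContext(email_verified=False, reminders_sent=0,
                             hours_since_signup=2.0)
stalled = OnboardingContext(email_verified=False, reminders_sent=3,
                            hours_since_signup=96.0)
print(next_action(new_user, stub_reasoner))
print(next_action(stalled, stub_reasoner))
```

Keeping the reasoner pluggable means it can be swapped for a model-backed version later, while the set of actions the workflow will accept stays fixed.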

Governance Is Built In, Not Bolted On

As systems grow more autonomous (yes, this thought can be a bit daunting), control can’t be an afterthought. You don’t want AI agents deleting key files, hallucinating invoices, or triggering unexpected API calls. Guardrails need to be there from the start to protect data, ensure accountability, and make decision-making transparent. For business leaders, three pillars matter most:

- Role-based access & sandboxing: Think of each agent as a team member with a clear job description: what it’s allowed to see and do, nothing more. Access control handles the “who,” but sandboxing sets the boundaries for how it executes. That way, if something breaks, the damage stays contained. It keeps the blast radius small.

Salesforce’s Agentforce is a good example. It supports role-based access, policy enforcement, and data redaction to keep agents in their lane.

- Observability & audit trails: You cannot govern what you cannot trace. Agentic systems need the same level of visibility as any enterprise-grade app. They need full logs of what happened, why it happened, and what went off-script. Standards like OpenTelemetry are already shaping how we get there. We’re talking about control and performance. The better your observability, the faster you can link actions to value.

- Regulatory alignment: Tech often outpaces legislation, but when rules catch up, systems that behave like black boxes won’t pass muster. Frameworks like the EU AI Act demand logging, human-in-the-loop oversight, clarity of decision pathways, and documentation. In short: build for auditability and trust from day one.
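The three pillars above can be combined in one small sketch: a permission check per role, a human approval gate for sensitive actions, and an append-only audit record for every decision. The role names, actions, and log fields are hypothetical, and the in-memory list stands in for a real log pipeline such as one built on OpenTelemetry.

```python
import json
from datetime import datetime, timezone
from typing import Optional

# Hypothetical roles and permissions.
ROLE_PERMISSIONS = {
    "support_agent": {"read_ticket", "draft_reply"},
    "billing_agent": {"read_invoice", "issue_refund"},
}
NEEDS_HUMAN_APPROVAL = {"issue_refund"}
audit_log: list[str] = []  # append-only; one JSON line per decision


def authorize(role: str, action: str, reason: str,
              approved_by: Optional[str] = None) -> bool:
    """Decide whether an agent may act, and record the decision either way."""
    if action not in ROLE_PERMISSIONS.get(role, set()):
        decision = "denied"
    elif action in NEEDS_HUMAN_APPROVAL and approved_by is None:
        decision = "pending_approval"
    else:
        decision = "allowed"
    audit_log.append(json.dumps({
        "time": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "action": action,
        "reason": reason,          # the "why" behind the request
        "approved_by": approved_by,
        "decision": decision,
    }))
    return decision == "allowed"


# Outside the support role's permissions: denied.
authorize("support_agent", "issue_refund", "customer asked for money back")
# Permitted for billing, but waits for a human: pending_approval.
authorize("billing_agent", "issue_refund", "duplicate charge")
# Same request with a named approver: allowed.
authorize("billing_agent", "issue_refund", "duplicate charge", approved_by="j.doe")
```

Denied and pending requests are logged as carefully as allowed ones, since those are often the entries an auditor wants to see first.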

Human oversight belongs in that same foundation. It shouldn’t be a patch for when things go wrong but rather a design feature that grows with the system. These systems aren’t meant to replace people. They’re meant to give people the context and control to steer autonomy as trust builds.

Once those foundations are in place, measurement takes over. You can’t manage what you can’t see, and you can’t trust what you can’t measure.

Measure What Matters: Performance, Trust, and Business Value

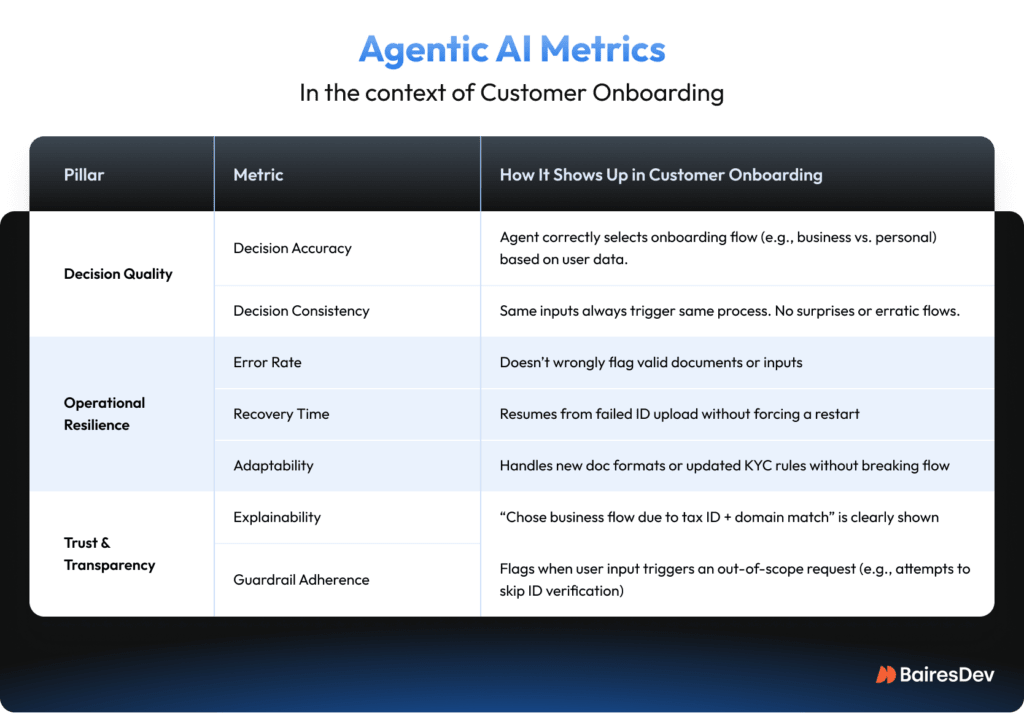

Define success before you measure it. Agentic AI should be evaluated not just on how fast it works, but on whether it delivers meaningful outcomes. That means tying metrics to tangible business impact. Think of faster ticket resolution in CX, fewer forecasting errors in finance, or stronger SLA performance in ops. If you’re not connecting agent behavior to business goals, you’re measuring in a vacuum.

However, speed and outputs aren’t the full picture. The real test of success is whether agents become trusted, effective parts of the workflow. That starts with tracking what an agent does, how it does it, and why. We break it down into three core pillars:

Pillar 1: Decision Quality

This speaks to the agent’s autonomy. One of the clearest signs of maturity is decision accuracy. It shows how often the agent picks the right flow (e.g. business vs. personal) without manual help. If it chooses well consistently, it’s reliable.

Then there’s decision consistency. It’s not always tracked formally, but it matters because unpredictable behavior erodes user trust fast. If two users with similar inputs get completely different flows, you’ve got a trust issue. Agents need to be predictable before they can be trusted.

Pillar 2: Operational Resilience

This is about how well the agent holds up when things get messy, which they always do in production. Start with error rate and recovery time. These reveal how often the agent gets something wrong (like rejecting a valid ID) and, when it fails, whether it restarts the process or picks up where it left off.

You’ll also want to track adaptability. Real-world workflows change: a new data field, an unexpected format, a surprise downtime. The best agents flex and recover rather than break.

Pillar 3: Trust & Transparency

People won’t adopt what they don’t understand or trust.

Explainability helps here. If the agent chooses a specific flow, it should be able to say why. A clear audit trail of its reasoning builds confidence and keeps things accountable.

And guardrail adherence is just as important. You need to know when the agent tries to step out of bounds, like exposing PII or making calls it shouldn’t. Flagging these instances is key to managing risk and staying compliant.

Building on our customer onboarding example, here’s how the metrics above play out in practice.
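As one way to compute these metrics, the sketch below works from a decision log for the onboarding agent. The log format, field names, and all values are hypothetical and purely illustrative; they are not measurements from a real system. Consistency is approximated here as the share of user profiles for which the agent always chose the same flow.

```python
from collections import defaultdict

# Hypothetical decision log for the onboarding agent (illustrative values).
LOG = [
    {"profile": "smb", "expected": "business", "chosen": "business",
     "error": False, "violation": False},
    {"profile": "smb", "expected": "business", "chosen": "business",
     "error": False, "violation": False},
    {"profile": "freelancer", "expected": "personal", "chosen": "personal",
     "error": False, "violation": False},
    {"profile": "freelancer", "expected": "personal", "chosen": "business",
     "error": False, "violation": True},
    {"profile": "student", "expected": "personal", "chosen": "personal",
     "error": True, "violation": False},   # e.g. a valid ID was rejected
]


def decision_accuracy(log: list[dict]) -> float:
    """Share of decisions where the agent picked the expected flow."""
    return sum(r["chosen"] == r["expected"] for r in log) / len(log)


def decision_consistency(log: list[dict]) -> float:
    """Share of profiles that always received the same flow."""
    flows_by_profile = defaultdict(set)
    for r in log:
        flows_by_profile[r["profile"]].add(r["chosen"])
    same = sum(len(flows) == 1 for flows in flows_by_profile.values())
    return same / len(flows_by_profile)


def error_rate(log: list[dict]) -> float:
    """Share of runs that ended in an error."""
    return sum(r["error"] for r in log) / len(log)


def guardrail_violations(log: list[dict]) -> int:
    """Number of runs where the agent tried to step out of bounds."""
    return sum(r["violation"] for r in log)


print(f"accuracy:    {decision_accuracy(LOG):.2f}")
print(f"consistency: {decision_consistency(LOG):.2f}")
print(f"error rate:  {error_rate(LOG):.2f}")
print(f"violations:  {guardrail_violations(LOG)}")
```

In this made-up log, the freelancer profile gets two different flows for similar inputs, which is exactly the kind of inconsistency that erodes trust even when overall accuracy looks acceptable.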

Tie Performance to Cost and Scalability

Once you’ve evaluated decision quality, resilience, and trust, there’s one more lens that matters: cost. Every autonomous action has a price: compute, bandwidth, and human oversight. If you want to scale responsibly, you need visibility. That starts by linking usage analytics and cost per decision to real business KPIs.

This isn’t just a technical concern. For financial and other business leaders, these metrics offer critical input for forecasting, budget planning, and understanding where agentic AI delivers measurable ROI—or where it quietly drains resources.

Resource efficiency matters. How much memory, processing power, or API time does the agent burn per task? Look at unit economics that give clear answers. How much does it cost to resolve a ticket? To complete a transaction? To qualify a lead? These insights tell you when to scale up, fine-tune, or pull the plug before sunk costs pile up.

But raw compute spend isn’t the whole picture. Smart scaling means understanding where value is created and where it’s not. Metrics like cost per outcome, throughput-to-cost ratio, and idle time vs. active time help you assess whether performance gains are actually worth it.

And don’t skip the human factor. Oversight cost tells you how much autonomy you’ve really achieved and when it stops being efficient.
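The cost metrics in this section reduce to simple arithmetic once usage is tracked. The sketch below assumes a hypothetical `PeriodUsage` record for one reporting period; every figure in the example is invented, and the split into compute, API, and oversight cost is one possible breakdown, not a standard.

```python
from dataclasses import dataclass


@dataclass
class PeriodUsage:
    """Hypothetical usage record for one agent over one reporting period."""
    compute_cost: float           # currency units
    api_cost: float
    oversight_hours: float        # human review time
    oversight_hourly_rate: float
    outcomes: int                 # e.g. tickets resolved
    active_seconds: float
    idle_seconds: float


def unit_economics(u: PeriodUsage) -> dict[str, float]:
    """Turn raw usage into the cost metrics discussed above."""
    if u.outcomes <= 0:
        raise ValueError("need at least one outcome to compute unit costs")
    oversight_cost = u.oversight_hours * u.oversight_hourly_rate
    total = u.compute_cost + u.api_cost + oversight_cost
    if total <= 0:
        raise ValueError("total cost must be positive")
    return {
        "total_cost": total,
        "cost_per_outcome": total / u.outcomes,
        "throughput_to_cost": u.outcomes / total,
        "oversight_share": oversight_cost / total,
        "active_share": u.active_seconds / (u.active_seconds + u.idle_seconds),
    }


# Invented numbers for a support agent over one month.
month = PeriodUsage(compute_cost=300.0, api_cost=100.0,
                    oversight_hours=10.0, oversight_hourly_rate=40.0,
                    outcomes=400, active_seconds=6000.0, idle_seconds=2000.0)
metrics = unit_economics(month)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

In this invented example, human oversight accounts for half of the total cost, which is the kind of signal that tells you how much autonomy the agent has really achieved.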

Do You Really Have an Agentic AI Use Case?

Everyone wants agents now. It’s tempting to think they can handle “anything”. But the uncomfortable truth is that not every problem needs one. Let’s approach this assessment based on the agentic principles.

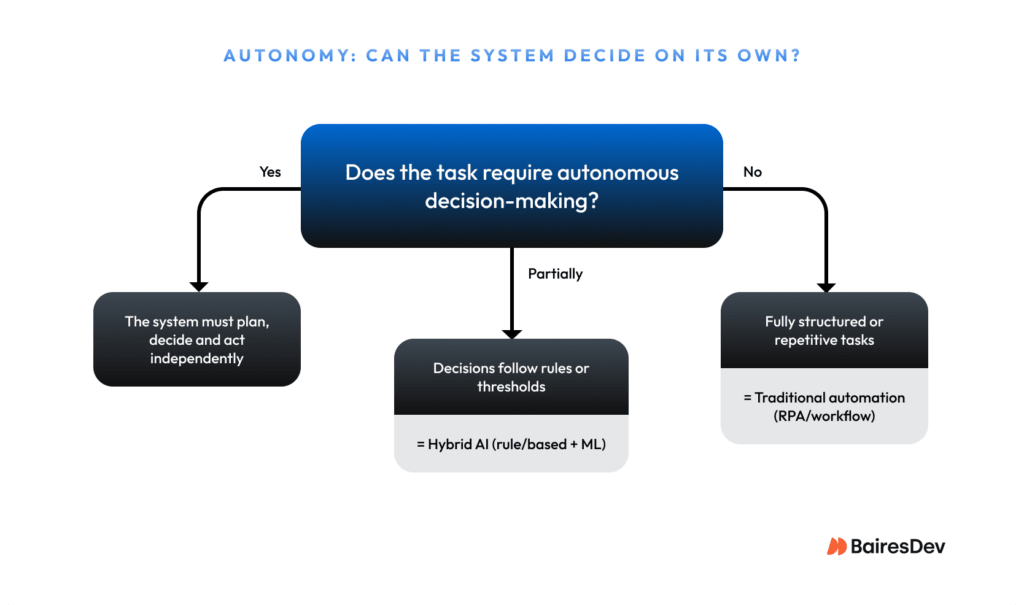

Is Autonomy Important?

Autonomy isn’t the goal. It’s a means to a better outcome. Sometimes, adding it just introduces complexity without delivering value. If your use case requires the system to act independently without constant human supervision, that’s your first sign it might fit the agentic mold.

For example, an AI that routes freight dynamically as conditions change qualifies. A route-planning system that proposes schedules but needs a dispatcher’s confirmation for every change does not. Will removing the human from the loop improve speed and outcomes or just create risk?

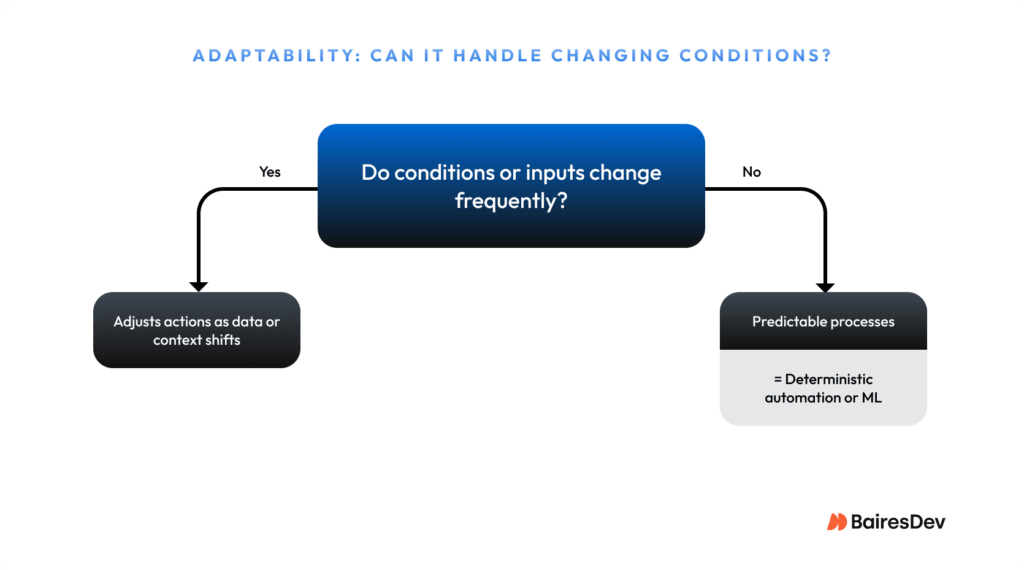

Is Adaptability a Necessity?

Agentic AI shines in environments that shift constantly, where the inputs, data, or context are in constant flux. If your process involves real-time variability (weather, demand, market signals, user behavior), agents can adjust automatically. If it’s stable and rule-based, you’ll likely get better ROI from traditional automation.

Think of adaptability as the difference between a thermostat (fixed logic) and a smart HVAC system that learns your patterns and adjusts throughout the day.

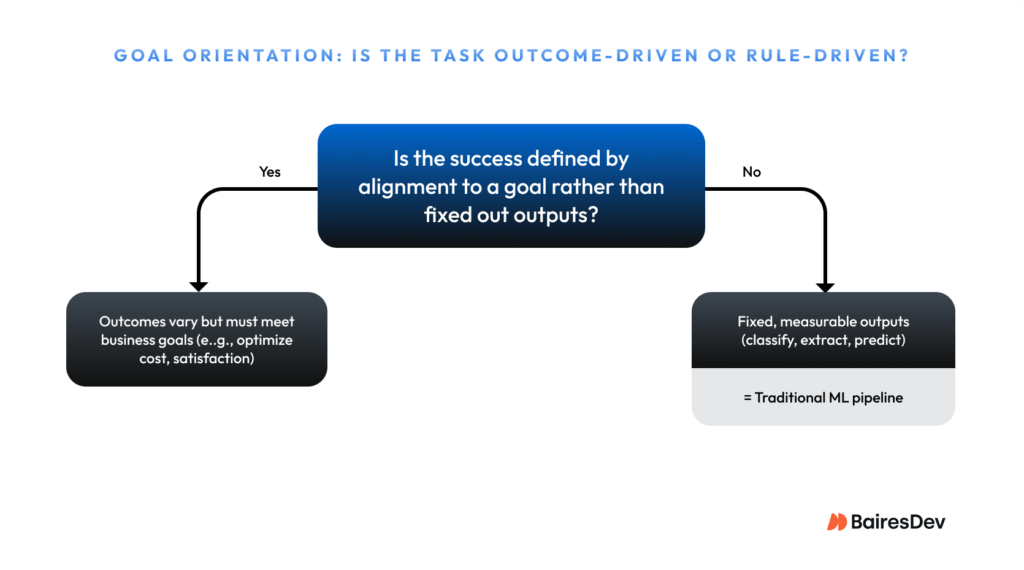

Is a Goal-Driven System Required?

Traditional automation follows rules; agentic AI pursues outcomes. If your workflow requires the system to plan and adapt toward a goal rather than follow fixed rules, it’s a good candidate for agentic design. For instance, an AI tasked with “reducing delivery delays” can plan routes, reassign drivers, and update customers dynamically. But if your task is “send shipment updates every hour,” that’s deterministic, and that’s automation territory.

From Agent Curiosity to Value

The agentic AI race is on, but many companies haven’t crossed the starting line. McKinsey says that 62% of organizations are already experimenting with AI agents, yet only 23% are scaling them across a business function. Interest is high, but true adoption is just beginning.

That means you’re right on time. The real winners will combine autonomy with discipline, governance with adaptability, and use clear metrics to separate hype from value.

Start with one well-governed use case. Define success, measure it, and prove it delivers. If you move smart, focusing on where autonomy drives real outcomes, you’ll be in a strong position to outpace others in your space. And if you’re looking for a reliable AI development partner to help you get there, contact our team. We’re ready to support you.