Shopify CEO Tobi Lütke recently shared, “There’s a fundamental expectation that employees embrace AI in their daily work.” At a growing number of companies, teams are now expected to try solving problems with AI first before requesting more resources. This shift isn’t just happening in tech. It’s a signal that AI proficiency has become a core business skill.

If you’re one of the 97% of business leaders tasked with delivering AI-powered results, but don’t come from a technical background, there’s a lot to take in. No wonder over 80% of AI projects fail, often due to issues with data quality and underestimated complexity.

You don’t need to become an engineer, but you at least need to know what AI can do for your business, and how it works. The “how” can get a little complex, but that understanding will help you make better decisions, ask sharper questions, and lead with confidence in an AI-first world.

In this article, we’ll walk you through key AI concepts, from the main AI branches to learning strategies, model types, and real-world use cases. Consider this your crash course in how AI works, and why that knowledge now belongs in your and your team’s toolkit.

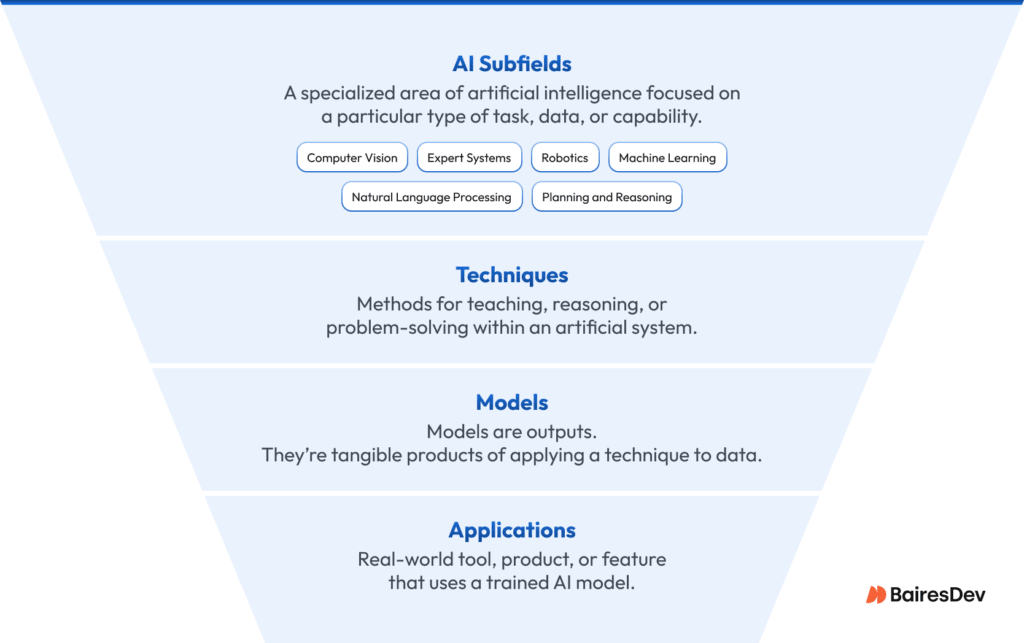

The AI Stack: From Subfields to Solutions

AI may still feel like a mess of buzzwords, causing stakeholders to shy away from practical conversations—the kind where real decisions around implementation or adoption happen. So, let’s get AI terms right. In casual conversations, you’ll often hear “machine learning,” “deep learning,” or “generative AI” used like they mean the same thing, but they don’t. Closely related? Yes, but they actually sit at different levels of an AI hierarchy: subfields, techniques, models, and applications.

- AI subfields are our first step. Picture them as departments in your organization. Each one focuses on a different type of work. Machine Learning is like your Operations team, figuring out how to improve performance over time. Natural Language Processing is like Communications, making sense of language. Computer Vision is like Quality Control, spotting visual issues. Each department has its specialty, but often overlaps with others and shares resources.

- Techniques are the methods used to solve problems or learn from data within an AI system. If AI is the field, and a model is the tool, then a technique is how you build and train that tool. Understanding techniques helps explain how AI systems learn and function. It helps you assess what kind of data, effort, and skills are needed to make an AI system work. It also helps you ask the right questions when scoping a project or choosing a solution.

- Models are the result of applying a technique to data. They are tools trained to recognize patterns in data and make decisions or predictions based on that training. An AI model is the trained brain that makes sense of new data. Knowing what a model is helps you understand what you can achieve with one, whether it’s getting a marketing insight, a product recommendation, or an automated inventory decision.

- Applications are tools, products, or features that use trained AI models to solve real problems. Think chatbots, recommendation engines, a fraud detection system, or a GenAI tool to summarize reports. This is how AI shows up in your everyday life. You don’t need to know every detail of how it’s built, but you do need to know what it does, how reliable it is, and whether it solves the problem it’s meant to.

Getting these terms right helps you understand what’s required at each level: from the systems you invest in to the talent and infrastructure you need to support them. For decision makers like you, this clarity guides smarter hiring and budgeting, and results in better-aligned solutions.

Now, let’s look at how AI systems actually learn.

How AI Learns and Thinks

The way AI learns is the basis of what it can and can’t do. That’s why understanding its core learning strategies is essential when evaluating tools, setting expectations, or building new solutions. Since AI aims to mimic human learning and reasoning, knowing how it works helps you choose the right solutions that align with your data and goals.

When you understand how AI learns, you’re also better equipped to ask the right questions. For example, imagine you’re setting up an AI system to forecast customer demand or predict staffing needs. You’ll know to ask, “Does this model require labeled data, and how much do we need to get it off the ground?” The answer sheds light on upfront time and resource costs. Or, you might flag when a solution promises to continuously improve but doesn’t support post-deployment learning, raising concerns around long-term maintenance and performance.

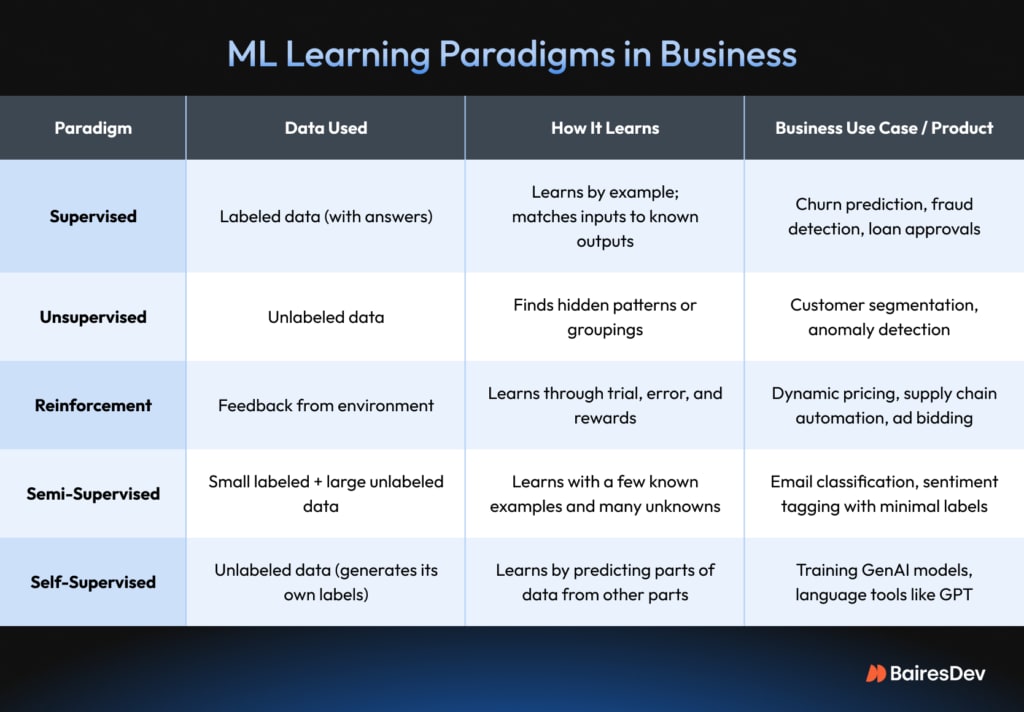

Let’s examine the learning strategies that machine learning models use:

- Supervised Learning – This approach trains a model on a labeled dataset. This means the data already includes the “correct” answers. The system looks at examples, learns the patterns, and uses that learning to make predictions on new data. For instance, if you’re training a model to predict customer churn, you’d train it on past customer records labeled “churned” or “retained”. Over time, it learns the patterns that suggest at-risk customers.

- Unsupervised Learning – Unlike supervised learning, this approach works with unlabeled data. There are no “right” answers. The AI looks for hidden patterns or clusters on its own. Imagine giving your sales team a list of thousands of customers without any labels. You ask them to group similar customers based on purchase behaviors. This strategy brings order to messy data and helps you spot patterns.

- Reinforcement Learning – This is a trial-and-error strategy that trains models to make decisions toward a specific goal. Actions that move toward that goal are rewarded, while actions that detract from it are penalized. Think of coaching a new employee. You let them try, fail, and learn with your feedback. Over time, they make the right calls on their own. Take optimizing delivery routes as an example. Reinforcement learning rewards faster or more efficient outcomes over time.

- Semi-Supervised Learning – This combines a small amount of labeled data with a larger pool of unlabeled data. When labeling data gets expensive or time-consuming, and you still want an accurate model, semi-supervised learning is a great technique. Think of an analyst learning from a handful of labeled reports, then trying to classify a much larger stack on their own, occasionally checking back with a few known examples to stay on track.

- Self-Supervised Learning – This method needs no human-provided labels. It generates its own training signals from the data itself by predicting parts of the data from other parts, a very common approach in training large language and vision models. It’s like giving someone a document with missing words and asking them to fill in the blanks. Over time, they get great at understanding context, just like a language model.
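To make the contrast between the first two strategies concrete, here’s a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the customer numbers are invented, the two features (logins and support tickets per month) are hypothetical, and the function names are ours. The supervised function copies the label of the most similar known customer, while the unsupervised one groups customers without ever seeing a label.

```python
import math

# Hypothetical customers: (logins per month, support tickets per month).
labeled = [
    ((2, 5), "churned"),
    ((3, 4), "churned"),
    ((20, 1), "retained"),
    ((25, 0), "retained"),
]

def predict_supervised(customer, examples):
    """Supervised: reuse the label of the most similar labeled example (1-nearest neighbor)."""
    nearest = min(examples, key=lambda ex: math.dist(customer, ex[0]))
    return nearest[1]

def cluster_unsupervised(customers, rounds=10):
    """Unsupervised: split unlabeled customers into two groups (a tiny k-means with k=2)."""
    centers = [customers[0], customers[-1]]  # naive starting guesses
    groups = [[], []]
    for _ in range(rounds):
        groups = [[], []]
        for c in customers:
            # Assign each customer to the closest center.
            idx = min((0, 1), key=lambda i: math.dist(c, centers[i]))
            groups[idx].append(c)
        # Move each center to the average of its group (keep it if the group is empty).
        centers = [
            tuple(sum(v) / len(g) for v in zip(*g)) if g else centers[i]
            for i, g in enumerate(groups)
        ]
    return groups

print(predict_supervised((4, 3), labeled))

unlabeled = [features for features, _ in labeled]  # same customers, labels removed
print(cluster_unsupervised(unlabeled))
```

Notice the practical difference: the first function is useless without the “churned” and “retained” labels, while the second finds the two groups on its own but can’t tell you what they mean. That is exactly the labeled-data question worth raising when scoping a project.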

But learning strategies don’t stop with ML. Let’s take a look at a few additional common approaches you may encounter:

- Rule-Based Systems (A core part of Symbolic AI) – If you’re familiar at all with basic programming, this is easy to understand. It uses “if-then” logic written by humans. These systems just follow predefined instructions. They’re useful when decisions need to be consistent, explainable, and aligned with policies or regulations. Think of an automated loan eligibility check: if the applicant’s credit score is below 600, then deny the loan.

- Neuro-Symbolic AI (a form of Hybrid AI) – This combines the structured logic of rule-based systems with the pattern recognition of machine learning. It offers the best of both worlds: interpretability from symbolic reasoning and adaptability from machine learning. That’s why it’s gaining traction in sectors like legal, finance, and healthcare. Imagine a contract analysis tool that extracts clauses using ML, but applies legal rules to assess risk.

- Case-Based Reasoning (Cognitive AI) – This learning approach solves new problems by referring to similar past cases. It searches for matches and adapts old solutions to new situations. This is useful in knowledge-heavy environments where precedent matters. You’ll find this in legal research tools that help attorneys find relevant cases by comparing new legal issues to existing precedent, speeding up case preparation while maintaining accuracy.

- Evolutionary Algorithms (Computational Intelligence) – Remember high school biology and natural selection? Evolutionary algorithms generate many possible solutions, test them, keep the best ones, and combine them to evolve better results over time. Think of situations with too many variables, like optimizing pricing strategies across hundreds of products. You’d test different combinations to find the ideal balance between profit margins, customer demand, and competitor pricing, without relying on a single fixed formula.
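The “generate, test, keep the best, combine” loop behind evolutionary algorithms is easier to picture in code. Below is a minimal sketch for a single product’s price, assuming a made-up demand curve (the `profit` function, unit cost, and all numbers are hypothetical, not real pricing data). A real pricing problem would evolve many prices at once, but the loop is the same.

```python
import random

def profit(price):
    """Hypothetical demand curve: higher prices mean fewer units sold."""
    unit_cost = 4.0
    units_sold = max(0.0, 100.0 - 5.0 * price)
    return (price - unit_cost) * units_sold

def evolve_price(generations=30, population_size=20, seed=0):
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    # Generate: start with random candidate prices.
    population = [rng.uniform(4.0, 20.0) for _ in range(population_size)]
    for _ in range(generations):
        # Test and select: keep the most profitable half.
        population.sort(key=profit, reverse=True)
        survivors = population[: population_size // 2]
        # Combine and mutate: blend two survivors, then nudge the result slightly.
        children = []
        while len(survivors) + len(children) < population_size:
            parent_a, parent_b = rng.sample(survivors, 2)
            child = (parent_a + parent_b) / 2 + rng.gauss(0, 0.3)
            children.append(child)
        population = survivors + children
    return max(population, key=profit)

best = evolve_price()
print(round(best, 2), round(profit(best), 2))
```

Because the best candidates always survive into the next round, the top result never gets worse from one generation to the next. No one had to write down a formula for the ideal price, which is the appeal when the number of variables makes a formula impractical.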

Each learning paradigm shapes how a model is trained. Choosing the right one is key to building something that actually fits your data and goals. Once you understand how AI learns, the next step is to understand what is built from that learning. Let’s explore the model types that turn raw data into predictions, decisions, and even creativity.

What Gets Built: Common AI Model Types

There are many AI model types, many of which you’ve probably used at some point. Getting familiar with them helps you understand what each one is good at, what it costs to build and maintain, and which one fits your business problem.

We’ll group models into approachable clusters:

1. Decision Trees / Random Forests

Decision trees are among the simplest and most interpretable models. Picture a flowchart asking you a series of Yes/No questions. An easy illustration is a simplified version of the credit scoring that systems like FICO perform: “Has the applicant missed any payments in the last 12 months?” → “Is their credit utilization above 30%?” → “Do they have a history of bankruptcies?”, leading to a prediction like “low,” “medium,” or “high” credit risk.

Random forests build on this idea, combining many decision trees into a single, powerful model. Each tree is trained on a different random sample of the data, and their collective predictions are aggregated, like taking a vote.

Great for: Classification and prediction using structured data. Common in customer churn prediction, credit risk scoring, fraud detection, product recommendation, or employee attrition modeling. Particularly valuable in industries that require explainability, like finance, insurance, and healthcare.

Cost: Low to moderate. These models don’t require huge amounts of data or expensive infrastructure.

Complexity: Low for decision trees, moderate for random forests.
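Here’s a rough sketch of the flowchart idea and the voting idea in plain Python. The three trees below are hand-written for illustration, and the field names and thresholds are assumptions. In a real random forest, each tree would be learned automatically from a random sample of historical data rather than typed in by a person.

```python
from collections import Counter

def payment_history_tree(a):
    """One decision tree: a flowchart of yes/no questions ending in a risk label."""
    if a["missed_payments_12m"] > 0:
        if a["credit_utilization"] > 0.30:
            return "high"
        return "medium"
    if a["bankruptcies"] > 0:
        return "medium"
    return "low"

def utilization_tree(a):
    """A second tree that asks its questions in a different order."""
    if a["credit_utilization"] > 0.30:
        return "high" if a["bankruptcies"] > 0 else "medium"
    return "low"

def bankruptcy_tree(a):
    """A third tree that starts from bankruptcy history."""
    if a["bankruptcies"] > 0:
        return "high"
    return "medium" if a["missed_payments_12m"] > 0 else "low"

def forest_predict(applicant, trees):
    """Forest-style prediction: every tree votes and the most common label wins.
    On a tie, the label that received a vote first is returned."""
    votes = Counter(tree(applicant) for tree in trees)
    return votes.most_common(1)[0][0]

TREES = [payment_history_tree, utilization_tree, bankruptcy_tree]
applicant = {"missed_payments_12m": 1, "credit_utilization": 0.45, "bankruptcies": 0}

print([tree(applicant) for tree in TREES])  # each tree's individual answer
print(forest_predict(applicant, TREES))     # the majority vote
```

For this applicant the first tree says “high” while the other two say “medium,” so the vote lands on “medium.” You can trace exactly why each tree answered the way it did, which is the explainability that regulated industries value.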

2. Neural Networks

Inspired by the human brain, neural networks have layers of “neurons” that process data and pass it on, gradually learning patterns and relationships. Each layer transforms the data a bit, until the final output is something useful, like a prediction or a classification. Deeper versions (like deep neural networks) solve more complex problems, like detecting patterns in voice, images, or financial data. You’ve seen neural networks in action powering Amazon’s product recommendation engine.

Great for: Demand forecasting, sentiment analysis, image or audio recognition, predictive maintenance, and process automation.

Cost: Moderate to high, especially for deep networks that need more compute power.

Complexity: High. This requires skilled data scientists and careful tuning.
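If “layers that transform the data a bit” feels abstract, this small sketch shows one pass through a two-layer network in plain Python. The weights here are hand-picked, hypothetical numbers. In a real network they are learned during training, and there would be thousands or millions of them.

```python
import math

def sigmoid(x):
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of its inputs, adds a bias, applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# Hypothetical weights for illustration only.
HIDDEN_WEIGHTS = [[0.8, -0.5], [-0.3, 0.9]]  # two hidden neurons, two inputs each
HIDDEN_BIASES = [0.1, -0.2]
OUTPUT_WEIGHTS = [[1.2, -1.1]]               # one output neuron
OUTPUT_BIASES = [0.05]

def predict(inputs):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = layer(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES)
    output = layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)
    return output[0]

print(predict([1.0, 0.0]))
```

The output is a single score between 0 and 1 that you could read as a probability. The cost and complexity noted above come from training: finding good values for all those weights takes data, compute, and careful tuning.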

3. Transformers

These are a specialized type of neural network built for sequence-based data, especially language. Their attention mechanism allows them to look at the whole context of a sentence or data input, not just parts of it. They’re behind Large Language Models (LLMs) like GPT and BERT. They power GenAI tools, advanced search, summarization, and content generation.

Great for: Chatbots, virtual assistants, intelligent search and knowledge retrieval, contract or policy document analysis, or code generation.

Cost: High. These are resource-intensive, require strong compute power, and often cloud-based infrastructure.

Complexity: High. They require specialized ML talent if you’re fine-tuning rather than just using pre-trained models.
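For the curious, the attention mechanism mentioned above can be sketched in a few lines. This is a simplified, plain-Python version of scaled dot-product attention, and the function and variable names are ours. Production transformers also apply learned projections to create the queries, keys, and values, run many attention “heads” in parallel, and use optimized math libraries, none of which is shown here.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence of token vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Score how relevant every token is to this one.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Blend all tokens' values, weighted by relevance.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Three toy "tokens", each a 2-number vector (hypothetical values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

Every token’s output is a blend of all the tokens in the input, which is what “looking at the whole context” means in practice. It’s also why cost climbs with longer inputs: every token is compared against every other token.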

Natural Language Processing (NLP) fits here

NLP is another AI subfield focused on helping machines understand and generate human language. Before transformers, models like Recurrent Neural Networks (RNNs) were used for text, but they struggled with long-range understanding. Transformers changed this by handling entire blocks of text at once, making them the new standard for NLP tools.

Why it matters: Well, NLP is where many AI applications become customer-facing (chatbots), or team-supporting. Understanding transformers helps you assess vendors, budget realistically, and recognize where GenAI might (or might not) add value to your workflows.

4. Generative Models (GANs, VAEs)

These models create new data that resembles the data they were trained on. The two most common types are:

Generative Adversarial Networks (GANs): They pit two neural networks against each other, where one generates content and the other judges it until the output is convincingly realistic. GANs create synthetic images, product mockups, or data. Imagine your designers are trying to come up with creative labels for a packaged good. Tools like Canva’s Magic Design or Adobe Firefly give a feel for this kind of AI-generated design work.

Variational Autoencoders (VAEs): These use compression and reconstruction to generate new, slightly varied versions of existing data, particularly used in visual and design-heavy tasks. Following up on the example above, imagine those packaged goods labels have to be personalized. VAEs will generate those personalized variations without creating anything from scratch.

Now, you may be wondering whether generative models are a part of GenAI. GANs and VAEs are considered part of the GenAI family (especially for visual and design-focused outputs), but not all GenAI is built with them. Most text-based GenAI relies on transformers.

Cost: Moderate to high, depending on scale and compute resources.

Complexity: Moderate to high, requiring ML talent with experience in deep learning.

5. Ensemble Models

These models combine multiple individual models for better and more accurate results. It’s like taking a vote. The final decision is the result of what the models collectively predict. For instance, e-commerce platforms like Shopify or Etsy combine models to improve fraud detection. They use decision trees to flag clear rule-based fraud patterns and neural networks to catch more complex, subtle behavior. One model might catch obvious signals, while the other learns from behavioral patterns, and the ensemble helps reduce false positives.

Great for: Increasing prediction accuracy for high-stakes decisions, reducing bias or overfitting from any single model, and handling complex classification or forecasting problems.

Cost: Moderate. Combining existing models requires compute and engineering time.

Complexity: Moderate, since it adds layers of coordination but not necessarily new data.
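A quick sketch shows how combining two different models can reduce false positives. Both “models” below are stand-ins written by hand, with invented field names, scores, and thresholds. They are not how any particular platform detects fraud. The point is only the last step, where the individual scores are averaged before a decision is made.

```python
def rule_model(order):
    """Stand-in for a tree-style model: flags one obvious pattern."""
    mismatch = order["billing_country"] != order["shipping_country"]
    return 0.9 if mismatch and order["amount"] > 500 else 0.1

def behavior_model(order):
    """Stand-in for a learned model: a made-up score from behavioral signals."""
    score = 0.05 + 0.15 * order["failed_payment_attempts"]
    if order["account_age_days"] < 7:
        score += 0.3
    return min(score, 1.0)

def ensemble_score(order, models, weights):
    """Weighted average of each model's fraud score (often called soft voting)."""
    return sum(w * m(order) for m, w in zip(models, weights)) / sum(weights)

def is_fraud(order, threshold=0.5):
    """Flag the order only if the combined score crosses the threshold."""
    score = ensemble_score(order, [rule_model, behavior_model], [1.0, 1.0])
    return score >= threshold

loyal_customer = {
    "billing_country": "US", "shipping_country": "CA", "amount": 800,
    "failed_payment_attempts": 0, "account_age_days": 400,
}
suspicious_order = {
    "billing_country": "US", "shipping_country": "CA", "amount": 800,
    "failed_payment_attempts": 3, "account_age_days": 2,
}

print(is_fraud(loyal_customer))
print(is_fraud(suspicious_order))
```

On its own, the rule-style model would score both orders as risky because of the country mismatch. Once the behavioral score is averaged in, only the new account with repeated failed payments crosses the threshold, while the long-standing customer sending a gift abroad does not.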

AI Models: Use Cases and Business Implications

| Model Type | Best For | Industries/Use Cases | Cost | Complexity |
| --- | --- | --- | --- | --- |
| Decision Trees / Random Forests | Classification and prediction | Churn prediction, fraud detection, credit risk scoring | Low to moderate | Low to moderate |
| Neural Networks | Forecasting, classification, automation | Sentiment analysis, predictive maintenance, image/audio recognition | Moderate to high | High |
| Transformers | Language-heavy tasks | Chatbots, summarization, code generation, search | High | High |
| Generative Models (GANs, VAEs) | Synthetic content, personalization | Product mockups, content variation, creative design | Moderate to high | Moderate to high |
| Ensemble Models | Reducing error, improving predictions | Financial risk modeling, healthcare, e-commerce | Moderate | Moderate |

How Your Competitors Are Leveraging AI Models

Now that you understand the basics of how AI learns and what gets built, let’s examine how forward-looking companies are applying these concepts to gain a competitive edge.

While most companies are still early in their AI journey (exploring use cases, identifying critical problems to solve with AI, or onboarding their teams with AI tools), the ones making the biggest strides are going further. They’re experimenting with proprietary AI models that give them a real edge. Here are a couple of examples of how we’ve supported companies in their AI development journey.

Synthetic Data with LLMs to Accelerate Model Testing

Recently, an AI/ML venture focused on building sustainable data infrastructure partnered with us to strengthen its foundation for machine learning experimentation. Our engineers helped prepare high-quality training data, automate data cleaning and curation, and implement scalable data pipelines. This enabled the client to leverage Large Language Models (LLMs) for synthetic data generation and run more agile, controlled experiments, all without being limited by real-world data constraints.

Hierarchical Models for Faster, Smarter Classification

Another client, a logistics company using AI/ML to automate catalog categorization and streamline data processing, brought us in to enhance its machine learning infrastructure. Our teams improved classification accuracy using hierarchical models and techniques like RAGFusion and semantic chunking. We also optimized data workflows, implemented MLOps automation, and migrated models to the cloud, ultimately reducing Google Cloud costs by 80% and cutting inference latency from 40 seconds to just 1.5 seconds.

These are examples of AI requirements we see day in and day out. As you move toward a more AI-powered business, your job is not only to define goals, but also to leverage tech to meet them. That means knowing what AI models do, how they work, and how to evaluate them. That knowledge is what separates reactive adopters from innovation leaders.

The Real Work Starts Here

Without this foundational understanding, business leaders risk poor investments or missing out on market-shifting opportunities. But now you know more. And that changes everything.

You don’t need to be a technical expert to lead in AI. You just need clarity, the kind that helps you ask the right questions, challenge assumptions, and guide your teams with confidence. That’s what this knowledge gives you.

If you’re ready to move from awareness to action, we’re here to help. Our AI developers and machine learning engineers are ready to architect solutions around your goals.

You bring the vision. We’ll help you build the future.