Artificial intelligence (AI) matters because it can drive measurable impact: faster execution, safer operations, or lower costs. But the graveyard of stalled pilots proves that hype alone doesn’t survive production. Your peers aren’t asking if AI is exciting. They’re asking if it’s reliable, governable, and worth the budget. The key idea is to deploy AI like any other critical system, with the same rigor and accountability.

Executives are learning that with AI, just as with any other tool, they need to look beyond demos and vendor promises. Can your team identify potential security threats at scale? Can your platforms handle event-driven growth? The bar for trust is high. Leaders who clear it will gain a durable advantage. Those who don’t risk operational drag and compliance exposure.

This guide strips away the noise and focuses on execution. We’ll discuss where AI creates enterprise value and what guardrails you need to make it work. We’ll cover operational efficiency, decision quality, customer growth, and risk posture. Then we’ll walk through prerequisites most teams ignore. For VPs of Engineering and CTOs, the objective is simple: deliver AI that ships, scales, and sticks.

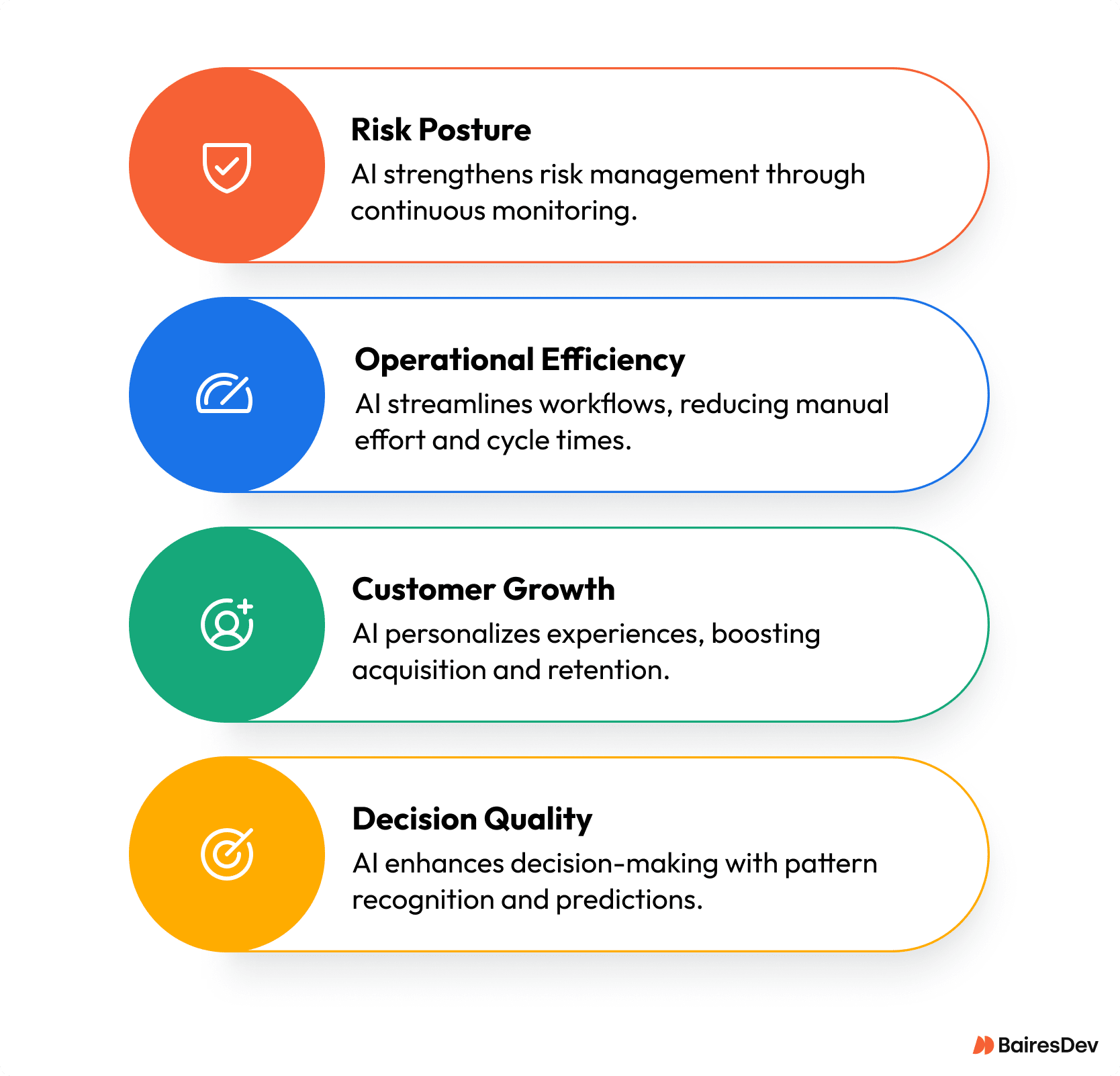

Why AI Matters for Enterprise: The 4 Value Pillars

The hype around AI technology is easy to buy into, but for enterprise leaders, results matter more than headlines. We don’t have the luxury of experimenting for experimentation’s sake. What we need is grounded, strategic AI development that improves operational efficiency and decision-making. We need AI to deepen customer relationships and reduce risk.

If you’re not seeing measurable outcomes across these four pillars, you’re not doing AI right.

1. Operational Efficiency

AI applications can deliver large productivity gains when they’re aimed at the right problems. A good starting point is eliminating manual data entry. In logistics, teams are applying route optimization models and machine learning to real operational data to reduce travel time and fuel consumption. The hours saved can free staff to improve the underlying systems.

Successful AI solutions compress cycle times and raise leverage per engineer. AI doesn’t just shave seconds; it changes the shape of your workflows. But without good training data and realistic expectations, you’re likely to over-automate messy workflows and create drag. And autonomous creep (where systems take on more control than intended) can quietly expand scope. That can introduce failures engineering leaders don’t notice until the damage is already done. To avoid that, build with model hygiene and human-in-the-loop checkpoints.

Model hygiene means versioning models and monitoring them for drift. For instance, you could track every model iteration in version control or a model registry and run scheduled drift detection to spot when outputs start deviating from known baselines. Human-in-the-loop checkpoints are manual reviews for high-impact AI decisions. For example, you might require a product manager to approve any AI decision above a defined impact threshold.
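Scheduled drift detection can start as simply as comparing recent model scores against a saved baseline. The sketch below uses the Population Stability Index (PSI), a common drift metric, with equal-width bins for simplicity. The 0.2 threshold is a widely used rule of thumb rather than a standard, and the function names are hypothetical.

```python
import math
from typing import Sequence

DRIFT_THRESHOLD = 0.2  # rule-of-thumb cutoff (assumption); tune per model


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """Compare two score distributions; larger values mean more drift."""
    lo = min(min(baseline), min(current))
    hi = max(max(baseline), max(current))
    width = (hi - lo) / bins or 1.0  # guard against identical min and max

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # put the max value in the last bin
            counts[idx] += 1
        # Floor empty bins so the log term stays defined.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def has_drifted(baseline: Sequence[float], current: Sequence[float]) -> bool:
    """True when the current scores have moved too far from the baseline."""
    return population_stability_index(baseline, current) > DRIFT_THRESHOLD


if __name__ == "__main__":
    last_quarter = [i / 100 for i in range(100)]
    this_week = [0.8 + i / 500 for i in range(100)]  # scores bunched near the top
    print(has_drifted(last_quarter, this_week))
```

In practice you would run a check like this on a schedule and open a review ticket, or trigger retraining, when `has_drifted` returns `True`.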

2. Decision Quality

The real value of AI development is speed plus sharper judgment. When your product team uses AI to identify patterns across usage logs and NPS feedback, it surfaces insights faster and cuts rework. Similarly, finance teams can use fraud detection models to stop losses before they scale.

A healthcare company can layer AI on historical treatment records to recommend personalized treatment plans. AI here can drive higher adherence and faster recovery times.

The win is reduced variance in calls that require human judgment but benefit from AI-assisted framing. But it’s critical to watch the risk surface. Poor training data leads to garbage insights. And once black-box decisions start creeping into strategy, explainability debt can pile up fast. Keeping a human in the loop to monitor and challenge outputs helps contain both risks.

Use responsible AI frameworks and require clear lineage from source data to decision.
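One lightweight way to get lineage from source data to decision is to write an audit record for every model decision that ties the output to the model version and the exact data behind it. This is a sketch under assumed names (`DecisionRecord`, `record_decision`, and the dataset IDs are hypothetical); a real system would persist these records to an append-only store rather than just return them.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class DecisionRecord:
    """One auditable AI decision, linked back to the data and model that produced it."""
    decision_id: str
    model_version: str
    source_datasets: tuple[str, ...]  # versioned IDs of the input datasets
    input_hash: str                   # fingerprint of the exact features scored
    output: str
    reviewed_by: Optional[str] = None
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def fingerprint(features: dict) -> str:
    """Stable SHA-256 of the feature dict; sorted keys make it order-independent."""
    canonical = json.dumps(features, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def record_decision(decision_id, model_version, source_datasets, features, output,
                    reviewed_by=None) -> DecisionRecord:
    return DecisionRecord(
        decision_id=decision_id,
        model_version=model_version,
        source_datasets=tuple(source_datasets),
        input_hash=fingerprint(features),
        output=output,
        reviewed_by=reviewed_by,
    )


def to_audit_log(record: DecisionRecord) -> str:
    """Serialize a record as one JSON line for the audit trail."""
    return json.dumps(asdict(record), sort_keys=True)
```

Hashing the inputs rather than storing them keeps sensitive features out of the log while still letting auditors confirm which data produced a given decision.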

3. Customer Growth

The best AI applications let you deliver personalization at volume. A SaaS company can use AI chatbots to tailor onboarding based on user behavior. Digital assistants trained on company knowledge can help reduce churn. You can also use AI to predict upsell moments and time outreach accordingly.

These wins come from training models on your knowledge base. Left unchecked, however, this is also where AI can damage trust. Hallucinated recommendations and irrelevant personalization are fast ways to erode loyalty. That’s why every customer-facing model needs an ethics-first stack.

Let’s say you have 200k chatbot interactions weekly. You might audit for biased outputs, flag hallucinations with heuristics, and add review gates for new intents. That can reduce irrelevant replies and prevent brand damage. Use tools like LIME or SHAP for interpretability analysis. Require data lineage tracking and document decision logic.
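The hallucination heuristics and review gates described above might begin as a simple rule-based audit. This sketch assumes each reply arrives with the knowledge-base text it was grounded on; the intent list and approved domains are hypothetical placeholders, and a real audit would add bias checks and sampling on top.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field

# Hypothetical configuration; replace with your own intents and domains.
KNOWN_INTENTS = {"billing", "password_reset", "onboarding"}
APPROVED_DOMAINS = {"example.com", "help.example.com"}

URL_DOMAIN = re.compile(r"https?://([^/\s]+)")
NUMBER = re.compile(r"\d+(?:\.\d+)?")


@dataclass
class AuditResult:
    flags: list[str] = field(default_factory=list)

    @property
    def needs_review(self) -> bool:
        return bool(self.flags)


def audit_reply(intent: str, reply: str, source_text: str) -> AuditResult:
    """Flag replies that may be hallucinated or that hit a brand-new intent."""
    result = AuditResult()
    # Links to domains you don't control are a common hallucination symptom.
    for domain in URL_DOMAIN.findall(reply):
        if domain.lower() not in APPROVED_DOMAINS:
            result.flags.append(f"unapproved_link:{domain}")
    # Numbers (prices, durations, limits) should appear in the grounding text.
    grounded = set(NUMBER.findall(source_text))
    for number in NUMBER.findall(reply):
        if number not in grounded:
            result.flags.append(f"unsupported_number:{number}")
    # New intents go to a human review gate until they have been vetted.
    if intent not in KNOWN_INTENTS:
        result.flags.append(f"new_intent:{intent}")
    return result
```

Heuristics like these are cheap enough to run on every interaction, so reviewers only see the flagged fraction of that 200k weekly volume.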

4. Risk Posture

AI is extremely useful in compliance, privacy, and fraud detection, but only when governed well. It can reduce false positives, flag edge cases, and tighten alert thresholds.

Consider a regional bank that sets up AI to monitor real-time transaction data. They might catch synthetic identity fraud that rules-based engines miss, with fewer false flags.
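A production fraud model would use many more signals, but the core idea of scoring each transaction against a learned baseline can be sketched with a per-customer z-score. The window size, threshold, and class name below are illustrative assumptions, not a real bank’s configuration.

```python
import statistics
from collections import defaultdict, deque


class TransactionMonitor:
    """Flags transactions far outside a customer's recent spending pattern."""

    def __init__(self, window: int = 50, z_threshold: float = 4.0, min_history: int = 10) -> None:
        self.z_threshold = z_threshold
        self.min_history = min_history
        # Rolling per-customer history of recent, unflagged amounts.
        self.history = defaultdict(lambda: deque(maxlen=window))

    def observe(self, customer_id: str, amount: float) -> bool:
        """Return True if the amount should be flagged for review."""
        past = self.history[customer_id]
        if len(past) >= self.min_history:
            mean = statistics.fmean(past)
            spread = statistics.pstdev(past) or 1.0  # avoid dividing by zero on flat histories
            if abs(amount - mean) / spread > self.z_threshold:
                return True  # keep suspicious amounts out of the baseline until reviewed
        past.append(amount)
        return False
```

Requiring a minimum history before flagging is one way to limit false positives on new customers, which matters when every false flag is a declined purchase.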

The fail case? Shadow AI tools rolled out by eager teams without risk review. That’s where the governance gap widens: AI decisions outpace human oversight, and that’s where risk lives. Using AI haphazardly carries a costly trust tax, especially where security is concerned.

Sam Altman has pointed out that AI depends on good governance. If your AI is touching regulated workflows, AI ethics is an existential requirement.

Readiness First

A shocking 95% of AI projects never reach production, according to MIT Sloan. The issue isn’t a lack of ambition. It’s that most teams rush to deploy an AI model without the prerequisites in place.

Start with data. If your unstructured data is messy or locked up in silos, you have work to do before you can drive value with it. Feeding unlabeled, unvetted data to deep learning algorithms and hoping for magic won’t work. You’ll get garbage at scale instead.

Second, your platforms need to be event-driven and API-first. We’ve seen AI programs break because they couldn’t complete tasks across systems that weren’t built to talk to each other. No orchestration? No impact.
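To make the event-driven point concrete, here is a minimal in-process sketch: the model subscribes to business events and publishes its results as new events, instead of being wired point-to-point into each system. `EventBus` is a hypothetical stand-in for a real message broker, and the threshold in `score_order` is a placeholder for an actual model call.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """In-process stand-in for a message broker; topics map to subscriber lists."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> None:
        for handler in self._subscribers[topic]:
            handler(payload)


def wire_fraud_scoring(bus: EventBus, sink: list) -> None:
    """Subscribe a placeholder scoring step and an audit sink to the bus."""

    def score_order(event: dict) -> None:
        risk = "high" if event["amount"] > 1000 else "low"  # placeholder for a model call
        bus.publish("order.scored", {**event, "risk": risk})

    bus.subscribe("order.created", score_order)
    bus.subscribe("order.scored", sink.append)
```

Because downstream consumers only depend on the `order.scored` topic, you can swap the model, add an audit consumer, or add a second scorer without touching the systems that emit orders.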

Security matters too. If you’re applying computer vision to sensitive environments or automating routine tasks around customer PII, then you need guardrails baked into the stack.

And finally, budget matters. Set clear success metrics and enforce scope boundaries. Without them, it’s too easy to let narrow AI pilots sprawl into expensive, unfocused messes.

Build a checklist tailored to your specific business case. Start with questions like:

- Is this a simple rules-based decision?

- Can humans complete the task faster or more safely?

- Is supervised or unsupervised learning truly required?

- Do you have the AI skills in-house to maintain it?
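These questions can also live in code so every proposal gets triaged the same way. This sketch simply walks the four questions in order; the field names and recommendation strings are illustrative assumptions, and a real review would also weigh cost and risk.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCaseAnswers:
    rules_expressible: bool       # Is this a simple rules-based decision?
    humans_faster_or_safer: bool  # Can humans complete the task faster or more safely?
    learning_required: bool       # Is supervised or unsupervised learning truly required?
    skills_in_house: bool         # Do you have the AI skills in-house to maintain it?


def triage(a: UseCaseAnswers) -> str:
    """Walk the readiness questions in order and return a recommendation."""
    if a.rules_expressible:
        return "use a rules engine"
    if a.humans_faster_or_safer:
        return "keep the human workflow"
    if not a.learning_required:
        return "use automation or BI, not ML"
    if not a.skills_in_house:
        return "ML candidate: close the skills gap first"
    return "ML candidate"
```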

Where AI Works: Use Cases by Function

When AI works, it really works, but there’s a catch. It’s critical to pair the right machine learning models with the right data foundations. Leaders often assume that because AI systems learn, they’ll self-correct bad inputs. In reality, poor implementation spreads risk. Below, we’ve broken down where artificial intelligence solutions are driving measurable results, and what can go wrong if you don’t nail the fundamentals.

Customer & Marketing

| Use Case | Prereqs | Risks |
| --- | --- | --- |
| Churn Prediction | Labeled retention data | False signals, misallocated spend |
| Personalization | Real-time clickstream + feedback | Hallucinated content, UX erosion |
| Agent Assist | Intent tagging, customer interaction history | Confused recommendations, brand drift |

AI can personalize at scale only if your inputs are clean, and the same rule holds beyond marketing. JPMorgan Chase reported that its AI systems now handle over 1,000 contract reviews per day, cutting legal review times dramatically and freeing lawyers for higher-value tasks. With a technology budget of roughly $17 billion, the bank is serious about feeding clean data to its AI systems.

When you feed poor tags or unreliable engagement signals into your models, they generate responses that undermine brand voice or trigger unwanted journeys. The fix is tight feedback loops and always-on review.

Some AI tools can even automate this monitoring. For instance, a quality-control model (a “QC GPT”) can screen chatbot responses and filter out off-brand messaging before it reaches customers.

Sales & Service

| Use Case | Prereqs | Risks |
| --- | --- | --- |
| Forecasting | Normalized pipeline metrics | Volatility amplification |
| Pricing Optimization | Historical sales data, market signals | Margin compression, fairness flags |
| CRM Recommendations | Segmented personas, behavior history | Compliance risk, misaligned messaging |

AI can flag opportunity gaps, push up-sell prompts, and optimize quote timing. LinkedIn built an Account Prioritization Engine in its sales CRM that uses machine learning and explainable AI. An A/B test showed this drove an 8% increase in renewal bookings.

But if your input data is lopsided, you’re just amplifying existing distortions. Misapplied models have pushed teams into predatory discounting or over-promising features. And if AI-generated suggestions don’t follow regulatory or contractual boundaries, you’ve got a governance gap waiting to bite.
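One way to close that gap is to treat model suggestions as proposals and enforce contractual limits in plain code afterward. In this sketch, `PricingPolicy` and its limits are hypothetical values that finance or legal would own. The function clamps the AI-suggested discount, applies a price floor, and reports whether it overrode the model so those cases can be logged and reviewed.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PricingPolicy:
    max_discount: float  # e.g. 0.20 means at most 20% off (hypothetical value)
    floor_price: float   # contractual minimum price (hypothetical value)


def apply_guardrails(list_price: float, suggested_discount: float,
                     policy: PricingPolicy) -> tuple[float, bool]:
    """Clamp an AI-suggested discount to policy and report whether the model was overridden."""
    suggested_price = list_price * (1 - suggested_discount)
    discount = min(max(suggested_discount, 0.0), policy.max_discount)
    final_price = max(list_price * (1 - discount), policy.floor_price)
    overridden = not math.isclose(final_price, suggested_price)
    return round(final_price, 2), overridden
```

Tracking how often `overridden` is true is also a cheap signal that the pricing model is drifting toward discounts the business won’t honor.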

Supply Chain & Operations

| Use Case | Prereqs | Risks |
| --- | --- | --- |
| Predictive Maintenance | Telemetry + parts lifecycle data | Missed failure signals, cost overruns |
| Route Optimization | Real-time traffic + delivery patterns | Fuel waste, customer delays |
| Demand Forecasting | Seasonality + historical sales | Overstocking, stockouts |

Operations thrives on automating repetitive tasks, and AI does this well. DHL deployed AI-powered route optimization and dynamic scheduling across its delivery network. The model helped cut fuel consumption by up to 15% while improving on-time performance.

Still, these wins require precision inputs. Route data missing a few percent of rural routes? Forecasting models that ignore promotion cycles? You’re setting yourself up for failure. Don’t let vast quantities of data distract from quality. Align telemetry, invest in normalization, and define edge-case protocols to reduce your risk surface.
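A simple data-quality gate can catch the missing-rural-routes problem before it reaches a model: compare the routes you expect against the routes actually present in your telemetry, segment by segment. The segment labels and the 2% tolerance below are assumptions for illustration.

```python
from collections import defaultdict


def coverage_gaps(expected: dict[str, str], observed_ids: set[str],
                  max_missing: float = 0.02) -> dict[str, float]:
    """Return each segment whose share of missing routes exceeds max_missing.

    expected maps route_id -> segment label (e.g. "urban", "rural").
    """
    totals = defaultdict(int)
    missing = defaultdict(int)
    for route_id, segment in expected.items():
        totals[segment] += 1
        if route_id not in observed_ids:
            missing[segment] += 1
    return {
        segment: missing[segment] / total
        for segment, total in totals.items()
        if missing[segment] / total > max_missing
    }
```

Checking per segment matters: a 5% gap concentrated in rural routes can hide inside a dataset that looks 97% complete overall.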

Finance & Risk

| Use Case | Prereqs | Risks |
| --- | --- | --- |
| Fraud Detection | Transaction histories, anomaly baselines | False positives, customer churn |
| Anomaly Alerts | Clean logs, known variance thresholds | Alert fatigue, missed real threats |
| Cashflow Modeling | Real-time expense and revenue data | Illiquidity blind spots |

Artificial intelligence solutions can identify potential risks long before a human sees them. Mastercard uses AI anomaly detection to monitor transactions in real time, which helps banks on its network block fraud attempts worth billions annually. The company has also drastically reduced false declines for legitimate purchases.

But AI in finance demands transparency. Opaque machine learning models can’t be allowed to “guess” their way through compliance audits. Without explainable outcomes and data lineage, you’re sitting on a regulatory problem. Bake in explainability at the start and document everything.
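Explainability is easiest when it’s designed in from the start. One common pattern is an additive (logistic) score where each feature’s contribution is visible, so every decision ships with reason codes an auditor can read. The weights and feature names below are hypothetical; in practice they would be fitted on your data and documented.

```python
import math

# Hypothetical fitted weights for a transparent fraud score.
WEIGHTS = {"amount_vs_avg": 1.5, "new_device": 2.0, "foreign_ip": 1.0}
BIAS = -3.0


def score_with_reasons(features: dict[str, float], top_k: int = 2) -> tuple[float, list[str]]:
    """Return a fraud probability plus the features that pushed it up the most."""
    contributions = {name: w * features.get(name, 0.0) for name, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    # Reason codes: only features that actually raised the score, largest first.
    positive = [name for name in contributions if contributions[name] > 0]
    reasons = sorted(positive, key=contributions.get, reverse=True)[:top_k]
    return probability, reasons
```

A more complex model can still be used, but then tools like SHAP are needed to approximate these per-feature contributions after the fact.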

Build the Oversight Layer

The artificial intelligence gold rush can feel like the Wild West. Models are moving faster than oversight can scale. Make sure your governance matures alongside your deployments so every AI-driven decision stays accountable and traceable.

Start with intentional design. Include bias testing, transparency benchmarks, and model review gates. Some teams are pushing for AI algorithms that reflect human values instead of distorting them. If your AI isn’t optimizing for the same goals your human teams are aiming for, you risk misalignment as the system scales.

Human-in-the-loop oversight isn’t just having technicians spot-check results. When models analyze data at scale, subtle errors creep in that only domain experts can spot. Engineers, clinicians, or risk officers need to be empowered to audit AI decisions and flag potential oversights. Embedding review into workflows prevents blind automation and grounds AI in context.
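Embedding review into the workflow usually comes down to routing rules that decide which AI outputs a domain expert must see. A minimal sketch, assuming each decision carries a model confidence and an impact label; the 0.9 cutoff and the rule that high-impact decisions are always reviewed are illustrative policy choices.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Decision:
    decision_id: str
    confidence: float  # model's confidence in [0, 1]
    impact: str        # "low", "medium", or "high"


@dataclass
class ReviewRouter:
    min_confidence: float = 0.9
    review_queue: list = field(default_factory=list)

    def route(self, decision: Decision) -> str:
        """Send high-impact or low-confidence decisions to a domain expert."""
        if decision.impact == "high" or decision.confidence < self.min_confidence:
            self.review_queue.append(decision)
            return "human_review"
        return "auto_approve"
```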

Every model impacting decisions needs explainability. Otherwise, you accrue risk you’ll pay off through bad press or even in court.

Legal, risk, and engineering teams must review all critical AI use cases. Use Estonia’s open-source approach as a model for scalable governance. It’s lightweight and it avoids the transparency trapdoor of over-promising ethics while delivering little.

The Real Costs of AI

Training a single large model like GPT-3 is estimated to have produced about 552 metric tons of CO₂e before it made a single prediction. That’s roughly what 120 typical gasoline passenger cars emit in a year, based on the U.S. EPA’s estimate of about 4.6 metric tons per car. As the AI arms race heats up, emissions from training large artificial neural networks are likely to grow. Sustainability needs to be factored into both vendor selection and infrastructure planning.
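For vendor and infrastructure comparisons, operational training emissions are commonly estimated as energy used multiplied by the carbon intensity of the grid that supplied it. Published estimates put GPT-3’s training run at roughly 1,287 MWh on a grid averaging about 0.429 kg CO₂e per kWh, which reproduces the 552-ton figure. This sketch ignores embodied hardware emissions and datacenter overhead unless you fold them into the energy input, and the low-carbon grid value in the example is hypothetical.

```python
def training_emissions_tco2e(energy_mwh: float, grid_kg_per_kwh: float) -> float:
    """Operational emissions in metric tons of CO2e.

    1 MWh = 1,000 kWh and 1 t = 1,000 kg, so kg/kWh is numerically equal to t/MWh.
    """
    return energy_mwh * grid_kg_per_kwh


if __name__ == "__main__":
    gpt3 = training_emissions_tco2e(1287, 0.429)   # published GPT-3 estimate
    cleaner = training_emissions_tco2e(1287, 0.1)  # same run on a hypothetical low-carbon grid
    print(round(gpt3), round(cleaner))
```

The comparison shows why region and provider choice matter: the same training run can differ several-fold in emissions depending on where it executes.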

When AI makes decisions that traditionally require human judgment (in areas like credit, healthcare, or HR), errors are expensive. One bias-laden resume screener can translate to a lawsuit. Poor model decisions can dismantle your brand. The hidden costs of weak AI ethics add up fast, especially in regulated environments.

To make AI applications work, you’ll need to invest in model observability and training pipelines. Skimp here, and you’re scaling noise instead of signal. Handling unstructured data alone can consume more time than building the model.

Many leaders underestimate the cost of retraining when switching tools. Proprietary automated systems with opaque logic can accrue technical debt that’s hard to escape. If your vendor won’t let you export and audit, you’re walking into a transparency trapdoor.

Good AI engineers aren’t just coders. They understand the social cost of automation and how to perform tasks responsibly. Closing this gap means upskilling, hiring, or partnering. All three cost real money.

Sidebar: Avoid These 3 Hidden Failure Modes

- High CapEx, low deployment: You spend big, but the model never launches.

- Metrics gamed by teams: Optimization targets drive the wrong behavior.

- Misalignment with company values: The system scales outcomes you didn’t intend.

Execution Blueprint for Engineering Leaders

Execution makes or breaks AI. The smart path is methodical. Start small and prove impact before you grow. By sequencing wins, embedding governance, and pressure-testing vendors, you build more durable systems. Follow these steps to implement AI in your operations:

- Start with the lowest-friction wins: Identify 1–2 high-impact, low-risk use cases where machine learning or natural language processing solves real workflow pain. Think: automating repetitive tasks, not moonshots.

- Spin up blended pods: Mix in-house teams with nearshore talent to build fast, ship lean, and avoid bloated headcount. Pair data analytics engineers with PMs who understand regulatory and business context.

- Bake in model controls early: Choose ML tooling that supports versioning, observability, and rollback. Regulators are increasingly unwilling to accept black-box systems.

- Govern every lifecycle stage: Add governance checkpoints from design to deployment. Avoid the governance gap by adopting checklists from open-source governance models and responsible AI frameworks.

- Pressure-test vendors: Audit for explainability, support, and integration, especially with data analysis workflows and your security stack. Look for proof of real-world data protection practices.

- Stay policy-aware: Track updates to the EU AI Act, U.S. Executive Orders, and local data laws. Use a policy sandbox to pilot new tools under governance guardrails.
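Versioning and rollback, from the model-controls step above, don’t require heavy tooling to reason about. This in-memory `ModelRegistry` is a hypothetical sketch of the bookkeeping a real registry service handles: register versions, promote one to live, and roll back to the previously promoted version.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelRegistry:
    """Tracks registered versions per model and a stack of promotions for rollback."""
    versions: dict[str, list[str]] = field(default_factory=dict)
    promotions: dict[str, list[str]] = field(default_factory=dict)

    def register(self, name: str, version: str) -> None:
        self.versions.setdefault(name, []).append(version)

    def promote(self, name: str, version: str) -> None:
        if version not in self.versions.get(name, []):
            raise ValueError(f"{name}:{version} is not registered")
        self.promotions.setdefault(name, []).append(version)

    def live(self, name: str) -> Optional[str]:
        stack = self.promotions.get(name, [])
        return stack[-1] if stack else None

    def rollback(self, name: str) -> str:
        """Retire the live version and return the one that is live again."""
        stack = self.promotions.get(name, [])
        if len(stack) < 2:
            raise RuntimeError(f"no earlier version of {name} to roll back to")
        stack.pop()
        return stack[-1]
```

Refusing to promote unregistered versions is the small rule that keeps every live model traceable to an artifact someone reviewed.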

When NOT to Use AI (And What to Use Instead)

We’re in a moment where AI is expected to solve everything from repetitive tasks to full-blown self-driving cars. But sometimes, adding AI only automates inefficiency.

If you can write a clear “if-this-then-that” rule, you don’t need deep learning. Use a rules engine. You’ll deploy in days instead of quarters. For example, routing service tickets based on issue type doesn’t need a neural net.
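The ticket-routing example fits in a few lines as an ordered rules table. The issue types and queue names below are made up for illustration; the first matching rule wins, and anything unmatched falls through to a default queue.

```python
from typing import Callable

# Ordered (condition, queue) rules; hypothetical issue types and queue names.
ROUTING_RULES: list[tuple[Callable[[dict], bool], str]] = [
    (lambda t: t.get("issue_type") == "billing", "finance-support"),
    (lambda t: t.get("issue_type") == "outage" and t.get("priority") == "p1", "on-call-sre"),
    (lambda t: t.get("issue_type") in {"login", "password"}, "identity-team"),
]
DEFAULT_QUEUE = "general-support"


def route_ticket(ticket: dict) -> str:
    """Return the queue for the first matching rule, or the default queue."""
    for condition, queue in ROUTING_RULES:
        if condition(ticket):
            return queue
    return DEFAULT_QUEUE
```

Every routing decision here is traceable to one readable rule, which is exactly the auditability a model would have to work hard to match.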

Noisy, sparse, or biased data? That’s not a model opportunity but a lawsuit waiting to happen. Predictive analytics only works when you can identify patterns across real, representative datasets. Until then, dashboards > models.

In high-stakes environments (finance, healthcare, compliance), opaque models increase audit risk. Regulators don’t care how clever your computer science is. They care whether humans can trace decisions. Use transparent analytics tools, not deep learning, when outcomes must be fully explainable.

Consider these alternatives, which can complement or replace AI depending on your use case:

- Rules Engines: Great for deterministic processes

- RPA (Robotic Process Automation): Best for high-volume repetitive tasks

- Dashboards + BI Tools: Ideal when decision-makers need clarity over complexity

Conclusion: Don’t Just Use AI

The artificial intelligence arms race won’t be won by those who deploy the most models. It’ll be won by those who deploy them wisely. Lead with machine learning where it delivers valuable insights, but temper that with structure. Every AI model needs human oversight to reduce black-box bias and avoid an alignment tax as it scales.

Want to deploy artificial intelligence that actually ships? BairesDev can help you move faster with machine learning frameworks, AI development services, algorithm checklists, and nearshore delivery models built for real-world AI systems.