It can be hard for a CTO to make sense of AI. On the one hand, we hear bold claims of extreme development speed and powerful replacements of existing systems and teams. Some leaders go as far as suggesting that they never plan to hire junior engineers again.

On the other hand, McKinsey reports that 90% of AI projects don’t make it out of pilot mode, and that most companies aren’t seeing bottom line impact from AI. On the development side, we hear that AI tooling might be making developers slower, even when they think it’s making them faster.

Where does that leave us as engineering leaders? You can’t waste your team’s time and money chasing solutions that won’t add value. Yet, ignoring the potential benefits of AI isn’t an option. Your professional responsibility and your CEO won’t let you.

I consider myself an AI moderate. When I’m in the room with business leaders, I’m usually the one saying, “AI isn’t magic.” When I’m in a room of engineers, I’m more often the one saying, “Actually, AI can be extremely useful.”

I don’t claim to be a world expert, but I’ve seen enough successes and failures to recognize some clear patterns in what makes AI work or fail inside companies.

AI Often Suffers from the “Hackathon Problem”

If you’ve ever participated in a hackathon, you’ve probably experienced something like this:

You pull together a small team to solve a problem quickly. You only have 48 hours, so you cut every corner imaginable. You don’t build with any kind of scalability or redundancy. You hard-code things that ought to be configurable. And somehow, you pull together a relatively impressive proof of concept that’s half software, half cardboard cutout. Then, you put together a demonstration, hold your breath, and miraculously your demo mostly works.

“This is great,” say stakeholders, “when can you roll it out?”

Your stomach turns as you consider the weeks and months you’ll need to actually build the robust, extensible version you would feel comfortable putting in front of users. In your excitement to solve the problem, you lost track of managing expectations.

Most AI-based solutions suffer from this problem to an even greater degree. LLMs are quick to produce something convincing, but reaching production-grade reliability requires far more investment. “The AI is right 96% of the time” may sound exciting, but explaining to your CEO and stakeholders that 1 in 25 customers will get a broken experience is a much harder sell.
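To make the scale problem concrete, here is a minimal Python sketch of the arithmetic behind “1 in 25.” It assumes a constant error rate per request, and the traffic volumes are hypothetical, not figures from any real deployment.

```python
# Rough arithmetic for the "96% accurate" claim at different traffic volumes.
# Assumes a constant error rate per request; the volumes below are hypothetical.


def expected_failures(accuracy: float, requests: int) -> int:
    """Expected number of broken experiences at a given accuracy and volume."""
    return round(requests * (1 - accuracy))


def one_in_n(accuracy: float) -> int:
    """Express the error rate as '1 in N' requests."""
    return round(1 / (1 - accuracy))


if __name__ == "__main__":
    print(f"At 96% accuracy, 1 in {one_in_n(0.96)} requests goes wrong.")
    for volume in (100, 10_000, 1_000_000):
        failures = expected_failures(0.96, volume)
        print(f"{volume:>9,} requests -> about {failures:,} broken experiences")
```

At a hypothetical 10,000 requests a day, a 4% error rate means roughly 400 customers a day get a broken experience.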

The biggest risk isn’t that AI won’t work; it’s that it will half-work, and you’ll scale broken experiences to thousands of customers. To succeed at deploying AI, you must understand what it’s good at and what it’s not.

LLMs Have Significant Limitations

For all their power, LLMs have some significant limitations that get in the way of delivering effective solutions. Understanding those limits enables you to make the most of their potential.

As has been extensively documented, even the most powerful LLMs are prone to hallucination at times. My own experience suggests that for every 9 tasks AI helps me with, it wastes my time on the 10th being confidently wrong about something. Any solution built on AI must have a solid approach to addressing this issue. Even if you solve for accuracy, you quickly run into scaling challenges.

There are meaningful limits to how much information an LLM can consider at once. These models can’t simply “read” an entire database or massive file and reason across it the way we might expect, because they have neither unlimited memory nor direct access to your data. They work within a context window: a fixed limit, measured in tokens, on how much text they can process in a single inference call.

Even frontier models such as GPT-5 and Claude Sonnet 4 cap out somewhere between a few hundred thousand and a million tokens (roughly a few thousand pages of text at most). That’s tiny compared to what’s in a typical database or multi-GB file.
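A back-of-the-envelope check shows the gap. The Python sketch below assumes the common rule of thumb of about four characters per token for English text; actual tokenizers vary, and the 1M-token window is simply the upper end of the range above.

```python
import math

# Rule of thumb (an assumption): about 4 characters of English text per token.
# Real counts depend on the tokenizer and the content, so treat this as an estimate.
CHARS_PER_TOKEN = 4


def estimate_tokens(num_chars: int, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Estimate how many tokens a text of num_chars characters would consume."""
    return math.ceil(num_chars / chars_per_token)


def fits_in_context(num_chars: int, context_tokens: int, reserved_for_output: int = 0) -> bool:
    """Check whether the text, plus room for the model's reply, fits in one call."""
    return estimate_tokens(num_chars) + reserved_for_output <= context_tokens


if __name__ == "__main__":
    one_gb = 1_000_000_000  # roughly one billion characters of plain ASCII text
    print(estimate_tokens(one_gb))             # 250000000
    print(fits_in_context(one_gb, 1_000_000))  # False: ~250x a 1M-token window
```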

I once had a product manager come to me, confused, after loading a CSV with 50,000 records into an LLM and asking it to produce some aggregate statistics. The model happily produced polished-looking charts and summaries. The problem was that they were complete nonsense: the model hadn’t actually processed all the data, only the fraction it could fit into its limited context.

The result was an answer that looked authoritative but was fundamentally broken, a stark reminder that without the right scaffolding, AI can’t be trusted to “just work” at scale. And even when the model does “understand” what you want, that doesn’t mean it will respond at the speed or cost you need.
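One way to build that scaffolding for the CSV case is to do the math in ordinary code and hand the model only the results to narrate. The Python sketch below is illustrative: the column names and the prompt wording are assumptions, and it stops at building the prompt rather than calling any particular LLM API.

```python
import csv
import io
import statistics
from collections import defaultdict


def summarize_orders(csv_file) -> dict:
    """Compute exact aggregates in ordinary code.

    The 'region' and 'amount' columns are hypothetical; adapt them to your data.
    """
    totals = defaultdict(float)
    amounts = []
    for row in csv.DictReader(csv_file):
        amount = float(row["amount"])
        amounts.append(amount)
        totals[row["region"]] += amount
    return {
        "row_count": len(amounts),
        "mean_amount": statistics.mean(amounts) if amounts else 0.0,
        "total_by_region": dict(totals),
    }


def build_summary_prompt(summary: dict) -> str:
    """Give the LLM only the precomputed numbers, which easily fit in context."""
    lines = [
        f"Rows analyzed: {summary['row_count']}",
        f"Mean order amount: {summary['mean_amount']:.2f}",
    ]
    for region, total in sorted(summary["total_by_region"].items()):
        lines.append(f"Total for {region}: {total:.2f}")
    return (
        "Write a short narrative summary of these precomputed statistics. "
        "Do not recompute or invent any numbers.\n\n" + "\n".join(lines)
    )


if __name__ == "__main__":
    sample = io.StringIO("region,amount\nnorth,10\nsouth,20\nnorth,30\n")
    print(build_summary_prompt(summarize_orders(sample)))
```

The model still writes the prose, but every number it sees was computed over all 50,000 rows rather than whatever slice fit in its context.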

If you’re working with AI, you must consider performance. LLMs are much faster than human authors at producing responses. Yet, compared to the latency of traditional software, they are positively sluggish.

They also run up a bill quickly: every request consumes paid compute, typically billed per token, whereas the marginal cost of traditional software grows far more slowly with usage. Performance efficiency and financial efficiency are also at odds; improving one typically comes at the cost of the other.
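Because LLM usage is usually billed per token, spend scales linearly with traffic and prompt size. The Python sketch below estimates a monthly bill; the request volume, token counts, and per-million-token prices are hypothetical placeholders, not any vendor’s actual rates.

```python
def monthly_cost(
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
    days: int = 30,
) -> float:
    """Estimate monthly spend for an LLM feature billed per token.

    Prices are in dollars per million tokens; plug in your provider's real rates.
    """
    requests = requests_per_day * days
    input_cost = requests * input_tokens / 1_000_000 * input_price_per_million
    output_cost = requests * output_tokens / 1_000_000 * output_price_per_million
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical workload and prices, for illustration only.
    base = monthly_cost(10_000, 2_000, 500, 1.00, 5.00)
    doubled = monthly_cost(20_000, 2_000, 500, 1.00, 5.00)
    print(f"${base:,.2f} per month")     # $1,350.00 per month
    print(f"${doubled:,.2f} per month")  # $2,700.00 per month
```

Doubling traffic doubles the bill, and longer prompts or longer answers push it higher still.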

Most of the Power of an AI Solution is in the Scaffolding

LLMs are powerful, but no quick fix is coming that will let them overcome their limitations at scale. The value of AI in real-world use cases comes from the surrounding architecture, which is where most of the real power lies.

There are a variety of investments you can make outside the model itself, some of which are well known and exhaustively written about. The simplest is prompt engineering: the practice of crafting and iterating on the actual instructions you give an LLM. Retrieval-augmented generation (RAG) is another example. It uses traditional programmatic queries and function calls to retrieve relevant data, then supplies that data to the model as part of its input. This approach lets the model work from real data when handling user requests, greatly improving accuracy and reducing hallucinations.
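To make the RAG idea concrete, here is a deliberately simplified Python sketch. It ranks documents by word overlap with the question, an assumption standing in for the search index or vector embeddings a production system would typically use, and builds a prompt that grounds the model in the retrieved text. The document contents and function names are hypothetical, and the call to an actual LLM is left out.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for real search or embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return the ids of the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    scored = []
    for doc_id, text in documents.items():
        overlap = len(query_words & tokenize(text))
        if overlap:
            scored.append((overlap, doc_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:k]]


def build_rag_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Insert the retrieved documents into the prompt so the model answers from real data."""
    ids = retrieve(query, documents, k)
    sources = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in ids)
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    docs = {
        "refunds": "Refunds are issued within 14 days of a return.",
        "shipping": "Standard shipping takes 3 to 5 business days.",
    }
    print(build_rag_prompt("How many days until refunds are issued?", docs, k=1))
```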

There’s work to be done in selecting the right model and tuning it for correctness, performance, and cost efficiency. You may need to introduce additional models or prompts to reach the outcome you want. Often there’s further work in shaping the model’s output format and vocabulary so it integrates cleanly with the rest of your systems. You may even need to customize the model itself (for example, through fine-tuning), which is harder and more costly than working purely at inference time. And of course, you must identify common failure cases and build guardrails so that even when the AI makes mistakes, your product still delivers a good final result.
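Output shaping and guardrails can start small. The Python sketch below assumes a hypothetical ticket classifier whose prompt asks for a JSON object with a “category” field; the label set and routing names are illustrative. Instead of trusting whatever the model returns, the code validates it and falls back to human review when the output is malformed or off-list.

```python
import json

# Hypothetical label set for a support-ticket classifier.
ALLOWED_CATEGORIES = {"billing", "shipping", "technical", "other"}


def apply_guardrail(raw_output: str) -> dict:
    """Validate an LLM's raw output before the rest of the system trusts it.

    Anything that is not a JSON object with an allowed "category" string is
    routed to a human instead of being passed downstream.
    """
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return {"category": None, "route": "human_review", "reason": "output was not valid JSON"}
    if not isinstance(data, dict):
        return {"category": None, "route": "human_review", "reason": "expected a JSON object"}
    category = data.get("category")
    if not isinstance(category, str) or category not in ALLOWED_CATEGORIES:
        return {"category": None, "route": "human_review", "reason": f"unexpected category {category!r}"}
    return {"category": category, "route": "automated", "reason": "passed checks"}


if __name__ == "__main__":
    outputs = ('{"category": "billing"}', "Sure! This looks like billing.", '{"category": "refunds"}')
    for output in outputs:
        print(apply_guardrail(output)["route"])
```

The point is less the specific checks than the shape: the model’s mistakes land in a known, safe path instead of reaching the customer.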

All of that is to say, if you see an AI-based solution that performs well consistently at scale, it’s not because AI is magic. It’s the result of meaningful, deliberate investment in the specific use cases it’s solving. So, where does that leave us?

A Disciplined Approach to Where and How We Apply AI

For engineering leaders, the next step isn’t to throw up our hands, nor is it to blindly chase the latest demo. We must take a disciplined approach to where and how we apply AI. Broadly, most opportunities for AI fit into three general categories: AI developer tooling, AI-based vendor solutions, and homegrown AI projects. Each requires thoughtfulness to deliver lasting value rather than flashy prototypes.

AI Developer Tooling

These tools often have the lowest barrier to entry but the highest potential for misuse. Code generators, test generators, and AI-augmented IDEs can be powerful ways to reduce toil and improve efficiency, but only if applied carefully. The key is to treat them as accelerators, not replacements.

Don’t expect 50% gains overnight. A variety of factors will affect how much speed you’ll gain (or lose). Prototypes and greenfield projects typically involve a lot of boilerplate that AI will speed through, but integrations with vast, poorly documented legacy systems can turn into a quagmire.

Junior engineers may gain speed and confidence by having an AI assistant, but they can quickly develop bad habits if they rely on the tool to do their thinking and planning. Senior engineers often get better leverage because they know what to ask for and typically know what to check in their results to be confident in what they’re delivering.

You’ll want to be thoughtful and careful as you roll these tools out. Don’t push with broad mandates. Instead, make it easy for people to leverage tools and understand how to use them wisely. We have a rule on my team: You can use AI to do anything you like, but at the end of the day, you are accountable for every line of code and change you push. We never accept “the AI said so” as a rationale for doing anything.

AI-Based Vendor Solutions

If you’re a technology leader, I don’t have to tell you there’s a sea of new AI-based vendors. You hear from them every day through cold emails, LinkedIn pitches, or stakeholders who met a salesperson at an event promising a product that can “totally solve your problem.” Some of these solutions are truly powerful. But for every one that delivers, many more are simply ambition wrapped around an LLM, served on a heaping bed of hype.

When evaluating a vendor, the real value lies in how deeply (and how specifically) they’ve done the work to apply AI to your use case. Any analysis should start with a sober assessment of how closely their product aligns with your actual needs.

Let’s take AI voice agents for call centers as an example. Some are genuinely strong. However, unless a product has been tuned to the exact functionality and workflows your call center requires, it won’t perform well. At best, you’ll find yourself stuck in a long “co-development” cycle, effectively training the vendor’s product for them before it ever delivers reliable value to you.

If the solution is a good fit for your purpose, your next task is to get past the demo. Dig into whether enough engineering work has gone into making the system reliable in production by asking:

- Does the system have strong guardrails, monitoring, and integration points into your stack?

- Can it provide the customizability to be a high-value part of your technology ecosystem for the next 5 years?

- What happens when the model fails?

- What were the hardest parts of productizing their work?

In my experience, the best vendors share more information because they’re proud of what they’ve built and know it would be hard to replicate. Conversely, if someone hand-waves too much about their technology being “proprietary,” or just keeps repeating buzzwords, there usually isn’t much real engineering substance behind it.

Internal AI Projects

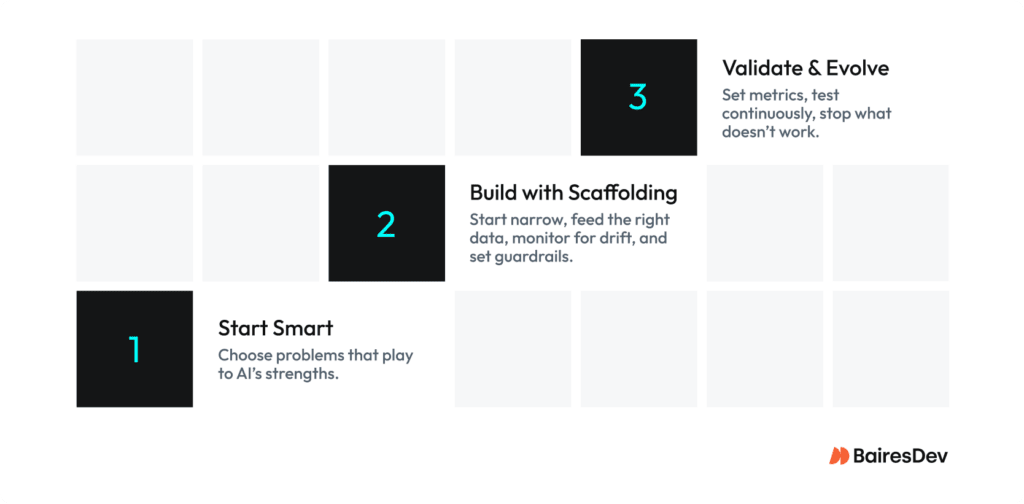

Internal AI projects, where you build bespoke AI solutions to your own business problems, are the highest-risk, highest-effort endeavors, but they can produce the greatest reward. You must be very careful to manage expectations, because this is where the hackathon problem rears its head worst of all. Here are three basic steps your internal projects should follow:

Step 1: Choose the Right Problems for AI

When evaluating an opportunity for an LLM-based solution, first do a realistic analysis as to whether the strengths and weaknesses of the technology make it a likely win for the problem you’re solving. LLMs are great at things like parsing unstructured data, summarization, text generation, or lightweight classification. They’re far less reliable when asked to act as databases, perform precise arithmetic, or produce legally/auditably correct outputs without oversight.

There’s often a lot of political pressure to use AI, when in fact many of our problems are still better solved with traditional software. In many cases, traditional software still outperforms AI solutions, offering lower latency, higher predictability, and lower cost.
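Often the best design is traditional code first, with AI only where it adds something. The Python sketch below illustrates that routing for a hypothetical task of pulling an order ID out of a customer message: a regular expression (the ID format is an assumption) handles the common case with no model latency or per-call cost, and an optional, caller-supplied LLM function is used only as a fallback.

```python
import re
from typing import Callable, Optional, Tuple

# Hypothetical order-ID format, e.g. "AB-123456".
ORDER_ID = re.compile(r"\b[A-Z]{2}-\d{6}\b")


def extract_order_id(
    message: str,
    llm_fallback: Optional[Callable[[str], Optional[str]]] = None,
) -> Tuple[Optional[str], str]:
    """Try a cheap, predictable rule first; use an LLM only when the rule fails.

    Returns (order_id, source), where source records which path produced the answer.
    """
    match = ORDER_ID.search(message)
    if match:
        return match.group(0), "rule"
    if llm_fallback is not None:
        return llm_fallback(message), "llm"
    return None, "unresolved"


if __name__ == "__main__":
    print(extract_order_id("Where is order AB-123456?"))  # ('AB-123456', 'rule')
    print(extract_order_id("It was the blue jacket I ordered last week"))  # (None, 'unresolved')
```

Recording which path answered also tells you how often you are paying for the LLM at all, and anything the fallback returns should still pass validation before it is trusted.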

Step 2: Build with Discipline and Scaffolding

If you have found a good use case, discipline is everything. Start with a narrow, high-value workflow rather than a broad platform goal. As with traditional software development, it’s far better to succeed on a slice of a process than to overpromise ambitious, end-to-end automations that collapse under the weight of their own complexity.

Your success here depends on your investment in the scaffolding: retrieving the right information to feed your model, constraining the generation to meet your needs, monitoring to track failures and drift, and implementing high-quality guardrails that keep your system behaving. Without such scaffolding, your demo will quickly deteriorate under real-world usage.
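Monitoring for failures and drift can begin as simply as tracking a rolling failure rate. The Python sketch below is one minimal way to do that, not a prescribed design; the class name, window size, and alert threshold are assumptions you would tune to your own traffic and tolerance for errors.

```python
from collections import deque


class FailureRateMonitor:
    """Track the share of recent requests that failed your checks.

    "Failure" is whatever your guardrails flag (e.g. malformed output, a human
    override); the window size and threshold are illustrative assumptions.
    """

    def __init__(self, window: int = 500, threshold: float = 0.05):
        self.outcomes: deque = deque(maxlen=window)
        self.threshold = threshold

    def record(self, passed: bool) -> None:
        self.outcomes.append(passed)

    def failure_rate(self) -> float:
        if not self.outcomes:
            return 0.0
        return self.outcomes.count(False) / len(self.outcomes)

    def should_alert(self) -> bool:
        # Only alert once the window is full, so a few early failures don't page anyone.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.failure_rate() > self.threshold


if __name__ == "__main__":
    monitor = FailureRateMonitor(window=10, threshold=0.2)
    for passed in [True] * 7 + [False] * 3:
        monitor.record(passed)
    print(monitor.failure_rate(), monitor.should_alert())  # 0.3 True
```

A rate that creeps upward over weeks is often the first visible sign of drift, long before anyone files a bug.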

Editor’s note: At BairesDev, our AI engineering teams follow this principle closely, starting with small, high-impact workflows and expanding only once stability and measurable ROI are proven. This disciplined approach prevents the “demo-to-disaster” pattern many AI pilots fall into.

Step 3: Measure, Validate, and Adapt

Finally, don’t forget the best practices you apply to any project.

- Define clear goals and success metrics up front.

- Validate at every step of the way whether there’s measurable value.

- Don’t be afraid to pull the plug on experiments that aren’t working, instead of spending months trying to “rescue” them because of the sunk-cost fallacy.
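The first two practices can be made concrete with a fixed, labeled test set and a target agreed on before work starts. The Python sketch below assumes exact-match scoring, which suits classification-style tasks; free-form text generation would need a different scorer. The names, test cases, and threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class EvalResult:
    accuracy: float
    meets_target: bool


def evaluate(
    system: Callable[[str], str],
    labeled_cases: List[Tuple[str, str]],
    target_accuracy: float,
) -> EvalResult:
    """Score a system against labeled examples and a success bar set up front."""
    if not labeled_cases:
        raise ValueError("need at least one labeled case")
    correct = sum(1 for text, expected in labeled_cases if system(text) == expected)
    accuracy = correct / len(labeled_cases)
    return EvalResult(accuracy=accuracy, meets_target=accuracy >= target_accuracy)


if __name__ == "__main__":
    # A stand-in "system" and a tiny labeled set, both hypothetical.
    def keyword_classifier(text: str) -> str:
        return "billing" if "invoice" in text.lower() else "other"

    cases = [
        ("My invoice is missing", "billing"),
        ("The app crashes on login", "technical"),
        ("Invoice total looks wrong", "billing"),
        ("Just saying hello", "other"),
    ]
    print(evaluate(keyword_classifier, cases, target_accuracy=0.9))
```

Re-running the same evaluation after each change gives you an objective signal for the third practice: if the numbers stay below the target after a reasonable effort, that is a case for pulling the plug rather than rescuing the experiment.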

Ultimately, Generative AI is Neither Magic nor Useless

GenAI isn’t going to save your company overnight, but it’s also not a fad that’s about to blow over.

If you’re a skeptic, beware: refusing to engage with AI is an abdication of your role as a technology leader. If you’re a cowboy, slow down. Every shiny demo that half-works in a contrived scenario can break at scale, costing you time, money, and credibility.

The future belongs to leaders who can walk the middle path: building the scaffolding and delivering real, durable value.