Imagine your team ships a note summarizer to reduce documentation time. In the first week, a resident copies the output into a chart. A missed medication allergy almost slips through, and a patient’s name appears in a usage log. Nothing catastrophic happens, but everyone feels it. Trust takes a hit, and rollout pauses.

Healthcare needs the gains from modern language systems powered by machine learning, deep learning, and natural language processing, but it can’t tolerate brittle processes.

This guide offers an operating model for deploying LLM and RAG systems in clinical settings that depend heavily on electronic health records, patient data, and clinical decision support. It focuses on PHI boundaries, retrieval quality, evaluation gates, and human oversight so you can deliver measurable value without creating compliance or reliability debt.

The Stakes: Why Healthcare ML Requires a Different Playbook

Healthcare ML operates under asymmetric risk. A minor hallucination in a marketing bot wastes time. A similar failure in a discharge summary can cause real harm. Systems must respect PHI rules, earn clinical trust, and produce an audit trail that stands up to review.

The tension is simple. You need automation to reduce the burden and improve access. You also need predictable behavior, clear boundaries, and a plan for when things go wrong. The task at hand is translating that tension into concrete design patterns and go-live gates that make deployment safe and repeatable for healthcare professionals, data scientists, and healthcare organizations alike.

PHI Boundary Patterns: Where Data Lives and How It Moves

Every healthcare ML system makes architectural choices about where patient data lives, how it moves, and who can access it. These choices shape your risk profile, compliance posture, and operational complexity. The key decision is between inference-only architectures that never store PHI and retention-permitted pathways that keep data for retrieval and model improvement.

Inference-Only vs Retention-Permitted Pathways

Inference only means PHI flows through the model and disappears. Patient data comes in, the model processes it, outputs return to the clinician, and nothing persists beyond what you need for immediate audit. Retention permitted means you store PHI in vector databases—sometimes used to process unstructured data and analyze data for machine learning applications—to support retrieval or improvement of machine learning algorithms and deep neural networks over time.

| Dimension | Inference Only | Retention Permitted |

| PHI Storage | None except temporary cache | Stored in vector indexes, logs, training data |

| Common Uses | Real-time decision support, patient chat, live note summarization | Knowledge retrieval across cases, quality analytics, model fine-tuning |

| Primary Risk | Limited model improvement, no historical context | Larger breach surface, re-identification exposure |

| Control Requirements | Input validation, output filtering, minimal logging | Encryption, access policies, audit trails, automatic data purging |

| Improvement Strategy | Prompt tuning, model version upgrades | Retrieval optimization, fine-tuning on corrections, feedback loops |

Choose inference only when your task doesn’t need cross-patient retrieval or learning from past encounters. A triage assistant scoring the current patient’s vitals doesn’t need access to last month’s cases.

Choose retention permitted when value comes from corpus memory. A clinical knowledge system answering “show me similar presentations” requires stored historical data.

Redaction, Obfuscation, and Differential Access

Even within retention systems, you can limit PHI exposure through technical controls applied before data reaches retrieval or inference pipelines. The key is gating every layer so PHI exposure is limited long before retrieval:

- Redaction strips identifiers before indexing. Names, medical record numbers, phone numbers, and addresses all get removed. Use structure-aware rules that preserve clinical meaning. A salted token can replace each identifier to maintain linking across notes.

- Obfuscation replaces real identifiers with pseudonyms or tokens within processing systems. If a workflow must resolve back to identity, make that a separate authorized action with its own access log.

- Differential access enforces role-based policies. Most staff see masked records by default. Break-glass access requires a documented reason and triggers post-hoc review.

Apply these rules consistently at ingestion, indexing, and output. Without strict data governance and auditing, models can still leak patient-level information despite de-identification efforts. In other words, don’t rely on a model to hide PHI you’ve already exposed upstream.

Data Residency and Secrets Management

Architectural safety extends beyond your own infrastructure. For health systems operating across regions, ensure your cloud and model providers guarantee data residency. For example, keeping PHI processing within specific legal jurisdictions (e.g., US-only zones) to satisfy sovereignty requirements.

Internally, enforce strict secrets hygiene: encrypt vector stores at rest and in transit, and manage API keys through dedicated vaults rather than environment variables.

If a key leaks, you must be able to rotate it instantly without redeploying the application.

Data Contracts and Access Controls

Formalizing PHI boundaries requires solid data contracts with explicit schemas defining which fields contain PHI and how they’re classified. These boundaries matter not just for engineers, but for medical professionals, healthcare providers, and health systems integrating these tools into real clinical practice.

These controls aren’t theoretical.

During clinical sign-off, physicians validate that PHI boundaries hold under real-world conditions, including edge cases in complex data or odd formats common in healthcare data. If the boundaries aren’t defensible during these tests, the system doesn’t go live.

Retrieval Quality and Lineage: RAG Done Right for Clinical Workflows

Healthcare teams won’t trust a RAG system that can’t explain where its answers come from. This means any retrieval system, regardless of how accurate it seems, is DOA without auditability and transparency. From chunking to guardrails, every design decision needs provenance that supports clinical review and audit.

Chunking Strategies Tied to EHR Structure

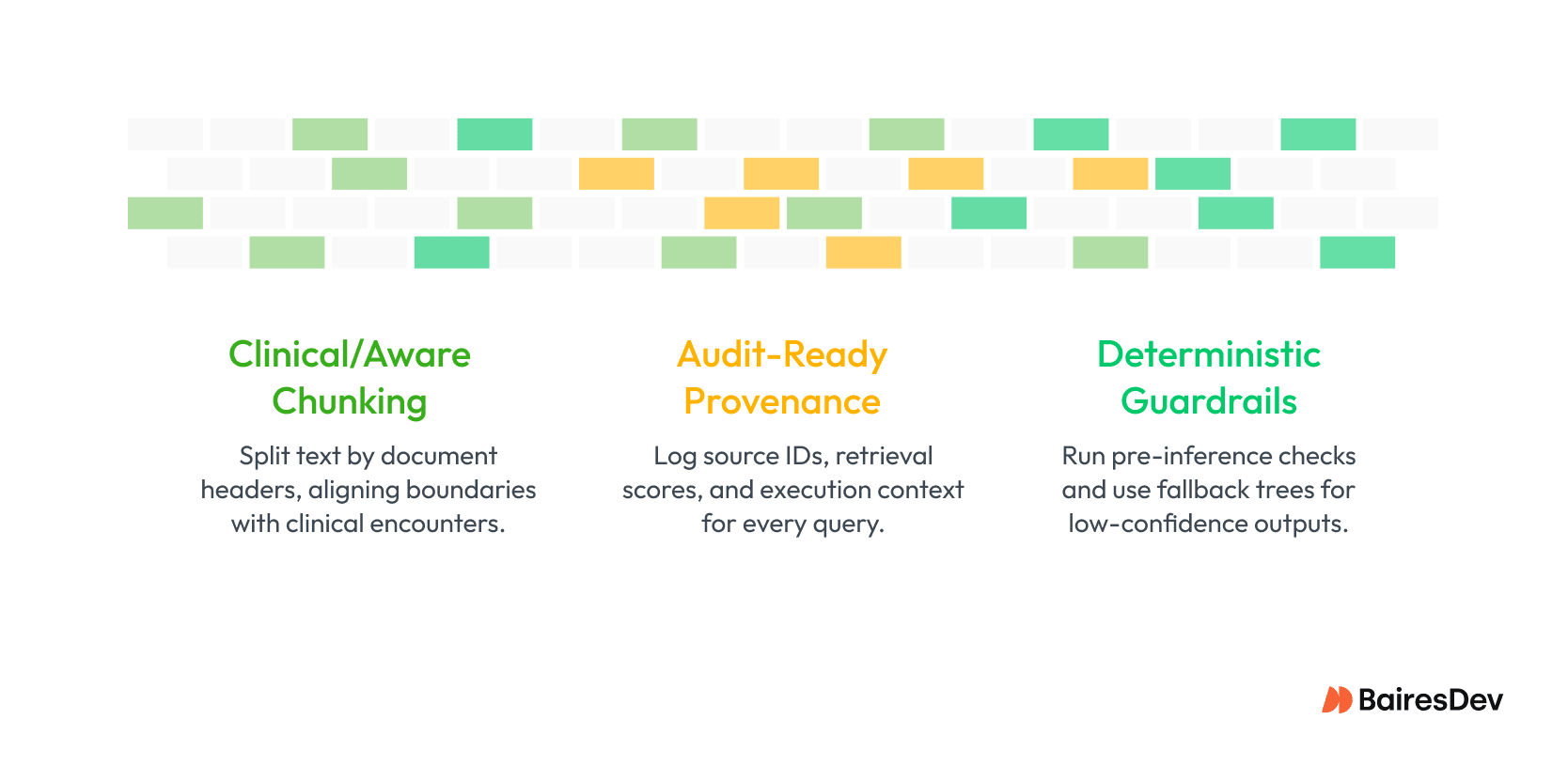

Chunking isn’t straightforward in any domain. Clinical documents, however, are shredded with basic chunking approaches. Progress notes, discharge summaries, lab results, radiology reports, medication lists: cut these in half and you lose all coherence. A section-based approach that respects document structure preserves clinical coherence.

It’s not a data science problem. It’s a clinical workflow problem. The right granularity depends on clinicians’ use. A triage assistant needs quick access to chief complaint and vital signs, so those should be separate, high-priority chunks. Conversely, a chronic disease management tool needs longitudinal context, so seams should align with encounter boundaries and retain temporality.

Provenance, Citations, and Evidence Logging

Clinicians need to know exactly where an answer came from before they rely on it. Provenance is the backbone of a trustworthy RAG system because it makes every retrieval step visible and reviewable. When a system logs its trail clearly, you can defend decisions, investigate issues, and give clinicians the context they expect at the point of care.

Key pieces of provenance to capture:

- Source details

- Retrieval signals

- Execution context

- Clinician-facing citations

This level of tracking does more than satisfy audit needs. It tells you whether a bad output came from poor retrieval or faulty generation, which guides your fix. It also drives improvements by showing what clinicians click, ignore, or override. When patterns emerge, the reliability of your retrievals goes up, along with trust in the system.

Guardrails for Hallucination Control

The only thing worse than LLM hallucinations are LLM hallucinations in a clinical context. Retrieval helps keep outputs grounded, but models can still go off track. That’s where guardrails come into play.

Answerability checks test whether a request is relevant before passing it to the model. If the top-ranked chunks don’t meet a confidence threshold, or if the query is too vague, the system kicks the request back. Fallback trees can catch those flagged requests and pass them to a human for review. Abstention patterns flat out refuse with an “I don’t know” rather than using the model’s imagination to answer.

Guardrails are baked in at the orchestration layer, before and after inference. This makes them portable across model versions and, better still, all providers. When we discuss human oversight later, you’ll see how they integrate with clinician workflows to maintain control over high-risk decisions.

Evaluation: The Offline-to-Online Ladder for ML Safety

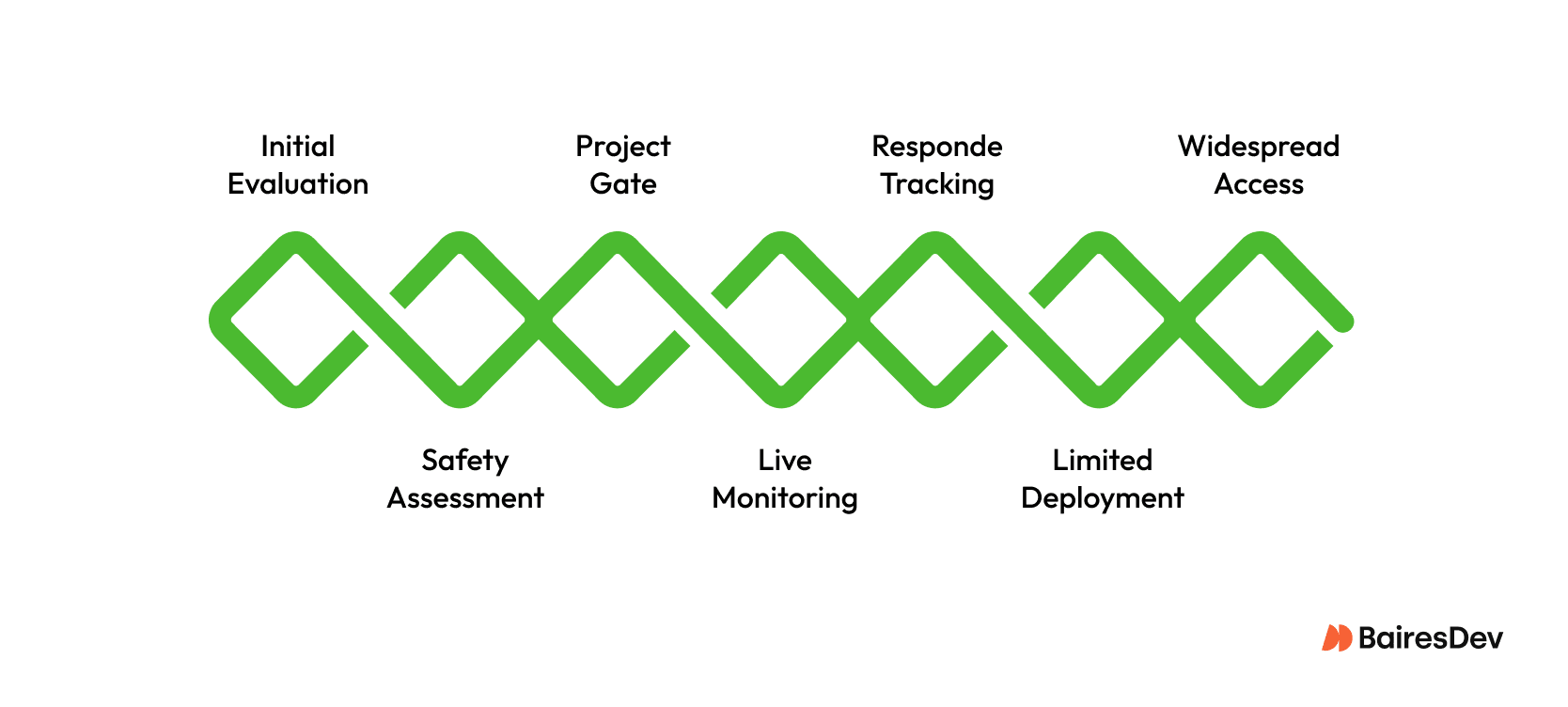

Every successful LLM deployment in the healthcare sector has likely followed a similar path to production. And that path is defined by evaluation. No AI-powered system is ready until engineers and clinicians alike have poked, prodded, kicked, and twisted every surface of it.

- Start with offline tests that look like real cases. Mix in summaries, Q&A, coding, billing, and triage. Add weird charts, ambiguous notes, and adversarial prompts. See if you can induce hallucinations or get the model to offer unsafe suggestions.

- Ask clinicians to grade outputs: not just right or wrong, but harmless or dangerous too. Wrong and dangerous kills a deployment.

- Use these results to decide if the model even deserves a shot in production. If it fails basic tasks or leaks sensitive info, scrap the project.

- Next, run it in live with “shadow” mode, where it answers the same questions as clinicians, but doesn’t surface them. Later, clinicians can review them to determine their usability.

- While you do that, track how people are responding. How often do clinicians override the model? Do the outputs save time? Does it choke on messy copy-paste text?

- If clinicians say they trust it, move to a small canary rollout: keep it to one team or scoped to one use case. Examine error rates, overrides, latency, and any hint of patient harm.

- Only when the canary hits targets should you slowly widen access. If it slips, roll it back and fix it first.

While not always easy, the goal is simple: you want a system that earns clinician trust and improves care when it meets the chaos of production. Because even under ideal conditions, modern LLMs have non-zero factual error rates. Imagine what those error rates would be without evals.

Operations and Governance: Running ML Systems at Clinical Scale

You do not get a safe, clinical scale system by writing a nicer prompt. You get it by treating the model like any other critical system in a hospital. It needs eyes on it and a mechanism to sever connections instantly. Implement a hard “kill switch” that disconnects the model from clinical workflows without a code deployment.

If you detect a prompt injection attack or a spike in hallucinations, operations teams must be able to revert to manual processes in seconds. Speed is your primary containment strategy.

- Track what matters to clinicians, not just engineers. Measure end-to-end latency from click to answer, how often the system fails, why it fails, and how much each request costs.

- Break failures into clear buckets. Retrieval issues. Timeouts. Guardrails that block too often. Upstream EHR glitches. You cannot fix what you lump together.

- Put numbers where everyone can see them. Engineers get detailed logs to debug. Clinicians get simple dashboards showing task success, override rates, and hints of patient impact.

- After any serious incident, run a proper review. Ask what failed, why guardrails did or did not help, and what tests or alerts would have caught it earlier.

- Watch for slow change over time. If patient mix, input style, or clinical guidelines move, output can drift or lag behind.

- Bias toward stability over shiny new models. In healthcare, boring and predictable beats clever but fragile every time.

Secure the ML Supply Chain

Treat your model artifacts like any other software dependency.

Implement a Software Bill of Materials (SBOM) for all ML components, pinning specific versions of model weights, tokenizers, and libraries. If a vulnerability is discovered in an open-source library or a model provider deprecates a version, an SBOM allows you to identify exposure immediately. In healthcare, you cannot afford to wonder which version of a model is currently reading patient charts.

The goal is not just to keep the service running. It is to make sure that every change, outage, and upgrade happens in a way that protects patients first and keeps clinicians confident that the system is on their side.

Integration and Interoperability: Connecting ML to Clinical Practice

The tricky part of healthcare ML is rarely the model. It is persuading the EHR, imaging systems, and workflow owners to play nicely together. Real progress happens when the tech fits the organization, the interfaces stay stable, and everyone understands who owns what. Durable standards matter, but so does patience.

Connecting to EHRs and Medical Imaging Systems

Clinical data sits in a patchwork of systems with their own formats and rules. FHIR gives you a clean path for structured data, but coverage is uneven. HL7 still moves a lot of real-time events, though it can feel cranky and fragile. Direct database access fills in the gaps, but it also creates brittle coupling when vendors change schemas. Most real deployments blend all three. The hard part is not wiring them up; it is getting IT, compliance, and clinicians aligned on what access is allowed and how workflows will use it. That is where diplomacy does more than code.

Be aware that direct database access often bypasses the application-layer audit logging and access controls built into standard FHIR or HL7 interfaces. If you choose this path for speed, you are responsible for rebuilding those security layers to maintain compliance.

Keeping Retrieval, Orchestration, and Application Separate

It sounds obvious to keep retrieval, orchestration, and UX separate, yet this pattern saves you every time the stack evolves. A clean boundary lets you swap vector stores or model providers without rewriting half the system. It also gives you clearer testing lines, since you can evaluate retrieval quality, guardrail behavior, and end-to-end flow independently.

The point is simple. Good architecture buys you room to grow, room to adapt, and a calmer future when the rest of the system refuses to sit still.

From Pilot to Production, Safely

Bringing machine learning into healthcare isn’t a choice between moving fast or staying safe. The point is to design systems that do both.

That means setting clear boundaries for sensitive data, tuning retrieval so it reflects real clinical structure, keeping audit trails intact, and using evaluations that tie technical results to patient impact. Clinicians stay in control when the tools surface evidence clearly and explain their reasoning. Strong operations and governance keep everything reliable as you scale.

These ideas are flexible. Different teams, regulations, and workflows call for different setups, but the core principles stay the same: safety, clear oversight, and trust. As models improve and the healthcare industry shifts, revisit your approach so your systems grow with the field.

The potential here is big, from better diagnoses to lighter workloads and more personalized care. The risks are real, too, which is why these patterns exist. They offer a practical path from early experiments to dependable production systems that unlock the benefits without inviting harm.