Everyone we know is experimenting with AI in one form or another. Chatbots that summarize tickets, assistants that review code, agents that triage support issues. It’s all happening. Most teams we talk to have at least a few models running in test environments or tucked into internal demos.

But while pilots look promising, actually integrating AI into day-to-day operations still feels painfully manual. Every new model seems to require another round of bespoke connectors, internal API wrangling, and security exceptions. What starts as an optimistic proof of concept quickly turns into a maintenance headache.

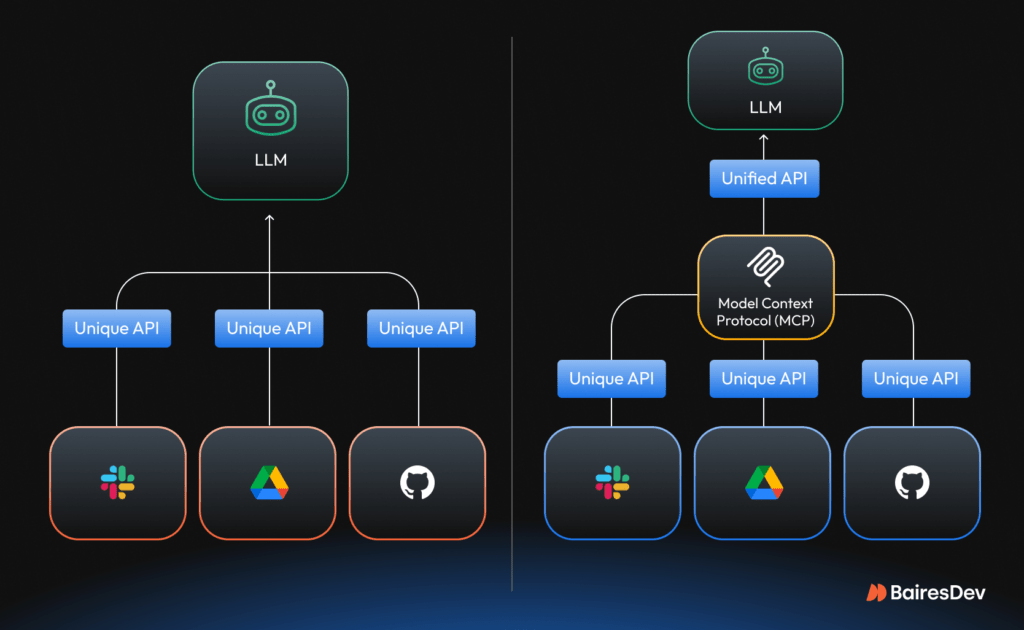

This isn’t a model problem. It’s an integration problem, and it scales poorly. Every connection between a model and an internal system becomes its own custom build. Multiply that across tools and platforms, and you’re quickly buried under a web of one-off integrations, each with unique auth flows, data mappings, and failure points.

It adds up fast. Engineering teams get bogged down in glue code, and security teams scramble to review it. And finance? They’re wondering why your AI budget is going to infrastructure, not impact.

Worse, this patchwork can’t meet emerging compliance standards. The EU AI Act, for example, requires traceability and oversight, something ad hoc scripts just don’t provide.

So, it’s no surprise that many AI projects stall post-demo. Not because the model failed, but because the integration couldn’t keep up.

The Model Context Protocol: A Practical Fix

That’s where MCP (Model Context Protocol) comes in. By now, you have probably heard of it. It’s an open protocol quietly gaining momentum as a practical fix to this AI integration problem. One of our AI experts shared that, from his point of view, MCP lets teams focus on building robust implementations instead of worrying about how to integrate different tools. It provides a single interface that connects multiple services, enabling plug-and-play deployments.

Let’s take a step back and remember how MCP entered the stage. MCP was introduced by Anthropic in late 2024, after their team realized that their Claude models needed a cleaner, more scalable way to interact with tools and data sources. Rather than keep the approach proprietary, they open-sourced it. Within a few months, major players like Microsoft, AWS, and Atlassian began incorporating it into their products and reference architectures.

Think of MCP as USB-C for AI. It gives AI systems a standardized way to talk to enterprise tools without every connection becoming a one-off build.

Here’s the basic model:

- An AI-powered system acts as the MCP client.

- Internal systems (like your CRM, ERP, or database) expose lightweight MCP servers: adapters that translate requests into real actions.

- The client sends structured JSON requests that name a tool (e.g., get_open_tickets or update_customer_status) and supply its arguments, and the server handles the rest, such as authentication, data retrieval, and trace logging.

Each MCP server provides a manifest, a kind of menu that tells the AI which tools are available, what inputs they require, and how they respond. The AI uses that information to craft structured calls, while each interaction is logged for traceability and auditing.
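As a rough illustration of the manifest and the structured call, the sketch below uses the JSON-RPC 2.0 message shape that MCP builds on, with the `tools/call` method and a tool described by `name`, `description`, and `inputSchema`. The `get_open_tickets` tool definition, its `status` and `limit` parameters, and the `build_tool_call` helper are illustrative assumptions, not part of any real server.

```python
import json

# Hypothetical manifest entry, shaped like what a server might return for a tools/list request.
TOOL_MANIFEST = [
    {
        "name": "get_open_tickets",
        "description": "Return open support tickets, newest first.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "status": {"type": "string"},
                "limit": {"type": "integer"},
            },
            "required": ["status"],
        },
    }
]


def build_tool_call(request_id, tool_name, arguments, manifest=TOOL_MANIFEST):
    """Build a JSON-RPC 2.0 'tools/call' request after a basic manifest check."""
    tools = {tool["name"]: tool for tool in manifest}
    if tool_name not in tools:
        raise ValueError(f"unknown tool: {tool_name}")
    schema = tools[tool_name]["inputSchema"]
    # Only checks that required keys are present; a real client would validate types too.
    missing = [key for key in schema.get("required", []) if key not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


request = build_tool_call(1, "get_open_tickets", {"status": "open", "limit": 5})
print(json.dumps(request, indent=2))
```

The manifest check is the point of the sketch: because the server describes its tools up front, the client can reject a malformed call before anything reaches the underlying system.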

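It means that instead of building a custom connector for every pairing of model and tool, you build one client per AI application and one server per system. With M applications and N systems, that is M + N components instead of M × N connectors. From multiplicative mess to linear sanity.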
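The arithmetic behind that claim is easy to check. The two helper functions below are hypothetical names introduced only for this illustration; they compare one connector per application-system pair against one MCP client per application plus one MCP server per system.

```python
def point_to_point_connectors(ai_apps: int, systems: int) -> int:
    """One custom connector for every (application, system) pair."""
    return ai_apps * systems


def mcp_components(ai_apps: int, systems: int) -> int:
    """One MCP client per application plus one MCP server per system."""
    return ai_apps + systems


for apps, systems in [(3, 3), (5, 10), (10, 20)]:
    print(
        f"{apps} apps, {systems} systems: "
        f"{point_to_point_connectors(apps, systems)} custom connectors "
        f"vs {mcp_components(apps, systems)} MCP components"
    )
```

With three applications and three systems the gap is modest (nine versus six), but it widens quickly as either side grows.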

Just as REST and GraphQL define how data moves through web APIs and SMTP governs email, MCP standardizes how AI models interact with tools. One of our experts compares it to The Matrix: “It’s like loading a new skill through a floppy disk. Suddenly, your app knows how to handle finance queries or support tickets without rebuilding from scratch.” That’s the power of a shared specification. Developers don’t need to know every server in advance. If both sides speak MCP, they can plug in and start exchanging context right away.

What MCP Looks Like in Practice

Imagine you’re the CEO of a growing mid-size finance company. Your team just rolled out an AI-powered internal assistant to help with operational questions. Nothing crazy, just a model that can answer things like “What’s our current burn rate?” or “How did Q3 collections compare to Q2?”

Today, getting those answers means toggling across NetSuite, your internal analytics dashboard, and a spreadsheet updated manually by operations every Friday. So the assistant stalls out. It either can’t access the data or requires three brittle API connectors just to answer one question.

Now, picture the same setup using MCP. Your dev team configures the assistant as an MCP client. They then expose each data source through its own MCP server:

- One wraps your NetSuite API.

- Another interfaces with your internal financial database.

- A third pulls from your ops team’s reporting dashboard.

The next time someone asks, “How’s our cash flow trending month over month?” the AI doesn’t guess or generalize. It sends a standard call to each relevant MCP server, securely fetches real data, and responds with context-aware insight, while logging each interaction for traceability.
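A minimal in-process sketch of that fan-out might look like the following. The server objects stand in for real MCP servers, and every name and figure here (`FakeMCPServer`, `Assistant`, `get_cash_flow`, the numbers) is made up for illustration. A real client would speak MCP over a transport rather than call Python objects directly.

```python
from dataclasses import dataclass, field


@dataclass
class FakeMCPServer:
    """Stand-in for an MCP server: a name plus the tools it exposes."""
    name: str
    tools: dict  # tool name -> callable taking keyword arguments

    def call_tool(self, tool_name, arguments):
        return self.tools[tool_name](**arguments)


@dataclass
class Assistant:
    servers: list
    audit_log: list = field(default_factory=list)

    def call_everywhere(self, tool_name, arguments):
        """Call a tool on every server that offers it, logging each call."""
        results = {}
        for server in self.servers:
            if tool_name not in server.tools:
                continue  # this server does not offer the tool
            results[server.name] = server.call_tool(tool_name, arguments)
            self.audit_log.append((server.name, tool_name, dict(arguments)))
        return results


# Made-up figures (in thousands), keyed by month.
netsuite = FakeMCPServer(
    "netsuite", {"get_cash_flow": lambda month: {"2025-01": 120, "2025-02": 135}[month]}
)
finance_db = FakeMCPServer(
    "finance_db", {"get_cash_flow": lambda month: {"2025-01": 118, "2025-02": 133}[month]}
)
ops_dashboard = FakeMCPServer(
    "ops_dashboard", {"get_collections": lambda month: {"2025-01": 90, "2025-02": 97}[month]}
)

assistant = Assistant([netsuite, finance_db, ops_dashboard])
february = assistant.call_everywhere("get_cash_flow", {"month": "2025-02"})
print(february)
print(len(assistant.audit_log), "calls logged")
```

Only the two servers that expose `get_cash_flow` are called, and each call leaves an entry in the audit log. Adding a fourth data source means registering one more server, not rewriting the assistant.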

No one had to hardcode a new workflow. The same servers could power ten other assistants in finance, customer service, or executive reporting.

And crucially, you didn’t have to ship data off-platform or depend on a single AI vendor. The entire system runs within your environment, governed by your existing policies. If you want to swap models later or add new tools, you don’t have to start over. You just plug into what’s already there. That flexibility gives companies more freedom, which will ultimately benefit end users. It allows organizations to define their own architectures and mix on-premises infrastructure with cloud-based services, regardless of the AI model they choose.

The protocol is model-agnostic, open-source, auditable, and deployable inside your own infrastructure. That last part matters: you don’t have to push data outside your walls to make this work. MCP servers can live behind your firewall, governed by your policies, observed by your tools.

What’s the Business Case for MCP?

Okay, so you don’t have to write as many connectors. That’s nice enough, but what’s the bigger picture? For teams developing AI systems or embedding models into workflows, MCP has five key upsides:

1- Flexibility without vendor lock-in

AI vendors evolve quickly. You might start with Claude, then move to OpenAI, or fine-tune your own model next quarter. MCP gives you a consistent interface across all of them. Swap the model, keep the wiring. That modularity lets you adapt without re-architecting.

2- Secure by design, easier to govern

MCP servers can run entirely within your infrastructure, using your existing identity and access systems. Every AI request passes through a controlled gateway that logs the call and enforces policy. You stay in control of data, actions, and access, which also makes compliance easier.
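One way to picture that controlled gateway is as a thin policy check in front of every tool call. The sketch below is an assumption about how a team might implement it, not something MCP itself prescribes: the role names, the `POLICY` table, and the `authorize` and `gated_call` functions are all hypothetical.

```python
# Hypothetical allow-list: which roles may call which tools.
POLICY = {
    "finance_analyst": {"get_cash_flow", "get_open_invoices"},
    "support_agent": {"get_open_tickets", "update_customer_status"},
}


class PolicyError(PermissionError):
    """Raised when a role is not allowed to call a tool."""


def authorize(role, tool_name, policy=POLICY):
    """Raise PolicyError unless the role is explicitly allowed to use the tool."""
    allowed = policy.get(role, set())
    if tool_name not in allowed:
        raise PolicyError(f"role {role!r} may not call {tool_name!r}")


def gated_call(role, tool_name, arguments, handler):
    """Check policy first, then forward the call to the real handler."""
    authorize(role, tool_name)
    return handler(tool_name, arguments)


def echo_handler(tool_name, arguments):
    # Stand-in for forwarding the call to an MCP server.
    return {"tool": tool_name, "arguments": arguments}


print(gated_call("support_agent", "get_open_tickets", {"status": "open"}, echo_handler))
```

In a real deployment the role would come from your existing identity provider rather than a string argument, and unknown roles are denied by default.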

3- Faster, leaner integration

AWS reports that, in one example, using MCP with Amazon Bedrock cut the number of required connector implementations from nine to six. That means less glue work and more time for actual innovation.

4- Structured traceability for compliance

Every tool call made through MCP is logged in a structured, inspectable format. You know what the model did, with what data, and why. That kind of auditability is becoming essential in regulated industries and much harder to achieve with ad hoc scripts.
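To make “structured, inspectable” concrete, here is a sketch of one audit record per tool call, written as a JSON line. The field names are our own assumption rather than a format defined by the protocol, and `audit_record` is a hypothetical helper. The arguments are hashed instead of stored, which is one possible design choice for sensitive data.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(caller, server, tool_name, arguments, status, now=None):
    """Return one JSON line describing a tool call (hypothetical schema)."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    # Serialize arguments with sorted keys so equal arguments always hash the same.
    canonical_args = json.dumps(arguments, sort_keys=True).encode("utf-8")
    record = {
        "timestamp": timestamp,
        "caller": caller,
        "server": server,
        "tool": tool_name,
        "arguments_sha256": hashlib.sha256(canonical_args).hexdigest(),
        "status": status,
    }
    return json.dumps(record, sort_keys=True)


line = audit_record(
    "finance-assistant", "netsuite", "get_cash_flow", {"month": "2025-02"}, "ok"
)
print(line)
```

Because every record has the same fields, the log can be filtered by caller, server, or tool with ordinary tooling, which is much harder with free-form script output.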

5- Higher long-term ROI

The more MCP servers you deploy, the easier it becomes to launch new use cases. Instead of rebuilding integrations each time, you plug into what’s already there. That reuse compounds, reducing costs and increasing your speed to value. In fact, any service offered as a publicly consumable API is a strong candidate for MCP implementation. That makes it an attractive option for a wide range of use cases, especially when building agent-style systems that need to interact with different data sources.

Early adopters are already seeing these benefits in practice. Block (formerly Square) wired Claude into financial systems via MCP to automate ledger queries and analysis. Developer platforms like Replit and Sourcegraph are using it to let AI agents navigate code and documentation more reliably. Microsoft’s Copilot Studio now supports MCP out of the box, allowing low-code users to connect internal tools with minimal engineering effort.

If You’re Considering It, Here’s Where to Start

If you’re a CTO, VP of Engineering, Head of AI, or even a business leader tasked with scaling AI initiatives, this is where the protocol discussion becomes real. Let’s assume you’re interested. How do you test this in your own org?

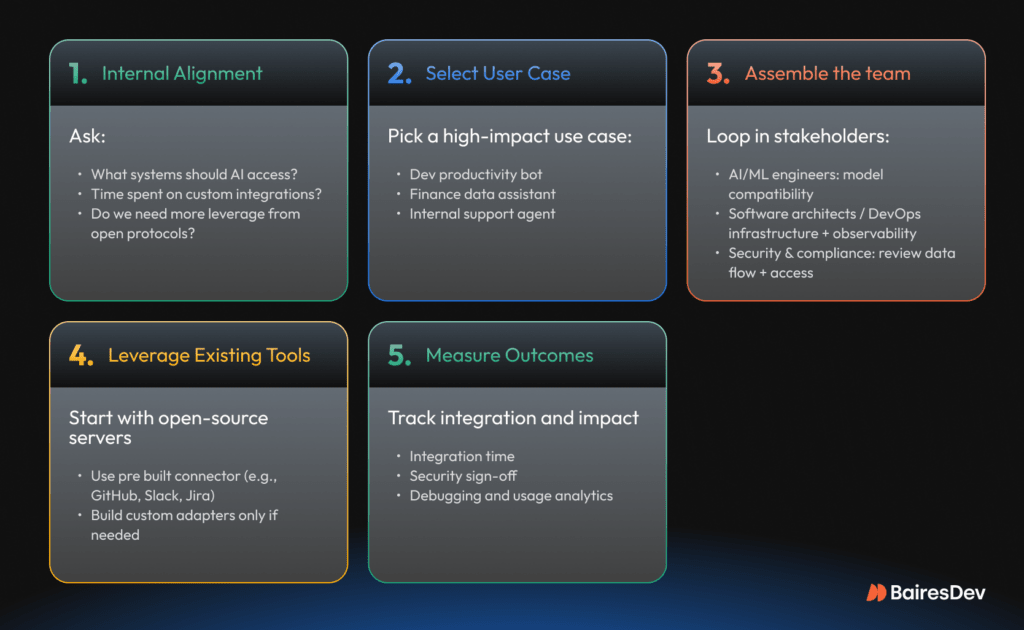

First: ask a few key questions internally.

- What tools or systems would make our AI models actually useful if they could access them?

- How much time are we spending on custom integration work today?

- Are we boxed in by vendor lock-in? Would an open protocol give us more leverage?

Next: pick a starter use case. It should be small but meaningful, something like a dev productivity bot, a finance data assistant, or an internal support agent. Keep it internal. Aim to prove out the architecture, not solve everything at once.

You’ll want to involve:

- First and foremost, AI/ML engineers to test model compatibility

- Then, software architects or DevOps to spin up MCP servers and handle observability

- And of course, security and compliance teams to review data flow, authentication, and logging

Open-source servers are already available for many systems, including GitHub, Slack, and Jira. Start with one of those if you can. Build a custom adapter only if you need to (and if you do, consider it a learning investment).

Lastly, measure what happens. What changed in terms of integration effort? How long did it take to connect systems? Was security able to sign off faster? Did the logs help you debug or analyze usage?

Those answers become your business case.

A Note on Talent

Now, an honest caveat: just because MCP reduces complexity doesn’t mean the implementation is trivial.

To do this right, you’ll need people who understand how to secure services, manage token limits, trace model behavior, and integrate with enterprise auth systems. That talent exists, but it’s not yet widespread. Remember, we’re talking about very recent tech, and knowledge is building as we speak.

This is where many organizations decide to bring in external help. Whether it’s for a short advisory engagement or a full managed service, the goal is the same: build the initial pieces right, share knowledge with your team, and avoid costly missteps. Partnering with an experienced AI development firm like BairesDev is a shortcut to understanding this tech and moving your pipeline forward.

Smart Integration, Stronger AI

Protocols rarely make headlines, but they often define how smoothly a company can scale. MCP is quietly solving a real problem: how to connect your models to your business without piling on brittle, expensive integrations.

Major players like Microsoft, AWS, and Anthropic are already behind it. New MCP-compatible servers are being released every month. At this pace, it won’t be long before “MCP-ready” becomes a standard procurement checkbox.

You don’t need to chase every AI trend, but aligning early on integration standards can save serious time and cost down the road. MCP is still evolving, but it’s already solving real challenges around model-to-tool connectivity. If you’re exploring it, our team can help you design MCP-compatible tool servers, streamline integration paths, and ensure your AI stack scales with less friction.