Scaling Node.js is easy, but passing audits at scale isn’t. The teams that win enterprise deals pair throughput with evidence: SBOMs, signed artifacts, immutable logs.

This article outlines how to scale traffic and trust together.

What breaks at scale isn’t throughput—it’s compliance.

For SaaS companies, Node.js scalability feels like an easy win.

Even a small application, sometimes just a few lines in a single file, shows how an event-driven architecture handles concurrent requests efficiently. Watch the event loop juggle thousands of in-flight requests on a single server, and Node.js seems limitless in its potential.

But when you start scaling Node.js applications across multiple servers and instances, hidden risks surface.

Dependency sprawl, license drift, and secrets in pipelines don’t cause outages in development — they show up during audits or enterprise sales. Without evidence like signed artifacts or SBOMs, procurement cycles stall.

Scalability without governance accelerates risk, not growth.

The core question isn’t whether Node.js can handle more servers or more traffic. It’s whether you can scale trust at the same pace you scale throughput.

Node.js Scalability Challenges in SaaS Applications

You choose Node.js for speed. Its asynchronous, non-blocking I/O model lets a single process handle thousands of concurrent API requests with modest CPU and memory.

With horizontal scaling across multiple nodes and vertical scaling of a single server’s processing power, Node.js applications can serve millions of requests. The cloud lets you spin up more resources almost instantly, but scaling isn’t just adding machines.

When you scale Node.js applications, you need to prove governance is growing at the same pace.

Adding CPU cores and memory to a single-node server improves throughput, but a failed master process can bring everything down. Running processes across multiple servers gives you resilience — but only if you implement load balancing algorithms, monitor event loop utilization, and leave behind evidence of fault tolerance.

Vertical and Horizontal Scaling in Node.js

If you start with a single server, you’ll quickly discover its limits.

Vertical scaling buys time by adding CPU/RAM to one host, but it also enlarges the blast radius if the primary process fails. Horizontal scaling means running multiple instances across hosts or containers behind a load balancer, which improves resilience when managed by an orchestrator.

Don’t conflate multi-process with multi-host. Node’s cluster module (or a process manager) forks worker processes on a single machine to use all cores. That’s useful for lightweight, single-host setups or to maximize CPU inside a container. True horizontal scale is handled by your platform (containers plus Kubernetes, ECS, or Nomad), which gives you rolling deploys, health checks, autoscaling, and failure isolation across machines.
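To make the single-host half of that distinction concrete, here is a minimal sketch of the cluster module forking one worker per core and replacing workers that crash. The helper names (`workerCount`, `startPrimary`, `startWorker`, `main`) and port 3000 are assumptions for illustration, not part of any framework.

```javascript
const cluster = require('node:cluster');
const http = require('node:http');
const os = require('node:os');

// Hypothetical helper: one worker per available core, never fewer than one.
function workerCount(
  cores = typeof os.availableParallelism === 'function'
    ? os.availableParallelism()
    : os.cpus().length
) {
  return Math.max(1, cores);
}

function startPrimary(count = workerCount()) {
  for (let i = 0; i < count; i += 1) cluster.fork();

  cluster.on('exit', (worker, code, signal) => {
    // A structured log line doubles as evidence that restarts are supervised.
    console.error(JSON.stringify({ event: 'worker_exit', pid: worker.process.pid, code, signal }));
    // Only replace workers that died unexpectedly, not ones shut down on purpose.
    if (!worker.exitedAfterDisconnect) cluster.fork();
  });
}

function startWorker(port = 3000) {
  // Workers share the listening port; the primary hands connections to them.
  http
    .createServer((req, res) => {
      res.writeHead(200, { 'content-type': 'application/json' });
      res.end(JSON.stringify({ servedBy: process.pid }));
    })
    .listen(port);
}

// Call main() from your entry file; it is not invoked here.
function main() {
  if (cluster.isPrimary) startPrimary();
  else startWorker();
}
```

This only spreads load across cores on one machine. Health checks, rolling deploys, and cross-host failover still belong to the orchestrator.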

The scaling process is technical, but the governance requirement is strategic. You need monitoring to catch failed requests early, prove optimal performance, and show auditors you have fault tolerance built in.

Where Node.js Works Best (and Where It Doesn’t)

Node.js excels when requests are light on compute but heavy on concurrency.

It’s well-suited for gateways that validate requests, APIs that orchestrate multiple requests, or real-time messaging systems managing streams of client requests.

| Workload | Node.js Fit | Why It Works (or Doesn’t) | Guardrails |
| --- | --- | --- | --- |
| API Gateway/BFF | Strong | High concurrency, I/O bound | Validate user requests, structured logs |
| Realtime (WebSockets) | Strong | Long-lived connections, low CPU usage | Back-pressure, autoscaling |
| ETL/Data shaping | Conditional | Light transforms, orchestration | Worker process pools, performance monitoring |
| Media/ML/crypto | Weak | High processing power blocks the event loop | Independent services, thin APIs |

Scaling Node.js well means knowing where not to use it. When you assign CPU-intensive tasks like encryption or machine learning to Node.js, you weaken both performance and compliance posture. Use independent services for those workloads, and let Node.js focus on what it does best: orchestrating requests.

Load Balancing, Caching, and Monitoring

As you scale Node.js applications across multiple servers, you’ll need resilient load balancers and load balancing algorithms you have tested under realistic traffic. Round-robin distribution works when requests are uniform, but least-connections or latency-aware algorithms such as EWMA (exponentially weighted moving average) balance better when traffic is uneven.
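The difference between these algorithms is easier to see in code. The sketch below is illustrative only: in production the balancer is usually NGINX, Envoy, or a cloud load balancer, and the backend shape (`name`, `active`) and the smoothing factor `alpha` are assumptions.

```javascript
// Round-robin: rotate through backends regardless of how busy each one is.
function roundRobin(backends) {
  let next = 0;
  return () => backends[next++ % backends.length];
}

// Least-connections: pick the backend with the fewest in-flight requests.
function leastConnections(backends) {
  return () => backends.reduce((best, b) => (b.active < best.active ? b : best));
}

// EWMA: track a moving average of observed latency and pick the lowest.
// alpha controls how quickly new samples outweigh history (assumed 0.3 here).
function ewmaBalancer(backends, alpha = 0.3) {
  const avg = new Map(backends.map((b) => [b.name, 0]));
  return {
    pick: () =>
      backends.reduce((best, b) => (avg.get(b.name) < avg.get(best.name) ? b : best)),
    record: (name, latencyMs) =>
      avg.set(name, alpha * latencyMs + (1 - alpha) * avg.get(name)),
  };
}

// Usage with a hypothetical two-node pool where node "a" is already busy.
const pool = [
  { name: 'a', active: 5 },
  { name: 'b', active: 1 },
];
const nextRoundRobin = roundRobin(pool);
const nextLeastBusy = leastConnections(pool);
console.log(nextRoundRobin().name, nextLeastBusy().name);
```

Round-robin keeps sending every other request to the busy node, while least-connections and EWMA steer away from it.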

Add tenancy guardrails as you scale out. Use per-tenant API keys and quotas, isolate tenant data in caches/queues (namespaces or accounts), and enforce rate limits at the edge. Include data-segregation tests in CI and staging to prove isolation before release. Monitor per-tenant error budgets and p99 latency so a noisy neighbor can’t sink the fleet.
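A per-tenant quota can be sketched as a token bucket keyed by tenant ID. This is a minimal in-memory illustration: `createTenantLimiter` and its options are hypothetical names, and a real fleet would keep the counters at the edge or in a shared store so every instance enforces the same limit.

```javascript
// Token bucket per tenant: each tenant gets `capacity` burst tokens that
// refill at `refillPerSec`. The clock is injectable so the limiter is testable.
function createTenantLimiter({ capacity, refillPerSec, now = () => Date.now() }) {
  const buckets = new Map(); // tenantId -> { tokens, last }

  return function allow(tenantId) {
    const t = now();
    const bucket = buckets.get(tenantId) ?? { tokens: capacity, last: t };

    // Refill in proportion to elapsed time, capped at capacity.
    const elapsedSec = (t - bucket.last) / 1000;
    bucket.tokens = Math.min(capacity, bucket.tokens + elapsedSec * refillPerSec);
    bucket.last = t;

    const allowed = bucket.tokens >= 1;
    if (allowed) bucket.tokens -= 1;
    buckets.set(tenantId, bucket);
    return allowed;
  };
}

// Usage: tenant "acme" exhausting its burst does not affect tenant "globex".
const allow = createTenantLimiter({ capacity: 2, refillPerSec: 1 });
console.log(allow('acme'), allow('acme'), allow('acme'), allow('globex'));
```

Because each tenant has its own bucket, one noisy tenant runs out of tokens without consuming anyone else's budget.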

Load balancing only works if you also control demand. That’s where caching strategies come in. A CDN cache accelerates static assets, in-memory cache speeds up frequently accessed data, and application-level caching reduces database queries, database connections, and load on the database server. Each layer reduces subsequent requests and improves performance.
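The in-memory layer can be sketched as a small read-through cache with a time-to-live. The names here (`createTtlCache`, `getOrLoad`, `loadPlan`) are hypothetical, and the loader stands in for whatever database query you are protecting.

```javascript
// Read-through cache: serve fresh entries from memory, otherwise call the
// loader (for example a database query) and remember the result for ttlMs.
function createTtlCache({ ttlMs, now = () => Date.now() }) {
  const entries = new Map(); // key -> { value, expiresAt }
  const stats = { hits: 0, misses: 0 };

  async function getOrLoad(key, loader) {
    const entry = entries.get(key);
    if (entry && entry.expiresAt > now()) {
      stats.hits += 1;
      return entry.value;
    }
    stats.misses += 1;
    const value = await loader(key);
    entries.set(key, { value, expiresAt: now() + ttlMs });
    return value;
  }

  return { getOrLoad, stats };
}

// Usage with a stand-in loader; in practice this would hit the database.
const planCache = createTtlCache({ ttlMs: 30_000 });
const loadPlan = async (tenantId) => ({ tenantId, plan: 'enterprise' });
planCache
  .getOrLoad('acme', loadPlan)
  .then(() => planCache.getOrLoad('acme', loadPlan))
  .then(() => console.log(planCache.stats));
```

The hit and miss counters matter as much as the cache itself, since they are the evidence that the layer is reducing database load. This sketch does not deduplicate concurrent misses or bound memory, which a production cache would need.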

You also need continuous performance monitoring. Track event loop utilization, worker processes, and resource utilization across CPU cores. Monitor network requests and memory usage under load. Without this visibility, scaling Node.js just multiplies the chance of failed requests.
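Node.js exposes these signals through the built-in `perf_hooks` module. The sketch below samples event loop utilization, event loop delay, and memory into one structured record. `createLoopMonitor` and the field names are assumptions, and where you ship the snapshot (logs, metrics backend) is up to your stack.

```javascript
const { performance, monitorEventLoopDelay } = require('node:perf_hooks');

function createLoopMonitor() {
  const delay = monitorEventLoopDelay({ resolution: 20 });
  delay.enable();
  let last = performance.eventLoopUtilization();

  return {
    // Returns metrics for the interval since the previous sample() call.
    sample() {
      const current = performance.eventLoopUtilization();
      const { utilization } = performance.eventLoopUtilization(current, last);
      last = current;

      const snapshot = {
        ts: new Date().toISOString(),
        // Guard against NaN when no loop time elapsed between samples.
        eventLoopUtilization: Number.isFinite(utilization) ? utilization : 0,
        // The histogram reports nanoseconds; convert to milliseconds.
        p99DelayMs: delay.percentile(99) / 1e6,
        rssMb: Math.round(process.memoryUsage().rss / (1024 * 1024)),
      };
      delay.reset();
      return snapshot;
    },
    stop: () => delay.disable(),
  };
}

// Usage (hypothetical interval): emit one JSON line every 10 seconds.
// const monitor = createLoopMonitor();
// setInterval(() => console.log(JSON.stringify(monitor.sample())), 10_000).unref();
```

Utilization close to 1 means the loop is saturated and latency will climb, which is the point to scale out or move CPU-heavy work elsewhere.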

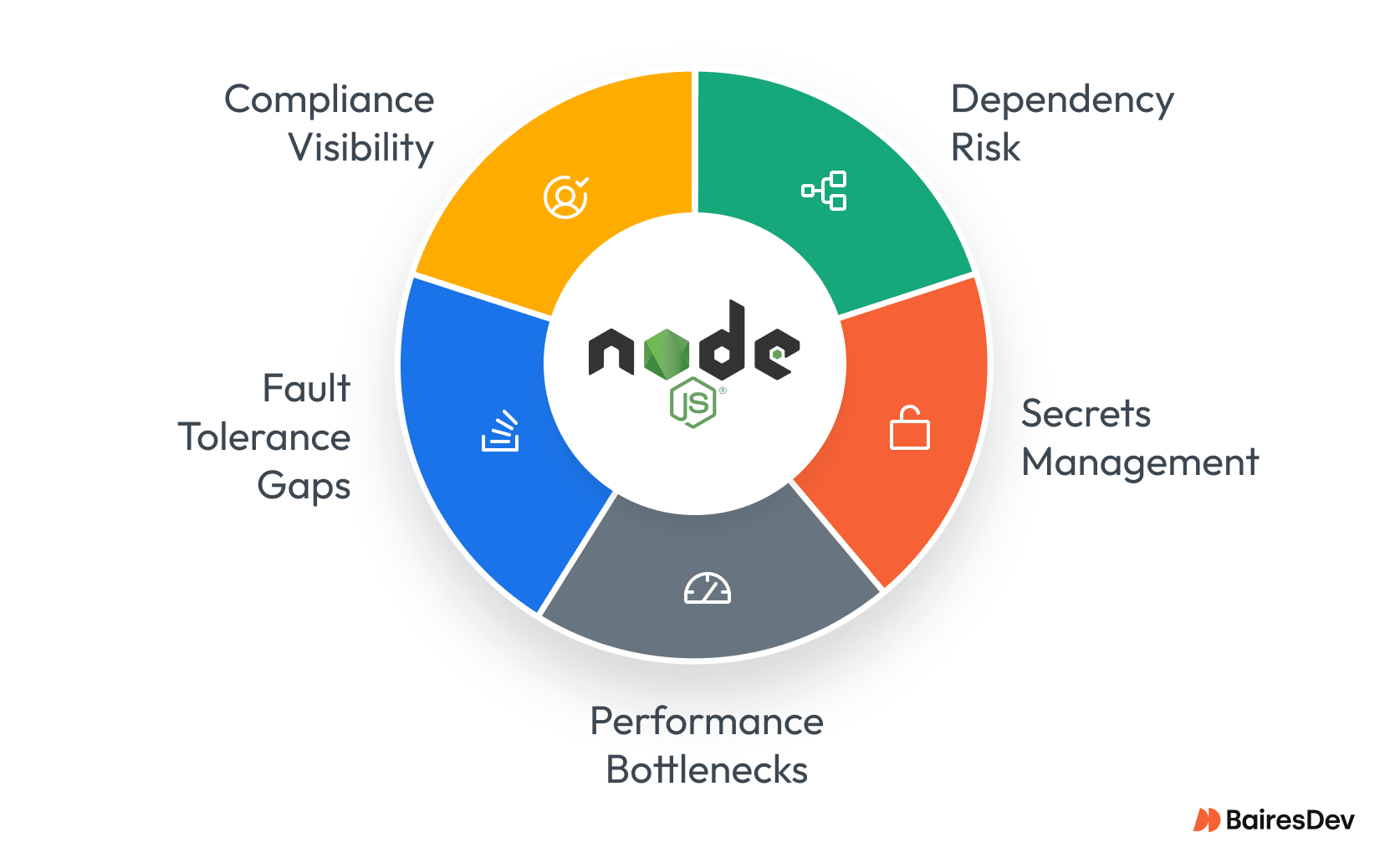

The Hidden Compliance Landmines

The minefield isn’t technical limits — it’s governance gaps. You risk security and compliance when you let dependencies float without locks, overlook typosquatting in npm packages, or fail to track license drift.
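A CI gate for the first and third of these risks can be quite small. The sketch below assumes the `packages` map found in npm lockfile versions 2 and 3. The function names and the license allowlist are hypothetical, and in practice you would pair this with `npm ci` and a dedicated scanner instead of relying on it alone.

```javascript
// Report declared dependencies that the lockfile does not pin with an
// integrity hash. Assumes npm lockfile v2/v3 ("packages" keyed by path).
// Workspace or linked packages legitimately lack a hash and need an exemption.
function auditLockfile(pkg, lock) {
  const declared = Object.keys({ ...pkg.dependencies, ...pkg.devDependencies });
  const locked = lock.packages ?? {};

  return declared
    .map((name) => ({ name, entry: locked[`node_modules/${name}`] }))
    .filter(({ entry }) => !entry || !entry.integrity)
    .map(({ name, entry }) => ({
      name,
      reason: entry ? 'missing integrity hash' : 'not in lockfile',
    }));
}

// Flag packages whose license is outside an allowlist (example values only).
function findLicenseViolations(packages, allowed = ['MIT', 'ISC', 'Apache-2.0', 'BSD-3-Clause']) {
  return packages.filter((p) => !allowed.includes(p.license));
}

// Usage with inline sample data; a pipeline would read the real JSON files.
const findings = auditLockfile(
  { dependencies: { express: '^4.18.0', 'left-pad': '1.3.0' } },
  { packages: { 'node_modules/express': { version: '4.18.2', integrity: 'sha512-placeholder' } } }
);
console.log(findings);
```

Failing the build on a non-empty result, and keeping the CI log, turns the lockfile policy into an artifact an auditor can read.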

Missing provenance is just as dangerous. Without signed builds or attestations, you have no way to prove integrity. Secrets in CI/CD pipelines create breach paths. Runtime saturation leads to SLA violations. And unaudited production access will fail both SOC 2 and HIPAA reviews.

These landmines don’t show up in development. They explode during audits or enterprise sales. Address them early, and you turn compliance from a blocker into an accelerator.

Best Practices for Scaling Node.js Applications

Scaling responsibly requires practices that combine performance and governance. Supervise worker processes with a process manager, validate requests before granting access, and monitor performance continuously. Fault tolerance comes from combining vertical and horizontal scaling with resilient load balancers.

Use caching to control demand: accelerate static assets with a CDN, keep frequently accessed data in memory, and cut repeat requests with application-level caching. Together, these practices deliver high-performance applications that scale while passing audits.

Compliance Mapping for Leaders

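SOC 2 and HIPAA map directly onto Node.js practices. The SOC 2 Trust Services Criteria cover logical access controls, monitoring and audit logging, and availability. The HIPAA Security Rule’s technical safeguards (45 CFR §164.312) call for access control, audit controls, and integrity protections, which is where tamper-resistant logs come in.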

The point is simple: the same practices you use to prevent failed requests or outages are the ones that satisfy auditors and speed enterprise procurement.

From Guardrails to Evidence

If a control leaves no artifact, it isn’t compliance—it’s hope.

Compliance is not about writing policies. It is about producing durable, reviewable evidence. For Node.js, that means every guardrail must leave an artifact that can be audited.

| Area | Guardrail | Evidence |
| --- | --- | --- |
| Dependencies | Lockfiles, license/vuln gates | CI logs, SBOMs, scan reports |
| Provenance | Signed artifacts, attestations | Build metadata, signature checks |
| Change control | Protected branches, approvals | PR history, pipeline runs |
| Secrets | Short-lived tokens, vaults | Configs, rotation logs, scans |
| Runtime | Rate limits, autoscaling | Service configs, SLO dashboards |
| Access | SSO/MFA, JIT | IAM diffs, review logs |
| Auditability | Immutable logs | Retention policy, queries |

This table is not just an engineering checklist. It’s a board-level view of how to translate hidden landmines into visible guardrails and, critically, into evidence that clears procurement.
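For the auditability row, one way to make logs tamper-evident is to chain each entry to the hash of the one before it. The sketch below is an illustration under assumptions: the record fields and the `genesis` seed are invented, and real immutability also needs write-once storage and retention controls outside the application.

```javascript
const { createHash } = require('node:crypto');

// Each entry's hash covers the previous hash plus the serialized record, so
// editing or deleting any earlier entry breaks every hash after it.
function hashEntry(prevHash, record) {
  return createHash('sha256').update(prevHash).update(JSON.stringify(record)).digest('hex');
}

function appendEntry(log, record) {
  const prevHash = log.length ? log[log.length - 1].hash : 'genesis';
  return [...log, { record, prevHash, hash: hashEntry(prevHash, record) }];
}

function verifyLog(log) {
  return log.every((entry, i) => {
    const expectedPrev = i === 0 ? 'genesis' : log[i - 1].hash;
    return entry.prevHash === expectedPrev && entry.hash === hashEntry(expectedPrev, entry.record);
  });
}

// Usage with hypothetical access events.
let auditLog = [];
auditLog = appendEntry(auditLog, { actor: 'alice', action: 'prod-shell', at: '2024-01-01T00:00:00Z' });
auditLog = appendEntry(auditLog, { actor: 'bob', action: 'deploy', at: '2024-01-01T01:00:00Z' });
console.log(verifyLog(auditLog));
```

Running `verifyLog` on a schedule and storing the result gives you a repeatable query to show during an audit.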

For enterprise buyers, a small set of artifacts clears procurement fastest: an SBOM (SPDX or CycloneDX), build attestations (e.g., SLSA/in-toto), artifact signatures (e.g., cosign), access review logs, and SLO reports. Make them downloadable per release and reference them in your security packet.
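The principle behind artifact signatures can be shown with Node's built-in `crypto` module. This is not how cosign works internally. It is a sketch of the sign-then-verify contract, using an Ed25519 key pair generated on the spot and a placeholder buffer in place of a real release tarball.

```javascript
const crypto = require('node:crypto');

function digestArtifact(bytes) {
  return crypto.createHash('sha256').update(bytes).digest('hex');
}

function signArtifact(bytes, privateKey) {
  // Ed25519 has no separate digest step, hence the null algorithm argument.
  return crypto.sign(null, bytes, privateKey).toString('base64');
}

function verifyArtifact(bytes, signatureB64, publicKey) {
  return crypto.verify(null, bytes, publicKey, Buffer.from(signatureB64, 'base64'));
}

// Usage: in a real pipeline the private key lives in a KMS or CI secret store
// and the bytes come from the built package, not an inline placeholder.
const { publicKey, privateKey } = crypto.generateKeyPairSync('ed25519');
const artifact = Buffer.from('placeholder for release tarball bytes');
const signature = signArtifact(artifact, privateKey);

const releaseEvidence = {
  sha256: digestArtifact(artifact),
  signature,
  verified: verifyArtifact(artifact, signature, publicKey),
};
console.log(releaseEvidence);
```

Publishing the digest and signature next to each release lets a buyer verify the artifact with only your public key.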

That’s how you turn governance into a sales accelerator.

The SaaS Scorecard

You should measure Node.js scalability with velocity, reliability, and risk posture.

Velocity comes from faster deployments and shorter lead times. Reliability is proven with low error rates, stable event loop lag, and few failed requests. Risk posture is shown with SBOM coverage near 100% and audit exceptions at zero.

| Category | Metrics | Outcome |
| --- | --- | --- |
| Velocity | Lead time ↓, deployments ↑ | Faster delivery |
| Reliability | Latency within SLOs, event loop lag low | Stable services |
| Risk posture | SBOM coverage ~100%, audit exceptions 0 | Trust and compliance evidence |
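The reliability and risk rows reduce to a few ratios you can compute from data you already collect. The sketch below uses the nearest-rank method for p99. The input field names and the SLO threshold are assumptions, not a standard schema.

```javascript
// Nearest-rank percentile: the smallest value with at least p% of samples
// at or below it.
function percentile(values, p) {
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.max(0, rank - 1)];
}

function scorecard({ latenciesMs, requests, failed, services, servicesWithSbom, auditExceptions, sloP99Ms }) {
  const p99Ms = percentile(latenciesMs, 99);
  return {
    p99Ms,
    withinSlo: p99Ms <= sloP99Ms,
    errorRate: failed / requests,
    sbomCoverage: servicesWithSbom / services,
    auditExceptions,
  };
}

// Usage with made-up numbers for one reporting period.
console.log(
  scorecard({
    latenciesMs: [12, 15, 18, 22, 40, 95, 110, 250],
    requests: 10_000,
    failed: 12,
    services: 8,
    servicesWithSbom: 7,
    auditExceptions: 0,
    sloP99Ms: 300,
  })
);
```

Tracking these values per release makes regressions in either reliability or SBOM coverage visible before an auditor or buyer finds them.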

Business Impact and ROI

Embedding governance pays off. You speed procurement when you have SBOMs ready. You reduce costs by running multiple instances across multiple servers instead of oversizing a single server. You reduce risk when you monitor resource utilization and network requests continuously. IBM’s 2024 Cost of a Data Breach Report puts the global average cost of a breach at $4.88M. Prevention costs less than remediation.

What Good Looks Like

A mature Node.js environment doesn’t look like a checklist. It looks like disciplined engineering. You peer-review PRs, sign builds, attach SBOMs, test autoscaling against incoming traffic, and capture immutable logs. Compliance stops being an afterthought and becomes a natural output of delivery.

Implementation Realities

Scaling responsibly takes time. In the first quarter, you reach readiness: IAM enforcement, dependency scanning, SBOM generation. In the second, you build repeatability: tuning load balancers, signing artifacts, monitoring performance. Only then can you optimize with policy-as-code and microservices, and replicate proven scaling patterns across services.

You must assign ownership. Platform, security, app, and RevOps teams all need responsibility. Without it, costs rise and timelines slip.

BairesDev’s Perspective

Scaling Node.js is about more than adding servers — it’s about proving resilience and compliance as you grow. You can’t win enterprise trust if evidence and governance lag behind performance.

BairesDev helps SaaS leaders close that gap. Our engineers bring Node.js performance expertise together with compliance disciplines like SOC 2, HIPAA, and ISO 27001. We design delivery pipelines where monitoring, SBOMs, and signed builds are built in — so you scale faster without creating audit risk.

With BairesDev, you don’t just deliver scalable Node.js applications. You deliver scalable trust.

Strategic Takeaways

- Scale throughput and trust together. Treat compliance as a product requirement, not an afterthought.

- Harden the supply chain. Lock dependencies, gate licenses, and treat npm packages and process managers as part of your attack surface.

- Prove integrity by default. Ship SBOMs, signed artifacts, and immutable logs with every release.

- Engineer for reliability you can defend. Caching, monitoring, and load balancing keep p99 within SLOs and clear audits faster.

Frequently Asked Questions

How do multiple servers and multiple instances improve scalability?

When you run multiple instances across multiple servers, you build resilience and fault tolerance. A process manager supervises worker processes on each host, and a load balancer distributes incoming traffic across hosts. This design reduces failure rates, and its health checks and logs give you evidence of availability for audits.

What role do worker processes and cluster modules play in scaling Node.js applications?

The built-in cluster module lets a primary process (called the master in older Node.js releases) fork worker processes with cluster.fork(). Each worker runs in its own memory space with its own event loop, so one application can use every CPU core on a host while sharing a listening port. Workers do not share in-process state, so monitor memory usage and restarts per worker to back up any fault-tolerance claim.

What’s the best caching strategy for scalable Node.js?

The best strategy combines multiple caching techniques. Use a CDN for static assets, keep frequently accessed data in memory, and reduce requests with application-level caching. This design improves performance, lowers load on the database server, and demonstrates best practices for high-performance web applications.

How do I validate user requests and prevent failed requests?

You should validate user requests at the gateway before they reach application logic. By monitoring event loop utilization and worker processes, you can catch failures early. Best practices include testing the scaling process with simulated incoming requests and ensuring database queries and database connections are monitored.
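Validation at the gateway can be as simple as a function that rejects anything outside an explicit contract. The payload shape below (`email`, `seats`) and the limits are hypothetical. Many teams use a schema library for this, but the principle is the same.

```javascript
// Validate a hypothetical "create subscription" payload before it reaches
// application logic. Unknown fields are rejected instead of silently ignored.
function validateSubscription(body) {
  if (typeof body !== 'object' || body === null || Array.isArray(body)) {
    return { ok: false, errors: ['body must be a JSON object'] };
  }

  const errors = [];
  if (typeof body.email !== 'string' || !/^[^@\s]+@[^@\s]+\.[^@\s]+$/.test(body.email)) {
    errors.push('email is invalid');
  }
  if (!Number.isInteger(body.seats) || body.seats < 1 || body.seats > 1000) {
    errors.push('seats must be an integer from 1 to 1000');
  }
  const unknown = Object.keys(body).filter((key) => !['email', 'seats'].includes(key));
  if (unknown.length > 0) {
    errors.push(`unknown fields: ${unknown.join(', ')}`);
  }

  return { ok: errors.length === 0, errors };
}

// Usage: respond with HTTP 400 and the error list when ok is false.
console.log(validateSubscription({ email: 'ops@example.com', seats: 25 }));
console.log(validateSubscription({ email: 'not-an-email', seats: 0, admin: true }));
```

Logging each rejection with its tenant and reason gives you both an early warning signal and a record that input controls are enforced.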

How do cloud services change Node.js scalability?

When you use cloud services, you can add servers or other resources almost instantly. That elasticity is powerful, but every new instance must inherit the same guardrails so governance keeps pace. Auditors expect to see logs, signed builds, and monitoring artifacts whether you run on-prem or in the cloud.

How do I explain ROI to my board?

Position governance as a way to reduce risk and accelerate revenue. By embedding best practices in load balancing, caching, and monitoring, you improve performance, shorten procurement cycles, and reduce breach exposure. IBM’s breach-cost figures support the argument that prevention costs less than firefighting.