Enterprise software development carries a weight that small organisations rarely feel. A missed release can stall revenue, deepen compliance exposure, and erode customer trust. Scale is not only about traffic; it includes tangled integrations, regional data laws, and products that must evolve without breaking years of accumulated behaviour.

Many firms still lean on development processes designed for startups, processes that ignore the constraints of a multinational environment: parallel work streams, geographically dispersed contributors, and boards that demand both speed and auditability.

When that mismatch surfaces, engineers rush, quality slips, and technical debt multiplies faster than it can be paid down.

Adapting to New Requirements and Business Goals

A mature software development process does more than outline phases. It provides a cadence that absorbs change rather than resisting it.

Leaders examine user feedback loops, release metrics, and incident logs, then shape the next cycle accordingly. Documentation stays light yet clear. Governance exists, yet it never hides responsibility behind paperwork. Over time, the development process itself becomes a competitive asset, guiding teams through mergers, new regulations, and sudden market pivots without losing momentum.

Software engineering teams that treat metrics as a guide, not a scoreboard, reinforce that ethos. Delivery lead time, mean time to recovery, and defect-escape rate tell a nuanced story when read together, revealing whether development teams improve flow without compromising resilience. The data prompt conversation, which in turn shapes the next iteration.
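To make "read together" concrete, here is a minimal sketch of how the three metrics might be computed from deployment and incident records. The `Change` and `Incident` record shapes and all function names are assumptions made for illustration; real data would come from whatever delivery and incident tooling the organisation already runs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Change:
    """Hypothetical record of one change moving from commit to production."""
    committed_at: datetime
    deployed_at: datetime


@dataclass
class Incident:
    """Hypothetical record of one production incident."""
    started_at: datetime
    resolved_at: datetime


def median_lead_time(changes: list[Change]) -> timedelta:
    """Median time from commit to production deployment."""
    seconds = [(c.deployed_at - c.committed_at).total_seconds() for c in changes]
    return timedelta(seconds=median(seconds))


def mean_time_to_recovery(incidents: list[Incident]) -> timedelta:
    """Average time from incident start to resolution."""
    total = sum((i.resolved_at - i.started_at for i in incidents), timedelta())
    return total / len(incidents)


def defect_escape_rate(found_before_release: int, found_in_production: int) -> float:
    """Share of all known defects that were first found in production."""
    total = found_before_release + found_in_production
    return found_in_production / total if total else 0.0
```

A falling lead time alongside a rising escape rate or recovery time would suggest that flow is improving at the expense of resilience, which is the kind of pattern the three numbers only reveal side by side.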

Over months, this discipline compounds, and the organisation moves from reactive firefighting to deliberate, predictable change management.

Building Teams That Deliver

Even the best framework fails when the wrong people run it. In complex environments, success begins with deliberate team design. Senior engineers who understand software architecture and domain rules set technical direction. Product owners translate strategy into backlog items that matter. Test specialists automate quality gates rather than policing them after the fact. Together they give a project both muscle and shape.

Culture Fit: More Than a Corporate Buzzword

Effective onboarding is the first stress test. Mature partners invest in knowledge-transfer sessions, architecture deep dives, and security workshops before writing the first line of code. Those rituals build a shared context that prevents expensive misunderstandings months later. When new engineers join mid-stream, clear playbooks and mentoring keep velocity steady instead of resetting the learning curve.

Integration with the client’s culture is equally critical.

Teams that share working hours with stakeholders remove latency from decisions. Dialogue replaces overnight email threads, and misunderstandings are caught in minutes. Cultural proximity also shapes code. When engineers grasp business nuance, they choose patterns that serve the entire project, not a single sprint.

Leveraging Nearshore Talent and Specialization

Nearshore delivery strengthens these advantages. Engineers operate in familiar legal and security frameworks, yet remain cost-effective compared with local hiring at scale. The talent pool is deep, and turnover stays low because professionals see clear growth paths, not short contracts. That stability protects institutional knowledge, a key factor behind predictable velocity.

Specialisation rounds out the equation. Large enterprises juggle analytics pipelines, legacy mainframes, and cloud-native microservices at the same time. Partners who can field experts in each layer shorten discovery, spot risks early, and keep programmes aligned with roadmap commitments.

Productivity metrics matter, yet they tell only part of the story. High-performing teams track engagement, code-review quality, and incident post-mortem participation, because burnout erodes quality long before throughput drops. By valuing sustainable pace and continuous learning, enterprises protect their most scarce resource: senior engineering judgment.

Choosing and Adapting Development Methodologies

Modern enterprises rarely fit cleanly into a single software development methodology.

Beyond the Waterfall vs. Agile Model Debate

A team that maintains a stable billing engine while building a new mobile layer cannot survive on textbook Scrum alone, yet a rigid Waterfall model would smother discovery work. Seasoned leaders start elsewhere: they identify the constraint that hurts the business most, then tune process until that pain eases.

A high-variance stream such as prototype research thrives on short feedback loops, slim documentation, and informal show-and-tell sessions where architects and product managers review code before opinions harden. Work tied to a regulatory deadline benefits from gated checkpoints, traceable requirements, and clear hand-offs to compliance reviewers.

Both streams can succeed inside one organisation if guardrails are explicit and interfaces remain simple.

Embedding Continuous Improvement

Adaptation rests on evidence. Delivery lead time, change-failure rate, and hours lost to unplanned rework speak louder than any manifesto. When a metric refuses to move, the team experiments: smaller batches, an early security scan, paired work on brittle modules.

The adjustments may improve the flow of complex projects, or they may fail. Either way, the data folds into the next planning cycle and the process shifts closer to what the organisation needs.

Elasticity matters when corporate strategy pivots mid-year. A merger, a surprise regulation, or a budget freeze can shatter project plans overnight. Teams that already treat software projects as clay adapt quickly. Progress slows for a moment, then settles into a new groove, because the habit of adaptation was rehearsed long before the shock arrived.

Engineering for Quality at Scale

CI / CD in Regulated and Distributed Environments

Continuous integration and delivery look simple on paper: commit, build, ship. At scale, however, the picture grows more complicated, not because the ideas fail but because legacy dependencies fight back.

Databases refuse to start in containers, network zones isolate staging from the production environment, and audit snapshots demand signatures before any byte moves. Quality slips through those seams if the pipeline ignores them.

An effective pipeline treats every constraint as a user in its own right. The build server calls service mocks when the mainframe cannot appear in a test cluster; integration checks record and replay traffic so coverage survives zone boundaries; deployment plans include the business step that grants release approval.
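The record-and-replay idea can be sketched in a few lines. This is an illustrative toy, not a production tool: the `RecordReplay` class, its methods, and the dictionary-shaped requests are all assumptions. In recording mode it calls the real backend and stores each response; in replay mode it serves the stored responses, so a test cluster that cannot reach the backend still exercises the same traffic.

```python
import json
from typing import Callable, Optional


class RecordReplay:
    """Records real responses when a backend is available, replays them otherwise."""

    def __init__(self, backend: Optional[Callable[[dict], dict]] = None):
        self.backend = backend  # None means replay-only mode
        self.tape: dict = {}    # serialised request -> recorded response

    @staticmethod
    def _key(request: dict) -> str:
        # Sorting keys makes logically equal requests map to the same entry.
        return json.dumps(request, sort_keys=True)

    def call(self, request: dict) -> dict:
        key = self._key(request)
        if self.backend is not None:
            response = self.backend(request)
            self.tape[key] = response
            return response
        if key not in self.tape:
            raise KeyError(f"no recorded response for {key}")
        return self.tape[key]

    def dump(self) -> str:
        """Serialise the tape so it can be carried across a zone boundary."""
        return json.dumps(self.tape, sort_keys=True)

    @classmethod
    def load(cls, serialized: str) -> "RecordReplay":
        """Build a replay-only instance from a serialised tape."""
        replayer = cls(backend=None)
        replayer.tape = json.loads(serialized)
        return replayer
```

A recording run would wrap the real service client where it is reachable, export the tape with `dump()`, and hand it to the isolated environment, where `RecordReplay.load(...)` answers the same requests without any network access. Requests that were never recorded fail loudly rather than returning invented data.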

Tooling alone is insufficient. Software developers must map the invisible tasks, automate what they can, and document the parts of the process that must remain human.

Testing as a First-Class Discipline

The testing phase follows the same philosophy. Unit testing catches simple errors, but many faults appear only under production-like stress.

Organisations that keep a synthetic environment close to reality see failures early, well before users notice. Chaos drills, run on quiet mornings, reveal how the stack behaves when a storage node disappears or a feature flag misfires. System testing lessons loop back into code, infrastructure, and alert rules, and each round raises the floor on reliability.

When a pipeline shortens the gap between idea and release, feedback grows sharper. Product owners stop relying on quarterly analytics; they study yesterday’s cohort and choose today whether a feature stays or goes. Developers see their work in production within hours and refactor while context is fresh. Rigorous testing reduces guesswork, and the roadmap adjusts in near real time.

Communication and Collaboration

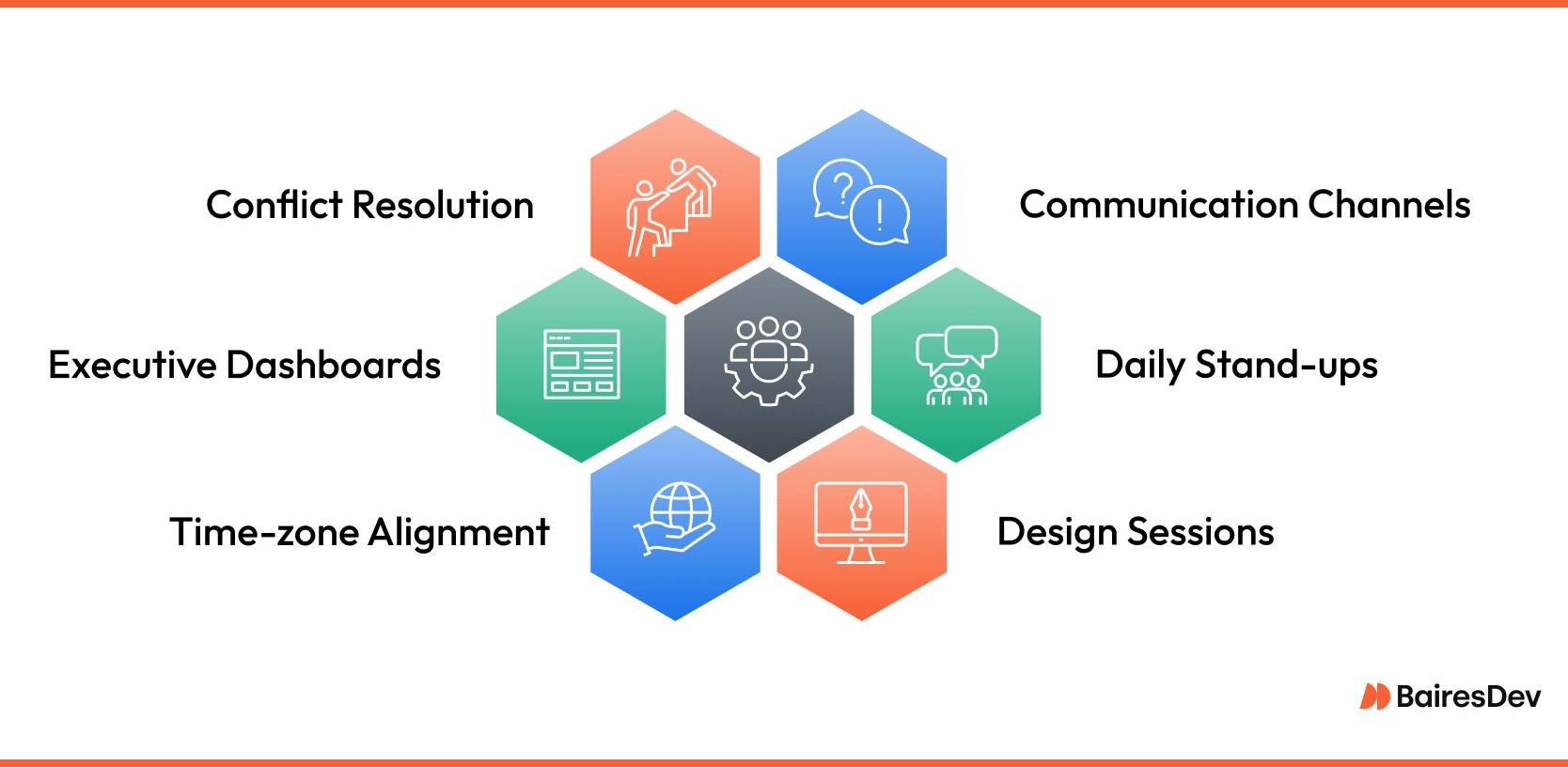

Software quality relies on clear communication and collaboration between individuals, no matter where they sit. At enterprise size the challenge multiplies. Product owners refine priorities in New York while architects review diagrams from São Paulo and security officers monitor controls in Phoenix. Every extra hop in that chain adds latency and risk.

High-trust teams counter the distance by treating communication as a first-class engineering task. They choose a handful of channels, assign each a purpose, and resist the temptation to improvise whenever stress rises.

Daily stand-ups stay short and focused on blockers. Design sessions adopt visual whiteboards and record outcomes immediately, so late-joining colleagues do not re-litigate old decisions. Long-form proposals live in version-controlled documents, allowing inline comments, historical context, and rollbacks.

Time-zone alignment removes much of the friction that plagues follow-the-sun models. When engineers in Buenos Aires share office hours with stakeholders in Chicago, questions resolve within minutes rather than overnight.

Executives do not need a barrage of task details, but they do need to know whether investment is converting into progress. Lightweight dashboards that track lead time, incident count, and burn-down offer the right altitude. Teams review the same boards during internal retrospectives, which keeps narrative drift in check and aligns stories told to leadership with day-to-day facts.

Even conflicts turn productive when they are surfaced early. A frontend squad may flag an API change that breaks accessibility rules, or an SRE may question a release window that collides with a regional marketing push. Because dialogue is continuous and structured, the debate ends quickly, sparing the organisation silent delays and unpleasant surprises.

Trends, Risks, and What Comes Next

The ground under enterprise software is always moving, though not every headline warrants action.

AI: Challenges and Opportunities

Artificial intelligence now generates test cases, detects anomalous log patterns, and recommends code refactors, yet it also introduces new attack surfaces and compliance puzzles. Leaders weigh each benefit against the cost of explainability, model drift, and data-privacy audits. The smartest leaders adopt AI where it amplifies existing talent and automates routine toil, while keeping human review on decisions that shape customer trust.

Platform Engineering

Platform engineering, the discipline of treating infrastructure as an internal product, continues to mature. Self-service pipelines, golden-path templates, and shared observability layers let application squads ship without waiting on central teams. The risk lies in hidden complexity: if a platform team grows faster than its documentation, confusion spreads, and velocity drops. Clear versioning policies and regular platform demos help prevent that outcome.

Cloud-native With a Caveat

Cloud-native services keep expanding, but sovereignty requirements and cost spikes drive selective repatriation. Enterprises now think in terms of workload placement rather than cloud-first slogans. Latency-sensitive microservices may sit in regional data centres, while burst traffic or analytics jobs land in public clouds. A flexible architecture plan, backed by portable tooling and contract-aware abstractions, protects freedom of movement.
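Workload placement can be written down as an explicit rule rather than left to habit. The sketch below is one hypothetical way to do that; the `Workload` fields, the `place` function, and the 20 ms latency threshold are assumptions for illustration, and a real policy would also weigh cost, contracts, and existing capacity.

```python
from dataclasses import dataclass
from enum import Enum


class Placement(Enum):
    REGIONAL_DATA_CENTRE = "regional-data-centre"
    PUBLIC_CLOUD = "public-cloud"


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of a workload's placement constraints."""
    name: str
    data_must_stay_in_region: bool  # sovereignty requirement
    max_latency_ms: int             # tightest latency the workload tolerates


def place(workload: Workload, latency_threshold_ms: int = 20) -> Placement:
    """Apply placement rules in priority order: sovereignty, then latency."""
    if workload.data_must_stay_in_region:
        return Placement.REGIONAL_DATA_CENTRE
    if workload.max_latency_ms <= latency_threshold_ms:
        return Placement.REGIONAL_DATA_CENTRE
    # Everything else, such as burst traffic or analytics jobs, goes to public cloud.
    return Placement.PUBLIC_CLOUD
```

Encoding the rule this way keeps the decision reviewable: when a sovereignty requirement or latency budget changes, the policy changes in one place and every workload can be re-evaluated against it.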

Security Remains a Constant Challenge

Security threats evolve in parallel. Software-bill-of-materials scans, signed artifacts, and zero-trust networks shift from aspirational to mandatory as regulators sharpen their view of supply-chain risk. Teams that already practise automated provenance tracking will adapt quickly. Those that rely on manual sign-offs will find compliance windows shrinking.
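As a rough illustration of what an automated provenance check involves, the sketch below hashes an artifact and verifies a signature over that hash before release. It is deliberately simplified: an HMAC with a shared key stands in for the asymmetric signatures that real artifact-signing schemes use, and the function names are assumptions for this example.

```python
import hashlib
import hmac


def digest(artifact: bytes) -> str:
    """SHA-256 digest of the artifact, as a hex string."""
    return hashlib.sha256(artifact).hexdigest()


def sign(artifact: bytes, key: bytes) -> str:
    """Sign the artifact's digest. HMAC is a stand-in for a real signature scheme."""
    return hmac.new(key, digest(artifact).encode(), hashlib.sha256).hexdigest()


def verify(artifact: bytes, signature: str, key: bytes) -> bool:
    """Return True only if the signature matches this exact artifact."""
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(sign(artifact, key), signature)
```

A pipeline step built on this pattern would refuse to deploy any artifact for which `verify` returns `False`, turning a manual sign-off into a check that runs on every release.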

Talent Sourcing and Retention

Finally, talent markets remain volatile. Hybrid work is now the norm, and retention hinges on meaningful projects, transparent career ladders, and a culture of continuous learning. Organisations that treat upskilling as overhead will watch knowledge walk out the door. Those that embed professional growth into delivery retain the expertise that distinguishes a commodity code shop from a long-term strategic partner.

Conclusion: Raising the Bar for Enterprise Delivery

Process, talent, and technology form a tripod. Weaken one leg and the structure wobbles. Organisations that view methodology as an adaptable toolkit, staff projects with senior engineers who own outcomes, and enforce quality gain a compound advantage. They release features sooner, recover from incidents faster, and react to market shifts with confidence rather than panic.

Nearshore engineering partnerships amplify those gains. Add a pipeline that treats compliance and resilience as table stakes, and high-quality software becomes the norm. It also becomes a lever leadership can pull to open new revenue streams, strengthen customer loyalty, and respond to risk.

The bar for enterprise software delivery has risen, and expectations will keep climbing. By investing in adaptive process, integrated teams, robust automation, and transparent collaboration, organisations place themselves ahead of that curve, ready for the next surprise rather than bracing for impact.

Frequently Asked Questions

How do you keep our software development lifecycle from turning into a paperwork marathon?

We strip it down to essentials. Up-front software design gets enough rigor to avoid rework, then we move fast: small stories, quick code reviews, real metrics. The lifecycle stays visible but never feels bureaucratic.

We already develop software in-house. Where does your team plug in without slowing people down?

Think of us as extra muscle, not more meetings. We slot into your existing iterative development flow, pick up clearly scoped components, and push code to the same repos. Your engineers keep ownership, and we provide additional capacity.

I need a robust software solution now, not six months of discovery. What’s realistic?

If the core requirements are clear, we can get the first slice into production in weeks. From there, we follow an iterative approach: release, measure, adjust. You get value early, plus room to steer without a restart.

How do you treat ongoing maintenance once the launch buzz fades?

We budget for it from day one. After the initial rush, the work shifts into a dedicated maintenance phase: patches, minor features, and performance tuning. The same engineers stay on the code, so context never evaporates.

We have a legacy stack and a new application development roadmap. Can one team handle both?

Yes, with the right mix of specialists. Senior folks tackle refactors and integration points; newer devs focus on green-field modules. Shared reviews keep the codebase coherent even as we modernise it piece by piece.

What if the first iteration misses the mark?

That’s why we release thin and fast. An iteration that lands off-target is a data point, not a disaster. We use customer feedback, tweak the backlog, and roll the next build. Momentum beats perfection every time.