When machine learning (ML) moves from notebooks to production, the open source framework you choose becomes a governance decision, not just a technical one. Benchmark results matter less than choosing the option that reduces long-term risk across teams, infrastructure, and hiring.

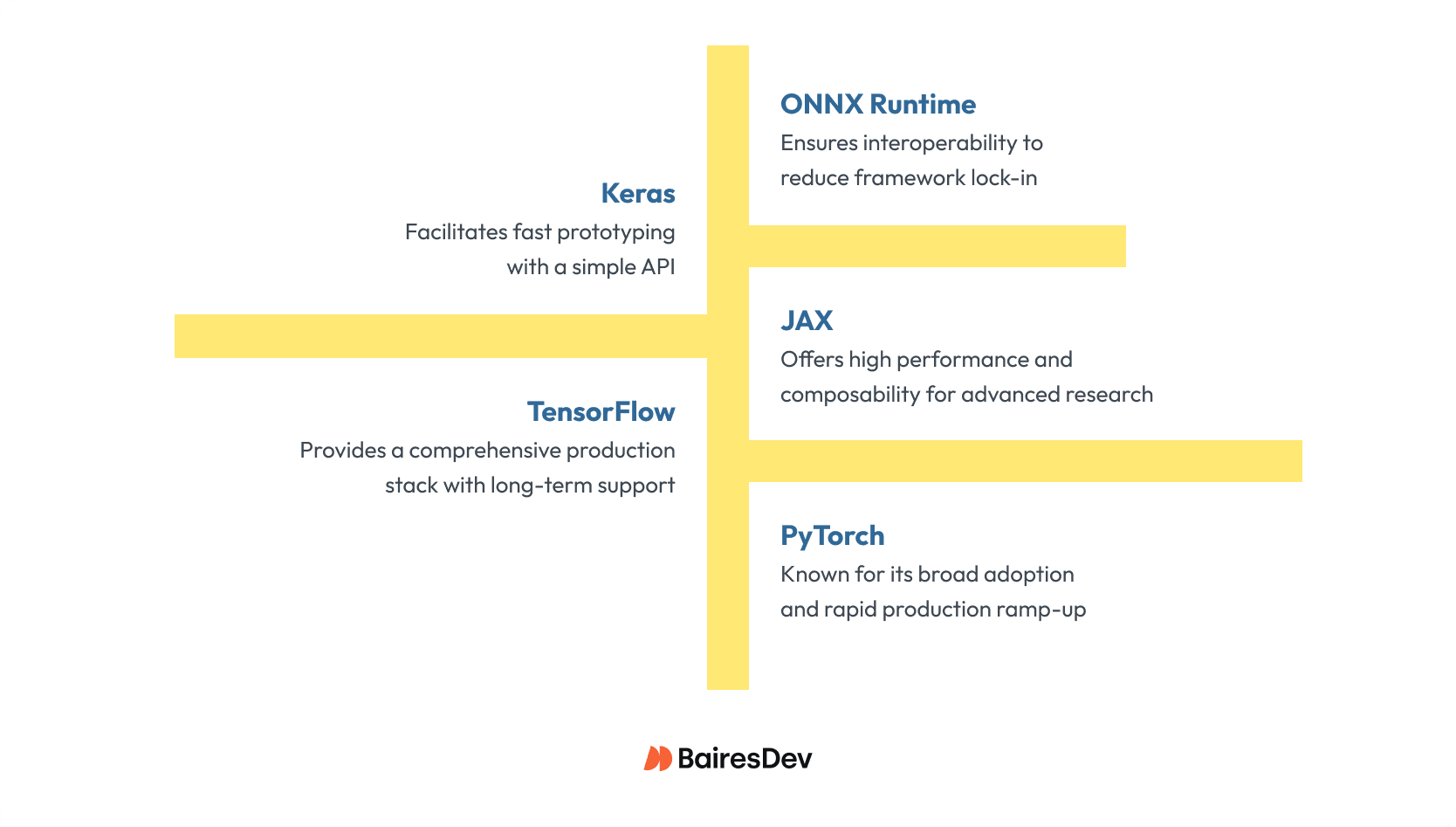

This guide examines leading Python neural network libraries (TensorFlow, PyTorch, JAX, Keras, and ONNX Runtime) through that lens. The goal is not to pick a winner but to help engineering teams make a defensible, lower-risk choice that aligns with their delivery strategy.

Why Framework Choice Matters

Most organizations reach this crossroads after several machine learning projects mature into products. Different teams may have prototypes in different machine learning models. One team used PyTorch for natural language processing, another chose TensorFlow for computer vision. As those prototypes head toward production, the platform team must standardize for maintainability, compliance, and observability.

The framework decision shapes hiring, performance tuning, infrastructure cost, and even supply-chain risk. The wrong choice slows integration across CI/CD pipelines and complicates audit trails for regulated industries.

Framework selection is an architectural commitment. It affects reproducibility, cost, and developer velocity long after the model ships.

Comparing the Leading Libraries

Each framework has its own strengths, community, and operational maturity. The comparisons below focus on what matters to organizations: ecosystem stability, developer experience, performance, machine learning operations (MLOps) alignment, interoperability, governance, and total cost.

TensorFlow

TensorFlow remains the most mature end-to-end framework for both training and serving deep neural networks. Its ecosystem includes TensorFlow Extended (TFX) for pipelines, TensorFlow Serving for deployment, and TensorBoard for experiment tracking. The release cadence is steady, and Google provides long-term support for major versions, which is critical for businesses needing predictable upgrades.

TensorFlow’s graph execution (with optional eager mode) offers determinism and reproducibility across distributed workloads. Pre-built Docker images and Kubernetes integration make deployment consistent across environments.

However, debugging can feel more cumbersome compared to Pytorch, since TensorFlow’s computation graphs and layered APIs can obscure the execution flow, making the learning curve steeper for engineers used to straightforward Python workflows.

Takeaway: TensorFlow offers reliability and strong deployment tooling, but onboarding and debugging require discipline. It’s well-suited for organizations that prioritize compliance, reproducibility, and managed service alignment.

PyTorch

PyTorch has grown to become the industry’s dominant open source framework for applied research and production ML alike. Governed by the PyTorch Foundation under the Linux Foundation, it benefits from open governance and predictable releases. Its dynamic, native Python API enables intuitive model construction and debugging, which is one of the reasons it dominates academic and applied data science teams.

PyTorch 2.0 introduced torch.compile, bridging the performance gap between eager and graph modes. Combined with TorchScript and TorchServe, it now spans research to production without changing frameworks. PyTorch integrates easily with modern MLOps tools, including Weights & Biases, MLflow, and container-based CI/CD.

Takeaway: PyTorch is the pragmatic default for most organizations. It is mature, flexible, and easy to hire for. But, if you require strict long-term-support or compliance requirements, then TensorFlow might be a better fit.

JAX

JAX is a research-first framework from Google focused on high-performance differentiation and compilation through Accelerated Linear Algebra (XLA). It enables functional-style programming, automatic vectorization, and parallelization. For data science teams exploring custom hardware acceleration or frontier machine learning tasks, JAX can offer measurable performance advantages.

However, its ecosystem remains research-oriented, with fewer production tools for serving and observability. Integration with CI/CD or model registries often requires custom work. For advanced teams with in-house platform capabilities, that trade-off may be acceptable.

Takeaway: JAX delivers exceptional performance and composability for advanced workloads but increases operational overhead without strong platform engineering.

Keras

Keras began as a high-level API for TensorFlow and remains a productive entry point for rapid machine learning projects. It’s designed for readability, quick prototyping, and education. For businesses, it serves as a thin layer over TensorFlow, reducing boilerplate without losing access to the TensorFlow backend.

Because Keras is tightly coupled to TensorFlow, its production viability depends on TensorFlow’s stack. Debugging low-level performance issues often requires dropping into TensorFlow directly.

Takeaway: Keras accelerates early exploration but should transition to TensorFlow proper as projects mature towards production.

ONNX Runtime

ONNX Runtime is not a training framework but an inference engine that standardizes model representation across ecosystems. Developed by Microsoft, it executes models exported from TensorFlow, PyTorch, or other tools via the ONNX specification.

Its value lies in interoperability. ONNX Runtime simplifies deployment pipelines by decoupling training and inference stacks. It supports hardware acceleration through DirectML, TensorRT, and CUDA, enabling efficient deep learning models at scale.

For enterprises consolidating diverse ML toolchains, ONNX Runtime provides a stable serving layer with clear governance boundaries.

Takeaway: Use ONNX Runtime to standardize inference and mitigate framework lock-in, especially when multiple machine learning stacks coexist.

Library Comparison Summary

| Library | Ecosystem | Dev Exp | Perf | MLOps | Interop | Governance | Hiring | TCO |

| TensorFlow | ✅ | ✅ | ✅ | ✅ | ✅ | |||

| PyTorch | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| JAX | ✅ | ❌ | ||||||

| Keras | ✅ | ✅ | ✅ | ✅ | ✅ | |||

| ONNX Runtime | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

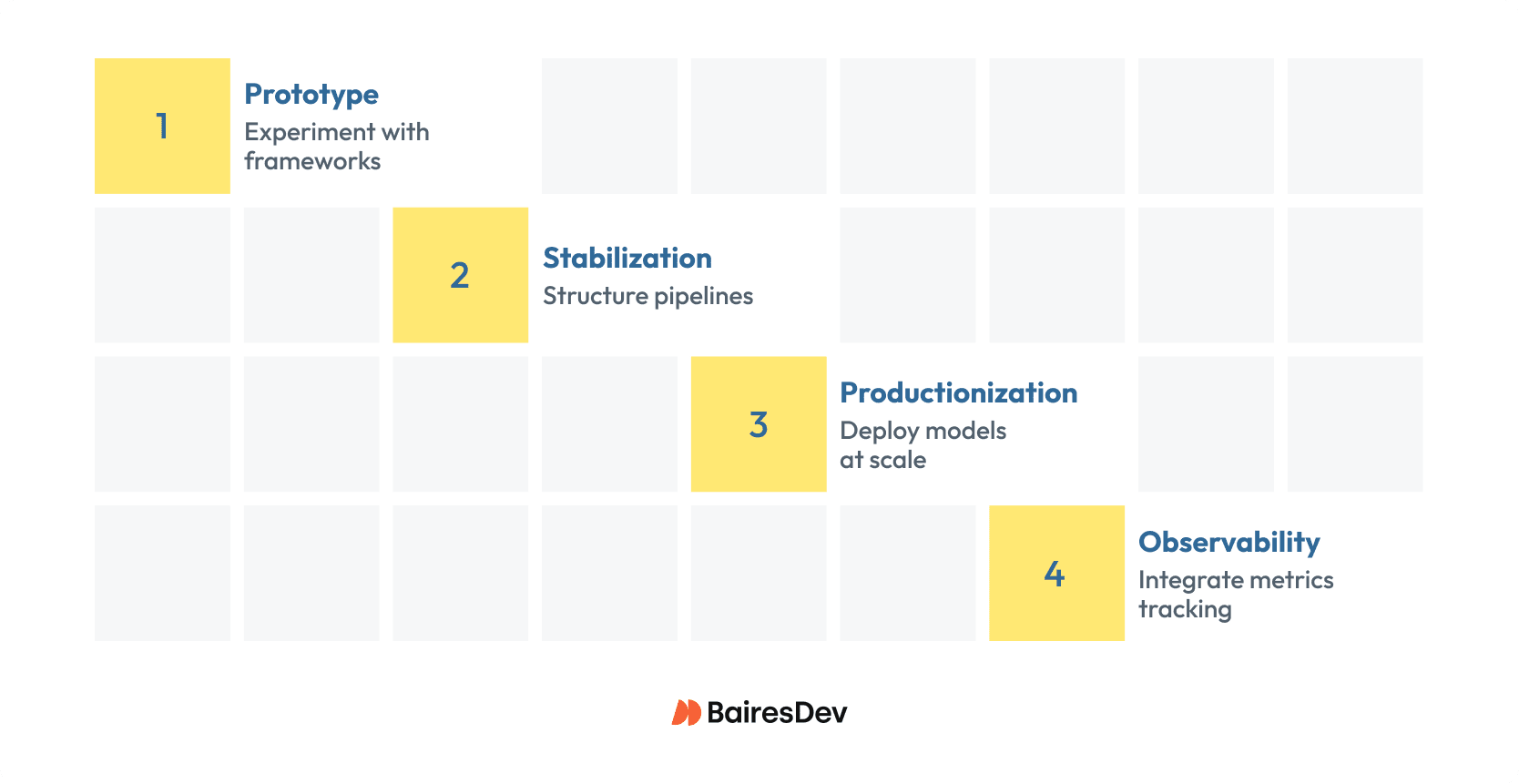

From Prototype to Production

Most organizations follow a four-phase journey in adopting machine learning: Prototype, Stabilization, Productionization, and Observability.

Success depends less on which library is chosen and more on enforcing promotion gates that ensure reproducibility, security, and rollback readiness.

In the Prototype phase, teams experiment with frameworks such as Keras, PyTorch, or JAX, prioritizing model quality over scalability.

During Stabilization, pipelines become more structured. Dependencies, drivers, and random seeds are pinned to ensure reproducible results.

Productionization brings models into operation at scale. Models become packaged with tools like TensorFlow Serving, TorchServe, or ONNX Runtime.

Finally, Observability integrates metrics tracking, retraining triggers, and rollback plans into CI/CD pipelines, ensuring models remain reliable and maintainable in production.

Governance, Security, and Supply Chain Risk

In regulated or large-scale environments, machine learning governance equals risk management. Frameworks evolve rapidly and untracked dependencies or mismatched GPU drivers can introduce instability, making compliance and reproducibility a constant concern.

A core best practice is maintaining a Software Bill of Materials (SBOM) for all model dependencies. Alongside this, teams should pin versions for critical libraries and GPU drivers, such as CUDA and cuDNN, to ensure deterministic behavior and reduce the risk of unexpected failures in production.

Containerization further mitigates supply-chain risk. Using containers from trusted registries helps guarantee that the code running in production matches what was tested, while establishing blue/green deployment strategies ensures that model rollbacks can be executed safely and quickly if issues arise

Framework transparency also simplifies compliance alignment. Open projects like PyTorch RFCs and TensorFlow release notes make it easier for teams to track changes, understand deprecations, and anticipate impacts on the pipelines.

Frameworks with open governance and predictable releases reduce audit friction, limit supply-chain exposure, and make it easier to maintain secure, reliable ML systems.

People, Hiring, and Organizational Fit

Framework adoption is as much a labor-market decision as a technical one.

PyTorch dominates hiring pipelines and data science education, making it easier to staff projects quickly with experienced engineers. TensorFlow retains strong adoption in some enterprise environments and across Google Cloud, while JAX enterprise remains relatively niche. ONNX Runtime is typically leveraged by teams already familiar with PyTorch or TensorFlow, rather than forming the core of new talent pools.

Given these dynamics, optimizing for talent availability is critical. The incremental performance gains from adopting a less common or “exotic” stack rarely justify the cost of retraining teams or migrating existing workflows. Prioritizing widely adopted frameworks can reduce hiring friction, shorten onboarding, and increase the likelihood of long-term project success.

Total Cost of Ownership

Total cost of ownership (TCO) encompasses infrastructure, developer time, ongoing maintenance, and the costs associated with switching frameworks.

PyTorch’s intuitive APIs and strong support help reduce training time and debugging effort, making it easier to ramp up teams quickly. TensorFlow’s mature serving and observability ecosystem lowers operational risk, providing stability in production environments. Meanwhile, ONNX Runtime enables a clear separation between training and serving, which can simplify future migrations and reduce long-term friction.

When evaluating frameworks, it is important to prioritize those that minimize switching and maintenance costs across the entire ML lifecycle, rather than focusing solely on short-term GPU performance. A framework that streamlines development, deployment, and monitoring can deliver far greater efficiency and lower risk over time, making it a more sustainable choice for enterprise-scale machine learning.

Standardization vs. Flexibility

Platform teams frequently debate whether to enforce a single ML framework or allow multiple options across the organization.

Fully standardizing on one library can improve governance, consistency, and maintainability, but it may also slow research and experimentation. Conversely, allowing complete flexibility can accelerate innovation at the cost of operational complexity and increased risk.

A pragmatic approach is to standardize where it matters most: downstream serving and deployment. Using tools like ONNX Runtime or TorchServe ensures consistency and reliability in production, while teams retain the freedom to choose the most appropriate framework for training and experimentation. This balance maintains governance without stifling upstream innovation, enabling both robust operations and research agility.

Final Guidance

For most enterprises, PyTorch strikes the best balance of usability, performance, and ecosystem maturity, making it the pragmatic default for a wide range of projects.

TensorFlow remains strong in compliance-heavy or large-scale environments, while JAX is well-suited to performance-focused research teams with robust internal platform engineering. Keras accelerates prototyping within a TensorFlow ecosystem, and ONNX Runtime helps reduce lock-in by harmonizing inference across different frameworks.

As machine learning systems become increasingly mission-critical, the focus shifts from asking “which framework is fastest?” to asking “which can we operate, audit, and evolve safely?” Choosing a neural network library should therefore be treated as a platform policy rather than a matter of developer preference, balancing talent availability, operational reliability, and long-term risk.