Executive Summary

This article explains how generative AI reshapes enterprise security risk. It shows why traditional security models fail for probabilistic and agentic systems, how shadow AI expands exposure, and how attackers are using AI to scale threats.

The rapid and ubiquitous adoption of artificial intelligence (AI), particularly Generative AI (GenAI), marks a watershed moment in enterprise technology. As AI systems become embedded in mission-critical processes, they introduce a new class of risk defined by non-determinism, probabilistic outputs, and the blurring of boundaries between data and code. Security models built for deterministic software were not designed to protect these assets, and, in many cases, are structurally inadequate to do so.

This article examines how AI is reshaping the enterprise threat landscape. It outlines the security implications of probabilistic models, agentic systems, and unsanctioned AI usage across the workforce, and explains why traditional cybersecurity controls fall short in this new environment. The focus is on establishing a shared understanding of the problem, setting the foundation for the governance and architectural frameworks explored in the next article of this series.

What Is the New AI Risk Landscape for Enterprises?

The AI risk landscape is defined by probabilistic models, autonomous systems, and the convergence of data, logic, and execution. Unlike traditional software, AI introduces risks that cannot be controlled through static rules or perimeter defenses alone.

Artificial Intelligence is no longer an emerging technology. It is now a foundational capability embedded across modern enterprises, public-sector institutions, and global mission systems. Over the past few years, AI adoption has shifted materially. What began as isolated experiments in machine learning has evolved into enterprise-scale AI ecosystems. These now include foundation models, Retrieval-Augmented Generation (RAG) pipelines, agentic systems, autonomous workflows, and multimodal intelligence frameworks.

At the same time, employees across the organization—from executives to entry-level analysts—are increasingly using third-party AI tools to support daily work. This parallel expansion of formal deployments and informal usage has widened the enterprise AI footprint far faster than most security and governance programs were designed to handle.

This acceleration sets the stage for a core challenge facing technology leaders today.

Why Do Traditional Security Models Fail for AI Systems?

Traditional security models fail because they assume deterministic behavior, auditable logic paths, and binary failure modes. These assumptions do not hold for probabilistic AI systems.

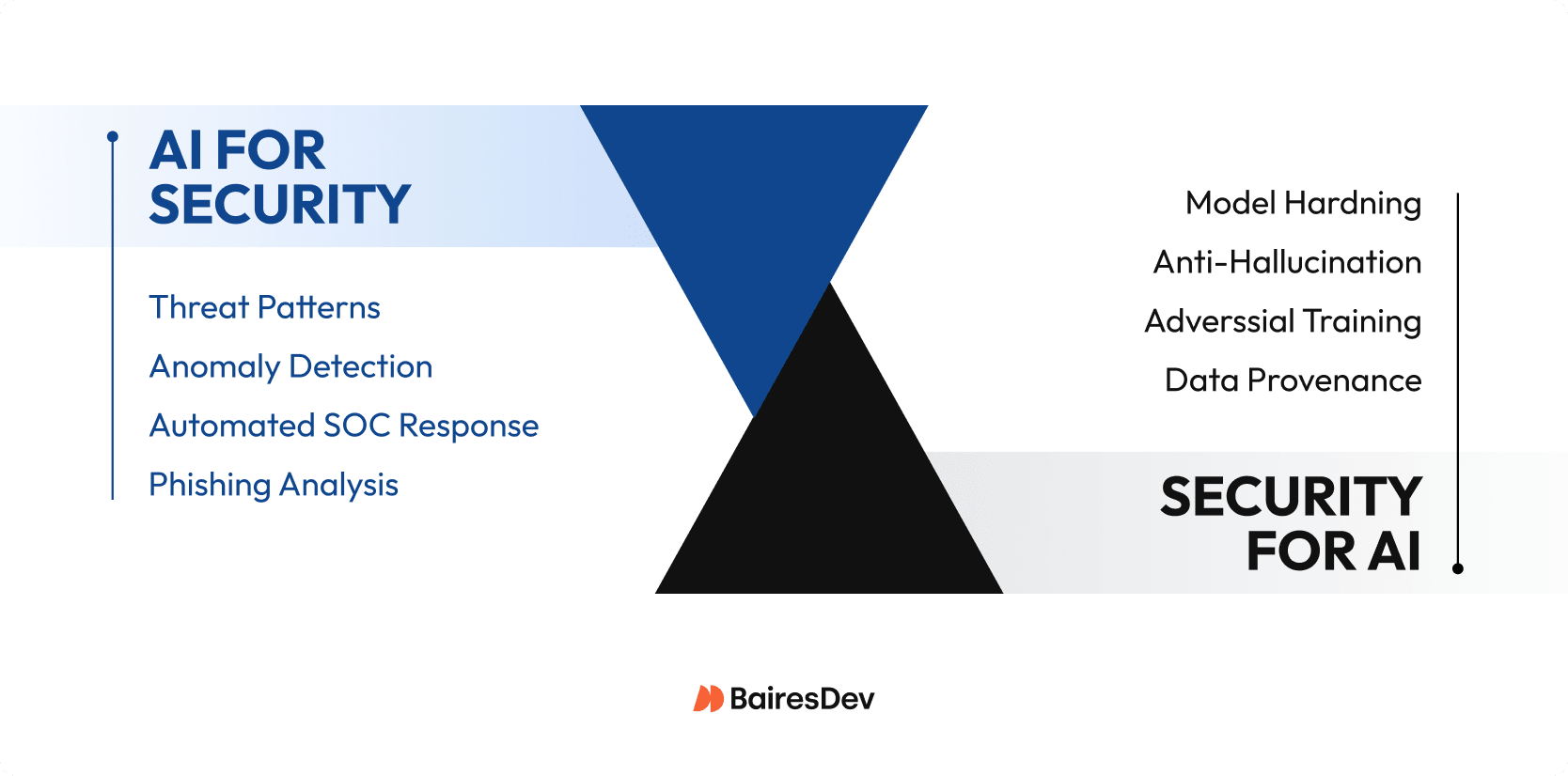

Enterprises now operate within a bifurcated security landscape. On one side, AI is increasingly used for security—to automate detection, correlate signals, and improve defensive responsiveness. On the other, organizations must secure AI itself, protecting the integrity, confidentiality, and availability of probabilistic model architectures.

This dual mandate exposes a structural insufficiency. Probabilistic AI systems violate each of these assumptions. Closing this gap requires understanding how AI systems diverge architecturally from conventional software—and why those differences matter from a security perspective.

The resulting challenges are not incremental. They stem from fundamental properties of probabilistic AI systems that reshape how risk manifests in enterprise environments.

Stochasticity and Opacity

AI models generate outputs through probabilistic inference rather than explicit, traceable rules.

Unlike deterministic software, where logic is explicitly programmed and traceable, AI models operate through probabilistic inference. Outputs emerge from learned patterns across vast parameter spaces rather than fixed rules, making traditional controls such as signature-based detection and static code analysis poorly suited to constrain model behavior.

This opacity creates an interpretability gap. The causal link between input and output is often unclear, complicating root-cause analysis, incident response, and forensic investigation.

Serialization of Knowledge (Data as Code)

In Generative AI systems, proprietary enterprise knowledge is not merely accessed at runtime, it is encoded directly into a model’s weights and biases through training or fine-tuning. This transforms the model artifact itself into a critical intellectual property asset.

Protecting data at rest or in transit is no longer sufficient. Teams must safeguard model weights, inference processes, and downstream outputs against inversion, extraction, and replication attacks.

Semantic, Not Binary, Failure Modes

AI systems fail semantically rather than through binary system errors.

Traditional software failures are typically binary. Systems crash, throw exceptions, or fail to execute. AI systems fail differently. Errors often appear as hallucinations, bias, or subtle misalignment—outputs that are syntactically valid but functionally hazardous.

These failures require a shift away from availability-focused monitoring toward continuous validation of output integrity verification.

Adversarial Sensitivity

AI models can be manipulated through adversarial inputs that exploit semantic and contextual weaknesses.

AI models are vulnerable to adversarial perturbations, inputs crafted to exploit high-dimensional embedding spaces. Techniques such as prompt injection and indirect prompt injection do not target system code. Instead, they manipulate model behavior by reshaping context and instruction hierarchies. Because these attacks operate at the semantic level, they bypass traditional detection mechanisms while producing outputs that appear legitimate.

Why Does Agentic AI Create New Security Risks?

Agentic AI introduces risk because non-deterministic reasoning is combined with autonomous execution.

As AI systems evolve from passive generators to agentic entities capable of invoking tools, calling APIs, and executing workflows, the attack surface expands exponentially. The non-deterministic nature of Large Language Models (LLMs) combined with autonomous execution introduces a new class of risk.

In these environments, theoretical vulnerabilities can translate directly into kinetic or transactional impacts within the enterprise infrastructure.

How Does Enterprise AI Adoption Expand the Attack Surface?

Enterprise AI adoption expands the attack surface by placing probabilistic systems on the critical path of business execution.

In practice, this adoption has moved beyond experimental piloting to become a fundamental component of the corporate technology stack. This proliferation spans major verticals, including healthcare, financial services, defense, and critical infrastructure, signaling that AI is no longer a differentiator but an operational baseline.

The integration of AI is not monolithic. It permeates the enterprise through distinct functional modalities, each introducing different dependency risks. Here are some common use cases:

Knowledge Synthesis and Retrieval

- Enterprise Search & knowledge management (RAG Architectures): shifting from keyword-based indexing to semantic retrieval.

- Document Summarization & Automation: processing unstructured legal, medical, and technical corpora at scale.

Generative Engineering

- Code Generation & DevSecOps: utilizing large language models (LLMs) to accelerate the SDLC, introducing risks of insecure code injection and IP leakage.

Predictive Intelligence

- Risk Assessment & Fraud Detection: applying high-velocity inference for anomaly detection in transaction layers.

- Predictive Analytics: driving supply chain logic and financial forecasting models.

Autonomous and Agentic Systems:

- Customer Support Automation: shifting from static decision trees to dynamic conversational agents.

- Agentic AI: systems granted permission to execute tools, manipulate APIs, and make autonomous decisions without human-in-the-loop oversight.

These enterprise AI use cases increasingly place models on the critical path of business execution. By embedding probabilistic systems into otherwise deterministic workflows, enterprises create new dependency chains. A failure at the model layer becomes an operational disruption.

As systems move closer to autonomous decision-making—particularly in agentic AI—the potential blast radius expands. Security failures can extend beyond data exposure to the direct manipulation of business logic, transactions, and external systems.

What Is Shadow AI and Why Is It Dangerous?

One of the least visible yet most consequential contributors to this expanded attack surface is shadow AI.

“Shadow AI” refers to the widespread, unsanctioned use of third-party generative tools by employees, often outside formal procurement, security review, and IT oversight. Unlike traditional Shadow IT, which typically involves persistent software installations, shadow AI usage is frequently ephemeral and browser-based. This makes it difficult to detect through standard endpoint controls. This creates a parallel, unmonitored inference layer where corporate data interacts with external, black-box models.

For example, in a notable 2023 leak, Samsung employees inadvertently exposed proprietary source code by pasting it into ChatGPT for debugging. Because the interaction was browser-based and unsanctioned, it bypassed standard security controls, moving sensitive IP into an external model’s training pool.

Mechanisms of Data Exfiltration and Contamination

The primary risk associated with shadow AI is not access alone, but the implicit transfer of sensitive assets. When employees use public large language models to debug proprietary code, summarize legal contracts, or analyze HR datasets, they effectively shift data stewardship to external providers. This introduces several exposure vectors.

- Corpus Absorption

Data submitted to public model endpoints may be incorporated into provider retraining pipelines. In this scenario, proprietary intellectual property is not simply leaked; it may be encoded into future model weights, making it recoverable by third parties through targeted prompting.

- Regulatory Non-Compliance

Unmonitored data egress bypasses established Data Loss Prevention controls and can trigger immediate violations of data sovereignty and privacy regulations such as GDPR, HIPAA, and PCI DSS. Once data crosses the inference boundary, organizations lose the ability to audit provenance or enforce retention policies.

- Model Contamination

Reliance on unverified external models for code generation or decision support can also introduce risk inward. Hallucinated, biased, or poisoned outputs may be reintegrated into internal systems, contaminating development streams.

Why Does Blocking AI Fail as A Security Strategy?

Blocking AI fails because it suppresses visibility while accelerating unsanctioned usage.

Attempting to mitigate AI-related risk through total obfuscation or network blocking is increasingly nonviable. As noted in industry analysis, as AI capabilities are embedded into standard SaaS platforms, they function less like discrete applications and more like a ubiquitous utility. A strict “block-by-default” posture not only stifles innovation, but also drives AI usage further underground.

As a result, the architectural challenge shifts. Rather than enforcing rigid perimeter controls, organizations must prioritize observability and safe enablement—establishing guardrails that sanitize inputs, anonymize sensitive data, and mediate interactions before information reaches external inference engines.

How Are Attackers Using AI Against Enterprises?

Attackers use AI to scale, personalize, and automate attacks while simultaneously targeting enterprise AI systems themselves.

The integration of AI into the cyber-threat landscape introduces a “force multiplier” effect for adversaries. It lowers the barrier to sophisticated attacks while simultaneously expanding the enterprise attack surface. This convergence manifests in two reinforcing dimensions:

1. AI-Enhanced Offensive Capabilities

Adversaries increasingly leverage generative models to accelerate the “Kill Chain” (attack lifecycle), compressing the timeline from reconnaissance to execution. Here are some salient examples:

- Hyper-Personalized Social Engineering uses LLMs to generate context-aware phishing campaigns at scale, bypassing traditional syntactic filters.

- Polymorphic Malware Generation automates the creation of mutating code to evade signature-based detection.

- Automated Vulnerability Discovery uses AI agents to autonomously scan and reverse-engineer API logic to identify exploitable gaps.

- Synthetic Traffic & Disinformation flood detection systems with synthetic traffic patterns to mask malicious lateral movement or destabilize trust.

2. What are the main categories of AI security vulnerabilities?

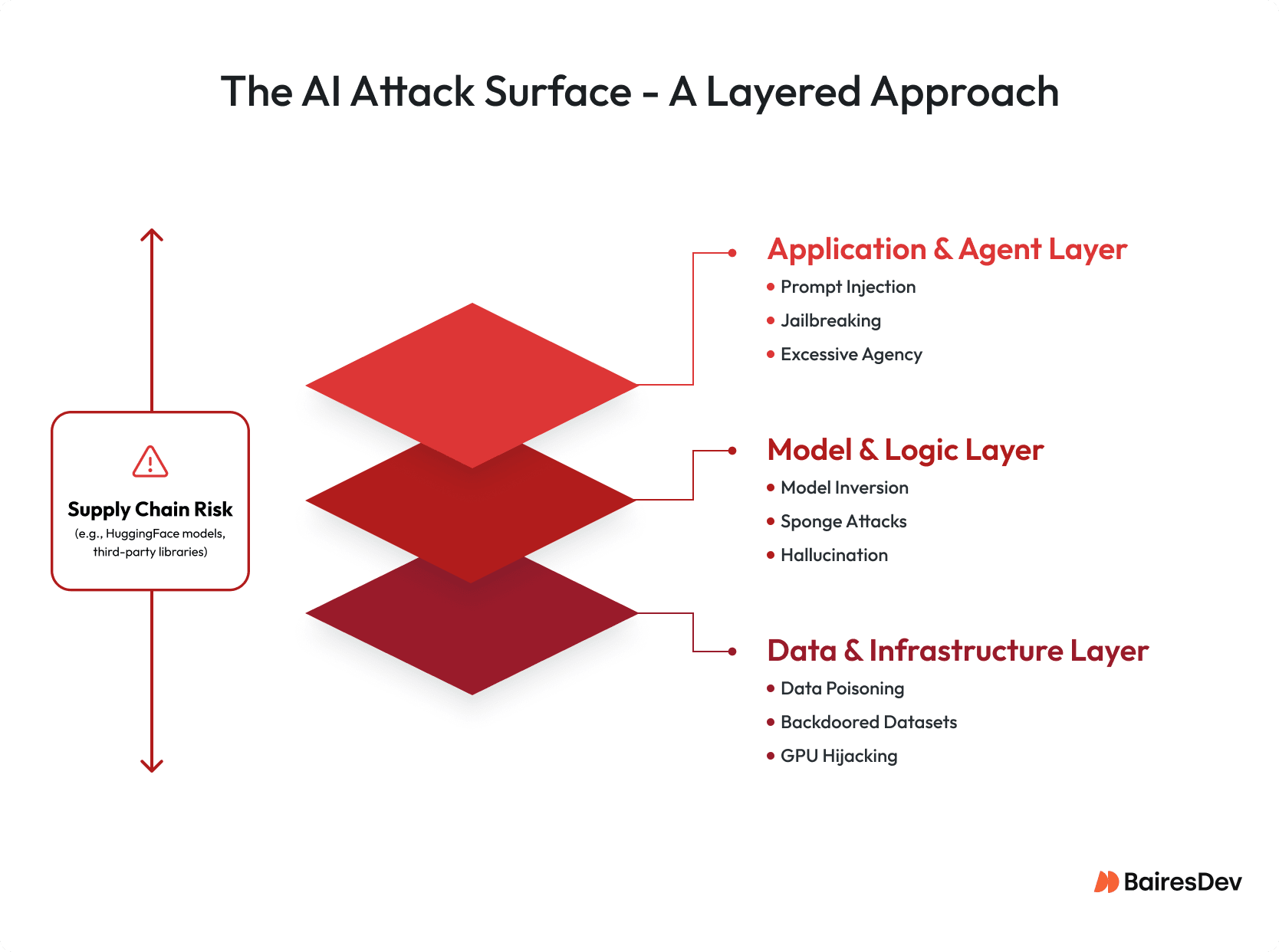

At the same time, AI systems deployed within the enterprise represent high-value targets. The vulnerabilities inherent in these systems span the entire MLOps lifecycle, from data ingestion to inference runtime. These risks are best understood across three layers.

- Model-Level Threats (Inference and Logic)

Model-level attacks target the probabilistic behavior and alignment of AI systems. Techniques such as prompt injection and semantic exploitation manipulate input context to override system instructions, effectively bypassing safety guardrails without modifying underlying code.

More advanced attacks focus on model inversion and extraction, where adversaries reconstruct training data or functionally replicate proprietary model weights, resulting in intellectual property theft. Alignment bypass techniques further attempt to strip away reinforcement learning safeguards, exposing models to unsafe or unintended behavior.

- Data-Level Threats (Corpus and Context)

Data-level attacks compromise the integrity and confidentiality of the information that fuels AI systems. For example, data poisoning and backdoor insertion embed malicious triggers into training or fine-tuning datasets, causing models to behave incorrectly only when a specific “trigger” pattern is present.

In Retrieval-Augmented Generation architectures, attackers may poison vector databases or knowledge stores, leading models to surface malicious content as trusted information. Lastly, sensitive data resurfacing exploits model memorization to extract personally identifiable information or credentials inadvertently included in training corpora.

- Infrastructure-Level Threats (Pipeline and Supply Chain)

Infrastructure-level attacks target the software and compute scaffolding that supports AI operations. Think of MLOps pipelines that may be compromised through malicious code injection in CI/CD workflows. Another example are supply-chain risks emerging from reliance on pre-trained models sourced from public repositories (e.g., Hugging Face) that may contain embedded malware or backdoors.

Additional threats include compute resource hijacking, where insecure APIs are exploited to repurpose GPU clusters for unauthorized workloads. More severe cases involve agentic execution exploits that allow attackers to control AI agents capable of executing system commands or modifying external databases.

What Is the Probabilistic Risk Shift in Enterprise AI?

The probabilistic risk shift reflects a move from securing deterministic infrastructure to securing stochastic, probabilistic AI models. Generative AI has fundamentally reshaped the enterprise security landscape, creating a bifurcated environment where organizations must leverage AI for defense while hardening models against threats like hallucination, prompt injection, and model inversion.

Compounded by the rise of shadow AI and the weaponization of AI by adversaries, the enterprise attack surface now encompasses the very logic and knowledge encoded within the enterprise’s critical assets. Conventional security tools like firewalls, data loss prevention, and static analysis are structurally ill-suited to this environment. They were designed for binary outcomes, not for the opaque, non-deterministic behavior of large language models. Continued reliance on these legacy controls creates a growing governance gap, leaving high-velocity AI adoption without high-assurance guardrails. Identifying this shift is only the diagnostic. The response must be architectural.