Executive Summary

AI risk is fundamentally different from traditional software due to its probabilistic and opaque nature. Because AI operates at the intersection of code, data, and intent, ad-hoc controls are no longer sufficient. This section outlines why institutionalized governance—grounded in industry frameworks—is the essential prerequisite for defensible, scalable, and responsible AI deployment.

In a real-world scenario, the necessity for institutionalized governance becomes undeniable the moment you discover public AI tools are actively exposing your company’s intellectual property and internal resources. Faced with this breach, the instinct is often to retreat into a reactive “block-and-deny” posture. However, we quickly realized that such a stance is strategically unsound. Instead, we pivoted to institutionalizing “safe AI”—deploying a governance framework that provided sanctioned, secure alternatives. This experience crystallized a fundamental truth: governance cannot be a blockade; it must be a path.

This shift requires a fundamental transition from ad-hoc security controls to institutionalized governance. AI systems operate at the intersection of code, data, and human intent, which means accountability can no longer reside within isolated technical silos. Responsibility must be elevated to an organizational mandate that deliberately bridges data science, cybersecurity, and legal/compliance functions.

The strategic imperative is clear: enterprises must deploy a defense-in-depth strategy that harmonizes rapid innovation with rigorous assurance. This balance cannot be achieved through bespoke, home-grown methodologies alone. AI risk is too multidimensional, and regulatory scrutiny too unforgiving, to rely on informal or inconsistent approaches.

Instead, organizations must adopt industry-validated frameworks that provide a structured vernacular for risk quantification, mitigation, and auditability. Alignment with established standards ensures that AI defense architectures are not only technically sound, but also defensible in the face of regulatory scrutiny.

The analysis that follows is grounded in leading governance and security frameworks that translate AI risk from abstract concern into operational discipline.

Strategic Alignment: The NIST AI Risk Management Framework (AI RMF)

The National Institute of Standards and Technology (NIST) AI RMF establishes a governance-oriented baseline for managing AI risk across the enterprise lifecycle. It serves as the strategic foundation for enterprise AI governance.

Unlike traditional cybersecurity frameworks that focus primarily on technical controls, the AI RMF addresses the nature of AI systems themselves—acknowledging that model risk is generated as much by data context, human interaction, and deployment environment as it is by code. The framework provides an iterative, outcome-focused structure designed to manage AI risk continuously rather than as a one-time compliance exercise.

At its core, the AI RMF decomposes AI governance into executable functions and measurable trust objectives. This structure provides enterprises with a repeatable way to align organizational accountability, technical controls, and acceptable risk outcomes across the AI lifecycle.

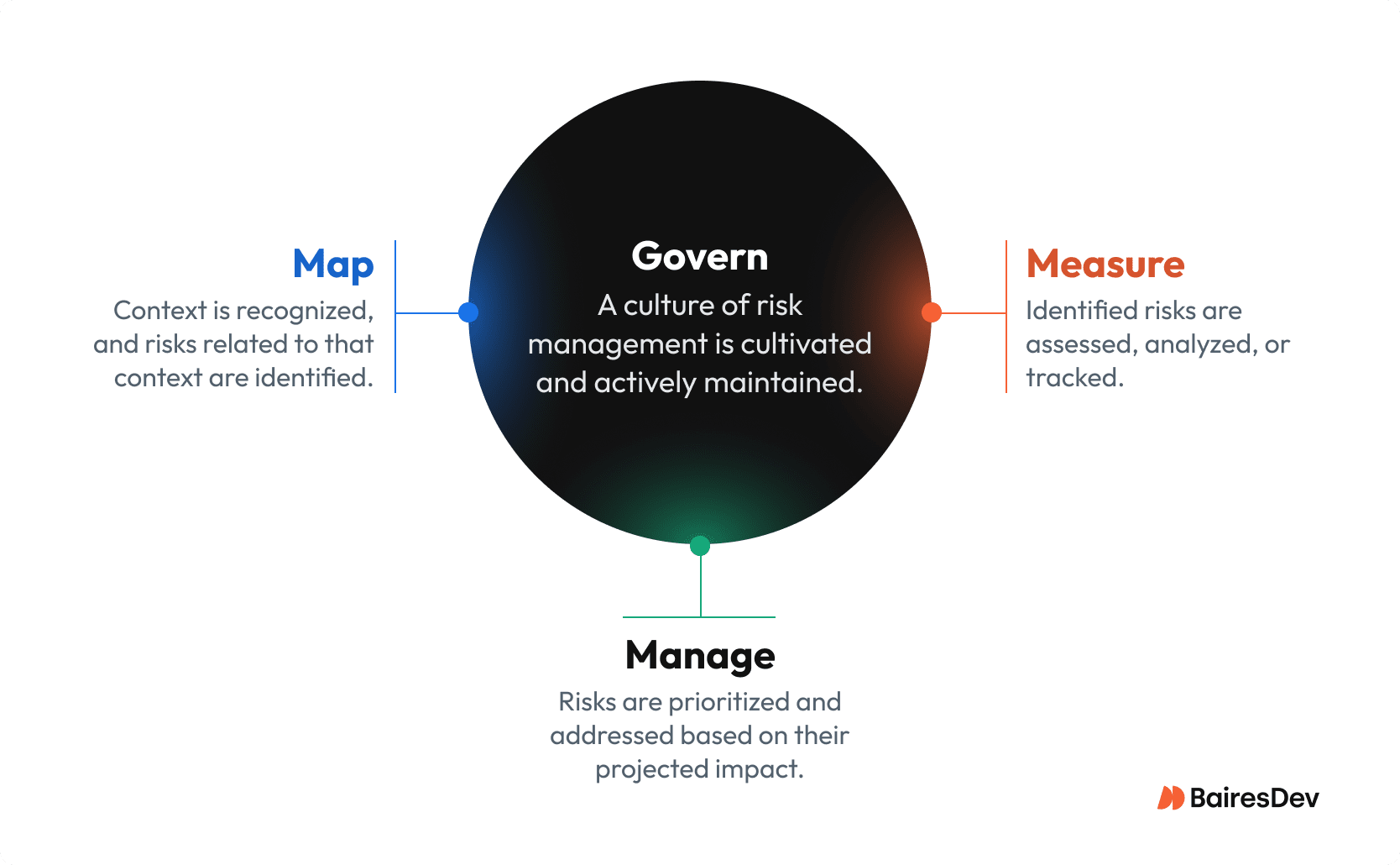

AI Risk Governance Across the Lifecycle

The AI RMF is organized around four interrelated functions that collectively define how AI risk is governed, assessed, and mitigated across the system lifecycle.

GOVERN: Accountability and Risk Ownership

This foundational function establishes the organizational “risk charter” for AI. It moves beyond high-level policy statements to define concrete roles and responsibilities for all AI actors—including developers, deployers, operators, and auditors. Its primary executive output is the explicit definition of organizational risk tolerance, ensuring that technical deployments remain aligned with legal obligations and enterprise risk appetite.

MAP: System Context and Intended Use

Risk is inherently contextual. The MAP function requires comprehensive documentation of the system’s deployment environment. It requires explicit identification of the intended purpose versus potential misuse. By clearly defining these boundaries early, organizations frame how the model is expected to behave before any technical assessment begins.

MEASURE: Risk Measurement and Validation

The MEASURE function translates qualitative concerns into observable metrics. It benchmarks system behavior against identified risks using objective testing methodologies, including algorithmic red teaming, to stress-test model behavior under adversarial and edge-case conditions. This phase provides empirical evidence to support governance decisions.

MANAGE: Risk Mitigation and Enforcement

MANAGE is the execution layer where risk decisions are enforced. Based on outputs from the MEASURE phase, organizations implement architectural and procedural controls—such as input sanitization, access constraints, and AI firewalls. Critically, this function forces an explicit determination of residual risk: whether it can be mitigated, transferred, or formally accepted prior to deployment.
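
The residual-risk determination described above can be made mechanical. The following is a minimal sketch, assuming a simple in-memory risk register; the names `Disposition`, `ResidualRisk`, and `blocking_risks` are hypothetical and not part of the AI RMF itself. It blocks deployment for any risk that has no disposition, or that was accepted without a named approver.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Disposition(Enum):
    """The three outcomes named in the text for a residual risk."""
    MITIGATED = "mitigated"
    TRANSFERRED = "transferred"
    ACCEPTED = "accepted"


@dataclass(frozen=True)
class ResidualRisk:
    risk_id: str
    disposition: Optional[Disposition] = None  # None means "not yet decided"
    approver: Optional[str] = None             # who signed off on an accepted risk


def blocking_risks(register: List[ResidualRisk]) -> List[str]:
    """Return the IDs of risks that should block deployment."""
    blocked = []
    for risk in register:
        undecided = risk.disposition is None
        unsigned = risk.disposition is Disposition.ACCEPTED and not risk.approver
        if undecided or unsigned:
            blocked.append(risk.risk_id)
    return blocked
```

Requiring an approver only for accepted risks is a design assumption here: mitigation and transfer leave their own evidence trail, while acceptance is a decision someone has to own.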

What Makes an AI System Trustworthy From a Security Perspective?

From a security perspective, an AI system is trustworthy when its behavior can be evaluated, governed, and defended across its full lifecycle.

The AI RMF defines trustworthiness as a set of measurable system properties; among them, four are particularly critical from a security architecture perspective.

Security and Resiliency

AI systems must maintain functional integrity under adversarial pressure. This includes resilience against threats such as data poisoning and model inversion, as well as the ability to degrade gracefully rather than fail catastrophically during an attack.

Privacy Enhancement

Given the tendency of models to memorize training data, privacy-preserving mechanisms are required to prevent the inference or leakage of personally identifiable information (PII) and sensitive intellectual property. Techniques such as differential privacy and cryptographic protections play a central role here.
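
To make the idea of differential privacy concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. It illustrates only the core principle of calibrating noise to a privacy budget; it is not how model training is typically protected, which would normally rely on a dedicated library and a training-time method. The function names and the `epsilon` parameter naming are assumptions for illustration.

```python
import random
from typing import Callable, Iterable, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(
    records: Iterable,
    predicate: Callable[[object], bool],
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> float:
    """Return a noisy count; smaller epsilon means more noise, more privacy."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for record in records if predicate(record))
    # Adding or removing one record changes a count by at most 1,
    # so the sensitivity is 1 and the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

The released value is close to the true count on average, but any single answer reveals little about whether a specific individual's record was present.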

Explainability and Interpretability

Effective governance requires that system outputs be traceable and auditable. Explainability mechanisms address the “black box” problem by enabling reviewers to understand decision rationale—an essential requirement for human-in-the-loop oversight and regulatory review.

Fairness with Harmful Bias Managed

Security extends to output integrity. Governance processes must identify and manage data and algorithmic biases to prevent the amplification of systemic disparities or the generation of inequitable outcomes that could expose the enterprise to legal, ethical, and reputational risk.

Translating Governance Into Security Execution

Here’s how strategic governance actually shows up in security testing and architectural controls.

Bridging Policy and Execution

While the NIST AI Risk Management Framework establishes the strategic why and what of AI governance, it does not prescribe the specific technical heuristics required to defend AI systems in practice. Operationalizing the MAP (threat identification) and MEASURE (testing) functions therefore requires more granular threat and vulnerability frameworks that can guide day-to-day security decisions.

Closing this execution gap requires industry-standard frameworks that translate abstract risk into testable hypotheses and enforceable controls. MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems) and the OWASP Top 10 for LLM Applications fill that role: the former catalogues adversary techniques, and the latter catalogues the most critical application vulnerabilities.

How Does MITRE ATLAS Model Adversarial AI Threats?

MITRE ATLAS models adversarial AI threats by mapping how real attackers target machine learning systems across the attack lifecycle, adapting the MITRE ATT&CK taxonomy of tactics, techniques, and procedures to the AI domain.

It shifts organizational posture from abstract anxiety (“Is our AI safe?”) to concrete, empirically grounded threat modeling (“Are we resilient against specific adversarial tactics, techniques, and procedures?”). Two aspects of MITRE ATLAS are particularly relevant:

Role in Governance

ATLAS functions as the AI-specific kill chain. It maps adversary activity across the full attack lifecycle, from resource development through model manipulation and downstream impact.

Operational Application

ATLAS directly informs the Security Assessment Plan by defining concrete red-teaming scenarios. For example, identifying the technique AML.T0020 (Poison Training Data) enables security teams to validate specific data provenance and integrity controls. These include mechanisms such as cryptographic dataset signing, rather than reliance on generic data hygiene policies.
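
As an illustration of what a dataset integrity control might look like, the sketch below hashes each dataset file, signs the resulting manifest, and verifies it before training. It uses HMAC-SHA256 from the Python standard library purely to stay self-contained; that is a symmetric scheme, and a production pipeline would more likely use an asymmetric signature so that verifiers never hold the signing key. All function names are hypothetical.

```python
import hashlib
import hmac
import json
from typing import Dict, Mapping


def build_manifest(files: Mapping[str, bytes]) -> Dict[str, str]:
    """Map each file name to the SHA-256 digest of its contents."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in files.items()}


def sign_manifest(manifest: Mapping[str, str], key: bytes) -> str:
    """Sign a canonical JSON encoding of the manifest with HMAC-SHA256."""
    payload = json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_dataset(files: Mapping[str, bytes], signature: str, key: bytes) -> bool:
    """Recompute the manifest signature and compare in constant time."""
    expected = sign_manifest(build_manifest(files), key)
    return hmac.compare_digest(expected, signature)
```

A training job would call `verify_dataset` on the files it is about to consume and refuse to start if the check fails, which turns "data hygiene" into a testable control for a red-team scenario.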

OWASP Top 10 for Agentic Applications 2026: Key Security Risks

The OWASP Top 10 for Agentic Applications 2026 identifies the most critical security risks facing autonomous and agentic AI systems.

Where ATLAS focuses on adversary behavior, the OWASP framework examines the AI system’s exposed attack surface. It serves as a tactical prioritization framework for application security (AppSec) and DevOps teams by identifying the most critical vulnerabilities inherent in generative AI architectures.

From a governance perspective, the OWASP list highlights the consensus-driven “critical few” risks that must be addressed prior to deployment. In operational terms, it dictates the architectural countermeasures required in the MANAGE function. For instance, mitigating prompt injection and excessive agency requires deploying application-layer controls, such as AI firewalls or semantic guardrails, to sanitize inputs and restrict the model’s action space.
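
Restricting the model's action space can be sketched as a deny-by-default allow-list that sits between the model and its tools. The example below is a minimal illustration, not a real AI firewall product or API; the tool names and the `authorize_action` function are hypothetical.

```python
from typing import Any, Dict, FrozenSet, Mapping

# Hypothetical allow-list: each permitted tool and the argument names it may receive.
ALLOWED_ACTIONS: Dict[str, FrozenSet[str]] = {
    "search_knowledge_base": frozenset({"query"}),
    "create_ticket": frozenset({"title", "body"}),
}


def authorize_action(tool: str, args: Mapping[str, Any]) -> bool:
    """Allow a model-proposed tool call only if the tool and all arguments are listed."""
    permitted_args = ALLOWED_ACTIONS.get(tool)
    if permitted_args is None:
        return False  # unknown tools are denied by default
    return set(args) <= permitted_args
```

Because the check runs outside the model, a successful prompt injection can change what the model asks for but not what it is allowed to do.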

Threat-Informed AI Governance Model

By integrating these three frameworks, the enterprise establishes a cohesive “defense-in-depth” strategy that covers policy, behavior, and vulnerability:

| Framework | Domain | Primary Question Answered | Functional Role |
| --- | --- | --- | --- |
| NIST AI RMF | Strategy & Policy | What is our risk tolerance and desired outcome? | Defines the rules of engagement and accountability (Governance). |
| MITRE ATLAS | Threat Intelligence | How will an adversary attack this system? | Defines the specific attack vectors and TTPs to simulate (Red Teaming). |
| OWASP Top 10 | AppSec & Runtime | Where is the application most likely to break? | Defines the required architectural controls and code-level mitigations (Hardening). |

How Do Enterprises Institutionalize AI Governance?

Enterprises institutionalize AI governance by embedding risk ownership, validation, and enforcement into formal operating structures, rather than relying on ad-hoc controls or policy statements. This elevates governance from a technical concern to a leadership mandate.

The convergence of stochastic AI risk, discussed in the first part of this exploration, with emerging governance frameworks requires an immediate shift in enterprise posture. Security leaders can no longer adopt a “wait and see” approach. Instead, they must design governance models that are as adaptive and continuous as the threats they are meant to counter.

The following strategic mandates define the baseline requirements for securing the enterprise AI lifecycle. Together, they move governance from conceptual alignment to operational enforcement.

Secure-by-Design Through Harmonized Frameworks

Security must be embedded into the AI lifecycle before training begins, not patched in at inference time. This requires treating governance frameworks as execution mechanisms rather than reference documents.

Enterprises should adopt the NIST AI Risk Management Framework as the non-negotiable standard for all AI initiatives, embedding the MAP and MEASURE functions directly into MLOps pipelines as formal quality gates. However, theory often hits a reality check during implementation. In a recent review of a GenAI agent implementation, we found that even with the OWASP Top 10 as a standard, it was a struggle to effectively mitigate threats like Prompt Injection and Excessive Agency. Red-teaming exercises proved that clever prompting could easily trick the model into bypassing safety guidelines, and it became clear that “prompt engineering” a model into safety simply doesn’t scale.
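
One way to picture MAP and MEASURE as pipeline quality gates is a check that fails the build when context documentation or test evidence is missing. The sketch below assumes a hypothetical record structure (`MapRecord`, `MeasureReport`) and a hypothetical threshold parameter; none of these names come from the AI RMF or from any specific MLOps tool.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass
class MapRecord:
    """Hypothetical MAP artifact: documented context for the system."""
    intended_use: str = ""
    misuse_cases: List[str] = field(default_factory=list)


@dataclass
class MeasureReport:
    """Hypothetical MEASURE artifact: attack category -> fraction of
    red-team attempts that succeeded (0.0 to 1.0)."""
    attack_success_rate: Dict[str, float] = field(default_factory=dict)


def gate_failures(
    map_record: MapRecord,
    report: MeasureReport,
    required_categories: Iterable[str],
    max_success_rate: float,
) -> List[str]:
    """Return human-readable reasons to fail the gate; empty means pass."""
    failures = []
    if not map_record.intended_use.strip():
        failures.append("MAP: intended use is undocumented")
    if not map_record.misuse_cases:
        failures.append("MAP: no misuse cases identified")
    for category in required_categories:
        rate = report.attack_success_rate.get(category)
        if rate is None:
            failures.append(f"MEASURE: no test evidence for {category}")
        elif rate > max_success_rate:
            failures.append(f"MEASURE: {category} attack success rate {rate:.0%} is too high")
    return failures
```

A pipeline step would promote the model only when the returned list is empty. Treating missing evidence as a failure, rather than as a pass, is the property that makes this a gate instead of a report.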

Moving from theory to practice requires deploying an architectural enforcement layer. Instead of relying on the model to police itself, security controls must be placed directly in the inference path. Threat intelligence must also be operationalized by requiring that all security assessments leverage MITRE ATLAS for adversary modeling and the OWASP Top 10 for LLM Applications for vulnerability scanning.

Supply chain transparency is equally critical. Enforcing an AI Bill of Materials (AI BOM) for every deployed model reduces exposure to poisoning and upstream compromise. This includes covering training data provenance, model architecture, and third-party dependencies.
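
The three elements named above can be captured in a small record with a completeness check. This is an ad hoc sketch for illustration only; the `AIBom` class and its field names are hypothetical, and a real program would more likely adopt an established bill-of-materials schema than define its own.

```python
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class AIBom:
    """Hypothetical minimal AI BOM covering the elements named in the text."""
    model_name: str
    model_architecture: str
    training_data_sources: List[str] = field(default_factory=list)
    third_party_dependencies: List[str] = field(default_factory=list)


def missing_fields(bom: AIBom) -> List[str]:
    """Return the names of fields that are empty and so need attention."""
    return [name for name, value in asdict(bom).items() if not value]
```

This sketch treats an empty dependency list as incomplete, on the assumption that a model with no declared dependencies is more often undocumented than dependency-free.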

Cross-Functional AI Governance Council

AI risk is inherently socio-technical and cannot be managed by security teams in isolation. Effective governance requires shared ownership across technical, legal, and ethical domains.

For unified accountability, establish a standing AI governance council comprising the CISO, chief data officer, legal counsel, and ethics or privacy leadership. This body owns the GOVERN function of the NIST AI RMF and serves as the authoritative decision-maker for AI risk acceptance.

Critically, the council must define explicit enterprise risk tolerance for probabilistic failure. For example, what is the acceptable error rate for a customer-facing chatbot versus an internal code-generator? Without this definition, engineering teams operate without guardrails.
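
Once the council has set tolerances, engineering teams can check evaluation results against them directly. The sketch below assumes a per-use-case table of maximum error rates; the numbers are placeholders chosen for illustration, not recommendations, and the names are hypothetical.

```python
# Placeholder values for illustration only; the council sets the real ones.
ERROR_RATE_TOLERANCE = {
    "customer_facing_chatbot": 0.01,
    "internal_code_generator": 0.05,
}


def within_tolerance(use_case: str, errors: int, trials: int) -> bool:
    """Compare an observed error rate against the council-defined tolerance."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if use_case not in ERROR_RATE_TOLERANCE:
        # No definition means no guardrail, so refuse to guess a default.
        raise KeyError(f"no risk tolerance defined for {use_case!r}")
    return errors / trials <= ERROR_RATE_TOLERANCE[use_case]
```

With these placeholder values, 3 errors in 100 trials would pass for the internal code generator but fail for the customer-facing chatbot. In practice a small evaluation sample gives a noisy estimate, so a team might also require a minimum number of trials before trusting the comparison.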

How Do Enterprises Address the Shadow AI Visibility Gap?

Enterprises address the shadow AI visibility gap by making unsanctioned AI usage observable rather than pushing it out of sight. Governance depends on visibility because you cannot govern what you cannot see. Ignoring unsanctioned AI usage, often referred to as the “ostrich effect,” is a primary vector for data exfiltration.

Rather than enforcing a block-by-default posture that drives usage underground, enterprises should adopt an enable-and-monitor strategy. Cloud access security brokers (CASBs) and secure web gateways (SWGs) capable of inspecting API traffic can surface shadow usage of generative AI tools without disrupting productivity.
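
The monitoring half of that strategy can be sketched as a simple classification over exported proxy or gateway logs. The example below does not use any CASB or SWG product API; it assumes log events have already been reduced to (user, destination host) pairs, and every host name shown is a made-up placeholder.

```python
from collections import Counter
from typing import Iterable, Tuple

# Placeholder host lists; a real deployment would source these from policy.
SANCTIONED_AI_HOSTS = {"llm.internal.example.com"}
KNOWN_PUBLIC_AI_HOSTS = {"chat.public-ai.example", "api.public-ai.example"}


def shadow_ai_usage(events: Iterable[Tuple[str, str]]) -> Counter:
    """Count requests per user to known AI hosts that are not sanctioned."""
    usage: Counter = Counter()
    for user, host in events:
        normalized = host.lower().rstrip(".")
        if normalized in KNOWN_PUBLIC_AI_HOSTS and normalized not in SANCTIONED_AI_HOSTS:
            usage[user] += 1
    return usage
```

The output is a picture of demand rather than a block list, which fits the enable-and-monitor posture: it shows which teams most need a sanctioned alternative.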

Visibility alone, however, is insufficient. The most effective countermeasure to shadow AI is the availability of secure, enterprise-sanctioned alternatives. Accelerating deployment of private, walled-garden LLM interfaces allows organizations to meet workforce demand while maintaining data sovereignty and auditability.

Continuous Adversarial Validation

Governance only holds if it can be enforced in practice. Given the opacity of deep learning models, standard penetration testing is insufficient. AI systems must be validated through adversarial testing that targets model behavior, not just infrastructure.

Enterprises should implement continuous AI red teaming programs that evaluate logic and alignment. This includes testing for prompt injection, jailbreaks, and hallucinations, failure modes that fall outside conventional security tooling.

Testing must also extend beyond syntactic exploits to include semantic attacks, like trying to trick the model into revealing PII. Without this logic-based testing, governance remains theoretical rather than enforceable.
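
A semantic probe of this kind can be automated with a small harness that sends adversarial prompts to the model and scans the responses for PII-like patterns. The sketch below is illustrative only: `model` stands for any callable that maps a prompt to a response, the two regular expressions are deliberately simple and will miss many real PII formats, and all names are hypothetical.

```python
import re
from typing import Callable, Dict, Iterable, List

# Deliberately simple patterns for illustration; real detectors are broader.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_pii(text: str) -> List[str]:
    """Return the labels of all PII patterns found in the text."""
    return [label for label, pattern in PII_PATTERNS.items() if pattern.search(text)]


def run_pii_probes(
    model: Callable[[str], str], probes: Iterable[str]
) -> Dict[str, List[str]]:
    """Send each probe to the model and record which ones leaked PII."""
    findings: Dict[str, List[str]] = {}
    for prompt in probes:
        leaked = find_pii(model(prompt))
        if leaked:
            findings[prompt] = leaked
    return findings
```

Run continuously against each model release, a harness like this turns "the model should not reveal PII" into a regression test whose failures can feed back into the governance process.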

Governance Defines the Rules, Architecture Enforces Them

Effective AI security is not achieved through isolated controls, but through governance that is structured, enforceable, and grounded in shared standards. Throughout this analysis, governance emerges as the mechanism that sets intent, while architecture determines whether that intent holds in practice. Frameworks such as the NIST AI Risk Management Framework, MITRE ATLAS, and the OWASP Top 10 for LLM Applications collectively replace uncertainty with clarity. Together, they define accountability, model real-world threats, and prioritize the weaknesses that matter most.

Governance, however, is only the blueprint. Policies alone cannot prevent misuse, data leakage, or adversarial manipulation at runtime. Closing the gap between intent and outcome requires security architectures capable of enforcing these rules at the speed AI systems operate. Translating governance into execution is the challenge that follows—and the focus of what comes next.