Companies aren’t struggling to imagine AI; they’re struggling to staff it. Job postings for AI engineers have exploded, salaries have soared, and hiring delays are crippling ambitious pilots.

So when MIT reporting suggests that roughly 95% of generative AI pilots aren’t producing measurable business impact, it’s worth asking why. Many AI pilots show great promise in a demo environment, but with inconsistent data and unclear ownership, they stall once they are integrated into real-world workflows. The result is a lot of demo value and no tangible results. Your biggest obstacle to AI success isn’t the tech; it’s fielding the right team.

For any business leader, the job is to reduce the time it takes to get useful results from AI, while keeping risk low and people on board. That calls for a specific type of team. Below is a field-tested way to think about how to build your AI team with the end goal in mind.

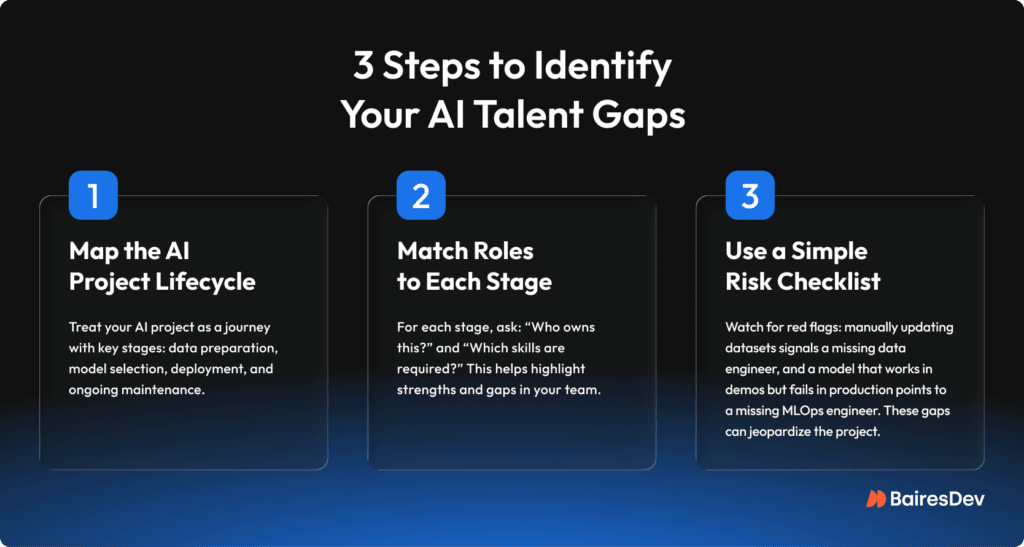

Diagnosing your AI needs

Before assembling an AI dream team, you need a clear diagnostic: an audit of what the project requires and an inventory of the skills you already have.

Start by defining the use case in plain language

Spell out the problem you want AI to solve, the business outcome that would signal success, and the cost of doing nothing for another quarter.

Microsoft frames this well in its business envisioning guide, which suggests asking questions like: What exactly are we solving? Why now? How will we measure progress? Who is accountable? That kind of structure helps ensure you’re not just chasing “AI ideas” but choosing use cases that can be prioritized and defended.

In supply chain, for instance, “reduce stockouts by 20% in the top 200 SKUs” is clearer than “use predictive analytics to reduce waste.” You can work backward from a specific target like that and reverse engineer who you would need on your team to get that AI up and running.
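A target like the stockout example above is only useful if everyone agrees on how it is computed. The following is a minimal sketch of one way to do that, assuming stockouts are counted per SKU per day; the names `StockoutSnapshot`, `relative_reduction`, and `target_met` are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass
class StockoutSnapshot:
    """Stockout counts for the tracked SKUs over one reporting period (assumed shape)."""
    sku_days_observed: int      # number of (SKU, day) pairs that were checked
    sku_days_out_of_stock: int  # how many of those pairs had zero stock

    @property
    def rate(self) -> float:
        if self.sku_days_observed == 0:
            raise ValueError("no observations in this period")
        return self.sku_days_out_of_stock / self.sku_days_observed


def relative_reduction(baseline: StockoutSnapshot, current: StockoutSnapshot) -> float:
    """Fractional drop in stockout rate versus the baseline (0.20 means 20% lower)."""
    if baseline.rate == 0:
        raise ValueError("baseline has no stockouts; reduction is undefined")
    return (baseline.rate - current.rate) / baseline.rate


def target_met(baseline: StockoutSnapshot, current: StockoutSnapshot,
               target: float = 0.20) -> bool:
    """True when the stockout rate fell by at least `target` relative to baseline."""
    return relative_reduction(baseline, current) >= target


# Illustrative numbers only: 200 SKUs tracked for 90 days in each period.
before = StockoutSnapshot(sku_days_observed=18_000, sku_days_out_of_stock=900)
after = StockoutSnapshot(sku_days_observed=18_000, sku_days_out_of_stock=702)
print(f"reduction: {relative_reduction(before, after):.1%}, met: {target_met(before, after)}")
```

Writing the metric down this way also surfaces who has to supply the inputs, which feeds directly into the staffing question.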

Map the team you already have

Many organizations discover more capability than expected once they look closely. Backend engineers with strong programming skills often transition into building data or inference services. Analysts with a solid foundation in SQL and statistics can co-own early experiments and evaluation. In our own teams, developers with diverse backgrounds have successfully upskilled into AI roles. With focused pairing and a short skills sprint, hidden capacity tends to surface.

Identify the gaps that raise risk

Reliable data pipelines don’t build themselves; for that, you need data engineers. Production models need proper deployment, monitoring, and rollback, so you’ll need an MLOps engineer. If you lack those competencies, every gap left unfilled is a risk to the project. Close those gaps before you try to launch.

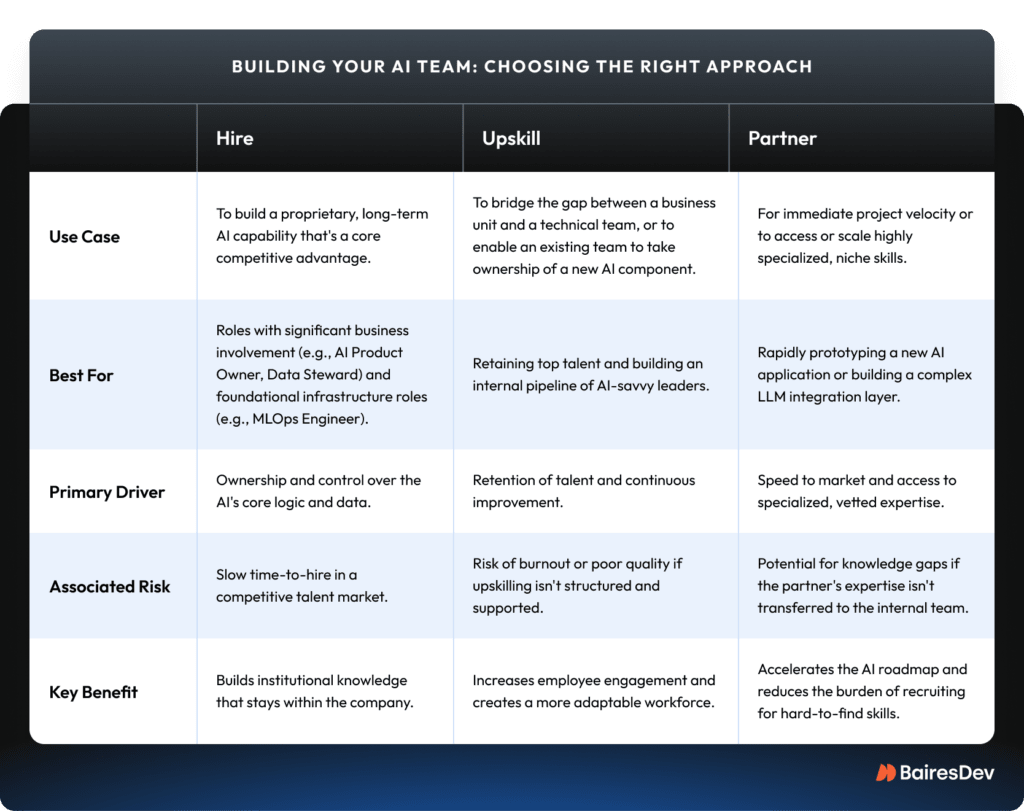

Decide whether to hire, upskill, or partner

There are three main options to fill your team’s gaps. The right choice depends on the specific role, the necessary expertise, and the project timeline.

When to Hire

Decide which roles are so central to your company’s success that they must be owned in-house. These are the people who own the business processes and the problem you are solving, ensuring your AI initiatives are always aligned with your company’s goals. This is where you hire for core competencies and internal accountability.

Think about roles like an AI Product Owner, who acts like the voice of the business, defines the problem, and prioritizes features. Or, think about a Compliance and/or Governance Lead. This role oversees compliance regulations and specific industry standards, and you don’t want to give away control of your regulatory defense, particularly when you’re handling customer data in regulated industries.

You also need to hire directly for competencies that are crucial for your long-term technical infrastructure. For example, you’ll need an MLOps engineer not just for one project, but for every AI initiative going forward. Once you move beyond pilots, you’ll be continuously deploying, monitoring, and retraining models in production. That’s a core software infrastructure need, not a one-off task. Without someone owning it, you’ll end up with a fragile system that can’t scale.

When to Upskill

Upskill when adjacent skills are close enough to bridge with structured coaching and hands-on projects. Implementing an intentional upskilling strategy helps you cash in on the talent you already trust. What doesn’t work is leaving developers to scrape together AI knowledge in isolation. This is a fast path to burnout and half-learned practices.

The reality is that many developers are already taking this into their own hands. Our Dev Barometer Q3-2025 revealed that 65% of them worry about falling behind on AI skills, and developers now spend an average of 4 hours per week on upskilling. The same survey revealed that only a minority of companies have structured upskilling programs in place. To harness developers’ individual efforts, a good practice is to provide a structured, company-supported environment that encourages peer-to-peer learning, like our Circles program.

This approach makes upskilling more adaptable, sustainable, and highly engaging for everyone. It shows that by investing in a peer-led community, companies can support developers who are already eager to learn, helping them turn their independent upskilling efforts into a structured, company-wide strength.

When to Partner

Bring in a partner when speed or niche depth matters more than owning every skill on day one. The goal isn’t a bigger headcount just for the sake of it; it’s about strategically scaling your team to gain momentum with AI guardrails. For instance, many enterprises accelerate timelines by partnering with specialized providers like BairesDev for LLM developers or data engineering experts.

BairesDev AI Case Study

One client developing humanoid robots needed to extend their platform with advanced AI capabilities. They required:

- ROS2 simulations fine-tuned for robotic arm automation.

- CAN bus integration to connect with specialized aircraft-towing hardware.

- Advanced object segmentation for pose detection, tool tracking, and learning from human demonstrations.

These are deep, niche skills. Upskilling existing engineers wasn’t realistic. ROS2 and CAN bus expertise takes years of hands-on work, not weeks of training. Hiring directly would have meant a slow and uncertain search in a very thin talent market. Meanwhile, the stakes were high: in robotics, flawed simulations or segmentation risk equipment damage and safety failures.

The client avoided those bottlenecks by partnering with us. Our engineers built the ROS2 simulations, wired the CAN bus integration, and delivered segmentation pipelines that worked with their hardware-agnostic platform. Their internal team stayed focused on core product development, while we accelerated the AI layer that allowed their robots to execute tasks safely and learn from demonstrations.

This case shows how easy it is to stall without the right expertise in place. A diagnostic forces the tradeoffs into the open. Instead of scrambling to “find AI talent,” you’ll know which roles matter, when to add them, and whether to build or borrow the experts you need.

The talent behind AI: who you need, why, and when

When hiring is guided by Team Topologies principles, roles are defined around outcomes rather than static job titles. This lets leaders place skills exactly where they create the most value, shorten time-to-hire, and avoid the waste of mismatched talent.

Here are the roles you’ll need for your AI project, tied to their deliverables and the tangible risks of not having them.

- Data engineers build and maintain the pipelines that move data from source systems into a usable format. Without them, your AI model is flying blind with bad data. In retail, this could mean overstocking products that don’t sell or running out of bestsellers.

- Data scientists validate and benchmark models to ensure they perform accurately and reliably. Without their checks, an LLM could confidently give wrong answers. For example, it could give customers inaccurate financial or medical details because no one built in bias or factuality tests.

- AI and ML engineers take a trained or base model and customize it according to business needs. They deploy it into a working application, connect it to the right data flows, and integrate it into company workflows. Without their work, your model is a research experiment, not a business solution.

- AI integration engineers keep complex AI systems safe and reliable in production. They add guardrails to block bad outputs, build retrieval pipelines (as in RAG systems) so models use up-to-date data, and orchestrate multi-step tasks. Without this, an oil and gas operator might get drilling recommendations based on outdated or missing sensor data, leading to the wrong maintenance schedule, misjudged pressure, or even a blowout.

- Prompt engineers design and refine the instructions that shape how LLMs respond. Their work improves accuracy, efficiency, and alignment with business logic and data context. Without them, systems can produce confident but wrong answers. In a traffic system, a poorly designed prompt could make the AI misread congestion and worsen traffic jams instead of easing flow.

- MLOps engineers keep the AI workflow stable once models go live. They manage versioning, CI/CD, monitoring, and rollback so updates do not break production. Without them, new releases can stall or ship bugs that quietly skew results. For example, a pricing model might drift and start setting prices too low, gradually eroding profit margins.

- Product owners and UX specialists ensure AI projects serve real user needs. They connect technical output to daily workflows so adoption actually happens. Without them, a good model can become a bad product. For example, imagine a school deploying an AI tutor that gives technically correct answers but in a confusing interface. Teachers abandon it because it slows class time instead of helping.

- Data stewards safeguard governance and compliance. They track lineage, enforce policies, and make outputs explainable and defensible to auditors. A bank deploying an AI credit scoring model needs to prove to regulators why a loan was denied. Without stewardship, those decisions become a black box and can’t be defended.
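To make the pricing-model example from the MLOps item above more concrete, here is a minimal sketch of a drift check. It assumes drift is approximated by a shift in the average predicted price between a baseline window and a recent window; production monitoring usually relies on richer statistics. The function names and the 5% threshold are hypothetical.

```python
from statistics import mean
from typing import Sequence


def relative_mean_shift(baseline: Sequence[float], recent: Sequence[float]) -> float:
    """Signed change in the average value, as a fraction of the baseline average."""
    base = mean(baseline)
    if base == 0:
        raise ValueError("baseline mean is zero; relative shift is undefined")
    return (mean(recent) - base) / abs(base)


def drift_alert(baseline: Sequence[float], recent: Sequence[float],
                max_shift: float = 0.05) -> bool:
    """Flag the model for review when the average moved by more than `max_shift`."""
    return abs(relative_mean_shift(baseline, recent)) > max_shift


# Illustrative prices only: the recent window is roughly 10% lower than the baseline.
baseline_prices = [100.0, 102.0, 98.0, 100.0]
recent_prices = [90.0, 92.0, 88.0, 90.0]
if drift_alert(baseline_prices, recent_prices):
    print("Average predicted price shifted beyond the threshold; review before margins erode.")
```

A check this simple will not catch every failure, but it illustrates the kind of automated signal that someone on the team has to own.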

In short, a clear diagnostic helps you research and brainstorm exactly what you need rather than handing generic job descriptions to recruiters.

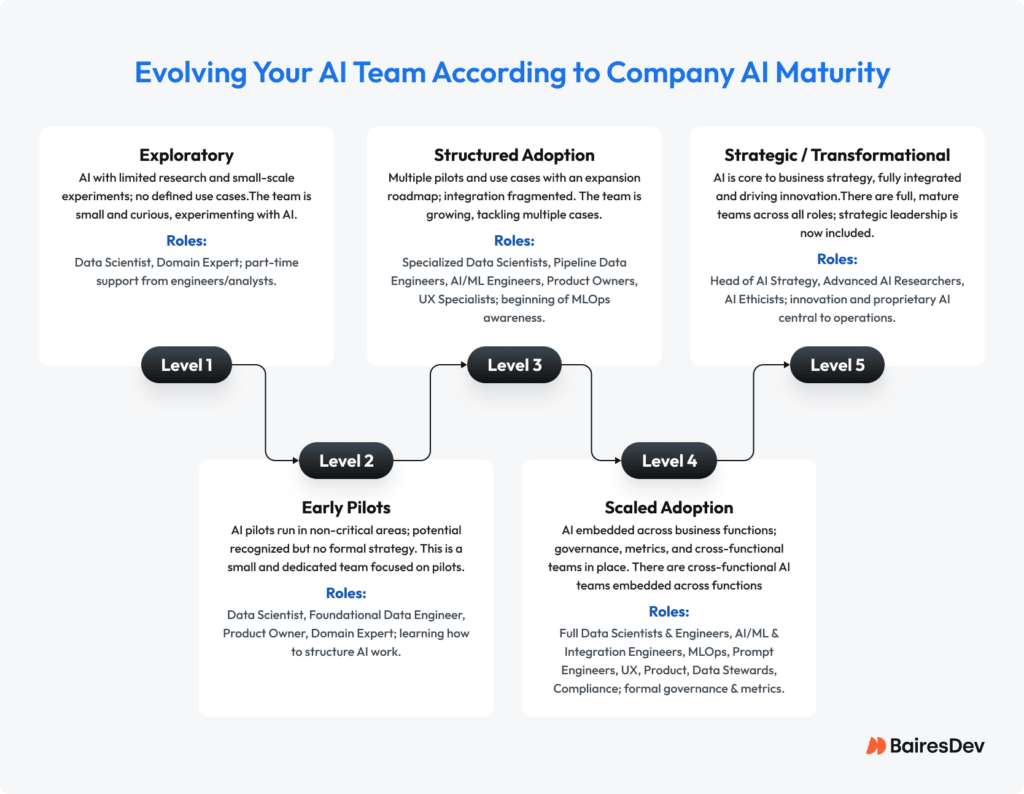

How the value of roles changes with AI maturity

As AI programs mature, the mix of roles shifts. Early pilots start lean, with a small group focused on proving an idea has legs. This team might include a data engineer, a data scientist, a product owner, and a domain expert—someone who knows a workflow inside and out and can spot practical gaps the data won’t show.

Scaling is different. Once a pilot shows promise, you need deeper data engineering and stronger ML capacity to handle more volume and more variability. Domain experts from each department need to be embedded so the system reflects reality rather than assumption. A product owner can help bridge the domain experts’ expertise with the AI product. Without that alignment, seemingly strong pilots often collapse under the weight of messy data.

Over the long term, the center of gravity shifts toward operations, monitoring, compliance, and governance. You’re no longer proving an idea; you’re managing a production AI environment. Retraining pipelines, rollback procedures, audit trails, and policy alignment matter as much as model accuracy. This is where roles like MLOps engineers and data stewards move from “nice to have” to critical infrastructure.

To keep this process flexible as you grow, consider organizing teams around outcomes rather than static job titles. For example, one team might focus on a single high-value use case, while another group builds shared data pipelines or model services that support multiple products across the organization.

What makes an effective AI team today

Next, we need to get our AI team to deliver what 95% of generative AI pilots are missing: measurable, long-term impact. An effective AI team is a cross-functional group that blends strategic vision, technical expertise, and business alignment. Getting them on the same page demands the following conditions:

- Effective teams are clear about their strategic purpose. They know exactly where their work fits in the big picture. Are they building a core capability that will change company policy? A specific product feature with a clear release schedule? Or are they creating an internal tool to improve a back-office process? Each of these projects requires a different approach to risk, resource allocation, and timelines. The team must know which job they’re doing so they can align their efforts with the company’s priorities.

- They also respect specialization. There isn’t a single “AI professional” who does it all. Data engineering, evaluation, safety, and deployment are separate skills; you can cross-train, but skipping one usually shows up later as rework or stalled releases. That’s not just theory: Fastly’s 2025 developer survey found that almost 30% of senior developers edit AI-generated code so heavily that it cancels out most of the time they would have saved. In other words, even experienced engineers can’t simply “do it all” with AI tools. The value comes from having the right roles in place to evaluate, refine, and deploy properly.

- And they integrate with the business. Effective AI teams work closely with domain experts to ensure models reflect company rules, constraints, and context. Without that alignment, systems create shadow processes and extra manual reviews. Imagine if Bordeaux vineyards wanted to embrace AI. In Pomerol, irrigation is only legal under exceptional drought, must be pre-approved two days in advance, and stepping outside those rules can even cost a chateau its appellation rights. A well-integrated team would ensure an AI crop monitor understands these nuances. Otherwise, it might optimize for yield and ignore the very factors that produce premium vintages.

When these three conditions are in place, companies can build AI that adapts to their needs, scales with their growth, and produces results they can trust.

How businesses can set up AI teams for success long term

Treat the pilot as a learning exercise, but design for scale. While a successful pilot is a great start, a sustainable AI program requires a strategic mindset. You can plan for long-term success by focusing on the following pillars.

Scalability and MLOps

Pilots are usually bespoke. Scaling requires repeatable pipelines for testing, monitoring, retraining, and rollback, so errors don’t multiply with growth. Like any scientific experiment, every variable and step should be documented precisely enough that the process can be replicated across new teams, products, or regions. Too often, early AI wins collapse because the team wasn’t built for scale or able to adapt as roles and needs evolve.
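As a rough illustration of what "repeatable" can mean for releases, the sketch below gates promotion on an evaluation score and keeps enough history to roll back. It is a toy in-memory model under stated assumptions, not a real registry: `ModelRegistry`, `release`, and the score values are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ModelRegistry:
    """Tracks which model version is live and which versions came before it."""
    live_version: str
    history: List[str] = field(default_factory=list)

    def promote(self, version: str) -> None:
        # Remember the outgoing version so it can be restored later.
        self.history.append(self.live_version)
        self.live_version = version

    def rollback(self) -> str:
        if not self.history:
            raise RuntimeError("no earlier version to roll back to")
        self.live_version = self.history.pop()
        return self.live_version


def release(registry: ModelRegistry, candidate: str,
            candidate_score: float, live_score: float,
            min_gain: float = 0.0) -> bool:
    """Promote the candidate only if its evaluation score does not regress."""
    if candidate_score >= live_score + min_gain:
        registry.promote(candidate)
        return True
    return False


registry = ModelRegistry(live_version="v1")
release(registry, "v2", candidate_score=0.91, live_score=0.90)  # passes the gate
release(registry, "v3", candidate_score=0.85, live_score=0.91)  # rejected
print(registry.live_version)
```

The point of the sketch is the shape of the process: every release passes the same gate, and undoing one is a single, well-defined step instead of a manual scramble.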

In the Kavango Zambezi conservation area, Nature Tech Collective piloted a digital twin using satellites, ground sensors, acoustics, and AI to track threats like poaching and habitat loss. The pilot worked in one zone, with modular components built to adapt elsewhere. But scaling required more than code: stronger data pipelines for larger inputs, MLOps to manage deployment and monitoring, and domain expertise to tune models for new environments. The takeaway is that a lean pilot can prove value, but scaling demands planning for data, operations, and context from the start.

When a logistics client’s AI workloads began to outgrow their infrastructure, our MLOps engineers rearchitected the system. They replaced manual, one-off scripts with automated pipelines for deployment, retraining, and monitoring. The result: 80% lower costs, 95% accuracy, and performance gains that proved disciplined engineering is what makes AI sustainable.

Data readiness and domain expertise

Models are only as good as their inputs. Data engineers and subject-matter experts must ensure data is clean, representative, and reliable.

Take a health agency that wants an AI system to predict outbreaks using hospital admissions, climate records, and mobility data. Early trials could fail because rural clinics lack digital reporting, creating gaps that bias results toward cities. The model might grow overconfident in urban predictions while missing risks in underserved areas. Data engineers could fix pipelines to normalize inputs, while domain experts flag blind spots. The lesson: without solid data and contextual knowledge, forecasts collapse under real-world complexity.

One example from our own work proves the point. When a startup client led by ex-Google engineers saw its models plateau because of inconsistent training data, our data engineers stepped in to rebuild trust in the data. They automated quality checks, standardized ingestion, and implemented scalable data pipelines to ensure every model trained on reliable, verifiable inputs. That level of technical discipline and data craftsmanship is what keeps AI outcomes credible over time.
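Automated quality checks of the kind described above can start very small. The sketch below is an illustrative assumption, not the pipeline from that engagement: it validates a batch of records for missing required fields and duplicate identifiers before training. The function name `check_records`, the `id` field, and the 1% threshold are hypothetical.

```python
from typing import Any, Dict, List, Sequence


def check_records(records: Sequence[Dict[str, Any]],
                  required_fields: Sequence[str],
                  id_field: str = "id",
                  max_missing_rate: float = 0.01) -> List[str]:
    """Return human-readable problems; an empty list means the batch passes."""
    if not records:
        return ["batch is empty"]

    problems: List[str] = []

    # Completeness: each required field may be missing in only a small share of rows.
    for name in required_fields:
        missing = sum(1 for row in records if row.get(name) is None)
        rate = missing / len(records)
        if rate > max_missing_rate:
            problems.append(f"{name}: {rate:.0%} of rows missing a value")

    # Uniqueness: repeated identifiers usually indicate double ingestion.
    seen = set()
    duplicates = 0
    for row in records:
        key = row.get(id_field)
        if key in seen:
            duplicates += 1
        seen.add(key)
    if duplicates:
        problems.append(f"{duplicates} duplicate {id_field} value(s)")

    return problems


batch = [
    {"id": 1, "label": "a"},
    {"id": 2, "label": None},
    {"id": 2, "label": "b"},
]
for problem in check_records(batch, required_fields=["label"]):
    print(problem)
```

Running a gate like this on every ingestion, and refusing to train when the list is non-empty, is one simple way to keep training data verifiable.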

Evolving skills, soft skills, and keeping knowledge from walking out the door

AI teams aren’t static. As pilots scale, new technical roles appear, but collaboration, adaptability, and critical thinking matter just as much. At Web Summit Rio 2024, our CEO Nacho De Marco said: “AI is helping dramatically with coding and technology, so what really matters now is how you solve problems. Critical thinking — breaking complex challenges into smaller, solvable parts — is the skill that makes the difference.”

Knowledge hoarded in silos stalls progress. The teams that win document clearly, share openly, and train new members fast. Einstein wasn’t the only physicist working on relativity. Henri Poincaré also explored concepts foundational to relativity, but Einstein clearly formulated and communicated the theory that became the basis for modern applications such as GPS. The same is true in engineering teams: raw brilliance matters less if the knowledge never leaves a person’s head. Your engineers need to be collaborators.

The real differentiator in AI is the team

AI success is less about models alone and more about talent and teams. Pilots fail when companies chase talent reactively, skip core skills assessments, or forget that governance and business fit matter as much as technical wins. The organizations that get it right diagnose their needs first, build or borrow the right capabilities, and evolve the mix as pilots become production systems.

At BairesDev, we’ve seen this play out across industries, from robotics to healthcare to finance. With over 200 AI specialists on staff, we help clients plug urgent gaps, stand up complete AI squads, or support internal teams with the niche skills that take years to build in-house. The result is momentum without sacrificing long-term ownership.

If the goal is AI that delivers measurable results at scale, the right team is where you start.