According to SQ Magazine, 72% of global workloads are cloud-hosted, up from 66% the previous year. 94% of enterprises now use some form of cloud service. McKinsey (2025) estimates that cloud adoption could unlock $3 trillion in EBITDA value globally by 2030.

Remember when the cloud first took off? It wasn’t long before we realized that such widespread cloud adoption also meant a big hit to many companies’ carbon footprints. With the cloud came massive data centers that needed constant cooling, forcing enterprises to get creative with energy solutions.

Then, out of nowhere, containers and Kubernetes transformed how businesses run their operations. But that shift wasn’t easy—Kubernetes brought serious challenges to developers, admins, and operations teams because it’s not a simple tool to master.

When generative AI entered the scene, new concerns emerged. Beyond the usual challenges, another big problem started to impact adoption: the skyrocketing demand for GPUs.

The Strategic Role of Cloud Computing in AI Adoption

When it comes to AI workloads—especially machine learning and generative models—companies need fast, scalable computing. Cloud platforms now make it easy to tap into powerful hardware like NVIDIA GPUs, without the upfront cost of building on-premise GPU clusters.

For teams leading AI efforts, speed is everything. Getting models up and running quickly and being able to iterate fast can make or break your edge. That’s where cloud platforms come in. They offer flexible GPU cloud solutions that balance performance with budget, making them a smart choice for enterprise AI.

Why GPUs Matter for AI

Most people associate GPUs with gaming and professional graphics. However, due to their raw compute power and parallelized architecture, GPUs can do so much more.

Traditionally, computing was handled by the Central Processing Unit (CPU) of a desktop or server. For most applications, that works just fine. But with the rise of cloud, AI, and Machine Learning services, the demand placed on CPUs quickly outpaced the ability of traditional hardware to create isolated environments for AI workloads.

Because of that, developers had to figure out a way to offload some processes. This is especially so with AI, which requires massive amounts of power for model training and inference.

AI’s Compute Bottleneck

Generative AI relies on complex machine learning algorithms to locate structures and regularities within massive datasets. As it discovers those data regularities, it acquires skills. Big data also works with large collections of data (which requires considerable computing power), but without the added stress of learning.

The learning portion of AI places extraordinary demands on hardware, not only because it has to read through a lot of data but also because the neural networks on which it depends must be trained.

Moore’s Law observes that the number of transistors on a chip doubles roughly every two years, and it has long served as a rough yardstick for how quickly available computing power grows.

However, because of cloud and AI technologies, resource usage is doubling at a rate 7 times faster than previously tracked.
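To make the gap concrete, here is a minimal sketch of the arithmetic. It assumes that "7 times faster" means the doubling period is one-seventh as long, which is one possible reading of the claim rather than something the figures above confirm. The function name and constants are illustrative only.

```python
def growth_factor(elapsed_months: float, doubling_period_months: float) -> float:
    """Return how many times a quantity multiplies over elapsed_months,
    given that it doubles once every doubling_period_months."""
    if doubling_period_months <= 0:
        raise ValueError("doubling period must be positive")
    return 2 ** (elapsed_months / doubling_period_months)


# Two-year doubling cadence used as the baseline.
BASELINE_DOUBLING_MONTHS = 24.0
# Assumption: "7 times faster" is read as a doubling period one-seventh as long.
FASTER_DOUBLING_MONTHS = BASELINE_DOUBLING_MONTHS / 7

if __name__ == "__main__":
    for label, period in [
        ("baseline", BASELINE_DOUBLING_MONTHS),
        ("7x faster", FASTER_DOUBLING_MONTHS),
    ]:
        factor = growth_factor(24, period)
        print(f"{label}: doubles every {period:.1f} months, "
              f"grows {factor:.0f}x over two years")
```

Under this reading, the baseline doubles once over two years, while the faster curve doubles seven times, a roughly 128-fold increase in the same period.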

The only way to handle such resource demand is to offload more and more computations to the cloud.

The good news is that GPU prices have dropped since their pandemic peaks. Even so, demand and prices remain elevated. When you take into consideration the number of GPUs that are often required to handle such big computing loads, the cost can get prohibitive.

Training is only part of the picture. Inference, meaning running the trained model to answer requests, also places sustained demand on hardware, because it continues for as long as the model is in use.

Cloud Takes the Spotlight

Instead of using in-house data centers (or simply a cluster of off-the-rack computers), many businesses are turning to GPU clouds to handle AI computing.

Cloud services can make thousands of GPUs available to a single project, which speaks to both their availability and their scalability.

First off, renting cloud GPUs is often much cheaper than purchasing numerous (costly) GPUs that are powerful enough to handle the requirements of neural networks. And because most cloud services offer “pay as you go” models, you can keep costs down with the ebb and flow of demand.
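A rough way to test the rent-versus-buy comparison is a break-even calculation. The sketch below is illustrative only: the function names are hypothetical, and the prices in the usage example are placeholder figures, not quotes from any provider.

```python
def cloud_cost(gpu_hours: float, hourly_rate: float) -> float:
    """Total pay-as-you-go cost for a given number of GPU-hours."""
    return gpu_hours * hourly_rate


def break_even_hours(purchase_price: float,
                     hourly_rate: float,
                     owned_hourly_overhead: float = 0.0) -> float:
    """GPU-hours of use at which buying a GPU costs the same as renting one.

    owned_hourly_overhead is an assumed per-hour running cost of owned
    hardware (power, cooling, maintenance).
    """
    saving_per_hour = hourly_rate - owned_hourly_overhead
    if saving_per_hour <= 0:
        raise ValueError("renting never costs more than owning at these rates")
    return purchase_price / saving_per_hour


if __name__ == "__main__":
    # Placeholder figures chosen only to make the arithmetic easy to follow.
    price, rate, overhead = 30_000.0, 3.0, 0.5
    hours = break_even_hours(price, rate, overhead)
    print(f"Break-even after {hours:,.0f} GPU-hours "
          f"({hours / 24:,.0f} days of continuous use)")
```

If expected usage falls well short of the break-even point, pay-as-you-go is the cheaper option under these assumptions. Sustained, near-continuous use shifts the balance toward owned hardware.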

Another reason why offloading AI compute to the cloud is beneficial is that it will save your company on energy costs. The computational demand AI places on hardware requires considerable power which can cause a company’s electric bills to skyrocket.

More than anything, it’s important to understand that cloud providers offer a scale that is hard to match in-house. Whereas many companies can afford to maintain on-premise servers for traditional services, in the realm of LLMs, this is impractical for most companies (save for industry leaders and highly specialized AI development companies).

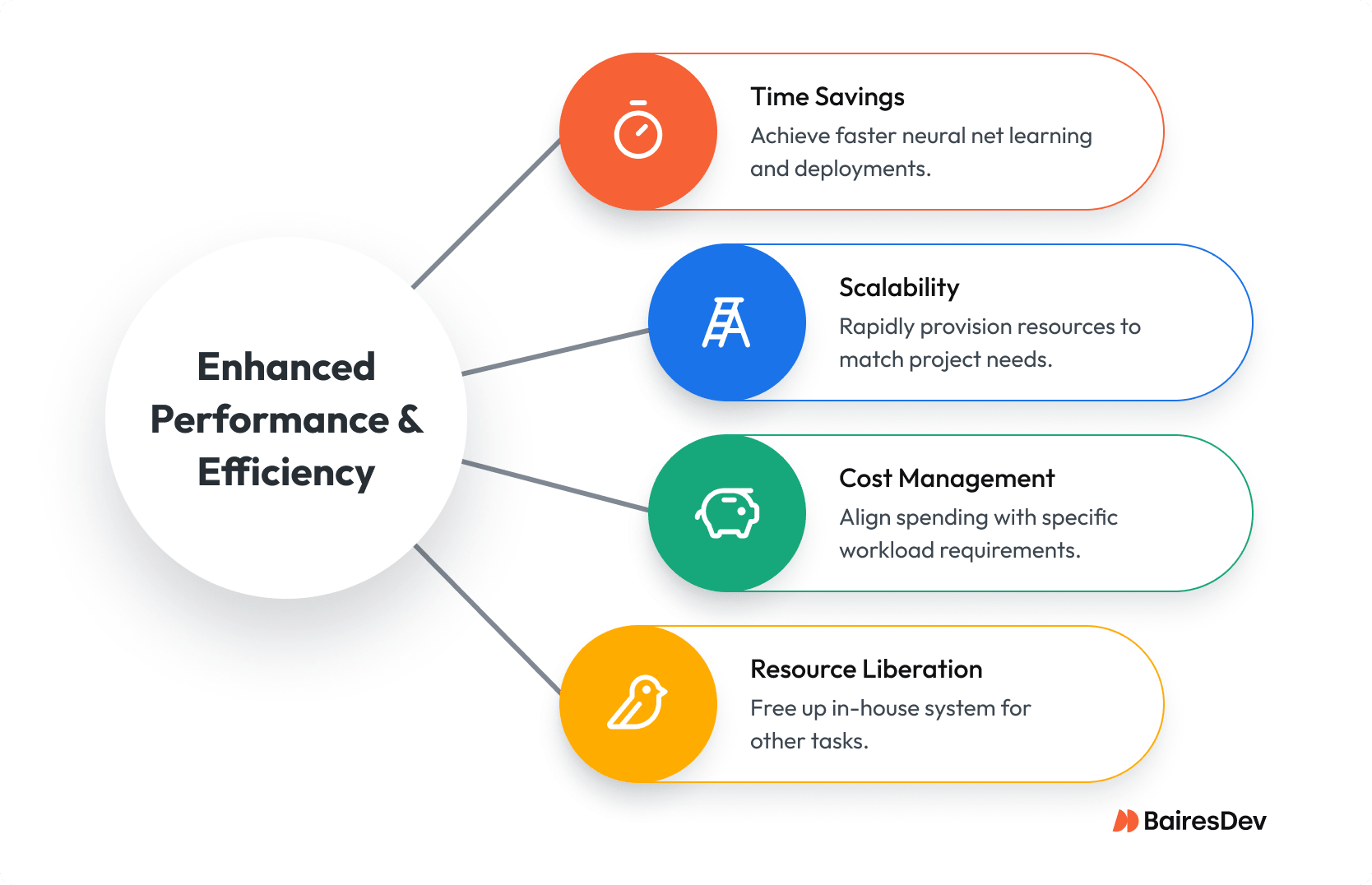

Benefits of a Cloud GPU

Scalability

Cloud GPUs can be provisioned in clusters within minutes, avoiding the long lead times associated with purchasing and installing hardware. Teams can spin resources up or down from a web dashboard, aligning capacity directly with project timelines. This immediacy reduces friction for research and deployment, while global data center networks ensure workloads can be run close to end-users.

Cost Management

Instead of overprovisioning in-house servers that may sit idle, enterprises can rent GPUs on an hourly or per-project basis. The variety of GPU tiers available means companies can align spending with specific workload requirements — from exploratory model testing to full production training. This flexibility allows organizations to control expenses while still accessing cutting-edge performance.
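The cost of idle capacity can be expressed as an effective price per hour of useful work. The following is a minimal sketch with a hypothetical function name and placeholder figures. It ignores power, staffing, and resale value, so treat the result as a lower bound on the real cost of owned hardware.

```python
def owned_cost_per_useful_hour(purchase_price: float,
                               lifetime_hours: float,
                               utilization: float) -> float:
    """Purchase price spread over the hours the GPU is actually busy.

    utilization is the fraction of its lifetime (0 < utilization <= 1)
    during which the GPU is doing useful work.
    """
    if lifetime_hours <= 0:
        raise ValueError("lifetime_hours must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    return purchase_price / (lifetime_hours * utilization)


if __name__ == "__main__":
    # Placeholder figures: a 30,000 purchase used over three years.
    three_years = 3 * 365 * 24
    for u in (1.0, 0.5, 0.25):
        cost = owned_cost_per_useful_hour(30_000.0, three_years, u)
        print(f"utilization {u:.0%}: {cost:.2f} per useful GPU-hour")
```

Halving utilization doubles the effective hourly cost of an owned GPU, which is why hourly rental can look attractive for bursty or exploratory workloads.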

Time Savings

Cloud providers allow companies to build and deploy faster, freeing up scarce human resources. Your senior engineers won’t have to monitor hardware used for AI training, or worry about bottlenecks. Some providers advertise GPU server launch times as short as 30 seconds, which shows how quickly these resources can be deployed.

Sure, there will be other concerns, but when using cloud computing, those systems can be easily monitored and automated. Pulling off such a feat on-premise can not only take considerable time and money but will also keep your staff so busy they might not have the availability to take on tasks like securing your network, developing new applications, iterating old applications, or patching vulnerabilities.

All of this is possible when your business opts to offload the considerable demands AI places on systems. If that sounds like something your business desperately needs, it’s time you turn to cloud GPUs to take on the heavy lifting of generative AI.

Security and Compliance

As companies use AI to handle more sensitive data, trust in cloud providers has become critical. Today’s GPU cloud platforms come with strong security features—like encryption, access controls, and compliance with standards such as HIPAA and GDPR.

Compliance isn’t just a checkbox. With AI expanding into regulated industries like healthcare, finance, and government, oversight is tightening. That’s why many enterprises choose cloud providers—not just for speed and performance, but because they know their data can scale safely and stay within legal boundaries.

Future Trends

AI workloads are set to keep growing quickly, and a few clear trends are shaping where things go next. Demand for high-performance computing — especially GPU-based cloud infrastructure — is rising as more organizations run AI and machine learning at scale. At the same time, security and compliance are becoming non-negotiable, with enterprises looking for platforms that can guarantee both performance and trust.

Frequently Asked Questions

How do cloud GPUs differ from running GPUs in-house?

Cloud GPUs let you access enterprise-grade hardware on demand without the capital expense or long procurement cycles of building your own GPU cluster. In-house setups require significant upfront investment in servers, facilities, and cooling, plus ongoing maintenance. Cloud services scale up or down instantly, which makes them far better suited for variable AI workloads where demand is hard to predict.

What are the real cost advantages of using cloud GPUs?

The primary savings come from avoiding idle infrastructure. Buying GPUs for peak demand means they often sit underutilized. With cloud GPUs, you pay only for what you use, whether that’s short-term experimentation or multi-month model training. You also avoid paying directly for energy and cooling, which can be substantial with high-performance hardware.

Are cloud GPUs secure enough for sensitive enterprise data?

Yes—leading GPU cloud providers build in multiple layers of security, including encryption, identity management, and compliance with strict regulatory standards like HIPAA, SOC 2, and GDPR. The key is selecting a provider that demonstrates a clear track record in meeting compliance requirements for industries with high data sensitivity, such as healthcare and finance.